Genome

Visualization

See also Bioconductor > BiocViews > Visualization. Search 'genom' as the keyword.

IGV

- UserGuide

- Inspect alignments with IGV in Anders2013

- For paired data we will see two reads (one is -> and the other is <- direction) from the same fragment.

nano ~/binary/IGV_2.3.52/igv.sh # Change -Xmx2000m to -Xmx4000m in order to increase the memory to 4GB ~/binary/IGV_2.3.52/igv.sh

Simulated DNA-Seq

The following shows 3 simulated DNA-Seq data; the top has 8 insertions (purple '|') per read, the middle has 8 deletions (black '-') per read and the bottom has 8 snps per read.

Whole genome

Whole exome

- (Left) GSE48215, UCSC hg19. It is seen there is a good coverage on all exons.

- (Right) 1 of 3 whole exome data from SRP066363, UCSC hg19.

RNA-Seq

- (Left) Anders2013, Drosophila_melanogaster/Ensembl/BDGP5. It is seen there are no coverages on some exons.

- (Right) GSE46876, UCSC/hg19.

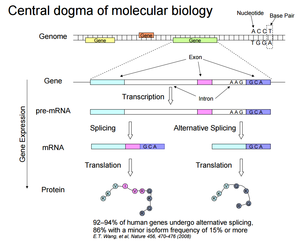

Tell DNA or RNA

- DNA: no matter it is whole genome or whole exome, the coverage is more even. For whole exome, there is no splicing.

- RNA: focusing on expression so the coverage changes a lot. The base name still A,C,G,T (not A,C,G,U).

Gviz

GIVE: Genomic Interactive Visualization Engine

Build your own genome browser

ChromHeatMap

Heat map plotting by genome coordinate.

ggbio

NOISeq package

Exploratory analysis (Sequencing depth, GC content bias, RNA composition) and differential expression for RNA-seq data.

rtracklayer

R interface to genome browsers and their annotation tracks

- Retrieve annotation from GTF file and parse the file to a GRanges instance. See the 'Counting reads with summarizeOverlaps' vignette from GenomicAlignments package.

ssviz

A small RNA-seq visualizer and analysis toolkit. It includes a function to draw bar plot of counts per million in tag length with two datasets (control and treatment).

Sushi

See fig on p22 of Sushi vignette where genes with different strands are shown with different directions when plotGenes() was used. plotGenes() can be used to plot gene structures that are stored in bed format.

cBioPortal

The cBioPortal for Cancer Genomics provides visualization, analysis and download of large-scale cancer genomics data sets.

https://github.com/cBioPortal/cbioportal

Qualimap

Qualimap 2 is a platform-independent application written in Java and R that provides both a Graphical User Inteface (GUI) and a command-line interface to facilitate the quality control of alignment sequencing data and its derivatives like feature counts.

SeqMonk

SeqMonk is a program to enable the visualisation and analysis of mapped sequence data.

Copy Number

Copy number work flow using Bioconductor

Detect copy number variation (CNV) from the whole exome sequencing

- http://www.ncbi.nlm.nih.gov/pubmed/24172663

- http://www.biomedcentral.com/1471-2164/15/661

- http://www.blog.biodiscovery.com/2014/06/16/comparison-of-cnv-detection-from-whole-exome-sequencing-wes-as-compared-with-snp-microarray/

- Bioconductor - CopyNumberVariation

- exomeCopy package

- exomeCNV package and more info including slides by Fah Sathirapongsasuti.

- ximmer and source

Whole exome sequencing != whole genome sequencing

Consensus CDS/CCDS

- UCSC

- http://genome.ucsc.edu/cgi-bin/hgTrackUi?hgsid=573539327_9Ga3TjKR7LNFYJ2JIMZKDNZfl9eF&c=chr1&g=ccdsGene

- Schema/Example

- The GenomicFeatures package can download the CCDS regions.

- The CCDS regions are convenient to use as genomic ranges for CNV detection in exome sequencing data, as they are often in the center of the targeted region in exome enrichment protocols and tend to have less variable coverage then the flanking regions -- exomeCopy package vignette.

NGS

Introduction to Sequence Data Analysis

- Review of RNA-Seq Data Analysis Tools

- Modeling and analysis of RNA-seq data: a review from a statistical perspective Li et al 2018

- Introduction to RNA Sequencing Malachi Griffith from the Genome Institute at Washington University

- RNA-Seq workshop from NYU

- Modern RNA-seq differential expression analyses: transcript-level or gene-level

- http://www.pasteur.fr/~tekaia/BCGA2014/TALKS/Pabinger_Tools4VariantAnalysisOfNGS_EMBO2014.pdf (QC, duplicates, SAM, BAM, picard, variant calling, somatic variant, structural variants, CNV, variant filter, variant annotation, tools for vcf, visualization, pipeline). The source code is available on github.

- https://en.wiki2.org/wiki/List_of_RNA-Seq_bioinformatics_tools

- http://www.rnaseq.wiki/ or https://github.com/griffithlab/rnaseq_tutorial/wiki from The Griffith Lab. It has a paper too at PLOS.

- http://bioinformatics.ca/workshops/2015/high-throughput-biology-sequence-networks-2015#material

- http://www.cbcb.umd.edu/~hcorrada/CMSC858B/

- http://watson.nci.nih.gov/~sdavis/tutorials/biowulf-2011/

- http://cbsu.tc.cornell.edu/ngw2010/Day3_lecture1.pdf

- http://static.msi.umn.edu/tutorial/lifescience/RNA-Seq-Module-1.pdf

- http://www.rna-seqblog.com/introduction-to-rna-seq-analysis/ (focus on statistical part)

- BroadE: GATK/Mapping and processing RNAseq from youtube.

- Low-count transcripts in RNA-Seq analysis pipeline

- RNA-seq analysis of human breast cancer data from QIAGEN. Biomedical Genomics Workbench 3.0.1 is used.

- RNA-seq Chipster tutorials

- Discovering the Genome

NIH only

- Some training material from NIH:

- Biowulf seminar by Steven Fellini & Susan Chacko.

- Bash Scripting and Linux commands

RNA-seq: Basics, Applications and Protocol

https://www.technologynetworks.com/genomics/articles/rna-seq-basics-applications-and-protocol-299461

Why Do You Need To Use Cdna For Rna-Seq?

https://www.biostars.org/p/54969/

RNA-Seq vs DNA-Seq

- With DNA, you'd be randomly sequencing the entire genome

- DNA-Seq cannot be used for measuring gene expression.

Total RNA-Seq

- Total RNA-Seq vs. mRNA sequencing from gatc-biotech.com

- A comprehensive picture of the transcriptome from illumina.com

- https://en.wikipedia.org/wiki/RNA-Seq RNA-Seq is used to analyze the continuously changing cellular transcriptome.

How to know that your RNA-seq is stranded or not?

https://www.biostars.org/p/98756/

Workshops

- UC Davis Bioinformatics Core.

- CSAMA 2017: Statistical Data Analysis for Genome-Scale Biology

Blogs

Automation

- Implementation of an Open Source Software solution for Laboratory Information Management and automated RNA-seq data analysis

- TRAPLINE – a standardized and automated pipeline for RNA sequencing data analysis, evaluation and annotation

Quality control

For base quality score, the quality value, Q(sanger) = - 10log10(prob) where prob = probability that the call at base b is correct. Sanger (Phred quality scores) is between 0 and 93. In practice the maximum quality score is ~40. Quality values below 20 are typically considered low.

Phred quality score and scales

- http://blog.nextgenetics.net/?e=33

- https://en.wikipedia.org/wiki/FASTQ_format#Encoding

- http://wiki.bits.vib.be/index.php/Identify_the_Phred_scale_of_quality_scores_used_in_fastQ contains a link to a python script to guess the encoding format

The original Phred scaling of 33-126 (representing scores 0-93) is also called the Sanger scale. There are also 2 other scales used by Illumina that shifts the range up:

- Illumina 1.0 format uses ASCII 59 - 126 representing scores -5 - 62.

- Illumina 1.3+ format uses ASCII 64 - 126 representing scores 0 - 62.

- Illumina 1.8, the quality scores have basically returned to the use of the Sanger format (Phred+33).

FastQC (java based)

One problem is the reads in trimmed fastq files from one end may not appear in other end for paired data. So consider Trimmomatic.

Qualimap (java based)

Qualimap examines sequencing alignment data in SAM/BAM files according to the features of the mapped reads and provides an overall view of the data that helps to the detect biases in the sequencing and/or mapping of the data and eases decision-making for further analysis.

The first time when we launch Qualimap we may get a message: The following R packages are missing: optparse, NOISeq, Repitools. Features dependent on these packages are disabled. See user manual for details. OK.

Trimmomatic

https://hpc.nih.gov/apps/trimmomatic.html

Trim Galore!

https://hpc.nih.gov/apps/trimgalore.html

QoRTs

A comprehensive toolset for quality control and data processing of RNA-Seq experiments.

SeqKit

A cross-platform and ultrafast toolkit for FASTA/Q file manipulation

FastqCleaner

An interactive Bioconductor application for quality-control, filtering and trimming of FASTQ files

Alignment and Indexing

- https://en.wikipedia.org/wiki/Sequence_alignment

- https://en.wikibooks.org/wiki/Next_Generation_Sequencing_(NGS)/Alignment

- Simulation-based comprehensive benchmarking of RNA-seq aligners

- Systematic evaluation of spliced alignment programs for RNA-seq data

- (Video) http://bioinformatics.ca//files/public/RNA-seq_2014_Montreal_Module2.mp4, http://www.rna-seqblog.com/rna-seq-alignment-and-visualization/

- (Video) https://youtu.be/n77EAk8C1es RNA-SEQ: Mapping to a Reference Genome

- RNA-Seq alignment methods emphasize gene count while DNA-Seq alignment methods (no junctions) emphasize variant detection. The read length in RNA-Seq alignment does not have be long but it has to be long in DNA-Seq since DNA-Seq cares about a good coverage of the whole exome/genome.

SAM file format

Here are some important fields

- Col 1: Read name

- Col 2: FLAG [0,2^16-1]

- Col 3: RNAME/Chrom

- Col 4: Pos [0,2^31-1]

- Col 5: MAPQ [0,2^8-1]

- Col 6: CIGAR

- Col 7: RNEXT (se as "=" if RNEXT is equal to RNAME)

- Col 8: PNEXT - Mate position [0,2^31-1]

- Col 9: Insert size [-2^31+1, 2^31-1]

- Col 10: SEQ

- Col 11: QUAL

- Col 14 (not fixed): AS

Note pair-end data,

1. Fastq files A_1.fastq A_2.fastq read1 read1 read2 read2 ... ... 2. SAM files (sorted by read name) read1 read1 read2 read2 ...

For example, consider paired-end reads SRR925751.192 that was extracted from so called inproper paired reads in samtools. If we extract the paired reads by grep -w SRR925751.192 bt20/bwa.sam we will get

SRR925751.192 97 chr1 13205 60 101M = 13429 325 ..... SRR925751.192 145 chr1 13429 60 101M = 13205 -325 .....

By entering the flag values 97 & 145 into the SAM flag entry on http://broadinstitute.github.io/picard/explain-flags.html, we see

- FLAG value 97 means read paired, mate reverse strand, first in pair.

- FLAG value 145 means read paired, read reverse strand, second in pair.

The two reads have positions. As we can see the insert size (it's the name shown on samtools help page and IGV program) is defined by start positions instead of end positions)

13205 13305 13429 13529

|-------------> <----------|

Consider a properly paired reads SRR925751.1. If we extract the paired reads, we will get

SRR925751.1 99 chr1 10010 0 90M11S = 10016 95 ..... SRR925751.1 147 chr1 10016 0 12S89M = 10010 -95 .....

Again by entering the flag values 99 and 147 into the Decoding SAM flags website, we see

- FLAG value 99 means read paired, read mapped in proper pair, mate reverse strand, first in pair.

- FLAG value 147 means read paired, read mapped in proper pair, read reverse strand, second in pair.

10010 10099

|------------>

10016 10104

<-------------|

The insert size here is 10104-10010+1=95. Running the following command,

cat foo.bam | awk '{ if ($9 >0) {S+=$9; T+=1}} END {print "Mean: "S/T}'

I get 133.242 for the average insert size from properly paired reads on my data (GSE48215subset, read length=101).

If I run the same command on in-properly paired reads, I got 1.9e07 for the average insert size.

Bowtie2 (RNA/DNA)

Extremely fast, general purpose short read aligner.

Create index files

bowtie needs to have an index of the genome in order to perform its alignment functionality. For example, to build a bowtie index against UCSC hg19 (See Getting started with Bowtie 2 -> Indexing a reference genome)

bowtie2-build /data/ngs/public/sequences/hg19/genome.fa hg19

Even the index file can be directly downloaded without going through Bowtie program, Bowtie program is still needed by Tophat program where Tophat's job is to align the RNA-seq data to reference genome.

Mapping

To run alignment,

bowtie2 -p 4 -x /data/ngs/public/sequences/hg19 XXX.fastq -S XXX.bt2.sam

At the end of alignment, it will show how many (and percent) of reads are aligned 0 times, exactly 1 time, and >1 times.

We can transform them into a bam format ('-@' means threads and '-T' means the reference file, it is optional)

samtools view -@ 16 -T XXX.fa XXX.bt2.sam > XXX.bt2.bam

and view the bam file

samtools view XXX.bt2.bam

Note that it is faster to use pipe to directly output the file in a bam format (reducing files I/O) if the aligner does not provide an option to output a binary bam format.

BWA (DNA)

- BWA MEM Algorithm https://www.biostars.org/p/140502/

- https://www.broadinstitute.org/files/shared/mpg/nextgen2010/nextgen_li.pdf By Heng Li

- http://schatzlab.cshl.edu/teaching/2010/Lecture%202%20-%20Sequence%20Alignment.pdf

- https://bioinformatics.cancer.gov/sites/default/files/course_material/Data%20Analysis%20for%20Exome%20Sequencing%20Data_0318.ppt

Used by whole-exome sequencing. For example, http://bib.oxfordjournals.org/content/15/2/256.full and http://www.ncbi.nlm.nih.gov/pmc/articles/PMC3865592/. Whole Exome Analysis.

Creating index file

Without creating index files, we will get an error [E::bwa_idx_load_from_disk] fail to locate the index files when we run the 'bwa mem' command (though the command only requires fa file in its arguments).

Prepare a reference for use with BWA and GATK

bwa index genome.fa # generate 4 new files amb, ann, pac and bwt (not enough for running 'bwa mem') bwa index -a bwtsw genome.fa # generate 5 new files amb, ann, pac, bwt and sa (right thing to do)

where -a bwtsw specifies that we want to use the indexing algorithm that is capable of handling the whole human genome.

It takes 3 hours to create the index files on the combined human+mouse genomes. Though there is no multhreads optionin bwa index, we can use the -b option to increase the block size in order to speed up the time. See https://github.com/lh3/bwa/issues/104. The default value is 10000000 (1.0e8). See the following output from bwa index:

[bwa_index] Pack FASTA... 46.43 sec [bwa_index] Construct BWT for the packed sequence... [BWTIncCreate] textLength=11650920420, availableWord=831801828 [BWTIncConstructFromPacked] 10 iterations done. 99999988 characters processed. [BWTIncConstructFromPacked] 20 iterations done. 199999988 characters processed. ... [BWTIncConstructFromPacked] 1230 iterations done. 11647338276 characters processed. [bwt_gen] Finished constructing BWT in 1232 iterations. [bwa_index] 4769.18 seconds elapse. [bwa_index] Update BWT... 45.78 sec [bwa_index] Pack forward-only FASTA... 32.66 sec [bwa_index] Construct SA from BWT and Occ... 2054.29 sec [main] Version: 0.7.15-r1140 [main] CMD: bwa index -a bwtsw genomecb.fa [main] Real time: 7027.396 sec; CPU: 6948.342 sec

Note that another index file fai is created by samtools

samtools faidx genome.fa

For example, the BWAIndex folder contains the following files

$ ls -l ~/igenomes/Homo_sapiens/UCSC/hg19/Sequence/BWAIndex/ total 12 lrwxrwxrwx 1 brb brb 22 Mar 10 03:40 genome.fa -> version0.6.0/genome.fa lrwxrwxrwx 1 brb brb 26 Mar 10 03:37 genome.fa.amb -> version0.6.0/genome.fa.amb lrwxrwxrwx 1 brb brb 26 Mar 10 03:40 genome.fa.ann -> version0.6.0/genome.fa.ann lrwxrwxrwx 1 brb brb 26 Mar 10 03:40 genome.fa.bwt -> version0.6.0/genome.fa.bwt -rw-r--r-- 1 brb brb 783 Apr 12 14:46 genome.fa.fai lrwxrwxrwx 1 brb brb 26 Mar 10 03:40 genome.fa.pac -> version0.6.0/genome.fa.pac lrwxrwxrwx 1 brb brb 25 Mar 10 03:37 genome.fa.sa -> version0.6.0/genome.fa.sa drwxrwxr-x 2 brb brb 4096 Mar 15 2012 version0.5.x drwxrwxr-x 2 brb brb 4096 Mar 15 2012 version0.6.0

Mapping

bwa mem # display the help bwa mem -t 4 XXX.fa XXX.fastq > XXX.sam more XXX.sam samtools view -dT XXX.fa XXX.sam > XXX.bam # transform to bam format samtools view XXX.bam | more

and output directly to a binary format

bwa mem -t 4 XXX.fa XXX.fastq | samtools view -bS - > XXX.bam # transform to bam format directly

where '-S' means Ignoring for compatibility with previous samtools versions. Previously this option was required if input was in SAM format, but now the correct format is automatically detected by examining the first few characters of input. See http://www.htslib.org/doc/samtools.html.

And this is a tutorial to use bwa and freebayes to do variant calling by Freebayes's author.

This tutorial is using a whole genome data SRR030257 from SRP001369

Best pipeline for human whole exome sequencing

Summary report of a BAM file: samtools flagstat

Unlike Tophat and STAR, BWA mem does not provide a summary report of percentage of mapped reads. To get the percentage of mapped reads, use samtoolss flagstat command

$ export PATH=$PATH:/opt/SeqTools/bin/samtools-1.3 # fastq files from ExomeLungCancer/test.SRR2923335_*.fastq # 'bwa mem' and 'samtools fixmate' have been run to generate <accepted_hits.bam> $ wc -l ../test.SRR2923335_1.fastq # 5000 reads in each of PAIRED end fastq files 20000 ../test.SRR2923335_1.fastq $ samtools flagstat accepted_hits.bam 10006 + 0 in total (QC-passed reads + QC-failed reads) 6 + 0 secondary 0 + 0 supplementary 0 + 0 duplicates 9971 + 0 mapped (99.65% : N/A) 10000 + 0 paired in sequencing 5000 + 0 read1 5000 + 0 read2 8502 + 0 properly paired (85.02% : N/A) 9934 + 0 with itself and mate mapped 31 + 0 singletons (0.31% : N/A) 1302 + 0 with mate mapped to a different chr 1231 + 0 with mate mapped to a different chr (mapQ>=5)

See also https://wikis.utexas.edu/display/CoreNGSTools/Alignment#Alignment-Exercise#4:BWA-MEM-HumanmRNA-seq for alignment (including BWA mem and samtools flagstat).

- Properly mapped (inward oriented & same chromosome & good insert size): samtools view -f 3 -F 2 foo.bam. But the result is not the same as flagstat??

- Itself and mate mapped: samtools view -f 1 -F 12 foo.bam. But the result is not the same as flagstat??

- Singleton: samtools view -F 4 -f 8 foo.bam. The flags indicate that the current read is mapped but its mate isn't. On GSE48215subset data, the return number of reads does not match with flagstat result but samtools view -f 4 -F 8 foo.bam (current read is unmapped and mate is mapped) gives the same result as the flagstat command.

Stringent criterion

- https://sourceforge.net/p/bio-bwa/mailman/message/31968535/

- http://seqanswers.com/forums/showthread.php?p=186581

Applied to RNA-Seq data?

https://www.biostars.org/p/130451/. BWA isn't going to handle spliced alignment terribly well, so it's not normally recommended for RNAseq datasets.

Minimap2

- BWA replacement. Fast and almost identical mapping

- https://academic.oup.com/bioinformatics/advance-article-abstract/doi/10.1093/bioinformatics/bty191/4994778

- https://arxiv.org/abs/1708.01492

- Latex source

Tophat (RNA)

Aligns RNA-Seq reads to the genome using Bowtie/Discovers splice sites. It does so by splitting longer reads into small sections and aligning those to the genome. It then looks for potential splice sites between pairs of sections to construct a final alignment.

Linux part.

$ type -a tophat # Find out which command the shell executes: tophat is /home/mli/binary/tophat $ ls -l ~/binary

Quick test of Tophat program

$ wget http://tophat.cbcb.umd.edu/downloads/test_data.tar.gz $ tar xzvf test_data.tar.gz $ cd ~/tophat_test_data/test_data $ PATH=$PATH:/home/mli/bowtie-0.12.8 $ export PATH $ ls reads_1.fq test_ref.1.ebwt test_ref.3.bt2 test_ref.rev.1.bt2 test_ref.rev.2.ebwt reads_2.fq test_ref.2.bt2 test_ref.4.bt2 test_ref.rev.1.ebwt test_ref.1.bt2 test_ref.2.ebwt test_ref.fa test_ref.rev.2.bt2 $ tophat -r 20 test_ref reads_1.fq reads_2.fq $ # This will generate a new folder <tophat_out> $ ls tophat_out accepted_hits.bam deletions.bed insertions.bed junctions.bed logs prep_reads.info unmapped.bam

TopHat accepts FASTQ and FASTA files of sequencing reads as input. Alignments are reported in BAM files. BAM is the compressed, binary version of SAM43, a flexible and general purpose read alignment format. SAM and BAM files are produced by most next-generation sequence alignment tools as output, and many downstream analysis tools accept SAM and BAM as input. There are also numerous utilities for viewing and manipulating SAM and BAM files. Perhaps most popular among these are the SAM tools (http://samtools.sourceforge.net/) and the Picard tools (http://picard.sourceforge.net/).

Note that if the data is DNA-Seq, we can merely use Bowtie2 or BWA tools since we don't have to worry about splicing.

An example of using Tophat2 (paired end in this case, 5 threads) is

tophat2 --no-coverage-search -p 5 \

-o "Sample1" \

-G ~/iGenomes/Homo_sapiens/UCSC/hg19/Annotation/Genes/genes.gtf \

--transcriptome-index=transcriptome_data/known \

~/iGenomes/Homo_sapiens/UCSC/hg19/Sequence/Bowtie2Index/genome \

myfastq_R1.fastq.gz myfastq_R2.fastq.gz

To find out the alignment rate for ALL bam files (eg we have ctrl1, ctrl2, test1, test2 directories and each of them have align_summary.txt file),

grep "overall read mapping rate" */align_summary.txt

Novel transcripts

How does TopHat find junctions?

https://sites.google.com/a/brown.edu/bioinformatics-in-biomed/cufflinks-and-tophat

Can Tophat Be Used For Mapping Dna-Seq

https://www.biostars.org/p/63878/

- In contrast to DNA-sequence alignment, RNA-seq mapping algorithms have two additional challenges. First, because genes in eukaryotic genomes contain introns, and because reads sequenced from mature mRNA transcripts do not include these introns, any RNA-seq alignment program must be able to handle gapped (or spliced) alignment with very large gaps.

- TopHat is designed to map reads to a reference allowing splicing. In your case, the reads are not spliced because are genomic, so don't waste your time and resources and use Bowtie/BWA directly.

Hisat (RNA/DNA)

- https://bioinformatics.ca/workshops/2017/informatics-rna-seq-analysis-2017

- Hisat on Biowulf

- The comparison between HISAT2 and Tophat2

STAR (Spliced Transcripts Alignment to a Reference, RNA)

Optimizing RNA-Seq Mapping with STAR by Alexander Dobin and Thomas R. Gingeras

Its manual is on github. The 2015 paper includes scripts to run STAR.

Note that the readme file says HARDWARE/SOFTWARE REQUIREMENTS:

- x86-64 compatible processors

- 64 bit Linux or Mac OS X

- 30GB of RAM for human genome. In fact, it requires 34GB (tested on UCSC/hg38). See the following jobhist output from running indexing on Biowulf.

Submission Command : sbatch --cpus-per-task=2 --mem=64g --time=4:00:00 createStarIndex Jobid Partition State Nodes CPUs Walltime Runtime MemReq MemUsed Nodelist 44714895 norm COMPLETED 1 2 04:00:00 02:54:21 64.0GB/node 33.6GB cn3144

See the blog on gettinggeneticsdone.com for a comparison of speed and memory requirement.

In short, the notable increase in speed comes at the price of a larger memory requirement.

To build STAR on Ubuntu (14.04)

wget https://github.com/alexdobin/STAR/archive/STAR_2.4.2a.tar.gz sudo tar -xzf STAR_2.4.2a.tar.gz -C /opt/RNA-Seq/bin cd /opt/RNA-Seq/bin/STAR-STAR_2.4.2a cd source sudo make STAR

Create index folder

STAR --runMode genomeGenerate --runThreadN 11 \

--genomeDir STARindex \

--genomeFastaFiles genome.fa \

--sjdbGTFfile genes.gtf \

--sjdbOverhang 100

where 100 = read length (one side) -1 = 101 - 100.

STARindex folder

brb@T3600 ~/SRP050992 $ ls ~/SRP012607/STARindex/ chrLength.txt chrStart.txt geneInfo.tab SA sjdbList.fromGTF.out.tab chrNameLength.txt exonGeTrInfo.tab Genome SAindex sjdbList.out.tab chrName.txt exonInfo.tab genomeParameters.txt sjdbInfo.txt transcriptInfo.tab brb@T3600 ~/SRP050992 $ cat ~/SRP012607/STARindex/genomeParameters.txt ### STAR --runMode genomeGenerate --runThreadN 11 --genomeDir STARindex --genomeFastaFiles /home/brb/igenomes/Homo_sapiens/UCSC/hg38/Sequence/WholeGenomeFasta/genome.fa --sjdbGTFfile /home/brb/igenomes/Homo_sapiens/UCSC/hg38/Annotation/Genes/genes.gtf --sjdbOverhang 100 versionGenome 20201 genomeFastaFiles /home/brb/igenomes/Homo_sapiens/UCSC/hg38/Sequence/WholeGenomeFasta/genome.fa genomeSAindexNbases 14 genomeChrBinNbits 18 genomeSAsparseD 1 sjdbOverhang 100 sjdbFileChrStartEnd - sjdbGTFfile /home/brb/igenomes/Homo_sapiens/UCSC/hg38/Annotation/Genes/genes.gtf sjdbGTFchrPrefix - sjdbGTFfeatureExon exon sjdbGTFtagExonParentTranscript transcript_id sjdbGTFtagExonParentGene gene_id sjdbInsertSave Basic

Splice junction

- Manual. Search 'sjdbOverhang' (length of the donor/acceptor sequence on each side of the junctions). The --sjdbOverhang is used only at the genome generation step, and tells STAR how many bases to concatenate from donor and acceptor sides of the junctions. If you have 100b reads, the ideal value of --sjdbOverhang is 99, which allows the 100b read to map 99b on one side, 1b on the other side. One can think of --sjdbOverhang as the maximum possible overhang for your reads. See A post.

- For the <SJ.out.tab> format see section 4.4 of STAR manual. The first few lines of the file looks like (It is strange that the values in column 7, 8 are so large)

$ head SJ.out.tab

chrM 711 764 1 1 1 2 1 44

chrM 717 1968 1 1 1 1 0 43

chrM 759 1189 0 0 1 11 3 30

chrM 795 830 2 2 1 335 344 30

chrM 822 874 1 1 1 1 1 17

chrM 831 917 2 2 1 733 81 38

chrM 831 3135 2 2 1 1 0 30

chrM 846 1126 0 0 1 4 0 50

chrM 876 922 1 1 1 26 9 31

chrM 962 1052 2 2 1 3 0 30

^ ^ ^ ^ ^ ^ ^ ^ ^

| | | | | | | | |

chrom start end strand intron annotated | # of |

0=undef motif # of multi mapped|

1=+ uniquely mapped max spliced alignment overhang

2=- reads

- Tophat. Search overhang, donor/acceptor keywords. Check out <junctions.bed>. Tophat suggest users use this second option (--coverage-search) for short reads (< 45bp) and with a small number of reads (<= 10 million).

- HiSAT comes with a python script that can extract splice sites from a GTF file. See this post.

$ cd /tmp $ /opt/SeqTools/bin/hisat2-2.0.4/hisat2_extract_splice_sites.py \ ~/igenomes/Homo_sapiens/UCSC/hg19/Annotation/Genes/genes.gtf > splicesites.txt $ head splicesites.txt chr1 12226 12612 + chr1 12720 13220 + chr1 14828 14969 - chr1 15037 15795 - chr1 15946 16606 - chr1 16764 16857 - chr1 17054 17232 - chr1 17367 17605 - chr1 17741 17914 - chr1 18060 18267 -

Screen output

Oct 07 19:25:19 ..... started STAR run Oct 07 19:25:19 ... starting to generate Genome files Oct 07 19:27:08 ... starting to sort Suffix Array. This may take a long time... Oct 07 19:27:38 ... sorting Suffix Array chunks and saving them to disk... Oct 07 20:07:07 ... loading chunks from disk, packing SA... Oct 07 20:22:52 ... finished generating suffix array Oct 07 20:22:52 ... generating Suffix Array index Oct 07 20:26:32 ... completed Suffix Array index Oct 07 20:26:32 ..... processing annotations GTF Oct 07 20:26:38 ..... inserting junctions into the genome indices Oct 07 20:30:15 ... writing Genome to disk ... Oct 07 20:31:00 ... writing Suffix Array to disk ... Oct 07 20:37:24 ... writing SAindex to disk Oct 07 20:37:39 ..... finished successfully

Log.final.out

An example of the Log.final.out file

$ cat Log.final.out

Started job on | Jul 21 13:12:11

Started mapping on | Jul 21 13:35:06

Finished on | Jul 21 13:57:42

Mapping speed, Million of reads per hour | 248.01

Number of input reads | 93418927

Average input read length | 202

UNIQUE READS:

Uniquely mapped reads number | 61537064

Uniquely mapped reads % | 65.87%

Average mapped length | 192.62

Number of splices: Total | 15658546

Number of splices: Annotated (sjdb) | 15590438

Number of splices: GT/AG | 15400163

Number of splices: GC/AG | 178602

Number of splices: AT/AC | 14658

Number of splices: Non-canonical | 65123

Mismatch rate per base, % | 0.86%

Deletion rate per base | 0.02%

Deletion average length | 1.61

Insertion rate per base | 0.03%

Insertion average length | 1.40

MULTI-MAPPING READS:

Number of reads mapped to multiple loci | 4962849

% of reads mapped to multiple loci | 5.31%

Number of reads mapped to too many loci | 62555

% of reads mapped to too many loci | 0.07%

UNMAPPED READS:

% of reads unmapped: too many mismatches | 0.00%

% of reads unmapped: too short | 28.70%

% of reads unmapped: other | 0.05%

CHIMERIC READS:

Number of chimeric reads | 0

% of chimeric reads | 0.00%

Note that 65.87 (uniquely mapped) + 5.31 (mapped to multiple loci) + 0.07 (mapped too many loci) + 28.70 (unmapped too short) + 0.05 (unmapped other) = 100

By default, STAR only outputs reads that map to <=10 loci, others are considered "mapped to too many loci". You can increase this threshold by increasing --outFilterMultimapNmax. See here.

Subread (RNA/DNA)

- http://bioinf.wehi.edu.au/subread-package/SubreadUsersGuide.pdf

- HISAT vs STAR vs TopHat2 vs Olego vs SubJunc by Mark Ziemann

- Get a 'Segmentation fault' error on GSE46876 (SRR902884.fastq, single-end) rna-seq data

In practice,

- For DNA-Seq data, the subread aligner is used.

- For RNA-Seq data,

- the subread aligner is used when selecting gene counting analysis only.

- the subjunc aligner is used when variant calling analysis is selected.

RNASequel

RNASequel: accurate and repeat tolerant realignment of RNA-seq reads

snap

Scalable Nucleotide Alignment Program -- a fast and accurate read aligner for high-throughput sequencing data

snap is the best choice so far. star/hisat2 are as fast but not as good for genomic reads. accurate mappers need to do alignment anyway

Keep in mind SNAP's index is 10-15x bigger than input. 4Gbp->50GB index. SNAP loads index into memory. HISAT2 index is similar size as input

Lessons

- Tophat and STAR needs index files. So if we want to run the alignment using multiple nodes, we want to first run the alignment on a single sample first. Then we can run alignment on others samples using multiple nodes.

- The index files of Tophat only depends on the reference genome. However the index files of STAR depends on both the reference genome and the read length of the data.

Alignment Algorithms

- What is the difference between local and global sequence alignments?

- Sequence alignment

- CMSC 858s: Computational Genomics

- Substitution matrix

Alignment free

SAMtools

SAMtools bundle include samtools, bcftools, bgzip, tabix, wgsim and htslib. samtools and bcftools are based on htslib.

- https://en.wikipedia.org/wiki/SAMtools

- http://www.htslib.org/doc/samtools.html

- http://www.htslib.org/doc/samtools-1.2.html (it is strange bam2fq appears in v1.2 but not in v1.3 documentation)

- SAMtools: Primer / Tutorial by Ethan Cerami. It covers installing samtools, bcftools, generating simulated reads, align reads, convert sam to bam, sorting & indexing, identifying genomic variants, understand vcf format, visualize reads on a command line.

- http://davetang.org/wiki/tiki-index.php?page=SAMTools contains many recipes like counting number of mapped reads

$ /opt/SeqTools/bin/samtools-1.3/samtools

Program: samtools (Tools for alignments in the SAM format)

Version: 1.3 (using htslib 1.3)

Usage: samtools <command> [options]

Commands:

-- Indexing

dict create a sequence dictionary file

faidx index/extract FASTA

index index alignment

-- Editing

calmd recalculate MD/NM tags and '=' bases

fixmate fix mate information

reheader replace BAM header

rmdup remove PCR duplicates

targetcut cut fosmid regions (for fosmid pool only)

addreplacerg adds or replaces RG tags

-- File operations

collate shuffle and group alignments by name

cat concatenate BAMs

merge merge sorted alignments

mpileup multi-way pileup

sort sort alignment file

split splits a file by read group

quickcheck quickly check if SAM/BAM/CRAM file appears intact

fastq converts a BAM to a FASTQ

fasta converts a BAM to a FASTA

-- Statistics

bedcov read depth per BED region

depth compute the depth

flagstat simple stats

idxstats BAM index stats

phase phase heterozygotes

stats generate stats (former bamcheck)

-- Viewing

flags explain BAM flags

tview text alignment viewer

view SAM<->BAM<->CRAM conversion

depad convert padded BAM to unpadded BAM

To compile the new version (v1.x) of samtools,

git clone https://github.com/samtools/samtools.git git clone https://github.com/samtools/htslib.git cd samtools make

Multi-thread option

Only view and sort have multi-thread (-@) option.

samtools sort

A lot of temporary files will be created.

For example, if my output file is called <bwa_homo2_sort.bam>, there are 80 <bwa_homo2_sort.bam.tmp.XXXX.bam> files (each is about 210 MB) generated.

samtools sort -@ $SLURM_CPUS_PER_TASK -O bam -n bwa_homo2.sam -o bwa_homo2_sort.bam

Decoding SAM flags

http://broadinstitute.github.io/picard/explain-flags.html

samtools flagstat: Know how many alignment a bam file contains

brb@brb-T3500:~/GSE11209$ /opt/RNA-Seq/bin/samtools-0.1.19/samtools flagstat dT_bio_s.bam 1393561 + 0 in total (QC-passed reads + QC-failed reads) 0 + 0 duplicates 1393561 + 0 mapped (100.00%:-nan%) 0 + 0 paired in sequencing 0 + 0 read1 0 + 0 read2 0 + 0 properly paired (-nan%:-nan%) 0 + 0 with itself and mate mapped 0 + 0 singletons (-nan%:-nan%) 0 + 0 with mate mapped to a different chr 0 + 0 with mate mapped to a different chr (mapQ>=5)

We can see how many reads have multiple alignments by comparing the number of reads and the number of alignments output from samtools flagstat.

brb@brb-T3500:~/GSE11209$ wc -l SRR002062.fastq 13778616 SRR002062.fastq

Note: there is no multithread option in samtools flagstat.

samtools flagstat source code

samtools view

See also Anders2013-sam/bam and http://genome.sph.umich.edu/wiki/SAM

Note that we can use both -f and -F flags together.

$ /opt/SeqTools/bin/samtools-1.3/samtools view -h

Usage: samtools view [options] <in.bam>|<in.sam>|<in.cram> [region ...]

Options:

-b output BAM

-C output CRAM (requires -T)

-1 use fast BAM compression (implies -b)

-u uncompressed BAM output (implies -b)

-h include header in SAM output

-H print SAM header only (no alignments)

-c print only the count of matching records

-o FILE output file name [stdout]

-U FILE output reads not selected by filters to FILE [null]

-t FILE FILE listing reference names and lengths (see long help) [null]

-L FILE only include reads overlapping this BED FILE [null]

-r STR only include reads in read group STR [null]

-R FILE only include reads with read group listed in FILE [null]

-q INT only include reads with mapping quality >= INT [0]

-l STR only include reads in library STR [null]

-m INT only include reads with number of CIGAR operations consuming

query sequence >= INT [0]

-f INT only include reads with all bits set in INT set in FLAG [0]

-F INT only include reads with none of the bits set in INT set in FLAG [0]

-x STR read tag to strip (repeatable) [null]

-B collapse the backward CIGAR operation

-s FLOAT integer part sets seed of random number generator [0];

rest sets fraction of templates to subsample [no subsampling]

-@, --threads INT

number of BAM/CRAM compression threads [0]

-? print long help, including note about region specification

-S ignored (input format is auto-detected)

--input-fmt-option OPT[=VAL]

Specify a single input file format option in the form

of OPTION or OPTION=VALUE

-O, --output-fmt FORMAT[,OPT[=VAL]]...

Specify output format (SAM, BAM, CRAM)

--output-fmt-option OPT[=VAL]

Specify a single output file format option in the form

of OPTION or OPTION=VALUE

-T, --reference FILE

Reference sequence FASTA FILE [null]

View bam files:

samtools view XXX.bam # No header samtools view -@ 16 -h XXX.bam # include header in SAM output samtools view -@ 16 -H xxx.bam # See the header only. The header may contains the reference genome fasta information.

Note that according to SAM pdf, the header is optional.

Sam and bam files conversion:

/opt/RNA-Seq/bin/samtools-0.1.19/samtools view --help # sam -> bam (need reference genome file) samtools view -@ 16 -b XXX.fa XXX.bt2.sam > XXX.bt2.bam # bam -> sam samtools view -@ 16 -h -o out.sam in.bam

Subset:

# assuming bam file is sorted & indexed samtools view -@ 16 XXX.bam "chr22:24000000-25000000" | more

Remove unpaired reads

According to the samtools manual, the 7th column represents the chromosome name of the mate/next read. If the field is set as '*' when the information is unavailable and set as '=' if RNEXT is identical RNAME.

When we use samtools view command to manually removed certain reads from a pair-end sam/bam file, the bam file will has a problem when it is used in picard MarkDuplicates. A solution is to run the following line to remove these unpaired reads (so we don't need to the VALIDATION_STRINGENCY parameter)

samtools view -h accepted_hits_bwa.bam | awk -F "\t" '$7!="*" { print $0 }' > accepted_hits_bwa2.sam

less bam files

http://martinghunt.github.io/2016/01/25/less-foo.bam.html

Convert SAM to BAM

samtools view -b input.sam > output.bam # OR samtools view -b input.sam -o output.bam

There is no need to add "-S". The header will be kept in the bam file.

Convert BAM to SAM

samtools view -h input.bam -o output.sam

where '-h' is to ensure the converted SAM file contains the header information. Generally, it is useful to store only sorted BAM files as they use much less disk space and are faster to process.

Convert BAM to FASTQ

- http://www.metagenomics.wiki/tools/samtools/converting-bam-to-fastq

- http://seqanswers.com/forums/showthread.php?t=7061

# Method 1: samtools samtools bam2fq SAMPLE.bam > SAMPLE.fastq cat SAMPLE.fastq | grep '^@.*/1$' -A 3 --no-group-separator > SAMPLE_r1.fastq cat SAMPLE.fastq | grep '^@.*/2$' -A 3 --no-group-separator > SAMPLE_r2.fastq # Method 2: picard java -Xmx2g -jar Picard/SamToFastq.jar I=SAMPLE.bam F=SAMPLE_r1.fastq F2=SAMPLE_r2.fastq # Method 3: bam2fastx # http://manpages.ubuntu.com/manpages/quantal/man1/bam2fastx.1.html # Method 4: Bedtools - bamtofastq # http://bedtools.readthedocs.io/en/latest/content/tools/bamtofastq.html # Single fastq bedtools bamtofastq -i input.bam -fq output.fastq # BAM should be sorted by query name (samtools sort -n aln.bam aln.qsort) if creating paired FASTQ samtools sort -n input.bam -o input_sorted.bam # sort by read namem (-n) bedtools bamtofastq -i input_sorted.bam -fq output_r1.fastq -fq2 output_r2.fastq # Method 5: Bamtools # http://github.com/pezmaster31/bamtools

Consider the ExomeLungCancer example

# ExomeLungCancer/test.SRR2923335_*.fastq $ # Method 1 $ samtools bam2fq bwa.sam > SAMPLE.fastq $ cat SAMPLE.fastq | grep '^@.*/1$' -A 3 --no-group-separator > SAMPLE_r1.fastq $ cat SAMPLE.fastq | grep '^@.*/2$' -A 3 --no-group-separator > SAMPLE_r2.fastq $ head -n 4 SAMPLE_r1.fastq @SRR2923335.1/1 NGCAAGTAGAGGGGCGCACACCTACCCGCAGTTGTTCACAGCCATGAGGTCGCCAACTAGCAGGAAGGGGTAGGTCAGCATGCTCACTGCAATCTGAAAC + #1=DDFDFHHHHHJIJJJJIJJJJJJJJIJJJJJIJIHHHHHFFFFFEECEDDDDDDDDDDDDDDDDDDD>BDDACCDDDDDDDDDDDDDDCCDDEDDDC $ head -n 4 output_r2.fastq @SRR2923335.1/2 AACCCTGGTAATGGCTGGAGGCAGNNCTTGGTACAGNGTGTNNGCGTGGTGTGTCNNTGCTNNCTGGGCCGGGGTGGGTCACTGGCACTCAGGCCTCTCT + CCCFFFFFFHHGHJJJJJEHJIJI##11CCG?DFGI#0)88##0/77CF=@FDDG##--;?##,,5=BABBDDD)9@D59ADCDDBDDDDDDDCDDDDC> $ # Method 4 $ samtools sort -n accepted_hits.bam -o accepted_sortbyname.bam $ /opt/SeqTools/bin/bedtools2/bin/bedtools bamtofastq -i accepted_sortbyname.bam -fq output_r1.fastq -fq2 output_r2.fastq # Compare the first read # Original fastq file $ head -n 4 ../test.SRR2923335_1.fastq @SRR2923335.1 1 length=100 NGCAAGTAGAGGGGCGCACACCTACCCGCAGTTGTTCACAGCCATGAGGTCGCCAACTAGCAGGAAGGGGTAGGTCAGCATGCTCACTGCAATCTGAAAC +SRR2923335.1 1 length=100 #1=DDFDFHHHHHJIJJJJIJJJJJJJJIJJJJJIJIHHHHHFFFFFEECEDDDDDDDDDDDDDDDDDDD>BDDACCDDDDDDDDDDDDDDCCDDEDDDC $ head -n 4 ../test.SRR2923335_2.fastq @SRR2923335.1 1 length=100 AACCCTGGTAATGGCTGGAGGCAGNNCTTGGTACAGNGTGTNNGCGTGGTGTGTCNNTGCTNNCTGGGCCGGGGTGGGTCACTGGCACTCAGGCCTCTCT +SRR2923335.1 1 length=100 CCCFFFFFFHHGHJJJJJEHJIJI##11CCG?DFGI#0)88##0/77CF=@FDDG##--;?##,,5=BABBDDD)9@D59ADCDDBDDDDDDDCDDDDC> $ ################################################################################################## $ head -n 4 output_r1.fastq @SRR2923335.1/1 NGCAAGTAGAGGGGCGCACACCTACCCGCAGTTGTTCACAGCCATGAGGTCGCCAACTAGCAGGAAGGGGTAGGTCAGCATGCTCACTGCAATCTGAAAC + #1=DDFDFHHHHHJIJJJJIJJJJJJJJIJJJJJIJIHHHHHFFFFFEECEDDDDDDDDDDDDDDDDDDD>BDDACCDDDDDDDDDDDDDDCCDDEDDDC $ head -n 4 output_r2.fastq @SRR2923335.1/2 AACCCTGGTAATGGCTGGAGGCAGNNCTTGGTACAGNGTGTNNGCGTGGTGTGTCNNTGCTNNCTGGGCCGGGGTGGGTCACTGGCACTCAGGCCTCTCT + CCCFFFFFFHHGHJJJJJEHJIJI##11CCG?DFGI#0)88##0/77CF=@FDDG##--;?##,,5=BABBDDD)9@D59ADCDDBDDDDDDDCDDDDC>

Using CRAM files

- CRAM files are more dense than BAM files. CRAM files are smaller than BAM by taking advantage of an additional external "reference sequence" file.

- http://www.htslib.org/workflow/#mapping_to_cram

- CRAM format

- The CRAM format was used (to replace the BAM format) in 1000genome

Extract single chromosome

https://www.biostars.org/p/46327/

samtools sort accepted_hits.bam -o accepted_hits_sorted.bam samtools index accepted_hits_sorted.bam samtools view -h accepted_hits_sorted.bam chr22 > accepted_hits_sub.sam

Primary, secondary, supplementary alignment

- Multiple mapping and primary from SAM format specification

- https://www.biostars.org/p/138116/

- supplementary alignment = chimeric alignments = non-linear alignments. It's often the case that the sample we're sequencing has structural variations when compared to the reference sequence. Imagine a 100bp read. Let us suppose that the first 50bp align to chr1 and the last 50bp to chr6.

- How to extract unique mapped results from Bowtie2 bam results? If you don't extract primary or unique reads from the sam/bam, the reads that maps equally well to multiple sequences will cause a serious bias in quantification of repeated elements.

To exact primary alignment reads (here), use

samtools view -F 260 input.bam

Extract reads based on read IDs

https://www.biostars.org/p/68358/#68362

samtools view Input.bam chrA:x-y | cut -f1 > idFile.txt LC_ALL=C grep -w -F -f idFile.txt < in.sam > subset.sam

Extract mapped/unmapped reads from BAM

http://www.htslib.org/doc/samtools.html

0x1 PAIRED paired-end (or multiple-segment) sequencing technology 0x2 PROPER_PAIR each segment properly aligned according to the aligner 0x4 UNMAP segment unmapped 0x8 MUNMAP next segment in the template unmapped 0x10 REVERSE SEQ is reverse complemented 0x20 MREVERSE SEQ of the next segment in the template is reverse complemented 0x40 READ1 the first segment in the template 0x80 READ2 the last segment in the template 0x100 SECONDARY secondary alignment 0x200 QCFAIL not passing quality controls 0x400 DUP PCR or optical duplicate 0x800 SUPPLEMENTARY supplementary alignment

# get the unmapped reads from a bam file use : samtools view -f 4 file.bam > unmapped.sam # get the output in bam use : samtools view -b -f 4 file.bam > unmapped.bam # get only the mapped reads, the parameter 'F', which works like -v of grep samtools view -b -F 4 file.bam > mapped.bam # get only properly aligned/properly paired reads (https://www.biostars.org/p/111481/) # # Q: What Does The "Proper Pair" Bitwise Flag? # A1: https://www.biostars.org/p/8318/ # Properly paired means the read itself as well as its mate are both mapped and # they were mapped within a reasonable distance given the expected distance # A2: https://www.biostars.org/p/12475/ # It means means both mates of a read pair map to the same chromosome, oriented towards each other, # and with a sensible insert size. samtools view -b -f 0x2 accepted_hits.bam > mappedPairs.bam

- https://www.biostars.org/p/14518/ So for all pair-end reads where one end is mapped and the other end isn't mapped, this should output all the mapped end entries:

samtools view -u -f 8 -F 260 map.bam > oneEndMapped.bam

and this will output all the unmapped end entries:

samtools view -u -f 4 -F 264 map.bam > oneEndUnmapped.bam

Count number of mapped/unmapped reads from BAM - samtools idxstats

# count the number of mapped reads samtools view -c -F0x4 accepted_hits.bam 9971 # count the number of unmapped reads samtools view -c -f0x4 accepted_hits.bam 35

The result can be checked with samtools idxstats command. See https://pepebioinformatics.wordpress.com/2014/05/29/samtools-count-mapped-and-unmapped-reads-in-bam-file/

$ samtools sort accepted_hits.bam -o accepted_hits_sorted.bam

$ samtools index accepted_hits_sorted.bam accepted_hits_sorted.bai # BAI file is binary

$ ls -t

accepted_hits_sorted.bai accepted_hits_sorted.bam accepted_hits.bam

$ samtools idxstats accepted_hits_sorted.bam

1 249250621 949 1

2 243199373 807 0

3 198022430 764 0

4 191154276 371 1

5 180915260 527 0

6 171115067 411 3

7 159138663 888 5

8 146364022 434 0

9 141213431 409 2

10 135534747 408 1

11 135006516 490 2

12 133851895 326 1

13 115169878 149 1

14 107349540 399 3

15 102531392 249 1

16 90354753 401 4

17 81195210 466 1

18 78077248 99 0

19 59128983 503 1

20 63025520 275 1

21 48129895 87 0

22 51304566 228 2

X 155270560 315 1

Y 59373566 6 0

MT 16569 10 0

* 0 0 4

$ samtools idxstats accepted_hits_sorted.bam | awk '{s+=$3+$4} END {print s}'

10006

$ samtools idxstats accepted_hits_sorted.bam | awk '{s+=$3} END {print s}'

9971

Find unmapped reads

Find multi-mapped reads

http://seqanswers.com/forums/showthread.php?t=16427

samtools view -F 4 file.bam | awk '{printf $1"\n"}' | sort | uniq -d | wc -l

# uniq -d will only print duplicate lines

Realignment and recalibration

https://www.biostars.org/p/141784/

- Realignment and recalibration won't change these metrics output from samtools flagstat.

- Recalibration is typically not needed anymore (the same goes for realignment if you're using something like GATK's haplotype caller).

- Realignment just locally realigns things and typically the assigned MAPQ values don't change (unmapped mates etc. also won't change).

- Recalibration typically affects only base qualities.

htslib

Suppose we download a vcf.gz from NCBI ftp site. We want to subset chromosome 1 and index the new file.

- tabix - subset a vcf.gz file or index a vcf.gz file

tabix -h common_all_20160601.vcf.gz 1: > common_all_20160601_1.vcf $ tabix -h Version: 1.3 Usage: tabix [OPTIONS] [FILE] [REGION [...]] Indexing Options: -0, --zero-based coordinates are zero-based -b, --begin INT column number for region start [4] -c, --comment CHAR skip comment lines starting with CHAR [null] -C, --csi generate CSI index for VCF (default is TBI) -e, --end INT column number for region end (if no end, set INT to -b) [5] -f, --force overwrite existing index without asking -m, --min-shift INT set minimal interval size for CSI indices to 2^INT [14] -p, --preset STR gff, bed, sam, vcf -s, --sequence INT column number for sequence names (suppressed by -p) [1] -S, --skip-lines INT skip first INT lines [0] Querying and other options: -h, --print-header print also the header lines -H, --only-header print only the header lines -l, --list-chroms list chromosome names -r, --reheader FILE replace the header with the content of FILE -R, --regions FILE restrict to regions listed in the file -T, --targets FILE similar to -R but streams rather than index-jumps

- bgzip - create a vcf.gz file from a vcf file

bgzip -c common_all_20160601_1.vcf > common_all_20160601_1.vcf.gz # Indexing. Output is <common_all_20160601_1.vcf.gz.tbi> tabix -f -p vcf common_all_20160601_1.vcf.gz $ bgzip -h Version: 1.3 Usage: bgzip [OPTIONS] [FILE] ... Options: -b, --offset INT decompress at virtual file pointer (0-based uncompressed offset) -c, --stdout write on standard output, keep original files unchanged -d, --decompress decompress -f, --force overwrite files without asking -h, --help give this help -i, --index compress and create BGZF index -I, --index-name FILE name of BGZF index file [file.gz.gzi] -r, --reindex (re)index compressed file -s, --size INT decompress INT bytes (uncompressed size) -@, --threads INT number of compression threads to use [1]

hts-nlim

hts-nim: scripting high-performance genomic analyses and source in github.

Examples include bam filtering (bam files), read counts in regions (bam & bed files) and quality control variant call files (vcf files). https://github.com/brentp/hts-nim-tools

SAMStat

Displaying sequence statistics for next generation sequencing. SAMStat reports nucleotide composition, length distribution, base quality distribution, mapping statistics, mismatch, insertion and deletion error profiles, di-nucleotide and 10-mer over-representation. The output is a single html5 page which can be interpreted by a non-specialist.

Usage

samstat <file.sam> <file.bam> <file.fa> <file.fq> ....

For each input file SAMStat will create a single html page named after the input file name plus a dot html suffix.

Picard

A set of tools (in Java) for working with next generation sequencing data in the BAM (http://samtools.sourceforge.net) format.

https://github.com/broadinstitute/picard

Note that As of version 2.0.1 (Nov. 2015) Picard requires Java 1.8 (jdk8u66). The last version to support Java 1.7 was release 1.141. Use the following to check your Java version

brb@T3600 ~/github/picard $ java -version java version "1.7.0_101" OpenJDK Runtime Environment (IcedTea 2.6.6) (7u101-2.6.6-0ubuntu0.14.04.1) OpenJDK 64-Bit Server VM (build 24.95-b01, mixed mode)

See my Linux -> JRE and JDK page.

Some people reported errors or complain about memory usage. See this post from seqanswers.com. And HTSeq python program is another option.

sudo apt-get install ant

git clone https://github.com/broadinstitute/picard.git

cd picard

git clone https://github.com/samtools/htsjdk.git

ant -lib lib/ant package-commands # We will see 'BUILD SUCCESSFUL'

# It will create <dist/picard.jar>

SamToFastq

java jvm-args -jar picard.jar PicardCommandName OPTION1=value1 OPTION2=value2... # For example cd ~/github/freebayes/test/tiny java -Xmx2g -jar ~/github/picard/dist/picard.jar SamToFastq \ INPUT=NA12878.chr22.tiny.bam \ FASTQ=tmp_1.fq \ SECOND_END_FASTQ=tmp_2.fq \ INCLUDE_NON_PF_READS=True \ VALIDATION_STRINGENCY=SILENT wc -l tmp_1.fq # 6532 tmp_1.fq wc -l tmp_2.fq # 6532 tmp_2.fq

SAM validation error; Mate Alignment start should be 0 because reference name = *

Errors in SAM/BAM files can be diagnosed with ValidateSamFile

When I run picard with MarkDuplicates, AddOrReplaceReadGroups or ReorderSam parameter, I will get an error on the bam file aligned to human+mouse genomes but excluding mouse mappings:

Ignoring SAM validation error: ERROR: Record 3953, Read name D00748:53:C8KPMANXX:7:1109:11369:5479, Mate Alignment start should be 0 because reference name = *.'

https://www.biostars.org/p/9876/ & https://www.biostars.org/p/108148/. Many of the validation errors reported by Picard are "technically" errors, but do not have any impact for the vast majority of downstream processing.

For example,

java -Xmx10g -jar picard.jar MarkDuplicates VALIDATION_STRINGENCY=LENIENT METRICS_FILE=MarkDudup.metrics INPUT=input.bam OUTPUT=output.bam # OR to avoid messages java -Xmx10g -jar picard.jar MarkDuplicates VALIDATION_STRINGENCY=SILENT METRICS_FILE=MarkDudup.metrics INPUT=input.bam OUTPUT=output.bam

Another (different) error message is:

SAM validation error: ERROR: Record 16642, Read name SRR925751.3835, First of pair flag should not be set for unpaired read.

BEDtools

The bed format is similar to gtf format but with more compact representation. We can use the apt-get method to install it on Linux environment.

bedtools intersect bedtools bamtobed bedtools bedtobam bedtools getfasta bedtools bamtofastq [OPTIONS] -i <BAM> -fq <FASTQ>

For example,

bedtools intersect -wo -a RefSeq.gtf -b XXX.bed | wc -l # in this case every line is one exon bedtools intersect -wo -a RefSeq.gtf -b XXX.bed | cut -f9 | cut -d ' ' -f2 | more bedtools intersect -wo -a RefSeq.gtf -b XXX.bed | cut -f9 | cut -d ' ' -f2 | sort -u | wc -l bedtools intersect -wo -a RefSeq.bed -b XXX.bed | more # one gene is one line with multiple intervals

One good use of bedtools is to find the intersection of bam and bed files (to double check the insertion/deletion/junction reads in bed files are in accepted_hits.bam). See this page

$ bedtools --version bedtools v2.17.0 $ bedtools intersect -abam accepted_hits.bam -b junctions.bed | samtools view - | head -n 3

We can use bedtool to reverse bam files to fastq files. See https://www.biostars.org/p/152956/

Installation

git clone https://github.com/arq5x/bedtools2.git cd bedtools2 make

bamtofastq

cd ~/github/freebayes/test/tiny # Working directory

~/github/samtools/samtools sort -n NA12878.chr22.tiny.bam NA12878.chr22.tiny.qsort

~/github/bedtools2/bin/bamToFastq -i NA12878.chr22.tiny.qsort.bam \

-fq aln.end1.fq \

-fq2 aln.end2.fq 2> bamToFastq.warning.out

## 60 warnings; see <bamToFastq.warning.out>

*****WARNING: Query ST-E00118:53:H02GVALXX:1:1102:1487:23724 is marked as paired, but its mate does not occur next to it in your BAM file. Skipping.

wc -l tmp.out # 60

wc -l aln.end1.fq # 6548

wc -l aln.end2.fq # 6548

# recall tmp_1.fq is 6532

Cufflinks package

Transcriptome assembly and differential expression analysis for RNA-Seq.

Both Cufflinks and Cuffdiff accept SAM and BAM files as input. It is not uncommon for a single lane of Illumina HiSeq sequencing to produce FASTQ and BAM files with a combined size of 20 GB or larger. Laboratories planning to perform more than a small number of RNA-seq experiments should consider investing in robust storage infrastructure, either by purchasing their own hardware or through cloud storage services.

Tuxedo protocol

- Differential gene and transcript expression analysis of RNA-seq experiments with TopHat and Cufflinks by Trapnell, et al 2012.

- https://speakerdeck.com/stephenturner/rna-seq-qc-and-data-analysis-using-the-tuxedo-suite

- bowtie2 - fast alignment

- tophat2 - splice alignment (rna-seq reads, rna are spliced, introns are removed, some reads may span over 2 exons)

- cufflinks - transcript assembly & quantitation

- cuffdiff2 - differential expression

Cufflinks - assemble reads into transcript

Installation

# about 47MB on ver 2.2.1 for Linux binary version wget http://cole-trapnell-lab.github.io/cufflinks/assets/downloads/cufflinks-2.2.1.Linux_x86_64.tar.gz sudo tar xzvf ~/Downloads/cufflinks-2.2.1.Linux_x86_64.tar.gz -C /opt/RNA-Seq/bin/ export PATH=$PATH:/opt/RNA-Seq/bin/cufflinks-2.2.1.Linux_x86_64/ # test cufflinks -h

Cufflinks uses this map (done from Tophat) against the genome to assemble the reads into transcripts.

# Quantifying Known Transcripts using Cufflinks cufflinks -o OutputDirectory/ -G refseq.gtf mappedReads.bam # De novo Transcript Discovery using Cufflinks cufflinks -o OutputDirectory/ mappedReads.bam

The output files are genes.fpkm_tracking, isoforms.fpkm_tracking, skipped.gtf and transcripts.gtf.

It can be used to calculate FPKM.

- https://www.biostars.org/p/11378/

- http://www.partek.com/Tutorials/microarray/User_Guides/UnderstandingReads.pdf

Cuffcompare - compares transcript assemblies to annotation

Cuffmerge - merges two or more transcript assemblies

First create a text file <assemblies.txt>

./dT_bio/transcripts.gtf ./dT_ori/transcripts.gtf ./dT_tech/transcripts.gtf ./RH_bio/transcripts.gtf ./RH_ori/transcripts.gtf ./RH_tech/transcripts.gtf

Then run

cd GSE11209 cuffmerge -g genes.gtf -s genome.fa assemblies.txt

Cuffdiff

Finds differentially expressed genes and transcripts/Detect differential splicing and promoter use.

Cuffdiff takes the aligned reads from two or more conditions and reports genes and transcripts that are differentially expressed using a rigorous statistical analysis.

Follow the tutorial, we can quickly test the cuffdiff program.

$ wget http://cufflinks.cbcb.umd.edu/downloads/test_data.sam $ cufflinks ./test_data.sam $ ls -l total 56 -rw-rw-r-- 1 mli mli 221 2013-03-05 15:51 genes.fpkm_tracking -rw-rw-r-- 1 mli mli 231 2013-03-05 15:51 isoforms.fpkm_tracking -rw-rw-r-- 1 mli mli 0 2013-03-05 15:51 skipped.gtf -rw-rw-r-- 1 mli mli 41526 2009-09-26 19:15 test_data.sam -rw-rw-r-- 1 mli mli 887 2013-03-05 15:51 transcripts.gtf

In real data,

cd GSE11209

cuffdiff -p 5 -o cuffdiff_out -b genome.fa -L dT,RH \

-u merged_asm/merged.gtf \

./dT_bio/accepted_hits.bam,./dT_ori/accepted_hits.bam,./dT_tech/accepted_hits.bam \

./RH_bio/accepted_hits.bam,./RH_ori/accepted_hits.bam,./RH_tech/accepted_hits.bam

N.B.: the FPKM value (<genes.fpkm_tracking>) is generated per condition not per sample. If we want FPKM per sample, we want to specify one condition for each sample.

The method of constructing test statistics can be found online.

Pipeline

CummeRbund

Plots abundance and differential expression results from Cuffdiff. CummeRbund also handles the details of parsing Cufflinks output file formats to connect Cufflinks and the R statistical computing environment. CummeRbund transforms Cufflinks output files into R objects suitable for analysis with a wide variety of other packages available within the R environment and can also now be accessed through the Bioconductor website

The tool appears on the paper Differential gene and transcript expression analysis of RNA-seq experiments with TopHat and Cufflinks by Trapnell, et al 2012.

Finding differentially expressed genes

Big pictures

BWA/Bowtie samtools fa ---------> sam ------> sam/bam (sorted indexed, short reads), vcf or tophat Rsamtools GenomeFeatures edgeR (normalization) ---------> --------------> table of counts --------->

Readings

- Introduction to RNA Sequencing and Analysis Kukurba KR, Montgomery SB. (2015) RNA Sequencing and Analysis. Cold Spring Harb Protoc

- RNA-Seq: a revolutionary tool for transcriptomics

- Alignment and visualization from bioinformatics.ca.

- The RNA-seqlopedia from the Cresko Lab of the University of Oregon.

- RNA-Seq Analysis by Simon Andrews.

- Benchmarking differential expression analysis tools for RNA-Seq: normalization-based vs. log-ratio transformation-based methods by Thomas P. Quinn 2018. Analysis scripts (R) are included.

Technical replicate vs biological replicate

We sequence more DNA from the same DNA "library", which is called a "technical replicate". If we perform a new experiment with a new organism/tissue/population of cells, which is called a "biological replicate".

Youtube videos

- Analyze public dataset by using Galaxy and IGV David Coil from UC Davis Genoem Center

Download the raw fastq data GSE19602 from GEO and uncompress fastq.bz2 to fastq (~700MB) file. NOTE: the data downloaded from ncbi is actually sra file format. We can use fastq_dump program in SRA_toolkit to convert sra to fastq. http://www.ncbi.nlm.nih.gov/Traces/sra/?view=software

~/Downloads/sratoolkit.2.3.2-ubuntu64/bin/fastq-dump ~/Downloads/SRR034580.sra

If we want to run Galaxy locally, we can install it simply by 2 command lines

hg clone https://bitbucket.org/galaxy/galaxy-dist/ cd galaxy-dist hg update stable

To run Galaxy locally, we do

cd galaxy-dist sh run.sh --reload

The command line will show Starting server in PID XXXX. serving on http://127.0.0.1:8080. We can use Ctrl + C to stop the Galaxy process.

Note: One problem with this simple instruction is we have not created a user yet.

- Upload one fastq data. Click 'refresh' icon on the history panel to ensure the data is uploaded. Don't use 'refresh' button on the browser; it will cause an error for the current process.

- FASTQ Groomer. Convert the data to Galaxy needs. NGS: QC and manipulation => Illumina FASTQ. FASTQ quality scores type: Sanger. (~10 minutes). This part uses CPU not memory.

- Open a new browser tab and go to ftp://ftp.plantbiology.msu.edu/pub/data/Eukaryotic_Projects/o_sativa/annotation_dbs/pseudomolecules/version_6.1/all.dir/. Right click the file all.cDNA and copy link location. In Galaxy click 'Upload File from your computer' paste URL to URL/Text entry.

- Scroll down Galaxy and select NGS:Mapping -> Map with BWA. PS. for some reason, BWA is not available. So I use Bowtie2 instead. The output of Bowtie2 is bam file.

- For reference genome, choose 'Use one from the history'. Galaxy automatically find the reference file 'ftp://ftp.plantbiology....' from history.

- Library mate-paired => Single-end.

- FASTQ file => 2: FASTQ Groomer on data 1.

- BWA settings to use => Commonly Used.

- Execute (~ 15 minutes)

- We can view the alignment file (sam format) created from BWA by using UCSV or IGV (input is bam or bai format). We now use NGS: SAM Tools to convert sam file to bam file. Click 'SAM-to-BAM converts SAM format to BAM format' tool.

- Choose the source for the reference list => History

- Converts SAM file => 4: Map with BWA on data 2 and data 3.

- Using reference file => 3:ftp://ftp.plantbiology.....

- Execute (~5 minutes)

- We want to create bai file which is a shortcut to IGV. It breaks the data into smaller accessible chunks. So when you pull up a certain cDNA, it goes straight to the subset. Go to the history, click the pencil icon (Edit Attributes) on the file SAM-to-BAM on data 3 and data 4.

- Look at 'Convert to new format' section. Go ahead and click 'Convert'. (< 1 minute). This will create another file.

- Use browser and go to ftp website to download all.cDNA file to desktop. The desktop should contain 3 files - all.cDNA, rice.bam and rice.bai files for IGV.

- Goto http://www.broadinstitute.org/software/igv/download to download IGV which is a java-based application. I need to install java machine first by install openjdk-7-jdk. IGV by default will launch 'Human hg18' genome. Launch IGV by cd IGV_2.2.13;java -Xmx750m -jar igv.jar. I found the IGV input requires sam+bai OR bam+bai. So we need to click the pencil icon to create bai file first before we want to upload sam or bam file to IGV.

- Goto File => Import Genome. Call it 'rice' and select 'all.cDNA' sequence file. Click 'Save' button.

- Goto File => Upload from File => rice.bam.

- Top right panel is cDNA

- Middle right panel has a lot of 'boxes' which is a read. If we zoom in, we can see some read points to left (backward) while some points to right (forward). On the top is a histogram. For example, a base may be covered by a lot of reads then the histogram will show the high frequence.

- If we keep zoom in, we can see color at the Bottom right panel. Keeping zoom in, we can see the base G, C, T, A themselves.

- Using IGV, we can 1. examine coverage.

- We can 2. check 'alternative splicing'. (not for this cDNA)

- We can 3. examine SNPs for base change. If we see gray color (dark gray is hight quality read, light gray means low quality read), it means they are perfect match. If we see color, it means there is a change. For example, a read is 'C' but in fact it should be 'A'. If a case has many high quality reads, and half of them are 'G' but the reference genome shows 'A'. This is most likely a SNP. This is heterogeisity.

- Tophat - align RNA seq data to genomic DNA

- Suppose we have use Galaxy to upload 2 data. One is SRR034580 and we have run FASTQ Groomer on data 1. The second data is SRR034584 and we also have run FASTQ Groomer on data 2. We also have uploaded reference genome sequence.

- Goto Galaxy and find NGS: RNA Analysis => Tophat.

- reference genome => Use one from the history

- RNA-Seq FASTQ file => 2; FASTQ Groomer on data 1.

- Execute. This will create 2 files. One is splice junctions and the other is accepted_hits. We queue the job and run another Tophat with the 2nd 'groomer'ed data file. We are going to work on accepted_hits file.

- While the queue are running, we can click on 'pencil' icon on 'accepted_hits' job and run the utlity 'Convert to new format' (Bam to Bai). We should do this for both 'accepted_hits' files.

- For some reason, the execution failed: An error occurred with this dataset: TopHat v2.0.7 TopHat v2.0.7 Error indexing reference sequence /bin/sh: 1: bowtie-build: not found.

- Cufflinks. We will estimate transcript abundance by using FPKM (RPKM).

- SAM or BAM file of alignmed RNA-Seq reads => tophat on data 2.. accepted_hits

- Use Reference Annotation - No (choose Yes if we want annotation. This requires GTF format. See http://genome.ucsc.edu/FAQ/FAQformat.html#format4. We don't have it for rice.)

- Execute. This will create 3 files. Gene expression, transcript expression and assembled transcripts.

- We also run Cufflinks for 2nd accepted_hits file. (~ 25 minutes)

- Cuffcompare. Compare one to each other.

- GTF file produced by Cufflinks => assembled transcript from the 1st data

- Use another GTF file produced by Cufflinks => Yes. It automatically find the other one.

- Execute. (< 10 minutes). This will create 7 files. Transcript accuracy, tmap file & refmap flie from each assembled transcripts, combined transcripts and transcript tracking.

- We are interested in combined transcripts file (to use in Cuffdiff).

- Cuffdiff.

- Transcripts => combined transcripts.

- SAM or BAM file of aligned RNA-Seq reads => 1st accepted_hits

- SAM or BAM file or aligned RNA-Seq reads => 2nd accepted_hits

- Execute. This will generate 11 files. Isoform expression, gene expression, TSS groups expression, CDS Expression FPKM Tracking, isoform FPKM tracking, gene FPKM tracking, TSS groups FPKM tracking, CDS FPKM tracking, splicing diff, promoters diff, CDS diff. We are interested in 'gene expression' file. We can save it and open it in Excel.

- IGV - 2 RNA-Seq datasets aligned to genomic DNA using Tophat

- Load the reference genome rice (see above)

- Upload from file => rice4.bam. Upload from file => rice5.bam.

- Alternative RNA splicing.

edX course

PH525.5x Case Study: RNA-seq data analysis. The course notes are forming a book. Check out https://github.com/genomicsclass/labs and http://genomicsclass.github.io/book/.

Variant calling

- Variants include (See p21 on Broad Presentation -> Pipeline Talks -> MPG_Primer_2016-Seq_and_Variant_Discovery)

- Germline SNPs & indels

- Somatic SNVs & indels

- Somatic CNVs

Overview/papers

- Systematic comparison of variant calling pipelines using gold standard personal exome variants by Hwang 2015.

- A statistical framework for SNP calling, mutation discovery, association mapping and population genetical parameter estimation from sequencing data by Heng Li.

- http://www.slideshare.net/AustralianBioinformatics/introduction-to-nextgeneration

- http://www.slideshare.net/thomaskeane/overview-of-methods-for-variant-calling-from-nextgeneration-sequence-data-9608507

- http://www.slideshare.net/jandot/nextgeneration-sequencing-variation-discovery

- Strelka2: Fast and accurate variant calling for clinical sequencing applications Sep 2017

Workflow

- Sequence reads -> Quality control -> Mapping -> Mark Duplicates -> Local realignment around INDELS -> Base quality score recalibration -> Variant calling -> Filtering & Annotation -> Querying.

- http://www.ngscourse.org/Course_Materials/variant_calling/presentation/2015-Cambridge_variant_calling.pdf Lots of pictures to explain the concepts!!

- Galaxy workflow guide for variant detection

- http://seqanswers.com/wiki/How-to/exome_analysis

- Alignment step includes

- mark PCR duplicates some reads will be exact copies of each other. These reads are called PCR duplicates due to amplification biases in PCR. They share the same sequence and the same alignment position and could cause trouble during SNP calling as possibly some allele is overrepresented due to amplification biases. See Conceptual overview of duplicate flagging and Call variants with samtools 1.0

- local alignment around indels Indels within reads often lead to false positive SNPs at the end of sequence reads. To prevent this artifact, local realignment around indels is done using local realignment tools from the Genome Analysis Tool Kit.

- quality recalibration

- SNP calling step includes producing raw SNP calls and filter SNPs

- Annotation

- Alignment step includes

- WGS/WES Mapping to Variant Calls http://www.htslib.org/workflow/

Step 1: Mapping

bwa index <ref.fa> bwa mem -R '@RG\tID:foo\tSM:bar\tLB:library1' <ref.fa> <read1.fa> <read1.fa> > lane.sam samtools fixmate -O bam <lane.sam> <lane_fixmate.bam> samtools sort -O bam -o <lane_sorted.bam> -T </tmp/lane_temp> <lane_fixmate.sam>

Step 2: Improvement

java -Xmx2g -jar GenomeAnalysisTK.jar -T RealignerTargetCreator -R <ref.fa> -I <lane.bam> \

-o <lane.intervals> --known <bundle/b38/Mills1000G.b38.vcf>

java -Xmx4g -jar GenomeAnalysisTK.jar -T IndelRealigner -R <ref.fa> -I <lane.bam> \

-targetIntervals <lane.intervals> --known <bundle/b38/Mills1000G.b38.vcf> -o <lane_realigned.bam>

java -Xmx4g -jar GenomeAnalysisTK.jar -T BaseRecalibrator -R <ref.fa> \

-knownSites >bundle/b38/dbsnp_142.b38.vcf> -I <lane.bam> -o <lane_recal.table>

java -Xmx2g -jar GenomeAnalysisTK.jar -T PrintReads -R <ref.fa> -I <lane.bam> \

--BSQR <lane_recal.table> -o <lane_recal.bam>

java -Xmx2g -jar MarkDuplicates.jar VALIDATION_STRINGENCY=LENIENT \

INPUT=<lane_1.bam> INPUT=<lane_2.bam> INPUT=<lane_3.bam> OUTPUT=<library.bam>

samtools merge <sample.bam> <library1.bam> <library2.bam> <library3.bam>

samtools index <sample.bam>

Step 3: Variant calling

samtools mpileup -ugf <ref.fa> <sample1.bam> <sample2.bam> <sample3.bam> | \

bcftools call -vmO z -o <study.vcf.gz>

Non-R Software

Variant detector/discovery, genotyping

- Evaluation of variant detection software for pooled next-generation sequence data

- A survey of tools for variant analysis of next-generation genome sequencing data

- Evaluation of variant detection software for pooled next-generation sequence data

- Reliable Identification of Genomic Variants from RNA-Seq Data

- Bioinformatics at COMAV

Variant Identification

- Samtools

- https://wikis.utexas.edu/display/bioiteam/Variant+calling+using+SAMtools

- http://petridishtalk.com/2013/02/01/variant-discovery-annotation-filtering-with-samtools-the-gatk/

- http://lab.loman.net/2012/10/31/a-simple-guide-to-variant-calling-with-bwa-samtools-varscan2/

- Samtools and GATK

- Difference Between Samtools And Gatk Algorithms

- GATK by BROAD Institute.

- varscan2

- bcbio - Validated, scalable, community developed variant calling and RNA-seq analysis. Validated variant calling with human genome build 38.

- freebayes and a complete tutorial from alignment to variant calling.

GUI software

Variant Annotation

Biocoductor and R packages

- Bioconductor VariantTools package can export to VCF and VariantAnnotation package can read VCF format files. See also the Annotating Variants workflow in Bioconductor. See also SIFT.Hsapiens.dbSNP137, COSMIC.67, and PolyPhen.Hsapiens.dbSNP131 packages.

- R packages seqminer

fundamental - VariantAnnotation

readVcf() can be used to read a *.vcf or *.vcf.gz file.

dbSNP - SNPlocs.Hsapiens.dbSNP.20101109

Compare the rs numbers of the data and dbSNP.

variant wrt genes - TxDb.Hsapiens.UCSC.hg19.knownGene

Look for the column LOCATION which has values coding, fiveUTR, threeUTR, intron, intergenic, spliceSite, and promoter.

amino acid change for the non-synonymous variants - BSgenome.Hsapiens.UCSC.hg19

Look for the column CONSEQUENCE which has values synonymous or nonsynonymous.

SIFT & Polyphen for predict the impact of amino acid substitution on a human protein - PolyPhen.Hsapiens.dbSNP131

Look for the column PREDICTION which has values possibly damaging or benign.

rentrez tutorial

https://ropensci.org/tutorials/rentrez_tutorial.html

Accessing and Manipulating Biological Databases Exercises

http://www.r-exercises.com/2017/05/04/accessing-and-manipulating-biological-databases-exercises-part-2/? utm_source=rss&utm_medium=rss&utm_campaign=accessing-and-manipulating-biological-databases-exercises-part-2

vcf

vcf format

- One row is one variant or one position/nucleotide in genome.

- Mapping quality is called MQ on the INFO column of a vcf file. It is on the 5th column (no header) or the MQ field on the last column of a sam file.

- MQ denotes RMS mapping quality in vcf.

- MapQ = -10 log10(P) where P = probability that this mapping is NOT the correct one.

- https://en.wikipedia.org/wiki/Phred_quality_score. If Phred assigns a quality score of 30 to a base, the chances that this base is called incorrectly are 1 in 1000 (1/10^(MAPQ/10)=1/10^3)

- When MAPQ=0, it means that the read maps to two or more places with equal score. See here.

- When MAPQ=255, it means the mapping quality is not available. See https://biostar.usegalaxy.org/p/4077/

- http://davetang.org/muse/2011/09/14/mapping-qualities/

- Fastq file

- variant category (snp, insertion, deletion, mixed) http://www.1000genomes.org/node/101

- What is a VCF and how should I interpret it? from broad.

- See also SAM/BAM format.

Below is an example of each record/row

| 1 | #CHROM | 2 |

| 2 | POS | 14370 |

| 3 | ID | rs6054257 or . |

| 4 | REF | G |

| 5 | ALT | A |

| 6 | QUAL | 29 |

| 7 | FILTER | PASS or . |

| 8 | INFO | NS=3;DP=14;AF=0.5;DB;H2 |

| opt | FORMAT | GT:AD:DP:GQ:PL |

| opt | NA00001 | 0/1:3,2:5:34:34,0,65 |

where the meanings of GT(genotype), AD(Allelic depths for the ref and alt alleles in the order listed), HQ (haplotype quality), GQ (genotype quality)... can be found in the header of the VCF file.

Examples

See the samtools and GATK output from GSE48215subset data included in BRB-SeqTools.

Count number of rows

grep -cv "#" vcffile

Filtering

- Filtering A Sam File For Quality Scores. For example, to filter out reads with MAQP < 30 in SAM/BAM files

samtools view -b -q 30 input.bam > filtered.bam

- To filter vcf based on MQ, use for example,

bcftools filter -i"QUAL >= 20 && DP >= 5 && MQ >= 30" INPUTVCF > OUTPUTVCF

Open with LibreOffice Calc

Use delimiter 'Tab' only and uncheck the others.

It will then get the correct header #CHROM, POS, ID, REF, ALT, QUAL, FILTER, INFO (separated by ;), FORMAT (separated by :), SampleID (separated by :) where INFO field contains site-level annotations and FORMAT&SampleID fields contain sample-level annotations.

Count number of records, snps and indels

- vcftools.

- https://www.biostars.org/p/49386/, https://www.biostars.org/p/109086/, https://www.biostars.org/p/50923/, https://wikis.utexas.edu/display/bioiteam/Variant+calling+using+SAMtools.

- Summary statistics

bcftools stats XXX.vcf # SN, Summary numbers: # SN [2]id [3]key [4]value SN 0 number of samples: 0 SN 0 number of records: 71712 SN 0 number of no-ALTs: 0 SN 0 number of SNPs: 65950 SN 0 number of MNPs: 0 SN 0 number of indels: 5762 SN 0 number of others: 0 SN 0 number of multiallelic sites: 0 SN 0 number of multiallelic SNP sites: 0

- Remove the header

grep -v "^#" input.vcf > output.vcf

- Count number of records

grep -c -v "^#" XXX.vcf # -c means count, -v is invert search

VariantsToTable tool from Broad GATK

VariantAnnotation package

- readVcf(), ScanVcfParam() and readInfo() functions.

- scanVcfHeader() and scanVcfParam() functions.

To install the R package, first we need to install required software in Linux

sudo apt-get update sudo apt-get install libxml2-dev sudo apt-get install libcurl4-openssl-dev

and then

source("http://bioconductor.org/biocLite.R")

biocLite("VariantAnnotation")

library(VariantAnnotation)

vcf <- readVcf("filename.vcf", "hg19")

# Header

header(vcf)

# Sample names

samples(header(vcf))

# Geno

geno(header(vcf))

# Genomic positions

head(rowRanges(vcf), 3)

ref(vcf)[1:5]

# Variant quality