Glmnet

Basic

- https://en.wikipedia.org/wiki/Lasso_(statistics). It has a discussion when two covariates are highly correlated. For example if gene [math]\displaystyle{ i }[/math] and gene [math]\displaystyle{ j }[/math] are identical, then the values of [math]\displaystyle{ \beta _{j} }[/math] and [math]\displaystyle{ \beta _{k} }[/math] that minimize the lasso objective function are not uniquely determined. Elastic Net has been designed to address this shortcoming.

- Strongly correlated covariates have similar regression coefficients, is referred to as the grouping effect. From the wikipedia page "one would like to find all the associated covariates, rather than selecting only one from each set of strongly correlated covariates, as lasso often does. In addition, selecting only a single covariate from each group will typically result in increased prediction error, since the model is less robust (which is why ridge regression often outperforms lasso)".

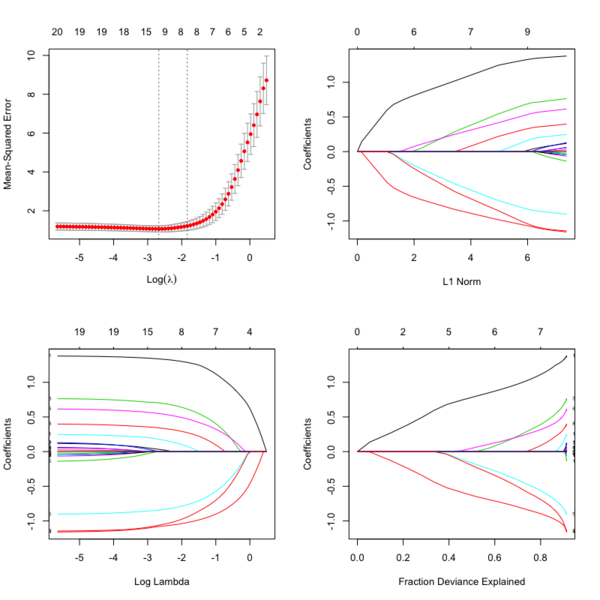

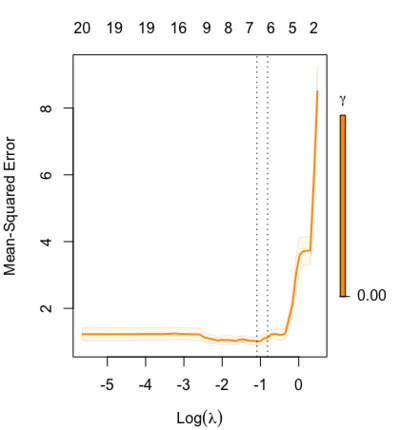

- Glmnet Vignette. It tries to minimize [math]\displaystyle{ RSS(\beta) + \lambda [(1-\alpha)\|\beta\|_2^2/2 + \alpha \|\beta\|_1] }[/math]. The elastic-net penalty is controlled by [math]\displaystyle{ \alpha }[/math], and bridge the gap between lasso ([math]\displaystyle{ \alpha = 1 }[/math]) and ridge ([math]\displaystyle{ \alpha = 0 }[/math]). Following is a CV curve (adaptive lasso) using the example from glmnet(). Two vertical lines are indicated: left one is lambda.min (that gives minimum mean cross-validated error) and right one is lambda.1se (the most regularized model such that error is within one standard error of the minimum). For the tuning parameter [math]\displaystyle{ \lambda }[/math],

- The larger the [math]\displaystyle{ \lambda }[/math], more coefficients are becoming zeros (think about coefficient path plots) and thus the simpler (more regularized) the model.

- If [math]\displaystyle{ \lambda }[/math] becomes zero, it reduces to the regular regression and if [math]\displaystyle{ \lambda }[/math] becomes infinity, the coefficients become zeros.

- In terms of the bias-variance tradeoff, the larger the [math]\displaystyle{ \lambda }[/math], the higher the bias and the lower the variance of the coefficient estimators.

- Video by Trevor Hastie

File:Glmnetplot.svg File:Glmnet path.svg File:Glmnet l1norm.svg

set.seed(1010) n=1000;p=100 nzc=trunc(p/10) x=matrix(rnorm(n*p),n,p) beta=rnorm(nzc) fx= x[,seq(nzc)] %*% beta eps=rnorm(n)*5 y=drop(fx+eps) px=exp(fx) px=px/(1+px) ly=rbinom(n=length(px),prob=px,size=1) ## Full lasso set.seed(999) cv.full <- cv.glmnet(x, ly, family='binomial', alpha=1, parallel=TRUE) plot(cv.full) # cross-validation curve and two lambda's plot(glmnet(x, ly, family='binomial', alpha=1), xvar="lambda", label=TRUE) # coefficient path plot plot(glmnet(x, ly, family='binomial', alpha=1)) # L1 norm plot log(cv.full$lambda.min) # -4.546394 log(cv.full$lambda.1se) # -3.61605 sum(coef(cv.full, s=cv.full$lambda.min) != 0) # 44 ## Ridge Regression to create the Adaptive Weights Vector set.seed(999) cv.ridge <- cv.glmnet(x, ly, family='binomial', alpha=0, parallel=TRUE) wt <- 1/abs(matrix(coef(cv.ridge, s=cv.ridge$lambda.min) [, 1][2:(ncol(x)+1)] ))^1 ## Using gamma = 1, exclude intercept ## Adaptive Lasso using the 'penalty.factor' argument set.seed(999) cv.lasso <- cv.glmnet(x, ly, family='binomial', alpha=1, parallel=TRUE, penalty.factor=wt) # defautl type.measure="deviance" for logistic regression plot(cv.lasso) log(cv.lasso$lambda.min) # -2.995375 log(cv.lasso$lambda.1se) # -0.7625655 sum(coef(cv.lasso, s=cv.lasso$lambda.min) != 0) # 34

- cv.glmnet() has a logical parameter parallel which is useful if a cluster or cores have been previously allocated

- Ridge regression and the LASSO

- Standard error/Confidence interval

- Standard Errors in GLMNET and Confidence intervals for Ridge regression

- Why SEs are not meaningful and are usually not provided in penalized regression?

- Hint: standard errors are not very meaningful for strongly biased estimates such as arise from penalized estimation methods.

- Penalized estimation is a procedure that reduces the variance of estimators by introducing substantial bias.

- The bias of each estimator is therefore a major component of its mean squared error, whereas its variance may contribute only a small part.

- Any bootstrap-based calculations can only give an assessment of the variance of the estimates.

- Reliable estimates of the bias are only available if reliable unbiased estimates are available, which is typically not the case in situations in which penalized estimates are used.

- Hottest glmnet questions from stackexchange.

- Standard errors for lasso prediction. There might not be a consensus on a statistically valid method of calculating standard errors for the lasso predictions.

- Code for Adaptive-Lasso for Cox's proportional hazards model by Zhang & Lu (2007). This can compute the SE of estimates. The weights are originally based on the maximizers of the log partial likelihood. However, the beta may not be estimable in cases such as high-dimensional gene data, or the beta may be unstable if strong collinearity exists among covariates. In such cases, robust estimators such as ridge regression estimators would be used to determine the weights.

- Some issues:

- With group of highly correlated features, Lasso tends to select amongst them arbitrarily.

- Often empirically ridge has better predictive performance than lasso but lasso leads to sparser solution

- Elastic-net (Zou & Hastie '05) aims to address these issues: hybrid between Lasso and ridge regression, uses L1 and L2 penalties.

- Gradient-Free Optimization for GLMNET Parameters

- Outlier detection and robust variable selection via the penalized weighted LAD-LASSO method Jiang 2020

- LASSO REGRESSION (HOME MADE)

- Course notes on Optimization for Machine Learning

- High dimensional data analysis course by P. Breheny

- How to calculate R Squared value for Lasso regression using glmnet in R

- Getting AIC/BIC/Likelihood from GLMNet

Lambda [math]\displaystyle{ \lambda }[/math]

- A list of potential lambdas: see Linear Regression case. The λ sequence is determined by lambda.max and lambda.min.ratio.

- lambda.max is computed from the input x and y; it is the maximal value for lambda such that all the coefficients are zero. How does glmnet compute the maximal lambda value?. However see the Random data example.

- lambda.min.ratio (default is ifelse(nobs<nvars,0.01,0.0001)) is the ratio of smallest value of the generated λ sequence (say lambda.min) to lambda.max.

- The program then generated nlambda values linear on the log scale from lambda.max down to lambda.min.

- The sequence of lambda does not change with different data partitions from running cv.glmnet().

- Avoid supplying a single value for lambda (for predictions after CV use predict() instead). Supply instead a decreasing sequence of lambda values. I'll get different coefficients when I use coef(cv.glmnet obj) and coef(glmnet obj). See the complete code on gist.

cvfit <- cv.glmnet(x, y, family = "cox", nfolds=10) fit <- glmnet(x, y, family = "cox", lambda = lambda) coef.cv <- coef(cvfit, s = lambda) coef.fit <- coef(fit) length(coef.cv[coef.cv != 0]) # 31 length(coef.fit[coef.fit != 0]) # 30 all.equal(lambda, cvfit$lambda[40]) # TRUE length(cvfit$lambda) # [1] 100

- Choosing hyper-parameters (α and λ) in penalized regression by Florian Privé

- lambda.min vs lambda.1se

- The lambda.1se represents the value of λ in the search that was simpler than the best model (lambda.min), but which has error within 1 standard error of the best model ( lambda.min < lambda.1se ). In other words, using the value of lambda.1se as the selected value for λ results in a model that is slightly simpler than the best model but which cannot be distinguished from the best model in terms of error given the uncertainty in the k-fold CV estimate of the error of the best model.

- lambda.1se can be checked by looking at the CV plot. The CV plot contains the SE of RMSE/Deviance and cv.glmnet object contains cvm and cvup elements. If we draw a horizontal line at cvup[i] where i is the index of the lambda.min, then the largest lambda satisfying cvm[j] <= cvup[i] will be lambda.1se. This can be seen on the example of Logistic Regression: family = "binomial".

- lambda.1se not being in one standard error of the error When we say 1 standard error, we are not talking about the standard error across the lambda's but the standard error across the folds for a given lambda. Suppose A=cvfit$cvsd[which(cv.lasso$lambda == cv.lasso$lambda.min)] is the sd at lambda.min and B=cvfit$cvm[which(cv.lasso$lambda == cv.lasso$lambda.min)] the minimum cross-validated MSE. If we draw a horizontal line at A+B, then the largest lambda less than the cross point (on x-axis) should be lambda.1se.

- The lambda.min option refers to value of λ at the lowest CV error. The error at this value of λ is the average of the errors over the k folds and hence this estimate of the error is uncertain.

- https://www.rdocumentation.org/packages/glmnet/versions/2.0-10/topics/glmnet

- glmnetUtils: quality of life enhancements for elastic net regression with glmnet

- Mixing parameter: alpha=1 is the lasso penalty, and alpha=0 the ridge penalty and anything between 0–1 is Elastic net.

- RIdge regression uses Euclidean distance/L2-norm as the penalty. It won't remove any variables.

- Lasso uses L1-norm as the penalty. Some of the coefficients may be shrunk exactly to zero.

- In ridge regression and lasso, what is lambda?

- Lambda is a penalty coefficient. Large lambda will shrink the coefficients.

- cv.glment()$lambda.1se gives the most regularized model such that error is within one standard error of the minimum

- A deep dive into glmnet: penalty.factor, standardize, offset

- Lambda sequence is not affected by the "penalty.factor"

- How "penalty.factor" used by the objective function may need to be corrected

- A deep dive into glmnet: predict.glmnet

Optimal lambda for Cox model

See Regularization Paths for Cox’s Proportional Hazards Model via Coordinate Descent Simon 2011. We choose the λ value which maximizes [math]\displaystyle{ \hat{CV}(\lambda) }[/math].

- [math]\displaystyle{ \begin{align} \hat{CV}_{i}(\lambda) = l(\beta_{-i}(\lambda)) - l_{-i}(\beta_{-i}(\lambda)). \end{align} }[/math]

Our total goodness of fit estimate, [math]\displaystyle{ \hat{CV}(\lambda) }[/math], is the sum of all [math]\displaystyle{ \hat{CV}_{i}(\lambda). }[/math] By using the equation above – subtracting the log-partial likelihood evaluated on the non-left out data from that evaluated on the full data – we can make efficient use of the death times of the left out data in relation to the death times of all the data.

Get the partial likelihood of a Cox PH model with new data

Random data

- For random survival (response) data, glmnet easily returns 0 covariates by using lambda.min.

- For random binary or quantitative trait (response) data, it seems glmnet returns at least 1 covariate at lambda.min which is max(lambda). This seems contradicts the documentation which describes all coefficients are zero with max(lambda).

See the code here.

Mixing parameter [math]\displaystyle{ \alpha }[/math]

cva.glmnet() from the glmnetUtils package to choose both the alpha and lambda parameters via cross-validation, following the approach described in the help page for cv.glmnet.

Underfittig, overfitting and relaxed lasso

- https://cran.r-project.org/web/packages/glmnet/vignettes/relax.pdf

- The Relaxed Lasso: A Better Way to Fit Linear and Logistic Models

- Overfitting: small lambda, lots of non-zero coefficients

- Underfitting: large lambda, not enough variables

- Relaxed Lasso by Nicolai Meinshausen

- Why is “relaxed lasso” different from standard lasso?

- https://web.stanford.edu/~hastie/TALKS/hastieJSM2017.pdf#page=13

- Stabilizing the lasso against cross-validation variability

Plots

library(glmnet) data(QuickStartExample) # x is 100 x 20 matrix cvfit = cv.glmnet(x, y) fit = glmnet(x, y) oldpar <- par(mfrow=c(2,2)) plot(cvfit) # mse vs log(lambda) plot(fit) # coef vs L1 norm plot(fit, xvar = "lambda", label = TRUE) # coef vs log(lambda) plot(fit, xvar = "dev", label = TRUE) # coef vs Fraction Deviance Explained par(oldpar)

print() method

?print.glmnet and ?print.cv.glmnet

Extract/compute deviance

deviance(fitted_glmnet_object) coxnet.deviance(pred = NULL, y, x = 0, offset = NULL, weights = NULL, beta = NULL) # This calls a Fortran function loglike()

According to the source code, coxnet.deviance() returns 2 *(lsat-fit$flog).

coxnet.deviance() was used in assess.coxnet.R (CV, not intended for use by users) and buildPredmat.coxnetlist.R (CV).

See also Survival → glmnet.

cv.glmnet and deviance

Usage

cv.glmnet(x, y, weights = NULL, offset = NULL, lambda = NULL,

type.measure = c("default", "mse", "deviance", "class", "auc", "mae",

"C"), nfolds = 10, foldid = NULL, alignment = c("lambda",

"fraction"), grouped = TRUE, keep = FALSE, parallel = FALSE,

gamma = c(0, 0.25, 0.5, 0.75, 1), relax = FALSE, trace.it = 0, ...)

type.measure parameter (loss to use for CV):

- default

- type.measure = deviance which uses squared-error for gaussian models (a.k.a type.measure="mse"), logistic and poisson regression (PS: for binary response data I found that type='class' gives a discontinuous CV curve while 'deviance' give a smooth CV curve),

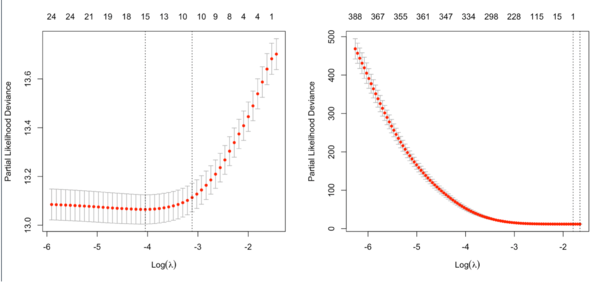

- type.measure = partial-likelihood for the Cox model (note that the y-axis from plot.cv.glmnet() gives deviance but the values are quite different from what deviace() gives from a non-CV modelling).

- mse or mae (mean absolute error) can be used by all models except the "cox"; they measure the deviation from the fitted mean to the response

- class applies to binomial and multinomial logistic regression only, and gives misclassification error.

- auc is for two-class logistic regression only, and gives area under the ROC curve.

- C is Harrel's concordance measure, only available for cox models

grouped parameter

- This is an experimental argument, with default TRUE, and can be ignored by most users.

- For the "cox" family, grouped=TRUE obtains the CV partial likelihood for the Kth fold by subtraction; by subtracting the log partial likelihood evaluated on the full dataset from that evaluated on the on the (K-1)/K dataset. This makes more efficient use of risk sets. With grouped=FALSE the log partial likelihood is computed only on the Kth fold

- Gradient descent for the elastic net Cox-PH model

| package | deviance | CV |

|---|---|---|

| survival | -2*fit$loglik[1] | |

| glmnet | deviance(fit) assess.glmnet(fit, newx, newy, family = "cox") coxnet.deviance(x, y, beta) |

cv.glmnet(x, y, lambda, family="cox", nfolds, foldid)$cvm which calls cv.glmnet.raw() (save lasso models for each CV) which calls buildPredmat.coxnetlist(foldid) to create a matrix cvraw (eg 10x100) and then calls cv.coxnet(foldid) (it creates weights of length 10 based on status and do cvraw/=weights) and cvstats(foldid) which will calculate weighted mean of cvraw matrix for each lambda and return the cvm vector; cvm[1]=sum(original cvraw[,1])/sum(weights) Note: the document says the measure (cvm) is partial likelihood for survival data. But cvraw calculation from buildPredmat.coxnetlist() shows the original/unweighted cvraw is CVPL. |

| BhGLM | measure.bh(fit, new.x, new.y) | cv.bh(fit, nfolds=10, foldid, ncv)$measures[1] which calls an internal function measure.cox(y.obj, lp) (lp is obtained from CV) which calls bcoxph() [coxph()] using lp as the covariate and returns deviance = -2 * ff$loglik[2] (a CV deviance) cindex = summary(ff)$concordance[[1]] (a CV c-index) |

An example from the glmnet vignette The deviance value is the same from both survival::deviance() and glmnet::deviance(). But how about cv.glmnet()$cvm (partial-likelihood)?

library(glmnet)

library(survival)

library(tidyverse); library(magrittr)

data(CoxExample)

dim(x) # 1000 x 30

# I'll focus some lambdas based on one run of cv.glmnet()

set.seed(1); cvfit = cv.glmnet(x, y, family = "cox", lambda=c(10,2,1,.237,.016,.003))

rbind(cvfit$lambda, cvfit$cvm,

deviance(glmnet(x, y, family = "cox", lambda=c(10, 2, 1, .237, .016, .003))))%>%

set_rownames(c("lambda", "cvm", "deviance"))

[,1] [,2] [,3] [,4] [,5] [,6]

lambda 10.00000 2.00000 1.00000 0.23700 0.01600 0.0030

cvm 13.70484 13.70484 13.70484 13.70316 13.07713 13.1101

deviance 8177.16378 8177.16378 8177.16378 8177.16378 7707.53515 7697.3357

-2* coxph(Surv(y[,1], y[, 2]) ~ x)$loglik[1]

[1] 8177.164

coxph(Surv(y[,1], y[, 2]) ~ x)$loglik[1]

[1] -4088.582

# coxnet.deviance: compute the deviance (-2 log partial likelihood) for right-censored survival data

fit1 = glmnet(x, y, family = "cox", lambda=.016)

coxnet.deviance(x=x, y=y, beta=fit1$coef)

# [1] 8177.164

fit2 = glmnet(x, y, family = "cox", lambda=.003)

coxnet.deviance(x=x, y=y, beta=fit2$coef)

# [1] 8177.164

# assess.glmnet

assess.glmnet(fit1, newx=x, newy=y)

# $deviance

# [1] 7707.444

# attr(,"measure")

# [1] "Partial Likelihood Deviance"

#

# $C

# [1] 0.7331241

# attr(,"measure")

# [1] "C-index"

assess.glmnet(fit2, newx=x, newy=y)

# $deviance

# [1] 7697.314

# attr(,"measure")

# [1] "Partial Likelihood Deviance"

#

# $C

# [1] 0.7342417

# attr(,"measure")

# [1] "C-index"

No need for glmnet if we have run cv.glmnet

https://stats.stackexchange.com/a/77549 Do not supply a single value for lambda. Supply instead a decreasing sequence of lambda values. glmnet relies on its warms starts for speed, and its often faster to fit a whole path than compute a single fit.

cv.glmnet in cox model

Note: the CV result may changes unless we fix the random seed.

Note that the y-axis on the plot depends on the type.measure parameter. It is not the objective function used to find the estimator. For survival data, the y-axis is deviance (-2*loglikelihood) [so the optimal lambda should give a minimal deviance value].

It is not always partial likelihood device has a largest value at a large lambda. In the following two plots, the first one is from the glmnet vignette and the 2nd one is from the coxnet vignette. The survival data are not sparse in both examples.

Sparse data

library(glmnet); library(survival)

n = 100; p <- 1000

beta1 = 2; beta2 = -1; beta3 =1; beta4 = -2

lambdaT = .002 # baseline hazard

lambdaC = .004 # hazard of censoring

set.seed(1234)

x1 = rnorm(n)

x2 = rnorm(n)

x3 <- rnorm(n)

x4 <- rnorm(n)

# true event time

T = Vectorize(rweibull)(n=1, shape=1, scale=lambdaT*exp(-beta1*x1-beta2*x2-beta3*x3-beta4*x4))

C = rweibull(n, shape=1, scale=lambdaC) #censoring time

time = pmin(T,C) #observed time is min of censored and true

event = time==T # set to 1 if event is observed

cox <- coxph(Surv(time, event)~ x1 + x2 + x3 + x4); cox

-2*cox$loglik[2] # deviance [1] 301.7461

summary(cox)$concordance[1] # 0.9006085

# create a sparse matrix

X <- cbind(x1, x2, x3, x4, matrix(rnorm(n*(p-4)), nr=n))

colnames(X) <- paste0("x", 1:p)

# X <- data.frame(X)

y <- Surv(time, event)

set.seed(1234)

nfold <- 10

foldid <- sample(rep(seq(nfold), length = n))

cvfit <- cv.glmnet(X, y, family = "cox", foldid = foldid)

plot(cvfit)

plot(cvfit$lambda, log = "y")

assess.glmnet(cvfit, newx=X, newy = y, family="cox") # return deviance 361.4619 and C 0.897421

# Question: what lambda has been used?

# Ans: assess.glmnet() calls predict.cv.glmnet() which by default uses s = "lambda.1se"

fit <- glmnet(X, y, family = "cox", lambda = cvfit$lambda.min)

assess.glmnet(fit, newx=X, newy = y, family="cox") # deviance 308.3646 and C 0.9382788

cvfit$cvm[cvfit$lambda == cvfit$lambda.min]

# [1] 7.959283

fit <- glmnet(X, y, family = "cox", lambda = cvfit$lambda.1se)

assess.glmnet(fit, newx=X, newy = y, family="cox") # deviance 361.4786 and C 0.897421

deviance(fit)

# [1] 361.4786

fit <- glmnet(X, y, family = "cox", lambda = 1e-3)

assess.glmnet(fit, newx=X, newy = y, family="cox") # deviance 13.33405 and C 1

fit <- glmnet(X, y, family = "cox", lambda = 1e-8)

assess.glmnet(fit, newx=X, newy = y, family="cox") # deviance 457.3695 and C .5

fit <- glmnet(cbind(x1,x2,x3,x4), y, family = "cox", lambda = 1e-8)

assess.glmnet(fit, newx=X, newy = y, family="cox")

# Error in h(simpleError(msg, call)) :

# error in evaluating the argument 'x' in selecting a method for function 'as.matrix': Cholmod error

# 'X and/or Y have wrong dimensions' at file ../MatrixOps/cholmod_sdmult.c, line 90

deviance(fit) # [1] 301.7462

library(BhGLM)

X2 <- data.frame(X)

f1 = bmlasso(X2, y, family = "cox", ss = c(.04, .5))

measure.bh(f1, X2, y)

# deviance Cindex

# 303.39 0.90

o <- cv.bh(f1, foldid = foldid)

o$measures # deviance and C

# deviance Cindex

# 311.743 0.895

update() function

update() will update and (by default) re-fit a model. It does this by extracting the call stored in the object, updating the call and (by default) evaluating that call. Sometimes it is useful to call update with only one argument, for example if the data frame has been corrected.

It can be used in glmnet() object without a new implementation method.

- Linear regression

lm(y ~ x + z, data=myData) lm(y ~ x + z, data=subset(myData, sex=="female")) lm(y ~ x + z, data=subset(myData, age > 30))

- Lasso regression

R> fit <- glmnet(glmnet(X, y, family="cox", lambda=cvfit$lambda.min); fit Call: glmnet(x = X, y = y, family = "cox", lambda = cvfit$lambda.min) Df %Dev Lambda 1 21 0.3002 0.1137 R> fit2 <- update(fit, subset = c(rep(T, 50), rep(F, 50)); fit2 Call: glmnet(x = X[1:50, ], y = y[1:50], family = "cox", lambda = cvfit$lambda.min) Df %Dev Lambda 1 24 0.4449 0.1137 R> fit3 <- update(fit, lambda=cvfit$lambda); fit3 Call: glmnet(x = X, y = y, family = "cox", lambda = cvfit$lambda) Df %Dev Lambda 1 1 0.00000 0.34710 2 2 0.01597 0.33130 ...

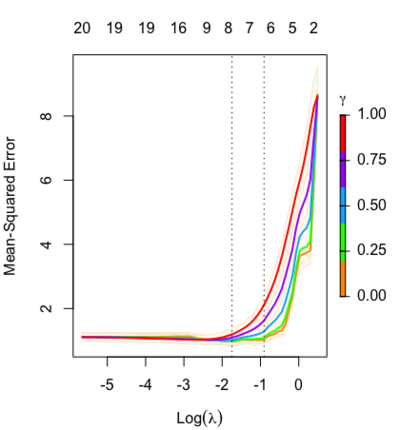

Relaxed fit and [math]\displaystyle{ \gamma }[/math] parameter

https://cran.r-project.org/web/packages/glmnet/vignettes/relax.pdf

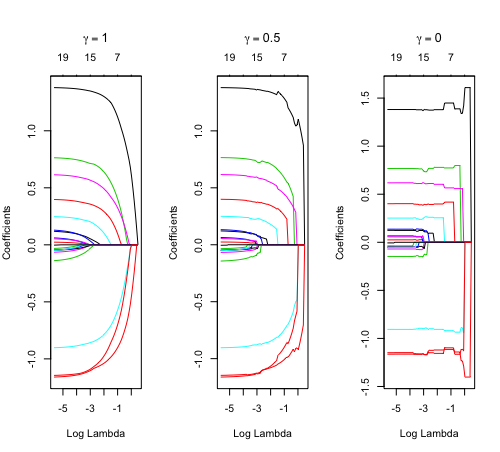

Relaxed fit: Take a glmnet fitted object, and then for each lambda, refit the variables in the active set without any penalization.

Suppose the glmnet fitted linear predictor at [math]\displaystyle{ \lambda }[/math] is [math]\displaystyle{ \hat\eta_\lambda(x) }[/math] and the relaxed version is [math]\displaystyle{ \tilde\eta_\lambda(x) }[/math]. We also allow for shrinkage between the two:

- [math]\displaystyle{ \begin{align} \tilde \eta_{\lambda,\gamma}= \gamma\hat\eta_\lambda(x) + (1-\gamma)\tilde\eta_\lambda(x). \end{align} }[/math]

[math]\displaystyle{ \gamma\in[0,1] }[/math] is an additional tuning parameter which can be selected by cross validation.

The debiasing will potentially improve prediction performance, and CV will typically select a model with a smaller number of variables.

The default behavior of extractor functions like predict and coef, as well as plot will be to present results from the glmnet fit (not cv.glmnet), unless a value of [math]\displaystyle{ \gamma }[/math] is given different from the default value [math]\displaystyle{ \gamma=1 }[/math].

Question: how does cv.glmnet() select [math]\displaystyle{ \gamma }[/math] parameter? Ans: it depends on the parameter type.measure in cv.glmnet.

library(glmnet) data(QuickStartExample) fitr=glmnet(x,y, relax=TRUE) set.seed(1) cfitr=cv.glmnet(x,y,relax=TRUE) c(fitr$lambda.min, fitr$lambda.1se) # [1] 0.08307327 0.15932708 str(cfitr$relaxed) plot(cfitr),oldpar <- par(mfrow=c(1,3), mar = c(5,4,6,2)) plot(fitr, main = expression(gamma == 1)) plot(fitr,gamma=0.5, main = expression(gamma == .5)) plot(fitr,gamma=0, main = expression(gamma == 0)) par(oldpar) |

Special cases:

set.seed(1) cfitr2=cv.glmnet(x,y,gamma=0,relax=TRUE) # default gamma = c(0, 0.25, 0.5, 0.75, 1) plot(cfitr2) c(cfitr2$lambda.min, cfitr2$lambda.1se) # [1] 0.08307327 0.15932708 str(cfitr2$relaxed) |

Computation time

beta1 = 2; beta2 = -1 lambdaT = .002 # baseline hazard lambdaC = .004 # hazard of censoring set.seed(1234) x1 = rnorm(n) x2 = rnorm(n) # true event time T = Vectorize(rweibull)(n=1, shape=1, scale=lambdaT*exp(-beta1*x1-beta2*x2)) # No censoring event2 <- rep(1, length(T)) system.time(fit <- cv.glmnet(x, Surv(T,event2), family = 'cox')) # user system elapsed # 4.701 0.016 4.721 system.time(fitr <- cv.glmnet(x, Surv(T,event2), family = 'cox', relax= TRUE)) # user system elapsed # 161.002 0.382 161.573

predict() and coef() methods

?predict.glmnet OR ?coef.glmnet OR ?coef.relaxed. Similar to other predict methods, this functions predicts fitted values, logits, coefficients and more from a fitted "glmnet" object.

## S3 method for class 'glmnet'

predict(object, newx, s = NULL, type = c("link",

"response", "coefficients", "nonzero", "class"), exact = FALSE,

newoffset, ...)

## S3 method for class 'relaxed'

predict(object, newx, s = NULL, gamma = 1,

type = c("link", "response", "coefficients", "nonzero", "class"),

exact = FALSE, newoffset, ...)

## S3 method for class 'glmnet'

coef(object, s = NULL, exact = FALSE, ...)

?predict.cv.glmnet OR ?coef.cv.glmnet OR ?coef.cv.relaxed. This function makes predictions from a cross-validated glmnet model, using the stored "glmnet.fit" object, and the optimal value chosen for lambda (and gamma for a 'relaxed' fit).

## S3 method for class 'cv.glmnet'

predict(object, newx, s = c("lambda.1se",

"lambda.min"), ...)

## S3 method for class 'cv.relaxed'

predict(object, newx, s = c("lambda.1se",

"lambda.min"), gamma = c("gamma.1se", "gamma.min"), ...)

Cindex

https://www.rdocumentation.org/packages/glmnet/versions/3.0-2/topics/Cindex

Usage:

Cindex(pred, y, weights = rep(1, nrow(y)))

assess.glmnet

https://www.rdocumentation.org/packages/glmnet/versions/3.0-2/topics/assess.glmnet

Usage:

assess.glmnet(object, newx = NULL, newy, weights = NULL,

family = c("gaussian", "binomial", "poisson", "multinomial", "cox",

"mgaussian"), ...)

confusion.glmnet(object, newx = NULL, newy, family = c("binomial",

"multinomial"), ...)

roc.glmnet(object, newx = NULL, newy, ...)

ridge regression

# example from SGL package

set.seed(1)

n = 50; p = 100; size.groups = 10

X = matrix(rnorm(n * p), ncol = p, nrow = n)

beta = (-2:2)

y = X[,1:5] %*% beta + 0.1*rnorm(n)

data = list(x = X, y = y)

cvfit <- cv.glmnet(X, y, alpha = 0)

plot(cvfit)

o <- coef(cvfit, lambda = cvfit$lambda.min) %>% drop()

sum(o != 0) # [1] 101.

# Too biased.

o[1:10]

# (Intercept) V1 V2 V3 V4

# -3.269401e-01 -2.253226e-36 -8.900799e-37 5.198885e-37 1.311976e-36

# V5 V6 V7 V8 V9

# 1.873125e-36 1.582532e-37 2.085781e-37 4.732839e-37 2.997614e-37

y_predicted <- predict(cvfit, s = cvfit$lambda.min, newx = X)

# Sum of Squares Total and Error

sst <- sum((y - mean(y))^2)

sse <- sum((y_predicted - y)^2)

# R squared

rsq <- 1 - sse / sst

rsq # 0.46

library(SGL) # sparse group lasso

set.seed(1)

index <- ceiling(1:p / size.groups)

cvFit = cvSGL(data, index, type = "linear", alpha=.95) # this alpha is the default

plot(cvFit)

cvFit$fit$beta[, 20] # 20th lambda gives smallest negative log likelihood

# identify correct predictors

# [1] -10.942712 -6.167799 0.000000 6.595406 14.442019 0.000000 ...

set.seed(1)

cvFit2 = cvSGL(data, index, type = "linear", alpha=0)

plot(cvFit2)

cvFit2$fit$beta[, 20]

# [1] -10.8417371 -6.5251240 0.2476438 6.7223001 14.1605263 0.2149542

# [7] 0.2481450 0.1404282 0.1799818 0.3784596 0.0000000 0.0000000 ...

- Tikhonov regularization (ridge regression). It was used to handle ill-posed/overfitting situation. Ridge regression shrinks the coefficients by a uniform factor of [math]\displaystyle{ {\displaystyle (1+N\lambda )^{-1}}{\displaystyle (1+N\lambda )^{-1}} }[/math] and does not set any coefficients to zero.

- cvSGL

- How and when: ridge regression with glmnet. On training data, ridge regression fits less well than the OLS but the parameter estimate is more stable. So it does better in prediction because it is less sensitive to extreme variance in the data such as outliers.

Group lasso

- BhGLM on github, Gsslasso Cox: a Bayesian hierarchical model for predicting survival and detecting associated genes by incorporating pathway information 2019

- grplasso package. Why use group lasso instead of lasso? Already for the special case in linear regression when not only continuous but also categorical predictors (factors) are present, the lasso solution is not satisfactory as it only selects individual dummy variables instead of whole factors. Moreover, the lasso solution depends on how the dummy variables are encoded. Choosing different contrasts for a categorical predictor will produce different solutions in general.

- gglasso: Group Lasso Penalized Learning Using a Unified BMD Algorithm. Using LASSO in R with categorical variables

- SGL

- MSGLasso

- sgPLS, paper Liquet 2016

- pcLasso: Principal Components Lasso package

- pclasso paper, slides, Blog

- Each feature must be assigned to a group

- It allows to assign each feature to groups (including overlapping).

library(pcLasso) set.seed(1) n = 50; p = 100; size.groups = 10 index <- ceiling(1:p / size.groups) X = matrix(rnorm(n * p), ncol = p, nrow = n) beta = (-2:2) y = X[,1:5] %*% beta + 0.1*rnorm(n) groups <- vector("list", 3) for (k in 1:2) { groups[[k]] <- 5 * (k-1) + 1:5 } groups[[3]] <- 11:p cvfit <- cv.pcLasso(X, y, ratio = 0.8, groups = groups) plot(cvfit) pred.pclasso <- predict(cvfit, xnew = X, s = "lambda.min") mean((y-pred.pclasso)^2) # [1] 1.956387 library(SGL) index <- ceiling(1:p / size.groups) data = list(x = X, y = y) set.seed(1) cvFit = cvSGL(data, index, type = "linear") Fit = SGL(data, index, type = "linear") # SGL() uses a different set of lambdas than cvSGL() does # After looking at cvFit$lambdas; Fit$lambdas # I should pick the last lambda pred.SGL <- predictSGL(Fit, X, length(Fit$lambdas)) mean((y-pred.SGL)^2) # [1] 0.146027 library(ggplot2) library(tidyr) dat <- tibble(y=y, SGL=pred.SGL, pclasso=pred.pclasso) %>% gather("method", "predict", 2:3) ggplot(dat, aes(x=y, y=predict, color=method)) + geom_point(shape=1)

- Gsslasso Cox: A Bayesian Hierarchical Model for Predicting Survival and Detecting Associated Genes by Incorporating Pathway Information, Tang 2019

- Spike-and-slab regression

- Gsslasso Cox: A Bayesian Hierarchical Model for Predicting Survival and Detecting Associated Genes by Incorporating Pathway Information, Tang et al., 2019

- Spike-and-Slab Group Lassos for Grouped Regression and Sparse Generalized Additive Models Bai 2020

- Spike-and-slab type variable selection in the Cox proportional hazards model for high-dimensional features Wu 2021

- Fast Sparse-Group Lasso Method for Multi-response Cox Model with Applications to UK Biobank Li 2020

Minimax concave penalty (MCP)

- The minimax concave penalty (MCP)

- High-dimensional data anlaysis by Patrick Breheny

- grpregOverlap and grpreg

- ncvreg package by Patrick Breheny. It is an R package for fitting regularization paths for linear regression, GLM, and Cox regression models using lasso or nonconvex penalties, in particular the minimax concave penalty (MCP) and smoothly clipped absolute deviation (SCAD) penalty, with options for additional L2 penalties (the “elastic net” idea).

- glmgraph

penalty.factor

The is available in glmnet() but not in cv.glmnet().

Adaptive lasso and weights

Oracle property and adaptive lasso

- Variable Selection via Nonconcave Penalized Likelihood and Its Oracle Properties, Fan & Li (2001) JASA

- Adaptive Lasso: What it is and how to implement in R. Adaptive lasso weeks to minimize [math]\displaystyle{ RSS(\beta) + \lambda \sum_1^p \hat{\omega}_j |\beta_j| }[/math] where [math]\displaystyle{ \lambda }[/math] is the tuning parameter, [math]\displaystyle{ \hat{\omega}_j = \frac{1}{(|\hat{\beta}_j^{ini}|)^\gamma} }[/math] is the adaptive weights vector and [math]\displaystyle{ \hat{\beta}_j^{ini} }[/math] is an initial estimate of the coefficients obtained through ridge regression. Adaptive Lasso ends up penalizing more those coefficients with lower initial estimates. [math]\displaystyle{ \gamma }[/math] is a positive constant for adjustment of the adaptive weight vector, and the authors suggest the possible values of 0.5, 1 and 2.

- When n goes to infinity, [math]\displaystyle{ \hat{\omega}_j |\beta_j| }[/math] converges to [math]\displaystyle{ I(\beta_j \neq 0) }[/math]. So the adaptive Lasso procedure can be regarded as an automatic implementation of best-subset selection in some asymptotic sense.

- What is the oracle property of an estimator? An oracle estimator must be consistent in 1) variable selection and 2) consistent parameter estimation.

- Oracle property: Oracle property is a name given to techniques for estimating the regression parameters in the models fitted to high-dimensional data which have the property that they can correctly select the nonzero coefficients with the probability converging to one and that the estimators of nonzero coefficients are asymptotically normal with the identical means and covariances that they would have if the zero coefficients were known in advance that is the estimators are asymptotically as efficient as the ideal estimation assisted by an 'oracle' who knows which coefficients are nonzero.

- (Linear regression) The adaptive lasso and its oracle properties Zou (2006, JASA)

- (Cox model) Adaptive-LASSO for Cox's proportional hazard model by Zhang and Lu (2007, Biometrika)

- When the LASSO fails???. Adaptive lasso is used to demonstrate its usefulness.

Survival data

- The sum of errors term can be replaced with [math]\displaystyle{ -l(\beta, \gamma) }[/math], where [math]\displaystyle{ l(\cdot, \cdot) }[/math] stands for the log-likelihood function and [math]\displaystyle{ \gamma }[/math] for the intercept. See The lasso method for variable selection in the cox model 1997.

- Regularization Paths for Cox's Proportional Hazards Model via Coordinate Descent Noah Simon, Jerome H. Friedman, Trevor Hastie, Rob Tibshirani, 2011

- Cox regression: Estimation by Patrick Breheny

- Another implementation coxnet

- Gradient lasso for Cox proportional hazards model

- Prediction method. ?predict.cv.glmnet is not clear. ?coef.glmnet is more clear.

data(CoxExample) dim(x) # 1000 x 30 ind.train <- 1:nrow(x)/2 cv.fit <- cv.glmnet(x[ind.train, ], y[ind.train, ], family="cox") coef(cv.fit, s = "lambda.min") # 30 x 1, coef(...) is equivalent to predict(type="coefficients",...) pr <- predict(cv.fit, x[-ind.train, ], type = "link", s = "lambda.min") # the default option for type is 'link' which gives the linear predictors for "cox" models. pr2 <- x[-ind.train, ] %*% coef(cv.fit, s = "lambda.min") all.equal(pr[, 1], pr2[, 1]) # [1] TRUE class(pr) # [1] "matrix" class(pr2) # [1] "dgeMatrix" # we can also use predict() with glmnet object pr22 <- predict(fit, x[-ind.train, ], type='link', s = cv.fit$lambda.min) all.equal(pr[, 1], pr22[, 1]) # [1] TRUE pr3 <- predict(cv.fit, x[-ind.train, ], type = "response", s = "lambda.min") range(pr3) # relative risk [1] 0.05310623 19.80143519

- Solving the Cox Proportional Hazards Model and Its Applications. Solving the Model by Coordinate Descent.

glmnet 4.0: family

glmnet v4.0: generalizing the family parameter

Timing

nvec <- c(100, 400)

pvec <- c(1000, 5000)

for(n in nvec)

for(p in pvec) {

nzc = trunc(p/10) # 1/10 variables are non-zeros

x = matrix(rnorm(n * p), n, p)

beta = rnorm(nzc)

fx = x[, seq(nzc)] %*% beta/3

hx = exp(fx)

ty = rexp(n, hx)

tcens = rbinom(n = n, prob = 0.3, size = 1) # censoring indicator

y = cbind(time = ty, status = 1 - tcens) # y=Surv(ty,1-tcens) with library(survival)

foldid = sample(rep(seq(10), length = n))

o <- system.time(fit1_cv <- cv.glmnet(x, y, family = "cox", foldid = foldid) )

cat(sprintf("n=%1d, p=%5d, time=%8.3f\n", n, p, o['elapsed']))

}

Running on core i7 2.6GHz, macbook pro 2018. Unit is seconds.

| p ∖ n | 100 | 400 |

|---|---|---|

| 1000 | 5 | 58 |

| 5000 | 9 | 96 |

Xeon E5-2680 v4 @ 2.40GHz

| p ∖ n | 100 | 400 |

|---|---|---|

| 1000 | 7 | 98 |

| 5000 | 13 | 125 |

All interactions

How to make all interactions before using glmnet

Algorithm

warm start

- Why is my Rcpp code is much slower than glmnet's?

- Lasso: Algorithms by Patrick Breheny

Gradient descent

Gradient descent for the elastic net Cox-PH model

Cyclic coordinate descent

A deep dive into glmnet: type.gaussian

Quadratic programming

- Optimization and Mathematical Programming

- Optimization with R –Tips and Tricks

- Quadratic Programming with R

- Using quadratic programming to solve L1-norm regularization.

- The examples there pick up specified lambdas in order to compare the solutions from glmnet and quadratic programming and

- The examples still have n>p. If p>n, then it is impossible to compute the norm of the least squares solution. So it is difficult to get the shrinkage size and there is no way to reproduce glmnet solution using quadratic programming packages.

Other

- Ridge coefficient estimates do not match OLS estimates when lambda = 0. Adjust

- thresh - Convergence threshold for coordinate descent. Each inner coordinate-descent loop continues until the maximum change in the objective after any coefficient update is less than thresh times the null deviance. Defaults value is 1E-7.

- pmax - Limit the maximum number of variables ever to be nonzero

- dfmax - Limit the maximum number of variables in the model. Useful for very large nvars, if a partial path is desired.

- Make cv.glmnet select something between lambda.min and lambda.1se

- Difference Between Built-In Cross Validation Functions and Using Caret

Release

- glmnet v4.1: regularized Cox models for (start, stop and stratified data]

- glmnet v4.0: generalizing the family parameter

Discussion

- https://stackoverflow.com/questions/tagged/glmnet

- https://stats.stackexchange.com/questions/tagged/glmnet

Prediction

- Comparison of pathway and gene-level models for cancer prognosis prediction Zheng, 2020

- Cancer prognosis prediction using somatic point mutation and copy number variation data: a comparison of gene-level and pathway-based models Zheng 2020

- CAncer bioMarker Prediction Pipeline (CAMPP)—A standardized framework for the analysis of quantitative biological data Terkelsen, 2020

ROC

How to plot ROC-curve for logistic regression (LASSO) in R?

LASSO vs Least angle regression

- https://web.stanford.edu/~hastie/Papers/LARS/LeastAngle_2002.pdf

- Least Angle Regression, Forward Stagewise and the Lasso

- https://www.quora.com/What-is-Least-Angle-Regression-and-when-should-it-be-used

- A simple explanation of the Lasso and Least Angle Regression

- https://stats.stackexchange.com/questions/4663/least-angle-regression-vs-lasso

- https://cran.r-project.org/web/packages/lars/index.html

caret

- https://cran.r-project.org/web/packages/caret/. In the vignette, the pls method was used (so the pls package needs to be installed in order to run the example).

- The tune parameter tuneLength controls how many parameter values are evaluated,

- The tuneGrid argument is used when specific values are desired.

- caret ebook

- method = 'glmnet' only works for regression and classification problems; see train models by tag

- The caret Package -> Model Training and Tuning

- Predictive Modeling with R and the caret Package (useR! 2013)

- Caret R Package for Applied Predictive Modeling

- coefficients from glmnet and caret are different for the same lambda.

- the exact lambda you specified was not used by caret. the coefficients are interpolated from the coefficients actually calculated.

- when you provide lambda to the glmnet call the model returns exact coefficients for that lambda

library(caret) set.seed(0) train_control = trainControl(method = 'cv', number = 10) grid = 10 ^ seq(5, -2, length = 100) tune.grid = expand.grid(lambda = grid, alpha = 0) ridge.caret = train(x[train, ], y[train], method = 'glmnet', trControl = train_control, tuneGrid = tune.grid) ridge.caret$bestTune ridge_full <- train(x, y, method = 'glmnet', trControl = trainControl(method = 'none'), tuneGrid = expand.grid( lambda = ridge.caret$bestTune$lambda, alpha = 0) ) coef(ridge_full$finalModel, s = ridge.caret$bestTune$lambda)

- trainControl()

- method - "boot", "boot632", "optimism_boot", "boot_all", "cv", "repeatedcv", "LOOCV", "LGOCV", ...

- number - Either the number of folds or number of resampling iterations

- repeats - For repeated k-fold cross-validation only: the number of complete sets of folds to compute

- search - Either "grid" or "random", describing how the tuning parameter grid is determined.

- seeds - an optional set of integers that will be used to set the seed at each resampling iteration. This is useful when the models are run in parallel.

- train(). A long printout comes from the foreach loop when the nominalTrainWorkflow() function is called.

- method - A string specifying which classification or regression model to use. Some examples are knn, lm, nnet, rpart, glmboost, ... Possible values are found using names(getModelInfo()).

- metric - ifelse(is.factor(y_dat), "Accuracy", "RMSE"). By default, possible values are "RMSE" and "Rsquared" for regression and "Accuracy" and "Kappa" for classification. If custom performance metrics are used (via the summaryFunction argument in trainControl, the value of metric should match one of the arguments.

- maximize - ifelse(metric %in% c("RMSE", "logLoss", "MAE"), FALSE, TRUE)

- Return object from train()

- results: matrix. Each row = one parameter (alpha, lambda)

- finalModel$lambda: vector of very long length instead of what we specify. What is this?

- finalModel$lambdaOpt

- finalModel$tuneValue$alpha, finalModel$tuneValue$lambda

- finalModel$a0

- finalModel$beta

-

Regularization: Ridge, Lasso and Elastic Net. Notice the repeatedcv method. If repeatedcv is selected, the performance RMSE is computed by the average of (# CV * # reps) RMSEs for each (alpha, lambda). Then the best tuning parameter (alpha, lambda) is selected with the minimum RMSE in performance. See the releated code at Line 679, Line 744,Line 759 where trControl$selectionFunction()=best().

library(glmnet) # for ridge regression library(dplyr) # for data cleaning data("mtcars") # Center y, X will be standardized in the modelling function y <- mtcars %>% select(mpg) %>% scale(center = TRUE, scale = FALSE) %>% as.matrix() X <- mtcars %>% select(-mpg) %>% as.matrix() dim(X) # [1] 32 10 library(caret) # Set training control train_control <- trainControl(method = "repeatedcv", number = 3, # 3-fold CV, 2 times repeats = 2, search = "grid", # "random" verboseIter = TRUE) # Train the model set.seed(123) # seed for reproducibility elastic_net_model <- train(mpg ~ ., data = cbind(y, X), method = "glmnet", preProcess = c("center", "scale"), tuneLength = 4, # 4 alphas x 4 lambdas trControl = train_control) # Check multiple R-squared y_hat_enet <- predict(elastic_net_model, X) cor(y, y_hat_enet)^2 names(elastic_net_model) # [1] "method" "modelInfo" "modelType" "results" "pred" # [6] "bestTune" "call" "dots" "metric" "control" # [11] "finalModel" "preProcess" "trainingData" "resample" "resampledCM" # [16] "perfNames" "maximize" "yLimits" "times" "levels" # [21] "terms" "coefnames" "xlevels" elastic_net_model$bestTune # alpha and lambda names(elastic_net_model$finalModel) # [1] "a0" "beta" "df" "dim" "lambda" # [6] "dev.ratio" "nulldev" "npasses" "jerr" "offset" # [11] "call" "nobs" "lambdaOpt" "xNames" "problemType" # [16] "tuneValue" "obsLevels" "param" length(elastic_net_model$finalModel$lambda) # [1] 95 length(elastic_net_model$finalModel$lambdaOpt) # [1] 1 dim(elastic_net_model$finalModel$beta) # [1] 10 95 which(elastic_net_model$finalModel$lambda == elastic_net_model$finalModel$lambdaOpt) # integer(0) <=== Weird, why - For the Repeated k-fold Cross Validation. The final model accuracy is taken as the mean from the number of repeats.

- Penalized Regression Essentials: Ridge, Lasso & Elastic Net -> Using caret package. tuneLength was used. Note that the results element gives the details for each candidate of parameter combination.

# Load the data data("Boston", package = "MASS") # Split the data into training and test set set.seed(123) training.samples <- Boston$medv %>% createDataPartition(p = 0.8, list = FALSE) train.data <- Boston[training.samples, ] set.seed(123) # affect CV samples, not affect tuning parameter set cv_5 <- trainControl(method = "cv", number = 5) # number of folds model <- train( medv ~., data = train.data, method = "glmnet", trControl = cv_5, tuneLength = 10 # 10 alphas x 10 lambdas ) model # one row per parameter combination model$results # Best tuning parameter model$bestTune

seeds

Tidymodel and tune

- tidymodels, tune

- https://www.tidymodels.org/

- Tidy Modeling with R

- Caret for Survival Analysis?

- Survival Methods in tidymodels

- A Gentle Introduction to tidymodels

- Handle class imbalance in climbing expedition data with tidymodels

- Parallel processing with tune

- Tutorial on tidymodels for Machine Learning

- Lasso regression using tidymodels and #tidytuesday data for the office

- Tidymodel and glmnet - Jun

- Tidymodels: tidy machine learning in R - Rebecca Barter

- Tidy Model Stacking 2021 John M. Chambers Statistical Software Award.

- Explore art media over time in the #TidyTuesday Tate collection dataset

biglasso

biglasso: Extend Lasso Model Fitting to Big Data in R