ROC: Difference between revisions


= Confusion matrix, Sensitivity/Specificity/Accuracy =

[https://en.wikipedia.org/wiki/Confusion_matrix Wikipedia]. Note that Wikipedia labels the two classes positive/negative, whereas the table below uses 1/0.

{| border="1" style="border-collapse:collapse; text-align:center;"
|-
| || || colspan="2" | Predict ||
|-
| || || 1 || 0 ||
|-
| rowspan="2" | True || 1 || TP || FN || style="color: white;background: red;"|Sens=TP/(TP+FN)=Recall=TPR <br/> FNR=FN/(TP+FN)
|-
| 0 || FP || TN || style="color: white;background: blue;"|Spec=TN/(FP+TN), 1-Spec=FPR
|-
| || || style="color: white;background: green;"|PPV=TP/(TP+FP)=Precision <br/> FDR=FP/(TP+FP) =1-PPV|| NPV=TN/(FN+TN) || N = TP + FP + FN + TN
|}

* <span style="color: red">Sensitivity 敏感度</span> = TP / (TP + FN) = Recall | |||

* <span style="color: blue">Specificity 特異度</span> = TN / (TN + FP) | |||

* Accuracy = (TP + TN) / N | |||

* <span style="color: green">False discovery rate FDR = FP / (TP + FP)</span> | |||

* False negative rate FNR = FN / (TP + FN) | |||

* <span style="color: blue">False positive rate FPR = FP / (FP + TN) = 1 - Spec</span> | |||

* <span style="color: red">True positive rate = TP / (TP + FN) = Sensitivity</span> | |||

* [https://en.wikipedia.org/wiki/Positive_and_negative_predictive_values <span style="color: green">Positive predictive value (PPV)</span>] = TP / # positive calls = TP / (TP + FP) = 1 - FDR = <span style="color: green">Precision</span> | |||

* Negative predictive value (NPV) = TN / # negative calls = TN / (FN + TN) | |||

* Prevalence 盛行率 = (TP + FN) / N. | |||

* Note that PPV & NPV can also be computed from sensitivity, specificity, and '''prevalence''':

** [https://en.wikipedia.org/wiki/Positive_and_negative_predictive_values#cite_note-AltmanBland1994-2 PPV is directly proportional to the prevalence of the disease or condition].

** For example, in the extreme case where the prevalence = 1, PPV is always 1.

::<math> \text{PPV} = \frac{\text{sensitivity} \times \text{prevalence}}{\text{sensitivity} \times \text{prevalence}+(1-\text{specificity}) \times (1-\text{prevalence})} </math>

::<math> \text{NPV} = \frac{\text{specificity} \times (1-\text{prevalence})}{(1-\text{sensitivity}) \times \text{prevalence}+\text{specificity} \times (1-\text{prevalence})} </math>

* [https://bmcbioinformatics.biomedcentral.com/articles/10.1186/s12859-020-03626-y Prediction of heart disease and classifiers’ sensitivity analysis] Almustafa, 2020 | |||

* [https://hutsons-hacks.info/confusiontabler-has-made-it-to-cran ConfusionTableR has made it to CRAN] 21/07/2021. [https://mran.microsoft.com/web/packages/ConfusionTableR/index.html ConfusionTableR] | |||
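The definitions above can be checked numerically. The following base-R sketch computes the metrics from the four cell counts and confirms that the prevalence-based PPV/NPV formulas reproduce the count-based values. The helper name <code>cm_metrics</code> and the counts are illustrative assumptions, not part of any package.

```r
# Hypothetical helper: metrics from the four cells of a 2x2 confusion matrix
cm_metrics <- function(TP, FN, FP, TN) {
  N <- TP + FN + FP + TN
  c(sens = TP / (TP + FN),
    spec = TN / (TN + FP),
    acc  = (TP + TN) / N,
    ppv  = TP / (TP + FP),
    npv  = TN / (TN + FN),
    prev = (TP + FN) / N)
}

# Made-up counts, for illustration only
m <- cm_metrics(TP = 30, FN = 10, FP = 20, TN = 140)

# PPV and NPV recomputed from sensitivity, specificity and prevalence
ppv_bayes <- unname(m["sens"] * m["prev"] /
  (m["sens"] * m["prev"] + (1 - m["spec"]) * (1 - m["prev"])))
npv_bayes <- unname(m["spec"] * (1 - m["prev"]) /
  ((1 - m["sens"]) * m["prev"] + m["spec"] * (1 - m["prev"])))

round(m, 3)
all.equal(ppv_bayes, unname(m["ppv"]))  # the two routes to PPV agree
all.equal(npv_bayes, unname(m["npv"]))  # the two routes to NPV agree
```

Both comparisons hold because the prevalence-based formulas are just Bayes' rule applied to the same table.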

== caret::confusionMatrix == | |||

<ul> | |||

<li>[https://cran.r-project.org/web/packages/caret/vignettes/caret.html Vignette], [https://www.rdocumentation.org/packages/caret/versions/7.0-1/topics/confusionMatrix ?confusionMatrix]. It can produce several statistics, including Precision, Recall, F1, ....

<pre>
library(caret)

# General form: confusionMatrix(pred, truth)
# plsClasses (predicted classes) and testing$Class (observed classes)
# are the objects used in the caret vignette linked above
cm <- confusionMatrix(data = plsClasses, testing$Class)
cm
#> Confusion Matrix and Statistics
#>
#>           Reference
#> Prediction  M  R
#>          M 21  7
#>          R  6 17
#>
#>                Accuracy : 0.745
#>                  95% CI : (0.604, 0.857)
#>     No Information Rate : 0.529
#>     P-Value [Acc > NIR] : 0.00131
#>
#>                   Kappa : 0.487
#>
#>  Mcnemar's Test P-Value : 1.00000
#>
#>             Sensitivity : 0.778
#>             Specificity : 0.708
#>          Pos Pred Value : 0.750
#>          Neg Pred Value : 0.739
#>              Prevalence : 0.529
#>          Detection Rate : 0.412
#>    Detection Prevalence : 0.549
#>       Balanced Accuracy : 0.743
#>
#>        'Positive' Class : M

# Extracting the values; [[ ]] drops the element name so that
# the vector below is not labelled "Accuracy.Accuracy", etc.
accuracy    <- cm$overall[["Accuracy"]]
sensitivity <- cm$byClass[["Sensitivity"]]
specificity <- cm$byClass[["Specificity"]]
ppv         <- cm$byClass[["Pos Pred Value"]]
npv         <- cm$byClass[["Neg Pred Value"]]

metrics_vector <- c(
  Accuracy = accuracy,
  Sensitivity = sensitivity,
  Specificity = specificity,
  PPV = ppv,
  NPV = npv
)

# Round the vector to 3 decimal places
rounded_metrics <- round(metrics_vector, digits = 3)

# Print the rounded named vector
print(rounded_metrics)
</pre>

<li>[https://www.datatechnotes.com/2019/02/accuracy-metrics-in-classification.html Precision, Recall, Specificity, Prevalence, Kappa, F1-score check with R] by using the [https://www.rdocumentation.org/packages/caret/versions/6.0-86/topics/confusionMatrix caret:: confusionMatrix()] function. ''If there are only two factor levels, the first level will be used as the "positive" result.'' | |||

<li>One thing caret::confusionMatrix can't do is ROC/AUC. For this, use [https://cran.r-project.org/web/packages/pROC/index.html pROC]::roc() and its plot method. [https://www.statology.org/auc-in-r/ How to Calculate AUC (Area Under Curve) in R].

</ul> | |||
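The statistics printed by confusionMatrix() in the example above can be reproduced by hand from the 2x2 table, which helps to see which cell plays which role. This is a base-R sketch; it assumes, as in that output, that rows are predictions, columns are the reference, and "M" is the positive class.

```r
# 2x2 table copied from the printed output: rows = Prediction, columns = Reference
tab <- matrix(c(21, 6, 7, 17), nrow = 2,
              dimnames = list(Prediction = c("M", "R"), Reference = c("M", "R")))

TP <- tab["M", "M"]; FP <- tab["M", "R"]
FN <- tab["R", "M"]; TN <- tab["R", "R"]

checked <- round(c(
  Accuracy    = (TP + TN) / sum(tab),
  Sensitivity = TP / (TP + FN),
  Specificity = TN / (TN + FP),
  PPV         = TP / (TP + FP),
  NPV         = TN / (TN + FN),
  BalancedAcc = (TP / (TP + FN) + TN / (TN + FP)) / 2
), 3)
checked
```

The values agree with the Accuracy, Sensitivity, Specificity, Pos Pred Value, Neg Pred Value and Balanced Accuracy lines of the printed output.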

== False positive rate vs false discovery rate ==

[https://stats.stackexchange.com/a/340079 FPR (false positive rate) vs FDR (false discovery rate)] | |||

== PPA, NPA == | |||

<ul> | |||

<li>Cf '''PPV'''/positive predictive value & '''NPV'''/negative predictive value | |||

<li>Positive percent agreement (PPA) and negative percent agreement (NPA) - [https://www.fda.gov/regulatory-information/search-fda-guidance-documents/statistical-guidance-reporting-results-studies-evaluating-diagnostic-tests-guidance-industry-and-fda Statistical Guidance on Reporting Results from Studies Evaluating Diagnostic Tests - Guidance for Industry and FDA Staff] | |||

* The ''percentage of subjects (or samples) that test positive by one test (TA)'' that are found positive by a second test (TB); the two tests are indicated with the ordered subscripts, as <math>PPA_{TA:TB}</math>. The test listed first is treated as the '''“reference” test''' (denominator a+b in the formula given below). | |||

<li>[https://analyse-it.com/blog/2020/4/diagnostic-accuracy-sensitivity-specificity-versus-agreement-ppa-npa-statistics Diagnostic accuracy (sensitivity/specificity) versus agreement (PPA/NPA) statistics] | |||

* Positive agreement is the ''proportion of '''comparative/reference method''' positive results'' in which the '''test method''' result is positive. | |||

* Negative agreement is the proportion of comparative/reference method negative results in which the test method result is negative. | |||

<li>The focus on PPA or NPA depends on the context and the specific objectives of the test evaluation. | |||

* <span style="color: red">PPA is particularly important when the consequence of a false negative is high. For example, in a disease screening context, a false negative (a sick person being incorrectly identified as healthy) could lead to delayed treatment and worse health outcomes. Therefore, a high PPA (few false negatives) is desirable.</span> | |||

* <span style="color: red">NPA is crucial when the cost of a false positive is significant</span>. For instance, in a scenario where a positive test result could lead to an invasive follow-up procedure, a false positive (a healthy person being incorrectly identified as sick) could lead to unnecessary risk and anxiety for the patient. In this case, a high NPA (few false positives) is important. | |||

<li>Paper examples. | |||

* [https://www.frontiersin.org/articles/10.3389/fnagi.2022.1003792/full Primary progressive aphasia and motor neuron disease: A review] | |||

<li>Other measures for evaluating diagnostic tests: Sensitivity/Specificity, Positive Predictive Value (PPV)/Negative Predictive Value (NPV), Overall Test Accuracy, Diagnostic Odds Ratio (DOR), Youden Index (YI), Positive Likelihood Ratio (LR+). See

* [https://www.degruyter.com/document/doi/10.1515/tjb-2020-0337/html Evaluation of binary diagnostic tests accuracy for medical researches] 2020, | |||

* [https://journals.sagepub.com/doi/epdf/10.1177/201010581102000411 Measures of Diagnostic Accuracy: Sensitivity, Specificity, PPV and NPV] 2011 where Fig 1 shows the effect of '''disease prevalence''' on PPV and NPV. | |||

* [https://journals.plos.org/plosone/article?id=10.1371%2Fjournal.pone.0223832 Diagnostic test evaluation methodology: A systematic review of methods employed to evaluate diagnostic tests in the absence of gold standard – An update] 2019. | |||

<li>Assume rows are '''new test''' and columns are '''reference'''. | |||

{| class="wikitable" | |||

|- | |||

| | |||

| reference + | |||

| reference - | |||

|- | |||

| test + | |||

| TP (a) | |||

| FP (c) | |||

|- | |||

| test - | |||

| FN (b) | |||

| TN (d) | |||

|- | |||

| | |||

| x.1 | |||

| x.2 | |||

|} PPA = TP/x.1 = TP/(TP+FN), NPA = TN/x.2 = TN/(FP+TN) | |||

<li>APA ('''Average''' positive agreement) and ANA ('''Average''' negative agreement). See [https://www.accessdata.fda.gov/cdrh_docs/reviews/DEN200001.pdf#page=3 EVALUATION OF AUTOMATIC CLASS III DESIGNATION FOR Cell-Free DNA BCT] and [https://analyse-it.com/docs/user-guide/method-comparison/agreementmeasures Agreement measures for binary and semi-quantitative data]. '''Note, APA and ANA are not standard terms used in diagnostic test evaluation, and their definitions may vary depending on the context'''. | |||

<ul> | |||

<li>Suppose <math>PPA = \frac{a}{a+b}</math>, then <math>APA = \frac{a}{((a+b)+(a+c))/2} = \frac{2x_{11}}{x_{1.}+x_{.1}} = \frac{\mbox{n. of concordant positives}}{\mbox{n. of concordant positives} + \frac{\mbox{n. of discordant calls}}{2}} </math>.

<li>Suppose <math>NPA = \frac{d}{c+d}</math>, then <math>ANA = \frac{d}{((d+b)+(d+c))/2} = \frac{2x_{22}}{x_{2.}+x_{.2}} = \frac{\mbox{n. of concordant negatives}}{\mbox{n. of concordant negatives} + \frac{\mbox{n. of discordant calls}}{2}} </math>.

<li>The overall proportion of agreement is the sum of the diagonal entries divided by the total. | |||

</ul> | |||

<li>The APA and ANA statistics above have the same form as Harrell's C-index. See the formula for Harrell's C-index at [https://statisticaloddsandends.wordpress.com/2019/10/26/what-is-harrells-c-index/ What is Harrell’s C-index?]

</ul> | |||
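The agreement measures above reduce to a few lines of arithmetic. The sketch below uses a hypothetical helper <code>agreement()</code> and made-up counts; the arguments tp, fn, fp, tn correspond to cells a, b, c, d of the table above (rows = new test, columns = reference).

```r
# Hypothetical helper: tp = a, fn = b, fp = c, tn = d in the table above
agreement <- function(tp, fn, fp, tn) {
  c(PPA     = tp / (tp + fn),                 # a / (a + b)
    NPA     = tn / (fp + tn),                 # d / (c + d)
    APA     = 2 * tp / (2 * tp + fn + fp),    # a / (((a + b) + (a + c)) / 2)
    ANA     = 2 * tn / (2 * tn + fn + fp),    # d / (((d + b) + (d + c)) / 2)
    overall = (tp + tn) / (tp + fn + fp + tn))
}

# Made-up counts, for illustration only
res <- agreement(tp = 40, fn = 10, fp = 5, tn = 45)
round(res, 3)
```

Unlike PPA and NPA, APA and ANA are symmetric in the two tests: swapping fn and fp leaves them unchanged, so neither test is treated as the reference.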

== Random classifier == | |||

* For a classifier that guesses at random, predicting the positive class with probability 0.5 independently of the truth, both sensitivity and specificity are expected to be close to 0.5, whatever the class distribution.

* PPV and NPV, however, are not close to 0.5 in general. Because the guesses are independent of the truth, the expected PPV equals the prevalence of the positive class and the expected NPV equals 1 - prevalence; in a finite sample both fluctuate around these values.
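A small simulation illustrates the behaviour of a random classifier. This is a sketch under stated assumptions: the prevalence of 0.1, the sample size, and the coin-flip classifier are all choices made for the example.

```r
set.seed(1)
n <- 1e5
prevalence <- 0.1                     # assumed class distribution

truth <- rbinom(n, 1, prevalence)     # 1 = positive class
pred  <- rbinom(n, 1, 0.5)            # coin flip, independent of the truth

sens <- mean(pred[truth == 1] == 1)
spec <- mean(pred[truth == 0] == 0)
ppv  <- mean(truth[pred == 1] == 1)
npv  <- mean(truth[pred == 0] == 0)

round(c(sens = sens, spec = spec, ppv = ppv, npv = npv), 3)
```

Sensitivity and specificity land near 0.5, while PPV lands near the prevalence and NPV near 1 - prevalence.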

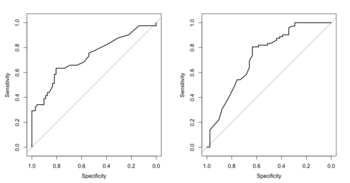

= ROC curve =

* Binary case:

** The AUC can be defined as '''The probability that a randomly selected case will have a higher test result than a randomly selected control'''.

** Plot of sensitivity/specificity (y-axis) vs cutoff points of the biomarker

** The '''Mann-Whitney U test statistic''' (or Wilcoxon or Kruskal-Wallis test statistic) is equivalent to the AUC (Mason, 2002). [https://stats.stackexchange.com/a/207065 Verify in R].

:<syntaxhighlight lang='rsplus'>
library(pROC)
set.seed(123)
group1 <- rnorm(50)
group2 <- rnorm(50, mean=1)

roc_obj <- roc(rep(c(0,1), each=50), c(group1, group2))
# Setting levels: control = 0, case = 1
# Setting direction: controls < cases
auc(roc_obj)
# Area under the curve: 0.8036

wilcox.test(group2, group1)$statistic/(50*50)  # notice the group2, group1 order
#      W
# 0.8036
</syntaxhighlight>

** The p-value of the Mann-Whitney U test can thus safely be used to test whether the AUC differs significantly from 0.5 (AUC of an uninformative test).

* [https://stackoverflow.com/questions/4903092/calculate-auc-in-r Calculate AUC by hand]. AUC is equal to the '''probability that a true positive is scored greater than a true negative.'''

* [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC3068975/ pROC: an open-source package for R and S+ to analyze and compare ROC curves]

** [https://www.rdocumentation.org/packages/pROC/versions/1.18.0/topics/auc pROC::auc()]

* [https://onlinelibrary.wiley.com/doi/pdf/10.1111/1541-0420.00071 Partial AUC Estimation and Regression] Dodd 2003. <math>AUC(t_0,t_1) = \int_{t_0}^{t_1} ROC(t) dt </math> where the interval <math>(t_0, t_1)</math> denotes the false-positive rates of interest. | |||
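The probabilistic definition of the AUC given above can be checked against the area under the empirical ROC curve without any package. The sketch below uses base R only; the simulated scores mirror the Mann-Whitney example, and the variable names are illustrative.

```r
set.seed(123)
controls <- rnorm(50)
cases    <- rnorm(50, mean = 1)

# Pairwise definition: P(case score > control score), ties counted as 1/2
auc_pairs <- mean(outer(cases, controls, ">") + 0.5 * outer(cases, controls, "=="))

# Empirical ROC curve: sweep the cutoff from high to low
scores <- c(controls, cases)
labels <- rep(c(0, 1), each = 50)
thr <- c(Inf, sort(unique(scores), decreasing = TRUE))
tpr <- sapply(thr, function(t) mean(scores[labels == 1] >= t))
fpr <- sapply(thr, function(t) mean(scores[labels == 0] >= t))

# Trapezoidal rule over the (FPR, TPR) points
auc_trap <- sum(diff(fpr) * (head(tpr, -1) + tail(tpr, -1)) / 2)

c(pairwise = auc_pairs, trapezoid = auc_trap)
```

The two numbers coincide, which is why the rescaled Mann-Whitney U statistic and the trapezoidal area are interchangeable ways of computing the AUC.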

== summary ROC ==

* [https://onlinelibrary.wiley.com/doi/10.1002/sim.2103 The partial area under the summary ROC curve] Walter 2005

* [https://www.tandfonline.com/doi/abs/10.1080/02664763.2022.2041565 On summary ROC curve for dichotomous diagnostic studies: an application to meta-analysis of COVID-19] 2022

== Weighted ROC ==

** [https://juliasilge.com/blog/sf-trees-random-tuning/ Tuning random forest hyperparameters with #TidyTuesday trees data]

== Optimal threshold, Youden's index/Youden's J statistic ==

* [https://homepage.stat.uiowa.edu/~rdecook/stat6220/Class_notes/ROC_introduction.pdf#page=25 Max of “sensitivity + specificity”]. See [https://cran.r-project.org/web/packages/Epi/index.html Epi::ROC()] function.

* [https://onlinelibrary.wiley.com/doi/abs/10.1002/sim.9432 On optimal biomarker cutoffs accounting for misclassification costs in diagnostic trilemmas with applications to pancreatic cancer] Bantis, 2022 | |||

* [https://en.wikipedia.org/wiki/Youden%27s_J_statistic Youden's index] | |||

** Youden's index = sensitivity + specificity -1 | |||

** Youden's index ranges from -1 to 1; for a test that performs no worse than chance it lies between 0 and 1, with higher values indicating better overall test performance

** The maximum value of the index may be used as a criterion for selecting the optimum cut-off point when a diagnostic test gives a numeric rather than a dichotomous result. | |||

* [https://cran.r-project.org/web/packages/rocbc/index.html rocbc] package. Provides inferences and comparisons around the AUC, the Youden index, the sensitivity at a given specificity level (and vice versa), the optimal operating point of the ROC curve (in the Youden sense), and the Youden based cutoff. | |||

* [https://onlinelibrary.wiley.com/doi/full/10.1002/bimj.70053?campaign=woletoc Impact of Methodological Assumptions and Covariates on the Cutoff Estimation in ROC Analysis] and [https://github.com/soutikghosal/Covariate-wise-optimal-cutoff R code]. | |||
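The Youden criterion described above can be sketched in base R by scanning every observed value as a candidate cutoff. The simulated biomarker and the rule "call positive when score >= cutoff" are assumptions made for this example; packages such as Epi or rocbc provide proper inference around the estimate.

```r
set.seed(123)
controls <- rnorm(50)
cases    <- rnorm(50, mean = 1)
scores <- c(controls, cases)
labels <- rep(c(0, 1), each = 50)

# Candidate cutoffs: every observed score; positive call when score >= cutoff
cutoffs <- sort(unique(scores))
sens <- sapply(cutoffs, function(t) mean(scores[labels == 1] >= t))
spec <- sapply(cutoffs, function(t) mean(scores[labels == 0] <  t))

youden <- sens + spec - 1
best <- which.max(youden)

c(cutoff = cutoffs[best], sensitivity = sens[best],
  specificity = spec[best], J = youden[best])
```

Maximising sensitivity + specificity and maximising Youden's J select the same cutoff, since the two differ only by the constant 1.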

== ROC Curve AUC for Hypothesis Testing == | |||

* [https://towardsdatascience.com/interpreting-auroc-in-hypothesis-testing-a45f6f757a62 Interpreting AUROC in Hypothesis Testing] | |||

* [https://www.sciencedirect.com/science/article/pii/S1556086415306043 Receiver Operating Characteristic Curve in Diagnostic Test Assessment] 2010 | |||

== Challenges, issues == | |||

[https://bmcmedresmethodol.biomedcentral.com/articles/10.1186/s12874-022-01577-x Methodological conduct of prognostic prediction models developed using machine learning in oncology: a systematic review] 2022. '''class imbalance, data pre-processing, and hyperparameter tuning'''. [https://twitter.com/pauladhiman/status/1512429344316182529?s=20&t=D8wSCw3ET5Sx2C1ZKJxq-g twitter]. | |||

= Survival data =

* ROC curves are useful for comparing the discriminatory capacity of different potential biomarkers.

= Precision recall curve =

** Y-axis: Sensitivity = tp/(tp + fn) = Recall

** X-axis: 1-Specificity = fp/(fp + tn)

* ROC curves are appropriate when the observations are balanced between each class, whereas <span style="color: red">'''PR curves''' are appropriate for imbalanced datasets</span>. When dealing with highly skewed datasets, '''PR curves''' can give a more informative picture of an algorithm’s performance.

* [https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0118432 The Precision-Recall Plot Is More Informative than the ROC Plot When Evaluating Binary Classifiers on Imbalanced Datasets]

== F1-score == | |||

<ul> | |||

<li>[https://en.wikipedia.org/wiki/F-score#Dependence_of_the_F-score_on_class_imbalance F-score]/F1-score. | |||

* F1 = 2 * Precision * Recall / (Precision + Recall) = 2 / (1/Precision + 1/Recall) '''Harmonic mean''' of precision and recall. | |||

* This means that comparison of the F-score across different problems with differing class ratios is problematic. | |||

* Recall = Sensitivity = true positive rate. Precision = PPV. They are from the two directions of the "1" class in the confusion matrix. | |||

* [https://towardsdatascience.com/the-f1-score-bec2bbc38aa6 The F1 score] | |||

* [https://stephenallwright.com/interpret-f1-score/ How to interpret F1 score (simply explained)]. It is a popular metric to use for classification models as <span style="color: red">it provides accurate results for both balanced and imbalanced datasets</span>, and takes into account both the precision and recall ability of the model. '''F1 score tells you the model’s balanced ability to both capture positive cases (recall) and be accurate with the cases it does capture (precision).''' | |||

* [https://www.statology.org/what-is-a-good-f1-score/ What is Considered a “Good” F1 Score?] It gives an example of F1-score where all the predictions are on one class ('''baseline model''') for comparison purpose. | |||

* An example. '''confusionMatrix()''' assumes the rows are predicted and columns are true; that's why I put a transpose on my table object. The 'positive' class seems to be always the 1st row of the 2x2 table. If the levels are not specified, it will assign 'A' to the first row and 'B' to the 2nd row. For this case, we can see accuracy (0.615) is between sensitivity (0.429) and specificity (0.684) and F1-score (0.375) is between precision (0.333) and recall (0.429) . Also F1-score is smaller than sensitivity and specificity. | |||

<pre>
                   predicted
             sensitive  resistant
-------------------------------
true
  sensitive      3          4
  resistant      6         13

library(caret)
xtab <- matrix(c(3,6,4,13), nrow=2)   # rows = true, columns = predicted
confusionMatrix(t(xtab))$byClass      # t() puts predictions in rows
#         Sensitivity          Specificity       Pos Pred Value       Neg Pred Value
#           0.4285714            0.6842105            0.3333333            0.7647059
#           Precision               Recall                   F1           Prevalence
#           0.3333333            0.4285714            0.3750000            0.2692308
#      Detection Rate Detection Prevalence    Balanced Accuracy
#           0.1153846            0.3461538            0.5563910

confusionMatrix(t(xtab))$overall
#      Accuracy          Kappa  AccuracyLower  AccuracyUpper   AccuracyNull
#     0.6153846      0.1034483      0.4057075      0.7977398      0.7307692
# AccuracyPValue  McnemarPValue
#     0.9348215      0.7518296

confusionMatrix(t(xtab))$positive
# [1] "A"
</pre>

<li>[https://stackoverflow.com/a/49467039 Using F1 score metric in KNN through caret package] | |||

<pre>
library(caret)
library(mlbench)
data(Sonar)

f1Summary <- function(data, lev = NULL, model = NULL) {
  # Calculate precision and recall
  precision <- posPredValue(data$pred, data$obs, positive = lev[1])
  recall <- sensitivity(data$pred, data$obs, positive = lev[1])

  # Calculate F1 score
  f1 <- (2 * precision * recall) / (precision + recall)

  # Return F1 score
  c(F1 = f1)
}

# Create a trainControl object that uses the custom summary function
# (named ctrl so that it does not mask the trainControl() function)
ctrl <- trainControl(summaryFunction = f1Summary)

# Use the trainControl object when calling the train() function
model <- train(Class ~ ., data = Sonar, method = "rf",
               trControl = ctrl, metric = "F1")

> model
Random Forest

208 samples
 60 predictor
  2 classes: 'M', 'R'

No pre-processing
Resampling: Bootstrapped (25 reps)
Summary of sample sizes: 208, 208, 208, 208, 208, 208, ...
Resampling results across tuning parameters:

  mtry  F1
   2    0.8416808
  31    0.7986326
  60    0.7820982

F1 was used to select the optimal model using the largest value.
The final value used for the model was mtry = 2.
</pre>

<li>Examples in papers | |||

* [https://www.biorxiv.org/content/10.1101/2023.04.19.537463v1.full.pdf A Comprehensive Benchmark Study on Biomedical Text Generation and Mining with ChatGPT] | |||

</ul> | |||
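The F1 value of 0.375 reported by confusionMatrix() for the sensitive/resistant table above can be reproduced by hand. This base-R sketch takes "sensitive" as the positive class, as in that example.

```r
# Counts from the sensitive/resistant example (positive class = sensitive)
TP <- 3; FN <- 4; FP <- 6; TN <- 13

precision <- TP / (TP + FP)   # = PPV
recall    <- TP / (TP + FN)   # = sensitivity

# Harmonic mean of precision and recall
f1_harmonic <- 2 / (1 / precision + 1 / recall)

# Equivalent count-based form; note that TN does not appear
f1_counts <- 2 * TP / (2 * TP + FP + FN)

c(precision = precision, recall = recall, F1 = f1_harmonic)
```

Because TN never enters the formula, F1 ignores how well the negative class is predicted.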

== PPV/Precision == | |||

The relationship between PPV and sensitivity depends on the '''prevalence''' of the condition being tested for in the population. If the condition is rare, a test with high sensitivity may still have a low PPV because there will be many false positive results. On the other hand, if the condition is common, a test with high sensitivity is more likely to also have a high PPV. | |||

Here we have a diagnostic test for a rare disease that affects 1 in 1000 people and the test has a sensitivity of 100% and a false positive rate of 5%: | |||

<pre>
                 Test|  positive    negative
-----------------------------------------
Disease present      |      1           0
Disease absent       |     50         949
</pre>

The prevalence of the disease is 1/1000. Of the 51 positive results only 1 is a true positive, so PPV = 1/51 ≈ 2% even though the sensitivity is 100%.
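To see how strongly PPV depends on prevalence, the sketch below evaluates the PPV formula for a test like the one in this example over a range of prevalences. The helper name <code>ppv_from_prev</code> is hypothetical, and the false positive rate is taken as exactly 0.05 (the table above has 50/999).

```r
# Hypothetical helper: PPV as a function of prevalence
ppv_from_prev <- function(prev, sens = 1, fpr = 0.05) {
  sens * prev / (sens * prev + fpr * (1 - prev))
}

prev <- c(0.001, 0.01, 0.1, 0.5)
ppv  <- ppv_from_prev(prev)
round(setNames(ppv, prev), 3)
```

With the same sensitivity and false positive rate, PPV climbs from about 2% at a prevalence of 1 in 1000 to above 90% when half of the population has the condition.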

= Incidence, Prevalence =

https://www.health.ny.gov/diseases/chronic/basicstat.htm

= Jaccard index = | |||

* https://en.wikipedia.org/wiki/Jaccard_index | |||

* [https://youtu.be/YotbvhndSf4?t=306 Clear Example of Jaccard Similarity // Visual Explanation of What is the Jaccard Index?] (video). Jaccard similarity = TP / (TP + FP + FN) | |||
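The Jaccard formula above can be checked both from counts and from sets. In this base-R sketch the item indices are made up; the counts reuse the 3/6/4 cells from the F1 example so that the link J = F1 / (2 - F1) can be verified.

```r
TP <- 3; FP <- 6; FN <- 4

# Count-based Jaccard index
jaccard <- TP / (TP + FP + FN)

# Set-based version: predicted positives vs true positives (made-up item ids)
predicted_pos <- 1:9                  # TP + FP = 9 items
true_pos      <- c(1:3, 10:13)        # TP + FN = 7 items
jaccard_sets <- length(intersect(predicted_pos, true_pos)) /
                length(union(predicted_pos, true_pos))

# Relation to the F1 score
f1 <- 2 * TP / (2 * TP + FP + FN)

c(jaccard = jaccard, jaccard_sets = jaccard_sets, from_f1 = f1 / (2 - f1))
```

All three values agree, and like F1 the Jaccard index does not use the true negatives.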

= Calculate area under curve by hand (using trapezoid), relation to concordance measure and the Wilcoxon–Mann–Whitney test =

= Use and Misuse of the Receiver Operating Characteristic Curve in Risk Prediction =

* http://circ.ahajournals.org/content/115/7/928

* [https://bmcmedicine.biomedcentral.com/articles/10.1186/s12916-019-1466-7 Calibration: the Achilles heel of predictive analytics] 2019

= Comparison of two AUCs =

* [https://onlinelibrary.wiley.com/doi/epdf/10.1002/sim.5727 Testing for improvement in prediction model performance] by Pepe et al 2013.

* [https://statcompute.wordpress.com/2018/12/25/statistical-assessments-of-auc/ Statistical Assessments of AUC]. This is using the '''pROC::roc.test''' function. | |||

* [https://cran.r-project.org/web/packages/prioritylasso/vignettes/prioritylasso_vignette.html prioritylasso]. It is using roc(), auc(), roc.test(), plot.roc() from the '''pROC''' package. The calculation based on the training data is biased so we need to report the one based on test data. | |||

== DeLong test for comparing two ROC curves == | |||

* [https://glassboxmedicine.com/2020/02/04/comparing-aucs-of-machine-learning-models-with-delongs-test/ Comparing AUCs of Machine Learning Models with DeLong’s Test] | |||

* [https://pubmed.ncbi.nlm.nih.gov/22415937/ Misuse of DeLong test to compare AUCs for nested models] | |||

* [https://statisticaloddsandends.wordpress.com/2020/06/07/what-is-the-delong-test-for-comparing-aucs/ What is the DeLong test for comparing AUCs?] | |||

* [https://www.jianshu.com/p/9b0556134eec R语言,ROC曲线,deLong test]. [https://cran.r-project.org/web/packages/pROC/ pROC]::roc.test() was used. | |||

* [https://www.rdocumentation.org/packages/Daim/versions/1.1.0/topics/deLong.test Daim::deLong.test()] | |||

= Some R packages =

** [https://cran.r-project.org/web/packages/caret/ caret]

* [https://github.com/dariyasydykova/open_projects/tree/master/ROC_animation ROC animation]

== pROC == | |||

<ul> | |||

<li>https://cran.r-project.org/web/packages/pROC/index.html | |||

* response: a factor, numeric or character vector of responses (true class), typically encoded with 0 (controls) and 1 (cases). | |||

* Pay attention to the message printed on screen after calling roc(). It will show which one is control and which one is case. | |||

<li>[https://web.expasy.org/pROC/ pROC: display and analyze ROC curves in R and S+] | |||

<li>[https://medium.com/swlh/roc-curve-and-auc-detailed-understanding-and-r-proc-package-86d1430a3191 ROC Curve and AUC in Machine learning and R pROC Package]. Pay attention to the '''legacy.axes''' parameter. | |||

<pre>
library(pROC)
data(aSAH)
str(aSAH[,c('outcome', "s100b")])
# 'data.frame':	113 obs. of  2 variables:
#  $ outcome: Factor w/ 2 levels "Good","Poor": 1 1 1 1 2 2 1 2 1 1 ...
#  $ s100b  : num  0.13 0.14 0.1 0.04 0.13 0.1 0.47 0.16 0.18 0.1 ...

roc.s100b <- roc(aSAH$outcome, aSAH$s100b)
roc(aSAH$outcome, aSAH$s100b,
    plot=TRUE,
    auc=TRUE,  # already the default
    col="green",
    lwd =4,
    legacy.axes=TRUE,
    main="ROC Curves")
# Data: aSAH$s100b in 72 controls (aSAH$outcome Good) < 41 cases (aSAH$outcome Poor).
# Area under the curve: 0.7314

auc(roc.s100b)
# Area under the curve: 0.7314

auc(aSAH$outcome, aSAH$s100b)
# Area under the curve: 0.7314
</pre>

Note: in pROC::roc() or auc(), the '''true response''' is the 1st argument and the predictor is the 2nd, while in [https://rdrr.io/cran/caret/man/confusionMatrix.html caret::confusionMatrix()] the predictions come 1st and the '''truth/reference''' is the 2nd argument.

If we flip the outcome labels, the AUC is unchanged, because roc() detects the direction of the comparison automatically (note the switch from < to > in the output below).

<pre>
aSAH$outcome2 <- factor(ifelse(aSAH$outcome == "Good", "Poor", "Good"),
                        levels=c("Good", "Poor"))
roc(aSAH$outcome2, aSAH$s100b)
# Data: aSAH$s100b in 41 controls (aSAH$outcome2 Good) > 72 cases (aSAH$outcome2 Poor).
# Area under the curve: 0.7314
</pre>

[[File:Roc asah.png|350px]] | |||

</li> | |||

<li>Plot multiple ROC curves in one plot. | |||

<pre>
# Three ROC objects on the aSAH data (library(pROC); data(aSAH))
roc1 <- roc(aSAH$outcome, aSAH$s100b)
roc2 <- roc(aSAH$outcome, aSAH$wfns)
roc3 <- roc(aSAH$outcome, aSAH$ndka)
plot(roc1)
lines(roc2, col="red")
lines(roc3, col="blue")
</pre>

Another solution is to use [https://rdrr.io/cran/pROC/man/ggroc.html pROC::ggroc()]. The legend is taken care of automatically.

<pre>
rocobj <- roc(aSAH$outcome, aSAH$s100b)
rocobj2 <- roc(aSAH$outcome, aSAH$wfns)
g2 <- ggroc(list(s100b=rocobj,
                 wfns=rocobj2,
                 ndka=roc(aSAH$outcome, aSAH$ndka))) # 3 curves
g2  # print the plot
</pre>

<li>Plot a panel of ROC plots by using facet_wrap(). | |||

* [https://stackoverflow.com/a/69394230 How to add AUC to a multiple ROC graph with pROC's ggroc]. ggroc() is part of pROC package. | |||

* See [https://stackoverflow.com/a/11889798 Annotating text on individual facet in ggplot2] | |||

* See [[Ggplot2#facet_wrap_and_facet_grid_to_create_a_panel_of_plots|facet_wrap]] on how to change the labels in each plots. | |||

</li> | |||

</ul> | |||

== ROCR == | |||

* https://cran.r-project.org/web/packages/ROCR/, | |||

** [https://cran.r-project.org/web/packages/ROCR/vignettes/ROCR.html Vignette]. The vignette shows the package has a way to take the output from multiple prediction (eg 10-fold CV) and create a plot with 10 curves or 1 curve by '''averaging multiple curves'''. | |||

* [https://www.r-bloggers.com/2014/12/a-small-introduction-to-the-rocr-package/ A small introduction to the ROCR package] | |||

* [https://financetrain.com/measure-model-performance-in-r-using-rocr-package Measure Model Performance in R Using ROCR Package] | |||

* [https://www.r-bloggers.com/2021/02/roc-for-decision-trees-where-did-the-data-come-from-2/ ROC for Decision Trees – where did the data come from?]. NB: 1) Change the double quote sign when we do copy-and-paste, 2) the result is biased since it does not use CV or a test data. The right way is to use a newdata in '''predict.rpart()''' function. When the newdata is omitted, fitted values are used. | |||

= Cross-validation ROC =

<ul> | |||

<li>[https://bookdown.org/content/4818/cross-validation.html ROC cross-validation] caret::train(, metric = "ROC") | |||

<li>[https://stackoverflow.com/a/20825828 Feature selection + cross-validation, but how to make ROC-curves in R]. Small samples within the cross-validation may lead to '''underestimated''' AUC as the ROC curve with all data will tend to be smoother and less underestimated by the trapezoidal rule. | |||

{{Pre}}
library(pROC)
data(aSAH)
k <- 10
n <- dim(aSAH)[1]
set.seed(1)
indices <- sample(rep(1:k, ceiling(n/k))[1:n])
all.response <- all.predictor <- aucs <- c()
for (i in 1:k) {
  test = aSAH[indices==i,]
  learn = aSAH[indices!=i,]
  model <- glm(as.numeric(outcome)-1 ~ s100b + ndka + as.numeric(wfns), data = learn, family=binomial(link = "logit"))
  model.pred <- predict(model, newdata=test)
  aucs <- c(aucs, roc(test$outcome, model.pred)$auc)
  all.response <- c(all.response, test$outcome)
  all.predictor <- c(all.predictor, model.pred)
}

roc(all.response, all.predictor)
# Setting levels: control = 1, case = 2
# Setting direction: controls < cases
#
# Call:
# roc.default(response = all.response, predictor = all.predictor)
#
# Data: all.predictor in 72 controls (all.response 1) < 41 cases (all.response 2).
# Area under the curve: 0.8279

mean(aucs)
# [1] 0.8484921
</pre>

<li>[https://stackoverflow.com/a/31141059 ROC curve from training data in caret]

<li>[https://stackoverflow.com/a/37261201 Plot ROC curve from Cross-Validation (training) data in R]

<li>[https://datascience.stackexchange.com/q/14394 How to represent ROC curve when using Cross-Validation]. The standard approach is to compute the AUC per fold; the mean AUC is then the performance estimate, reported as mean +/- sd(AUC)/sqrt(k) (the answer uses k = 5 folds). The mean of the per-fold AUCs should be close to the grand AUC obtained by pooling all the out-of-fold probabilities.

<li>[https://intobioinformatics.wordpress.com/2019/11/26/how-to-easily-make-a-roc-curve-in-r/ How to easily make a ROC curve in R]

<li>[https://stats.stackexchange.com/a/386341 Appropriate way to get Cross Validated AUC]

<li>[https://cran.r-project.org/web/packages/cvAUC/index.html cvAUC] (Cross-validated Area Under the ROC Curve) package as linked from [https://rviews.rstudio.com/2019/03/01/some-r-packages-for-roc-curves/ Some R Packages for ROC Curves]

<li>[https://stats.stackexchange.com/a/12428 How do you generate ROC curves for '''leave-one-out''' cross validation?] If the classifier outputs probabilities, then combining all the test point outputs for a single ROC curve is appropriate. If not, then scale the output of the classifier in a manner that would make it directly comparable across classifiers.

<li>[https://bookdown.org/content/68ffa8db-a8cf-468c-94cd-e0ff75ad9f33/cross-validation.html ROC cross-validation] using the caret package (from U. of Sydney).

</ul>
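The two summaries discussed in the links (mean of per-fold AUCs with its standard error, and the AUC of the pooled out-of-fold predictions) can be sketched in base R. This is only an illustration on simulated data: the helper <code>auc_pairwise()</code>, the data-generating model and the choice of k = 5 are assumptions made for the example, not taken from any of the linked posts.

```r
# Pairwise (Mann-Whitney) AUC: P(score of a case > score of a control), ties count 1/2
auc_pairwise <- function(score, label) {
  pos <- score[label == 1]
  neg <- score[label == 0]
  mean(outer(pos, neg, ">") + 0.5 * outer(pos, neg, "=="))
}

set.seed(1)
n <- 200
k <- 5                                    # number of folds (assumed)
x <- rnorm(n)
y <- rbinom(n, 1, plogis(1.5 * x))        # simulated binary outcome
dat <- data.frame(x = x, y = y)
fold <- sample(rep(1:k, length.out = n))  # random fold assignment

aucs <- numeric(k)
oof <- numeric(n)                         # out-of-fold linear predictors
for (i in 1:k) {
  fit <- glm(y ~ x, data = dat[fold != i, ], family = binomial)
  oof[fold == i] <- predict(fit, newdata = dat[fold == i, ])
  aucs[i] <- auc_pairwise(oof[fold == i], dat$y[fold == i])
}

cv_mean <- mean(aucs)                     # mean of the per-fold AUCs
cv_se   <- sd(aucs) / sqrt(k)             # standard error across folds
pooled  <- auc_pairwise(oof, dat$y)       # AUC of pooled out-of-fold predictions
c(mean = cv_mean, se = cv_se, pooled = pooled)
```

With small folds the per-fold AUCs can be noisy, which is one reason the pooled and averaged estimates may differ.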

= mean ROC curve =

[https://stackoverflow.com/a/66001395 ROC with cross-validation for linear regression in R]

= Assess risk of bias =

[https://www.acpjournals.org/doi/10.7326/M18-1377 PROBAST: A Tool to Assess Risk of Bias and Applicability of Prediction Model Studies: Explanation and Elaboration] 2019. http://www.probast.org/

= Confidence interval of AUC =

[http://theautomatic.net/2019/08/20/how-to-get-an-auc-confidence-interval/ How to get an AUC confidence interval]. The [https://cran.r-project.org/web/packages/pROC/index.html pROC] package was used.

= AUC can be a misleading measure of performance =


[https://academic.oup.com/bib/article/13/1/83/218392 Caveats and pitfalls of ROC analysis in clinical microarray research (and how to avoid them)] Berrar 2011

= Limitation in clinical data =

* [https://www.sciencedirect.com/science/article/abs/pii/S0895435618310047 ROC curves for clinical prediction models part 1. ROC plots showed no added value above the AUC when evaluating the performance of clinical prediction models] Verbakel 2020

= Sample size =

* [https://twitter.com/GSCollins/status/1563916550121426951 Sample size MATTERS - don't ignore it].

** [https://onlinelibrary.wiley.com/doi/10.1002/sim.7992 Minimum sample size for '''developing''' a multivariable prediction model: PART II - binary and time-to-event outcomes] 2018,

** [https://onlinelibrary.wiley.com/doi/10.1002/sim.9025 Minimum sample size for '''external validation''' of a clinical prediction model with a binary outcome] 2021

* [https://onlinelibrary.wiley.com/doi/full/10.1002/sim.9412 Sample size methods for evaluation of predictive biomarkers] 2022

= Threshold =

== Myths about risk thresholds for prediction models ==

[https://bmcmedicine.biomedcentral.com/articles/10.1186/s12916-019-1425-3 Three myths about risk thresholds for prediction models] 2019

== Picking a threshold based on model performance/utility ==

[https://win-vector.com/2020/10/05/squeezing-the-most-utility-from-your-models/ Squeezing the Most Utility from Your Models] 2020

= Why does my ROC curve look like a V =

https://stackoverflow.com/a/42917384

= Unbalanced classes =

* [https://machinelearningmastery.com/tactics-to-combat-imbalanced-classes-in-your-machine-learning-dataset/ 8 Tactics to Combat Imbalanced Classes in Your Machine Learning Dataset]

** [https://machinelearningmastery.com/cross-validation-for-imbalanced-classification/ How to Fix k-Fold Cross-Validation for Imbalanced Classification]. It shows how to split samples in CV by using '''stratified k-fold cross-validation'''.

* ROC is especially useful for unbalanced data where the 0.5 threshold may not be appropriate.

* [https://www.kaggle.com/code/lct14558/imbalanced-data-why-you-should-not-use-roc-curve/ Imbalanced data & why you should NOT use ROC curve]

* [https://stats.stackexchange.com/questions/260164/auc-and-class-imbalance-in-training-test-dataset AUC and class imbalance in training/test dataset]

* [[ROC#Confusion_matrix.2C_Sensitivity.2FSpecificity.2FAccuracy|Use Precision/PPV to replace FDR]]

** [https://stackoverflow.com/a/33297162 Good ROC curve but poor precision-recall curve]


* [https://twitter.com/joshuastarmer/status/1432753482948300801 SMOTE]

* [https://www.tandfonline.com/doi/abs/10.1080/01621459.2021.2005609?journalCode=uasa20 Classification Trees for Imbalanced Data: Surface-to-Volume Regularization] Zhu, JASA 2021

* [https://academic.oup.com/jamia/article/29/9/1525/6605096 The harm of class imbalance corrections for risk prediction models: illustration and simulation using logistic regression] 2022

* [https://datasciencetut.com/how-to-handle-imbalanced-data/ How to handle Imbalanced Data?]

== Weights ==

* [https://en.wikipedia.org/wiki/Weighted_least_squares Weighted least squares]

* [https://stats.stackexchange.com/a/123051 Is an WLS estimator unbiased, when wrong weights are used?]

* [https://www.statology.org/weighted-least-squares-in-r How to Perform Weighted Least Squares Regression in R]

* [https://stats.stackexchange.com/questions/246085/how-to-determine-weights-for-wls-regression-in-r? How to determine weights for WLS regression in R?]

* [https://towardsdatascience.com/weighted-logistic-regression-for-imbalanced-dataset-9a5cd88e68b Weighted Logistic Regression for Imbalanced Dataset]

== Metric ==

* [https://machinelearningmastery.com/tour-of-evaluation-metrics-for-imbalanced-classification/ Tour of Evaluation Metrics for Imbalanced Classification]. More strategies are available.

* [https://en.wikipedia.org/wiki/F-score F-score]

** [https://yardstick.tidymodels.org/reference/f_meas.html tidymodels::f_meas()], [https://towardsdatascience.com/modelling-with-tidymodels-and-parsnip-bae2c01c131c Modelling with Tidymodels and Parsnip], [https://medium.com/the-researchers-guide/modelling-binary-logistic-regression-using-tidymodels-library-in-r-part-1-c1bdce0ac055 Modelling Binary Logistic Regression using Tidymodels Library in R]

** [https://towardsdatascience.com/caret-vs-tidymodels-create-complete-reusable-machine-learning-workflows-5c50a7befd2d caret::train(,metric)] from ''Caret vs. tidymodels — create reusable machine learning workflows''

* [https://cran.r-project.org/web/packages/MLmetrics/ MLmetrics]: Machine Learning Evaluation Metrics

* [https://stats.stackexchange.com/a/367911 Classification/evaluation metrics for highly imbalanced data]

* [https://towardsdatascience.com/what-metrics-should-we-use-on-imbalanced-data-set-precision-recall-roc-e2e79252aeba What metrics should be used for evaluating a model on an imbalanced data set? (precision + recall or ROC=TPR+FPR)]

* [https://finnstats.com/index.php/2022/09/06/assess-performance-of-the-classification-model/ Assess Performance of the Classification Model]: Matthews correlation coefficient (MCC)

= AI models =

[https://arxiv.org/abs/2412.10288 Performance evaluation of predictive AI models to support medical decisions: Overview and guidance]

= Class comparison problem =

* [https://bioconductor.org/packages/release/bioc/html/compcodeR.html compcodeR]: RNAseq data simulation, differential expression analysis and performance comparison of differential expression methods

* [https://academic.oup.com/bioinformatics/article/31/17/2778/183245 Polyester]: simulating RNA-seq datasets with differential transcript expression, [https://github.com/leekgroup/polyester_code github], [https://htmlpreview.github.io/?https://github.com/leekgroup/polyester_code/blob/master/polyester_manuscript.html HTML]

* [https://journals.plos.org/plosone/article?id=10.1371/journal.pone.0232271 Benchmarking RNA-seq differential expression analysis methods using spike-in and simulation data] Baik 2020

= Reporting =

* [https://www.acpjournals.org/doi/10.7326/M14-0697 Transparent Reporting of a multivariable prediction model for Individual Prognosis Or Diagnosis (TRIPOD): The TRIPOD Statement] 2015

* [https://academic.oup.com/jamia/advance-article/doi/10.1093/jamia/ocac002/6511611 Trends in the conduct and reporting of clinical prediction model development and validation: a systematic review] Yang 2022

* [https://jamanetwork.com/journals/jamasurgery/article-abstract/2799008 Appraising the Quality of Development and Reporting in Surgical Prediction Models] 2022

= Applications =

* [https://www.nature.com/articles/s41598-022-12199-0 Development and validation of an RNA-seq-based transcriptomic risk score for asthma] 2022

= Lessons =

* Unbalanced data: '''kNN''' or '''nearest centroid''' is better than the traditional methods

* Small sample size and large number of predictors: '''t-test''' can select predictors while '''lasso''' cannot

Latest revision as of 14:19, 13 August 2025

Confusion matrix, Sensitivity/Specificity/Accuracy

Wikipedia. Note that Wikipedia uses positive/negative to denote the two classes.

| | | Predict: 1 | Predict: 0 | |
| True | 1 | TP | FN | Sens = TP/(TP+FN) = Recall = TPR; FNR = FN/(TP+FN) |
| True | 0 | FP | TN | Spec = TN/(FP+TN); 1-Spec = FPR |
| | | PPV = TP/(TP+FP) = Precision; FDR = FP/(TP+FP) = 1-PPV | NPV = TN/(FN+TN) | N = TP + FP + FN + TN |

- Sensitivity 敏感度 = TP / (TP + FN) = Recall

- Specificity 特異度 = TN / (TN + FP)

- Accuracy = (TP + TN) / N

- False discovery rate FDR = FP / (TP + FP)

- False negative rate FNR = FN / (TP + FN)

- False positive rate FPR = FP / (FP + TN) = 1 - Spec

- True positive rate = TP / (TP + FN) = Sensitivity

- Positive predictive value (PPV) = TP / # positive calls = TP / (TP + FP) = 1 - FDR = Precision

- Negative predictive value (NPV) = TN / # negative calls = TN / (FN + TN)

- Prevalence 盛行率 = (TP + FN) / N.

- Note that PPV & NPV can also be computed from sensitivity, specificity, and prevalence:

- PPV is directly proportional to the prevalence of the disease or condition..

- For example, in the extreme case if the prevalence =1, then PPV is always 1.

- [math]\displaystyle{ \text{PPV} = \frac{\text{sensitivity} \times \text{prevalence}}{\text{sensitivity} \times \text{prevalence}+(1-\text{specificity}) \times (1-\text{prevalence})} }[/math]

- [math]\displaystyle{ \text{NPV} = \frac{\text{specificity} \times (1-\text{prevalence})}{(1-\text{sensitivity}) \times \text{prevalence}+\text{specificity} \times (1-\text{prevalence})} }[/math]

- Prediction of heart disease and classifiers’ sensitivity analysis Almustafa, 2020

- ConfusionTableR has made it to CRAN 21/07/2021. ConfusionTableR

caret::confusionMatrix

- Vignette, ?confusionMatrix. It can produce several statistics, including Precision, Recall, F1, ....

confusionMatrix(pred, truth)
cm <- confusionMatrix(data = plsClasses, testing$Class)
#> Confusion Matrix and Statistics
#>
#>           Reference
#> Prediction  M  R
#>          M 21  7
#>          R  6 17
#>
#>                Accuracy : 0.745
#>                  95% CI : (0.604, 0.857)
#>     No Information Rate : 0.529
#>     P-Value [Acc > NIR] : 0.00131
#>
#>                   Kappa : 0.487
#>
#>  Mcnemar's Test P-Value : 1.00000
#>
#>             Sensitivity : 0.778
#>             Specificity : 0.708
#>          Pos Pred Value : 0.750
#>          Neg Pred Value : 0.739
#>              Prevalence : 0.529
#>          Detection Rate : 0.412
#>    Detection Prevalence : 0.549
#>       Balanced Accuracy : 0.743
#>
#>        'Positive' Class : M

# Extracting the values
accuracy <- cm$overall["Accuracy"]
sensitivity <- cm$byClass["Sensitivity"]
specificity <- cm$byClass["Specificity"]
ppv <- cm$byClass["Pos Pred Value"]
npv <- cm$byClass["Neg Pred Value"]
metrics_vector <- c(
  Accuracy = accuracy,
  Sensitivity = sensitivity,
  Specificity = specificity,
  PPV = ppv,
  NPV = npv
)
# Round the vector to 3 decimal places
rounded_metrics <- round(metrics_vector, digits = 3)
# Print the rounded named vector
print(rounded_metrics)

- Precision, Recall, Specificity, Prevalence, Kappa, F1-score check with R by using the caret::confusionMatrix() function. If there are only two factor levels, the first level will be used as the "positive" result.

- One thing caret::confusionMatrix can't do is ROC/AUC. For this, use pROC::roc() and the plot function. How to Calculate AUC (Area Under Curve) in R.

False positive rates vs false discovery rates

FPR (false positive rate) vs FDR (false discovery rate)

PPA, NPA

- Cf PPV/positive predictive value & NPV/negative predictive value

- Positive percent agreement (PPA) and negative percent agreement (NPA) - Statistical Guidance on Reporting Results from Studies Evaluating Diagnostic Tests - Guidance for Industry and FDA Staff

- The percentage of subjects (or samples) that test positive by one test (TA) that are found positive by a second test (TB); the two tests are indicated with the ordered subscripts, as [math]\displaystyle{ PPA_{TA:TB} }[/math]. The test listed first is treated as the “reference” test (denominator a+b in the formula given below).

- Diagnostic accuracy (sensitivity/specificity) versus agreement (PPA/NPA) statistics

- Positive agreement is the proportion of comparative/reference method positive results in which the test method result is positive.

- Negative agreement is the proportion of comparative/reference method negative results in which the test method result is negative.

- The focus on PPA or NPA depends on the context and the specific objectives of the test evaluation.

- PPA is particularly important when the consequence of a false negative is high. For example, in a disease screening context, a false negative (a sick person being incorrectly identified as healthy) could lead to delayed treatment and worse health outcomes. Therefore, a high PPA (few false negatives) is desirable.

- NPA is crucial when the cost of a false positive is significant. For instance, in a scenario where a positive test result could lead to an invasive follow-up procedure, a false positive (a healthy person being incorrectly identified as sick) could lead to unnecessary risk and anxiety for the patient. In this case, a high NPA (few false positives) is important.

- Paper examples.

- Other measures for evaluating diagnostic tests: Sensitivity/Specificity, Positive Predictive Value (PPV)/Negative Predictive Value (NPV), Overall Test Accuracy, Diagnostic Odds Ratio (DOR), Youden Index (YI), Positive Likelihood Ratio (LR+). See

- Evaluation of binary diagnostic tests accuracy for medical researches 2020,

- Measures of Diagnostic Accuracy: Sensitivity, Specificity, PPV and NPV 2011 where Fig 1 shows the effect of disease prevalence on PPV and NPV.

- Diagnostic test evaluation methodology: A systematic review of methods employed to evaluate diagnostic tests in the absence of gold standard – An update 2019.

- Assume rows are new test and columns are reference.

PPA = TP/x.1 = TP/(TP+FN), NPA = TN/x.2 = TN/(FP+TN)

| | reference + | reference - |
| test + | TP (a) | FP (c) |
| test - | FN (b) | TN (d) |
| | x.1 | x.2 |

- APA (Average positive agreement) and ANA (Average negative agreement). See EVALUATION OF AUTOMATIC CLASS III DESIGNATION FOR Cell-Free DNA BCT and Agreement measures for binary and semi-quantitative data. Note, APA and ANA are not standard terms used in diagnostic test evaluation, and their definitions may vary depending on the context.

- Suppose [math]\displaystyle{ PPA = \frac{a}{a+b} }[/math], then [math]\displaystyle{ APA = \frac{a}{((a+b)+(a+c))/2} = \frac{2x_{11}}{x_{1.}+x_{.1}} = \frac{\mbox{n. of concordant positives}}{\mbox{n. of concordant positives} + \frac{\mbox{n. of discordant calls}}{2}} }[/math].

- Suppose [math]\displaystyle{ NPA = \frac{d}{c+d} }[/math], then [math]\displaystyle{ ANA = \frac{d}{((d+b)+(d+c))/2} = \frac{2x_{22}}{x_{2.}+x_{.2}} = \frac{\mbox{n. of concordant negatives}}{\mbox{n. of concordant negatives} + \frac{\mbox{n. of discordant calls}}{2}} }[/math].

- The overall proportion of agreement is the sum of the diagonal entries divided by the total.

- The APA and ANA statistics above have the same form as Harrell's C-index. See Harrell's C-index formula at What is Harrell’s C-index?
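The agreement formulas in this section can be checked numerically. The sketch below uses a made-up 2x2 table (the counts are hypothetical, chosen only for illustration) laid out as in the text, with rows for the new test and columns for the reference; the variable is named <code>c_</code> only to avoid masking R's <code>c()</code>.

```r
# Hypothetical 2x2 table: rows = new test, columns = reference
tab <- matrix(c(40,  5,    # test +: a (TP), c (FP)
                10, 45),   # test -: b (FN), d (TN)
              nrow = 2, byrow = TRUE,
              dimnames = list(test = c("+", "-"), reference = c("+", "-")))
a  <- tab["+", "+"]; c_ <- tab["+", "-"]
b  <- tab["-", "+"]; d  <- tab["-", "-"]

ppa <- a / (a + b)                   # positive percent agreement, 40/50
npa <- d / (c_ + d)                  # negative percent agreement, 45/50
apa <- 2 * a / (2 * a + b + c_)      # average positive agreement, 80/95
ana <- 2 * d / (2 * d + b + c_)      # average negative agreement, 90/105
overall <- (a + d) / sum(tab)        # overall proportion of agreement, 85/100

round(c(PPA = ppa, NPA = npa, APA = apa, ANA = ana, overall = overall), 3)
```

Unlike PPA and NPA, APA and ANA are symmetric in the two tests, so they do not require choosing one of them as the reference.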

Random classifier

- Sensitivity and specificity should be close to 0.5 for a random classifier assuming a balanced class distribution in a binary classification problem.

- However, PPV and NPV are not necessarily close to 0.5 for a random classifier. Because random guesses are independent of the true class, the PPV is expected to be close to the prevalence of the positive class (and the NPV close to 1 - prevalence), varying from run to run with the randomness of the guesses.
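A small simulation illustrates the point. It is only a sketch: the sample size, the 10% prevalence and the coin-flip classifier are assumptions chosen for the example.

```r
set.seed(42)
n <- 1e5
prevalence <- 0.1                     # assumed share of the positive class
truth <- rbinom(n, 1, prevalence)
pred  <- rbinom(n, 1, 0.5)            # coin-flip predictions, independent of truth

tp <- sum(pred == 1 & truth == 1); fn <- sum(pred == 0 & truth == 1)
fp <- sum(pred == 1 & truth == 0); tn <- sum(pred == 0 & truth == 0)

sens <- tp / (tp + fn)
spec <- tn / (tn + fp)
ppv  <- tp / (tp + fp)
npv  <- tn / (tn + fn)
round(c(sensitivity = sens, specificity = spec, PPV = ppv, NPV = npv), 3)
```

Sensitivity and specificity land near 0.5, while PPV and NPV land near the prevalence and its complement.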

ROC curve

- Binary case:

- Y = true positive rate = sensitivity,

- X = false positive rate = 1-specificity = 假陽性率

- Area under the curve AUC from the wikipedia: the probability that a classifier will rank a randomly chosen positive instance higher than a randomly chosen negative one (assuming 'positive' ranks higher than 'negative').

- [math]\displaystyle{ A = \int_{\infty}^{-\infty} \mbox{TPR}(T) \mbox{FPR}'(T) \, dT = \int_{-\infty}^{\infty} \int_{-\infty}^{\infty} I(T'\gt T)f_1(T') f_0(T) \, dT' \, dT = P(X_1 \gt X_0) }[/math]

- Interpretation of the AUC. A small toy example (n=12=4+8) was used to calculate the exact probability [math]\displaystyle{ P(X_1 \gt X_0) }[/math] (4*8=32 all combinations).

- It is a discrimination measure which tells us how well we can classify patients in two groups: those with and those without the outcome of interest.

- Since the measure is based on ranks, it is not sensitive to systematic errors in the calibration of the quantitative tests.

- The AUC can be defined as the probability that a randomly selected case will have a higher test result than a randomly selected control.

- Plot of sensitivity/specificity (y-axis) vs cutoff points of the biomarker

- The Mann-Whitney U test statistic (or Wilcoxon or Kruskal-Wallis test statistic) is equivalent to the AUC (Mason, 2002). Verify in R.

library(pROC)
set.seed(123)
group1 <- rnorm(50)
group2 <- rnorm(50, mean=1)
roc_obj <- roc(rep(c(0,1), each=50), c(group1, group2))
# Setting levels: control = 0, case = 1
# Setting direction: controls < cases
auc(roc_obj)
# Area under the curve: 0.8036
wilcox.test(group2, group1)$statistic/(50*50) # notice the group1,group2 order
#      W
# 0.8036

- The p-value of the Mann-Whitney U test can thus safely be used to test whether the AUC differs significantly from 0.5 (AUC of an uninformative test).

- Calculate AUC by hand. AUC is equal to the probability that a true positive is scored greater than a true negative.

- See the uROC() function in <functions.R> from the supplementary material of the paper (access required) Bivariate Marker Measurements and ROC Analysis Wang 2012. Let [math]\displaystyle{ n_1 }[/math] be the number of observations from X1 and [math]\displaystyle{ n_0 }[/math] be the number of observations from X0. X1 and X0 are the predicted values for data from groups 1 and 0. [math]\displaystyle{ TP_i=Prob(X_1\gt X_{0i})=\sum_j (X_{1j} \gt X_{0i})/n_1, ~ FP_i=Prob(X_0\gt X_{0i}) = \sum_j (X_{0j} \gt X_{0i}) / n_0 }[/math]. We can draw a scatter plot or smooth.spline() of TP (y-axis) vs FP (x-axis) for the ROC curve.

uROC <- function(marker, status) ### ROC function for univariate marker ###
{
  x <- marker
  bad <- is.na(status) | is.na(x)
  status <- status[!bad]
  x <- x[!bad]
  if (sum(bad) > 0)
    cat(paste("\n", sum(bad), "records with missing values dropped. \n"))
  no_case <- sum(status==1)
  no_control <- sum(status==0)
  TP <- rep(0, no_control)
  FP <- rep(0, no_control)
  for (i in 1: no_control){
    TP[i] <- sum(x[status==1]>x[status==0][i])/no_case
    FP[i] <- sum(x[status==0]>x[status==0][i])/no_control
  }
  list(TP = TP, FP = FP)
}
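The statement that the AUC equals the probability that a case scores higher than a control can also be checked without any ROC package. The following base R sketch (simulated data; variable names are chosen for the example) compares the proportion of concordant (case, control) pairs with the scaled Mann-Whitney statistic.

```r
set.seed(123)
x0 <- rnorm(50)             # marker values for controls (group 0)
x1 <- rnorm(50, mean = 1)   # marker values for cases (group 1)

# Proportion of (case, control) pairs in which the case scores higher; ties count 1/2
auc_pairs <- mean(outer(x1, x0, ">") + 0.5 * outer(x1, x0, "=="))

# Mann-Whitney U statistic divided by the number of pairs
auc_wilcox <- unname(wilcox.test(x1, x0)$statistic) / (length(x1) * length(x0))

c(pairs = auc_pairs, wilcoxon = auc_wilcox)
```

The two numbers agree because the U statistic counts exactly those concordant pairs.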

- How to calculate Area Under the Curve (AUC), or the c-statistic, by hand or by R

- Introduction to the ROCR package. Add threshold labels

- http://freakonometrics.hypotheses.org/9066, http://freakonometrics.hypotheses.org/20002

- Illustrated Guide to ROC and AUC

- ROC Curves in Two Lines of R Code

- Learning Data Science: Understanding ROC Curves

- Gini and AUC. Gini = 2*AUC-1.

- Generally, an AUC value over 0.7 is indicative of a model that can distinguish between the two outcomes well. An AUC of 0.5 tells us that the model is a random classifier, and it cannot distinguish between the two outcomes.

- ROC Day at BARUG

- ROC and AUC, Clearly Explained! StatQuest

- Optimal threshold

- Precision/PPV (proportion of positive results that were correctly classified) replacing the False Positive Rate. Useful for unbalanced data.

partial AUC

- https://onlinelibrary.wiley.com/doi/10.1111/j.1541-0420.2012.01783.x

- pROC: an open-source package for R and S+ to analyze and compare ROC curves

- Partial AUC Estimation and Regression Dodd 2003. [math]\displaystyle{ AUC(t_0,t_1) = \int_{t_0}^{t_1} ROC(t) dt }[/math] where the interval [math]\displaystyle{ (t_0, t_1) }[/math] denotes the false-positive rates of interest.
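The partial AUC integral can be approximated with the trapezoidal rule on the empirical ROC curve. The sketch below is an illustration only: <code>roc_points()</code> and <code>partial_auc()</code> are hypothetical helper names written for this example (they are not functions from pROC or genefilter), and the data are simulated.

```r
# Empirical ROC points (FPR, TPR), starting at (0, 0); label is 1 for cases, 0 for controls
roc_points <- function(score, label) {
  thr <- sort(unique(score), decreasing = TRUE)
  tpr <- sapply(thr, function(t) mean(score[label == 1] >= t))
  fpr <- sapply(thr, function(t) mean(score[label == 0] >= t))
  data.frame(fpr = c(0, fpr), tpr = c(0, tpr))
}

# Trapezoidal area under the ROC curve restricted to FPR in [t0, t1]
partial_auc <- function(fpr, tpr, t0 = 0, t1 = 1) {
  x0 <- head(fpr, -1); x1 <- tail(fpr, -1)
  y0 <- head(tpr, -1); y1 <- tail(tpr, -1)
  lo <- pmax(x0, t0); hi <- pmin(x1, t1)     # clip each segment to [t0, t1]
  keep <- hi > lo                            # drops vertical and out-of-range segments
  slope <- (y1[keep] - y0[keep]) / (x1[keep] - x0[keep])
  y_lo <- y0[keep] + slope * (lo[keep] - x0[keep])
  y_hi <- y0[keep] + slope * (hi[keep] - x0[keep])
  sum((hi[keep] - lo[keep]) * (y_lo + y_hi) / 2)
}

set.seed(1)
label <- rep(c(0, 1), each = 100)
score <- c(rnorm(100), rnorm(100, mean = 1))
r <- roc_points(score, label)

full_auc <- partial_auc(r$fpr, r$tpr)           # whole curve, t0 = 0 and t1 = 1
pauc     <- partial_auc(r$fpr, r$tpr, 0, 0.1)   # false-positive rates between 0 and 0.1
rest     <- partial_auc(r$fpr, r$tpr, 0.1, 1)
c(full = full_auc, partial_0_0.1 = pauc)
```

Note that a partial AUC over (t0, t1) cannot exceed t1 - t0, so values are often rescaled before being compared across intervals.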

summary ROC

- The partial area under the summary ROC curve Walter 2005

- On summary ROC curve for dichotomous diagnostic studies: an application to meta-analysis of COVID-19 2022

Weighted ROC

- What is the difference between area under roc and weighted area under roc? Weighted ROC curves are used when you're interested in performance in a certain region of ROC space (e.g. high recall) and were proposed as an improvement over partial AUC (which does exactly this but has some issues).

Adjusted AUC

- 'auc.adjust': R function for optimism-adjusted AUC (internal validation)

- GmAMisc::aucadj(data, fit, B = 200)

Difficult to compute for some models

- Plot ROC curve for Nearest Centroid. For NearestCentroid it is not possible to compute a score. This is simply a limitation of the model.

- k-NN model. class::knn(..., prob = TRUE) returns the proportion of votes for the winning class as the "prob" attribute.

- predict.randomForest() can output class probabilities. See ROC curve for classification from randomForest

Optimal threshold, Youden's index/Youden's J statistic

- Max of “sensitivity + specificity”. See Epi::ROC() function.

- On optimal biomarker cutoffs accounting for misclassification costs in diagnostic trilemmas with applications to pancreatic cancer Bantis, 2022

- Youden's index

- Youden's index = sensitivity + specificity -1

- Youden's index ranges from -1 to 1; it is 0 for an uninformative test and 1 for a perfect test, with higher values indicating better overall test performance

- The maximum value of the index may be used as a criterion for selecting the optimum cut-off point when a diagnostic test gives a numeric rather than a dichotomous result.

- rocbc package. Provides inferences and comparisons around the AUC, the Youden index, the sensitivity at a given specificity level (and vice versa), the optimal operating point of the ROC curve (in the Youden sense), and the Youden based cutoff.

- Impact of Methodological Assumptions and Covariates on the Cutoff Estimation in ROC Analysis and R code.
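Choosing the cutoff that maximizes Youden's index can be sketched in a few lines of base R. This is a minimal illustration on a simulated biomarker, assuming that larger marker values indicate disease; it is not the method of the rocbc package or of Epi::ROC().

```r
set.seed(2)
label  <- rep(c(0, 1), each = 100)
marker <- c(rnorm(100), rnorm(100, mean = 1.5))   # simulated biomarker

cutoffs <- sort(unique(marker))
# Call a subject positive when marker >= cutoff
sens <- sapply(cutoffs, function(cc) mean(marker[label == 1] >= cc))
spec <- sapply(cutoffs, function(cc) mean(marker[label == 0] <  cc))
youden <- sens + spec - 1

best <- which.max(youden)
c(cutoff = cutoffs[best], sensitivity = sens[best],
  specificity = spec[best], J = youden[best])
```

Maximizing J weights false positives and false negatives equally; when misclassification costs differ, a cost-weighted criterion may be more appropriate.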

ROC Curve AUC for Hypothesis Testing

- Interpreting AUROC in Hypothesis Testing

- Receiver Operating Characteristic Curve in Diagnostic Test Assessment 2010

Challenges, issues

Methodological conduct of prognostic prediction models developed using machine learning in oncology: a systematic review 2022. Topics noted: class imbalance, data pre-processing, and hyperparameter tuning. twitter.

Survival data

'Survival Model Predictive Accuracy and ROC Curves' by Heagerty & Zheng 2005

- Recall that Sensitivity = [math]\displaystyle{ P(\hat{p}_i \gt c | Y_i=1) }[/math] and Specificity = [math]\displaystyle{ P(\hat{p}_i \le c | Y_i=0) }[/math], where [math]\displaystyle{ Y_i }[/math] is the binary outcome, [math]\displaystyle{ \hat{p}_i }[/math] is a prediction, and [math]\displaystyle{ c }[/math] is a criterion for classifying the prediction as positive ([math]\displaystyle{ \hat{p}_i \gt c }[/math]) or negative ([math]\displaystyle{ \hat{p}_i \le c }[/math]).

- For survival data, we need to use a fixed time/horizon (t) to classify the data as either a case or a control. Following Heagerty and Zheng's definition in Survival Model Predictive Accuracy and ROC Curves (Incident/dynamic) 2005, Sensitivity(c, t) = [math]\displaystyle{ P(M_i \gt c | T_i = t) }[/math] and Specificity(c, t) = [math]\displaystyle{ P(M_i \le c | T_i \gt t) }[/math], where M is a marker value or [math]\displaystyle{ Z^T \beta }[/math]. Here sensitivity measures the expected fraction of subjects with a marker greater than c among the subpopulation of individuals who die at time t, while specificity measures the fraction of subjects with a marker less than or equal to c among those who survive beyond time t.

- The AUC measures the probability that the marker value for a randomly selected case exceeds the marker value for a randomly selected control

- ROC curves are useful for comparing the discriminatory capacity of different potential biomarkers.

Precision recall curve

- Precision and recall from wikipedia

- Y-axis: Precision = tp/(tp + fp) = PPV. How accurately the model predicted the positive classes. large is better

- X-axis: Recall = tp/(tp + fn) = Sensitivity, large is better

- The Relationship Between Precision-Recall and ROC Curves. Remember ROC is defined as

- Y-axis: Sensitivity = tp/(tp + fn) = Recall

- X-axis: 1-Specificity = fp/(fp + tn)

- ROC curves are appropriate when the observations are balanced between each class, whereas PR curves are appropriate for imbalanced datasets. When dealing with highly skewed datasets, PR curves can give a more informative picture of an algorithm’s performance.

- The Precision-Recall Plot Is More Informative than the ROC Plot When Evaluating Binary Classifiers on Imbalanced Datasets
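Why the two plots behave differently under class imbalance can be seen at a single operating point. In the sketch below the score distributions (controls N(0, 1), cases N(2, 1)) and the threshold of 1 are assumptions made for the example; precision is computed from the PPV formula in terms of sensitivity, specificity and prevalence.

```r
# Assumed setup: controls ~ N(0, 1), cases ~ N(2, 1); call positive when score > 1
threshold <- 1
tpr <- 1 - pnorm(threshold, mean = 2)   # recall / sensitivity
fpr <- 1 - pnorm(threshold, mean = 0)   # 1 - specificity

# Precision (PPV) as a function of prevalence
precision <- function(prev) tpr * prev / (tpr * prev + fpr * (1 - prev))

prev <- c(balanced = 0.5, imbalanced = 0.01)
data.frame(prevalence = prev, TPR = tpr, FPR = fpr, precision = precision(prev))
```

The ROC coordinates (FPR, TPR) are identical in both rows, while the precision drops sharply when positives are rare, which is the behaviour the PR curve exposes.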

F1-score

- F-score/F1-score.

- F1 = 2 * Precision * Recall / (Precision + Recall) = 2 / (1/Precision + 1/Recall) Harmonic mean of precision and recall.

- This means that comparison of the F-score across different problems with differing class ratios is problematic.

- Recall = Sensitivity = true positive rate. Precision = PPV. They are from the two directions of the "1" class in the confusion matrix.

- The F1 score

- How to interpret F1 score (simply explained). It is a popular metric to use for classification models as it provides accurate results for both balanced and imbalanced datasets, and takes into account both the precision and recall ability of the model. F1 score tells you the model’s balanced ability to both capture positive cases (recall) and be accurate with the cases it does capture (precision).

- What is Considered a “Good” F1 Score? It gives an example of F1-score where all the predictions are on one class (baseline model) for comparison purpose.

- An example. confusionMatrix() assumes the rows are predicted and columns are true; that's why I put a transpose on my table object. The 'positive' class seems to be always the 1st row of the 2x2 table. If the levels are not specified, it will assign 'A' to the first row and 'B' to the 2nd row. For this case, we can see accuracy (0.615) is between sensitivity (0.429) and specificity (0.684) and F1-score (0.375) is between precision (0.333) and recall (0.429) . Also F1-score is smaller than sensitivity and specificity.

                 predicted
                 sensitive resistant
------------------------------------
true sensitive       3         4
     resistant       6        13

xtab <- matrix(c(3,6,4,13),nr=2)
confusionMatrix(t(xtab))$byClass
#          Sensitivity          Specificity       Pos Pred Value       Neg Pred Value
#            0.4285714            0.6842105            0.3333333            0.7647059
#            Precision               Recall                   F1           Prevalence
#            0.3333333            0.4285714            0.3750000            0.2692308
#       Detection Rate Detection Prevalence    Balanced Accuracy
#            0.1153846            0.3461538            0.5563910
confusionMatrix(t(xtab))$overall
#       Accuracy          Kappa  AccuracyLower  AccuracyUpper   AccuracyNull
#      0.6153846      0.1034483      0.4057075      0.7977398      0.7307692
# AccuracyPValue  McnemarPValue
#      0.9348215      0.7518296
confusionMatrix(t(xtab))$positive
# [1] "A"

- Using F1 score metric in KNN through caret package

library(caret)
library(mlbench)
data(Sonar)

f1Summary <- function(data, lev = NULL, model = NULL) {
  # Calculate precision and recall
  precision <- posPredValue(data$pred, data$obs, positive = lev[1])
  recall <- sensitivity(data$pred, data$obs, positive = lev[1])
  # Calculate F1 score
  f1 <- (2 * precision * recall) / (precision + recall)
  # Return F1 score
  c(F1 = f1)
}

# Create a trainControl object that uses the custom summary function
trainControl <- trainControl(summaryFunction = f1Summary)

# Use the trainControl object when calling the train() function
model <- train(Class ~., data = Sonar, method = "rf", trControl = trainControl, metric = "F1")

> model
Random Forest

208 samples
 60 predictor
  2 classes: 'M', 'R'

No pre-processing
Resampling: Bootstrapped (25 reps)
Summary of sample sizes: 208, 208, 208, 208, 208, 208, ...
Resampling results across tuning parameters:

  mtry  F1
   2    0.8416808
  31    0.7986326
  60    0.7820982

F1 was used to select the optimal model using the largest value.
The final value used for the model was mtry = 2.

- Examples in papers

PPV/Precision

The relationship between PPV and sensitivity depends on the prevalence of the condition being tested for in the population. If the condition is rare, a test with high sensitivity may still have a low PPV, because even a small false positive rate applied to the many unaffected people yields far more false positives than true positives. On the other hand, if the condition is common, a test with high sensitivity is more likely to also have a high PPV.

Here we have a diagnostic test for a rare disease that affects 1 in 1000 people and the test has a sensitivity of 100% and a false positive rate of 5%:

                 | Test positive   Test negative
-------------------------------------------------
Disease present  |       1               0
Disease absent   |      50             949

The prevalence of the disease is 1/1000.
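The PPV implied by the table above can be checked with a few lines of R. This is a minimal sketch that uses only the counts shown in the table; the variable names are illustrative. It computes PPV directly from the counts and again from sensitivity, specificity, and prevalence via Bayes' rule.

```r
# Counts from the 2x2 table above (1000 people in total)
tp <- 1;  fn <- 0     # disease present: test positive / test negative
fp <- 50; tn <- 949   # disease absent:  test positive / test negative
n <- tp + fn + fp + tn

# PPV directly from the counts
ppv_counts <- tp / (tp + fp)

# PPV from sensitivity, specificity and prevalence (Bayes' rule)
sens <- tp / (tp + fn)
spec <- tn / (tn + fp)
prev <- (tp + fn) / n
ppv_bayes <- sens * prev / (sens * prev + (1 - spec) * (1 - prev))

round(c(ppv_counts = ppv_counts, ppv_bayes = ppv_bayes), 4)
# ppv_counts  ppv_bayes
#     0.0196     0.0196
```

So even with 100% sensitivity, only about 2% of the positive calls are true cases at this prevalence.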

Incidence, Prevalence

https://www.health.ny.gov/diseases/chronic/basicstat.htm

Jaccard index

- https://en.wikipedia.org/wiki/Jaccard_index

- Clear Example of Jaccard Similarity // Visual Explanation of What is the Jaccard Index? (video). Jaccard similarity = TP / (TP + FP + FN)
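A small sketch of the formula above, in base R. The two helper functions and the toy vectors are hypothetical, written only for illustration; they show that the set definition (intersection over union of the positive calls) and the confusion-matrix form TP / (TP + FP + FN) give the same number. Note that TN does not enter the calculation.

```r
# Jaccard index of two sets: |A intersect B| / |A union B|
jaccard_sets <- function(a, b) {
  length(intersect(a, b)) / length(union(a, b))
}

# Same quantity for binary labels: TP / (TP + FP + FN)
jaccard_binary <- function(truth, pred) {
  tp <- sum(truth == 1 & pred == 1)
  fp <- sum(truth == 0 & pred == 1)
  fn <- sum(truth == 1 & pred == 0)
  tp / (tp + fp + fn)
}

# Toy labels (illustrative only)
truth <- c(1, 1, 1, 0, 0, 0, 1, 0)
pred  <- c(1, 0, 1, 1, 0, 0, 1, 0)

j_binary <- jaccard_binary(truth, pred)                        # 0.6
j_sets   <- jaccard_sets(which(truth == 1), which(pred == 1))  # 0.6
```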

Calculate area under curve by hand (using trapezoid), relation to concordance measure and the Wilcoxon–Mann–Whitney test

- https://stats.stackexchange.com/a/146174

- The meaning and use of the area under a receiver operating characteristic (ROC) curve J A Hanley, B J McNeil 1982
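The three views named in this heading can be compared on a toy example. The sketch below uses base R only and hypothetical scores without ties (the simple step-by-step trapezoid here would need tied scores grouped first). It computes the AUC by the trapezoid rule, as the proportion of concordant (case, control) pairs, and from the Wilcoxon–Mann–Whitney statistic.

```r
score <- c(0.1, 0.4, 0.35, 0.8, 0.65, 0.2, 0.9, 0.5)  # toy scores, no ties
label <- c(0,   0,   1,    1,   1,    0,   1,   0)    # 1 = case, 0 = control
n1 <- sum(label == 1)
n0 <- sum(label == 0)

# 1) Trapezoid rule on the empirical ROC curve
ord <- order(score, decreasing = TRUE)
tpr <- c(0, cumsum(label[ord] == 1) / n1)
fpr <- c(0, cumsum(label[ord] == 0) / n0)
auc_trap <- sum(diff(fpr) * (head(tpr, -1) + tail(tpr, -1)) / 2)

# 2) Concordance: fraction of (case, control) pairs where the case scores higher
cmp <- outer(score[label == 1], score[label == 0], "-")
auc_conc <- mean((cmp > 0) + 0.5 * (cmp == 0))

# 3) Wilcoxon-Mann-Whitney statistic W divided by the number of pairs
w <- wilcox.test(score[label == 1], score[label == 0])$statistic
auc_wmw <- unname(w) / (n1 * n0)

c(trapezoid = auc_trap, concordance = auc_conc, wilcoxon = auc_wmw)
# all three equal 0.875 (14 concordant pairs out of 16)
```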

genefilter package and rowpAUCs function

- rowpAUCs function in genefilter package. The aim is to find potential biomarkers whose expression level is able to distinguish between two groups.

# if (!requireNamespace("BiocManager", quietly = TRUE)) install.packages("BiocManager")
# BiocManager::install("genefilter")   # biocLite() has been replaced by BiocManager
library(Biobase) # sample.ExpressionSet data
data(sample.ExpressionSet)
library(genefilter)
r2 = rowpAUCs(sample.ExpressionSet, "sex", p=0.1)
plot(r2[1]) # first gene, partial AUC over specificity >= .9
r2 = rowpAUCs(sample.ExpressionSet, "sex", p=1.0)
plot(r2[1]) # it won't show pAUC
r2 = rowpAUCs(sample.ExpressionSet, "sex", p=.999)
plot(r2[1]) # pAUC is very close to AUC now

Use and Misuse of the Receiver Operating Characteristic Curve in Risk Prediction

- http://circ.ahajournals.org/content/115/7/928

- Calibration: the Achilles heel of predictive analytics 2019

Comparison of two AUCs

- Testing for improvement in prediction model performance by Pepe et al 2013.

- Statistical Assessments of AUC. This uses the pROC::roc.test() function.

- prioritylasso. It uses roc(), auc(), roc.test(), and plot.roc() from the pROC package. An AUC computed on the training data is optimistically biased, so the AUC based on test data should be reported.

DeLong test for comparing two ROC curves

- Comparing AUCs of Machine Learning Models with DeLong’s Test

- Misuse of DeLong test to compare AUCs for nested models

- What is the DeLong test for comparing AUCs?

- R, ROC curves, and the DeLong test (post in Chinese). pROC::roc.test() was used.

- Daim::deLong.test()

Some R packages

- Some R Packages for ROC Curves

- ROCR 2005

- pROC 2010. Gets the AUC and plots multiple ROC curves together in one figure.

- PRROC 2014

- plotROC 2014

- precrec 2015

- ROCit 2019

- ROC from Bioconductor

- caret

- ROC animation

pROC

- https://cran.r-project.org/web/packages/pROC/index.html

- response: a factor, numeric or character vector of responses (true class), typically encoded with 0 (controls) and 1 (cases).

- Pay attention to the message printed on screen after calling roc(). It will show which one is control and which one is case.

- pROC: display and analyze ROC curves in R and S+

- ROC Curve and AUC in Machine learning and R pROC Package. Pay attention to the legacy.axes parameter.

library(pROC)
data(aSAH)
str(aSAH[,c('outcome', "s100b")])
# 'data.frame':	113 obs. of  2 variables:
#  $ outcome: Factor w/ 2 levels "Good","Poor": 1 1 1 1 2 2 1 2 1 1 ...
#  $ s100b  : num  0.13 0.14 0.1 0.04 0.13 0.1 0.47 0.16 0.18 0.1 ...
roc.s100b <- roc(aSAH$outcome, aSAH$s100b)
roc(aSAH$outcome, aSAH$s100b, plot=TRUE,
    auc=TRUE,  # already the default
    col="green", lwd=4, legacy.axes=TRUE, main="ROC Curves")
# Data: aSAH$s100b in 72 controls (aSAH$outcome Good) < 41 cases (aSAH$outcome Poor).
# Area under the curve: 0.7314
auc(roc.s100b)
# Area under the curve: 0.7314
auc(aSAH$outcome, aSAH$s100b)
# Area under the curve: 0.7314

Note: in pROC::roc() or auc(), the true response is the 1st argument and the predictor is the 2nd, while in caret::confusionMatrix() the predictions come 1st and the truth/reference is the 2nd argument.

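Flipping the outcome labels does not change the AUC, because by default roc() chooses the direction of the comparison automatically (the "<" in the printed output becomes ">").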

aSAH$outcome2 <- factor(ifelse(aSAH$outcome == "Good", "Poor", "Good"),
                        levels=c("Good", "Poor"))
roc(aSAH$outcome2, aSAH$s100b)
# Data: aSAH$s100b in 41 controls (aSAH$outcome2 Good) > 72 cases (aSAH$outcome2 Poor).
# Area under the curve: 0.7314

- Plot multiple ROC curves in one plot.

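# Any three roc objects work; the aSAH predictors are used here as an example
roc1 <- roc(aSAH$outcome, aSAH$s100b)
roc2 <- roc(aSAH$outcome, aSAH$wfns)
roc3 <- roc(aSAH$outcome, aSAH$ndka)
plot(roc1)
lines(roc2, col="red")
lines(roc3, col="blue")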

Another solution is to use pROC::ggroc(). The legend is taken care of automatically.

rocobj <- roc(aSAH$outcome, aSAH$s100b)
rocobj2 <- roc(aSAH$outcome, aSAH$wfns)
g2 <- ggroc(list(s100b=rocobj, wfns=rocobj2, ndka=roc(aSAH$outcome, aSAH$ndka))) # 3 curves

- Plot a panel of ROC plots by using facet_wrap().

- How to add AUC to a multiple ROC graph with pROC's ggroc. ggroc() is part of pROC package.

- See Annotating text on individual facet in ggplot2

- See facet_wrap on how to change the labels in each plots.

ROCR

- https://cran.r-project.org/web/packages/ROCR/

- Vignette. The vignette shows that the package can take the output from multiple predictions (e.g., 10-fold CV) and create a plot with either 10 curves or 1 curve obtained by averaging the individual curves.

- A small introduction to the ROCR package

- Measure Model Performance in R Using ROCR Package

- ROC for Decision Trees – where did the data come from?. NB: 1) fix the double quote characters after copying and pasting the code, 2) the result is biased since it uses neither CV nor test data. The right way is to pass newdata to the predict.rpart() function. When newdata is omitted, the fitted values are used.

Cross-validation ROC

- ROC cross-validation caret::train(, metric = "ROC")

- Feature selection + cross-validation, but how to make ROC-curves in R. Small samples within the cross-validation may lead to underestimated AUC as the ROC curve with all data will tend to be smoother and less underestimated by the trapezoidal rule.

library(pROC)
data(aSAH)
k <- 10
n <- dim(aSAH)[1]
set.seed(1)
indices <- sample(rep(1:k, ceiling(n/k))[1:n])
all.response <- all.predictor <- aucs <- c()
for (i in 1:k) {
  test = aSAH[indices==i,]
  learn = aSAH[indices!=i,]
  model <- glm(as.numeric(outcome)-1 ~ s100b + ndka + as.numeric(wfns),
               data = learn, family=binomial(link = "logit"))
  model.pred <- predict(model, newdata=test)
  aucs <- c(aucs, roc(test$outcome, model.pred)$auc)
  all.response <- c(all.response, test$outcome)
  all.predictor <- c(all.predictor, model.pred)
}
roc(all.response, all.predictor)
# Setting levels: control = 1, case = 2
# Setting direction: controls < cases
#
# Call:
# roc.default(response = all.response, predictor = all.predictor)
#
# Data: all.predictor in 72 controls (all.response 1) < 41 cases (all.response 2).
# Area under the curve: 0.8279
mean(aucs)
# [1] 0.8484921

- ROC curve from training data in caret

- Plot ROC curve from Cross-Validation (training) data in R

- How to represent ROC curve when using Cross-Validation. The standard way is to compute the AUC per fold and report the mean AUC +/- sd(AUC)/sqrt(k), where k is the number of folds (5 in the linked answer). The mean AUC over the folds should be close to the grand AUC obtained by pooling all out-of-fold probabilities and computing a single AUC.

- How to easily make a ROC curve in R

- Appropriate way to get Cross Validated AUC

- cvAUC (Cross-validated Area Under the ROC Curve) package as linked from Some R Packages for ROC Curves

- How do you generate ROC curves for leave-one-out cross validation? If the classifier outputs probabilities, then combining all the test point outputs for a single ROC curve is appropriate. If not, then scale the output of the classifier in a manner that would make it directly comparable across classifiers.

- ROC cross-validation using the caret package (from U. of Sydney).

mean ROC curve

ROC with cross-validation for linear regression in R

Assess risk of bias

PROBAST: A Tool to Assess Risk of Bias and Applicability of Prediction Model Studies: Explanation and Elaboration 2019. http://www.probast.org/

Confidence interval of AUC

How to get an AUC confidence interval. pROC package was used.
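The linked post relies on pROC (which provides ci.auc() for this purpose). As a rough illustration of the idea without extra packages, the sketch below swaps in a stratified percentile bootstrap written in base R. The helper auc_pairs() and the toy scores are hypothetical and serve only as an example; this is not the method used in the post.

```r
# AUC as the proportion of concordant (case, control) pairs; ties count 1/2
auc_pairs <- function(cases, controls) {
  d <- outer(cases, controls, "-")
  mean((d > 0) + 0.5 * (d == 0))
}

# Toy scores (hypothetical, for illustration only)
cases    <- c(0.35, 0.80, 0.65, 0.90, 0.55, 0.70)
controls <- c(0.10, 0.40, 0.20, 0.50, 0.30, 0.60, 0.45, 0.15)

set.seed(1)
B <- 2000
boot_auc <- replicate(B, {
  # resample cases and controls separately (stratified bootstrap)
  auc_pairs(sample(cases, replace = TRUE), sample(controls, replace = TRUE))
})

auc_hat <- auc_pairs(cases, controls)         # point estimate, 43/48
ci <- quantile(boot_auc, c(0.025, 0.975))     # 95% percentile interval
auc_hat
ci
```

With samples this small the interval is wide and often touches 1, which is itself a useful reminder that the AUC from a small study is imprecise.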

AUC can be a misleading measure of performance

AUC is high but precision is low (i.e. FDR is high). https://twitter.com/michaelhoffman/status/1398380674206285830?s=09.

Caveats and pitfalls of ROC analysis in clinical microarray research

Caveats and pitfalls of ROC analysis in clinical microarray research (and how to avoid them) Berrar 2011

Limitation in clinical data

Sample size

- Sample size MATTERS - don't ignore it.

- Sample size methods for evaluation of predictive biomarkers 2022

Threshold

Myths about risk thresholds for prediction models

Three myths about risk thresholds for prediction models 2019

Picking a threshold based on model performance/utility

Squeezing the Most Utility from Your Models 2020

Why does my ROC curve look like a V

https://stackoverflow.com/a/42917384

Unbalanced classes

- 8 Tactics to Combat Imbalanced Classes in Your Machine Learning Dataset

- How to Fix k-Fold Cross-Validation for Imbalanced Classification. It teaches you how to split samples in CV by using stratified k-fold cross-validation.

- ROC is especially useful for unbalanced data where the 0.5 threshold may not be appropriate.

- Imbalanced data & why you should NOT use ROC curve

- AUC and class imbalance in training/test dataset

- Use Precision/PPV to replace FDR

- Practical Guide to deal with Imbalanced Classification Problems in R

- The Precision-Recall Plot Is More Informative than the ROC Plot When Evaluating Binary Classifiers on Imbalanced Datasets

- ROC animation

- Undersampling By Groups In R. See the ROSE package & its paper in 2014.

- imbalance package

- Chapter 11 Subsampling For Class Imbalances from the caret package documentation

- SMOTE

- Classification Trees for Imbalanced Data: Surface-to-Volume Regularization Zhu, JASA 2021

- The harm of class imbalance corrections for risk prediction models: illustration and simulation using logistic regression 2022

- How to handle Imbalanced Data?
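Stratified k-fold cross-validation, mentioned in the list above, assigns folds within each class so that every fold keeps roughly the original class ratio. A minimal base R sketch follows; stratified_folds() is a hypothetical helper written for this illustration, and the 10/90 class split is made up.

```r
# Assign each observation to one of k folds, separately within each class
stratified_folds <- function(y, k) {
  folds <- integer(length(y))
  for (cls in levels(factor(y))) {
    idx <- which(y == cls)
    idx <- idx[sample.int(length(idx))]             # shuffle within the class
    folds[idx] <- rep_len(seq_len(k), length(idx))  # deal out fold ids in turn
  }
  folds
}

set.seed(1)
y <- factor(c(rep("case", 10), rep("control", 90)))  # imbalanced toy labels
folds <- stratified_folds(y, k = 5)
tab <- table(folds, y)
tab
# each fold gets 2 cases and 18 controls
```

With plain random fold assignment, some folds could end up with very few (or zero) cases, which makes per-fold AUC or F1 unstable or undefined.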

Weights

- Weighted least squares

- Is an WLS estimator unbiased, when wrong weights are used?

- How to Perform Weighted Least Squares Regression in R

- How to determine weights for WLS regression in R?

- Weighted Logistic Regression for Imbalanced Dataset

Metric

- Tour of Evaluation Metrics for Imbalanced Classification. More strategies are available.

- F-score

- tidymodels::f_meas(), Modelling with Tidymodels and Parsnip, Modelling Binary Logistic Regression using Tidymodels Library in R

- caret::train(,metric) from Caret vs. tidymodels — create reusable machine learning workflows

- MLmetrics: Machine Learning Evaluation Metrics

- Classification/evaluation metrics for highly imbalanced data

- What metrics should be used for evaluating a model on an imbalanced data set? (precision + recall or ROC=TPR+FPR)

- Assess Performance of the Classification Model: Matthews correlation coefficient (MCC)
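As a quick check of two of the metrics listed above, the sketch below computes the F1-score and the Matthews correlation coefficient in base R from the counts of the sensitive/resistant table used in the confusionMatrix() example earlier on this page (TP = 3, FN = 4, FP = 6, TN = 13, with "sensitive" as the positive class). The variable names are illustrative.

```r
# Counts from the sensitive/resistant table (positive class = sensitive)
tp <- 3; fn <- 4; fp <- 6; tn <- 13

precision <- tp / (tp + fp)
recall    <- tp / (tp + fn)

# F1: harmonic mean of precision and recall (ignores TN)
f1 <- 2 * precision * recall / (precision + recall)

# MCC: uses all four cells of the confusion matrix
mcc <- (tp * tn - fp * fn) /
  sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))

round(c(precision = precision, recall = recall, F1 = f1, MCC = mcc), 3)
# precision    recall        F1       MCC
#     0.333     0.429     0.375     0.105
```

The F1 value agrees with the 0.375 reported by confusionMatrix() for the same table; the MCC near 0.1 indicates only weak agreement between prediction and truth.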

AI models

Performance evaluation of predictive AI models to support medical decisions: Overview and guidance

Class comparison problem

- compcodeR: RNAseq data simulation, differential expression analysis and performance comparison of differential expression methods