T-test: Difference between revisions


= Overview = | |||

* [https://martinctc.github.io/blog/common-statistical-tests-in-r-part-i/ Common Statistical Tests in R - Part I] | |||

= T-statistic =

Let <math style="vertical-align:-.3em">\scriptstyle\hat\beta</math> be an [[estimator]] of parameter ''β'' in some [[statistical model]]. Then a '''''t''-statistic''' for this parameter is any quantity of the form

| Line 23: | Line 26: | ||

* (From [https://support.minitab.com/en-us/minitab/18/help-and-how-to/statistics/basic-statistics/supporting-topics/data-concepts/what-is-the-pooled-standard-deviation/ minitab]) The pooled standard deviation is the average spread of all data points about their group mean (''not the overall mean''). It is a weighted average of each group's standard deviation. The weighting gives larger groups a proportionally greater effect on the overall estimate.

* [https://heuristicandrew.blogspot.com/2018/01/type-i-error-rates-in-two-sample-t-test.html Type I error rates in two-sample t-test by simulation]

=== Pooled variance vs overall variance === | |||

<ul> | |||

<li>Overall variance is typically larger than the pooled variance unless the group means are identical. | |||

<li>This is because overall variance captures both within-group variability and between-group variability, while pooled variance only accounts for within-group variability. | |||

<li>Pooled variance does not directly capture between-group variability, only within-group variability under the assumption of homogeneous variance. | |||

<li>Overall variance reflects the influence of different group means and different variances. | |||

<li>Examples | |||

<pre> | |||

foo <- function(n1, n2, seed=1, mu1=0, mu2=0, s1=1, s2=1) { | |||

set.seed(seed) | |||

x1 <- rnorm(n1, mu1, s1); x2 <- rnorm(n2, mu2, s2) | |||

cat("var(all)", round(var(c(x1, x2)), 3), ", ") | |||

pv <- ((n1-1)*var(x1) + (n2-1)*var(x2)) / (n1+n2-2) | |||

cat("var(pooled)", round(pv, 3) , "\n") | |||

} | |||

foo(10, 10) # var(all) 0.834 , var(pooled) 0.877 | |||

foo(100, 100, 1) # var(all) 0.863 , var(pooled) 0.862 | |||

foo(100, 100, 1234) # var(all) 1.042 , var(pooled) 1.037 | |||

foo(100, 100, 1234, 0, 1) # var(all) 1.393 , var(pooled) 1.037 | |||

foo(100, 100, 1234, s1=1, s2=3) # var(all) 5.292 , var(pooled) 5.299 | |||

</pre> | |||

</ul> | |||
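The relationship between the two quantities can be checked numerically. The following is a minimal sketch (the variable names are illustrative and not part of the examples above) of the sum-of-squares decomposition: total SS = within-group SS + between-group SS. It also suggests why var(all) can be slightly smaller than var(pooled) when the group means are nearly equal: the overall variance divides by N-1 while the pooled variance divides by N-2.

<syntaxhighlight lang='r'>
set.seed(1234)
x1 <- rnorm(100, mean = 0, sd = 1)
x2 <- rnorm(100, mean = 1, sd = 1)
n1 <- length(x1); n2 <- length(x2); N <- n1 + n2

overall <- var(c(x1, x2))
pooled  <- ((n1 - 1) * var(x1) + (n2 - 1) * var(x2)) / (N - 2)

# Between-group sum of squares around the grand mean
grand_mean <- mean(c(x1, x2))
between_ss <- n1 * (mean(x1) - grand_mean)^2 + n2 * (mean(x2) - grand_mean)^2

# total SS = within SS + between SS
decomposition_holds <- isTRUE(all.equal(overall * (N - 1),
                                        pooled * (N - 2) + between_ss))
decomposition_holds
</syntaxhighlight>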

=== Assumptions === | |||

<ul> | |||

<li>[https://www.statology.org/t-test-assumptions/ The Four Assumptions Made in a T-Test] | |||

<li>[https://www.statology.org/anova-assumptions/ How to Check ANOVA Assumptions]. ANOVA also assumes variances of the populations that the samples come from are equal. | |||

<li>T-tests that assume equal variances of the two populations aren't valid when the two populations have different variances, and the problem is worse for unequal sample sizes. If the smaller sample is the one with the higher variance, the test will have an inflated Type I error rate. [https://stats.stackexchange.com/a/45676 Small and unbalanced sample sizes for two groups - what to do?]

<syntaxhighlight lang='r'> | |||

type1err <- function(n1=10, n2=100, sigma1=1, sigma2=1, n_simulations=10000, var.equal=TRUE) {
  set.seed(1)
  # Set the population means for the two groups (equal under the null hypothesis)
  mu1 <- 0
  mu2 <- 0
  # Initialize a counter for the number of significant results
  n_significant <- 0
  # Run the simulations
  for (i in 1:n_simulations) {
    # Generate random samples from two normal distributions
    sample1 <- rnorm(n1, mean = mu1, sd = sigma1)
    sample2 <- rnorm(n2, mean = mu2, sd = sigma2)
    # Perform a two-sample t-test (Student if var.equal = TRUE, Welch otherwise)
    t_test <- t.test(sample1, sample2, var.equal = var.equal)

# Check if the result is significant at the 0.05 level | |||

if (t_test$p.value < 0.05) { | |||

n_significant <- n_significant + 1 | |||

} | |||

} | |||

# Calculate the proportion of significant results | |||

prop_significant <- n_significant / n_simulations | |||

cat(sprintf('Proportion of significant results: %.4f', prop_significant)) | |||

} | |||

type1err(10, 100) # 0.0521 | |||

type1err(28, 1371, 1, 1) # 0.0511 | |||

type1err(28, 1371, 1, 2) # 0.0000 | |||

type1err(10, 100, var.equal = FALSE) # 0.0519 | |||

type1err(28, 1371, 1, 1, var.equal = FALSE) # 0.0503 | |||

type1err(28, 1371, 1, 2, var.equal = FALSE) # 0.0509 | |||

type2err <- function(n1=10, n2=100, delta=1, sigma1=1, sigma2=1, n_simulations=10000) { | |||

set.seed(1) | |||

# Set the population means and standard deviations for the two groups | |||

mu1 <- 0 | |||

mu2 <- delta | |||

  # Initialize a counter for the number of non-significant results
  n_nonsignificant <- 0
  # Run the simulations
  for (i in 1:n_simulations) {
    # Generate random samples from two normal distributions
    sample1 <- rnorm(n1, mean = mu1, sd = sigma1)
    sample2 <- rnorm(n2, mean = mu2, sd = sigma2)
    # Perform a two-sample (Welch) t-test
    t_test <- t.test(sample1, sample2, var.equal = FALSE)
    # Count a type II error: the null is false (delta != 0) but is not rejected at the 0.05 level
    if (t_test$p.value >= 0.05) {
      n_nonsignificant <- n_nonsignificant + 1
    }
  }
  # Calculate the proportion of p-values greater than or equal to alpha (i.e., the estimated type II error rate)
  type2_error_rate <- n_nonsignificant / n_simulations

cat("The estimated type II error rate is", type2_error_rate) | |||

} | |||

type2err() # 0.2209 | |||

type2err(delta=3) # 0 | |||

# A larger value of delta will increase the power | |||

# of the test and result in a lower type II error rate. | |||

</syntaxhighlight> | |||

<li>A deflated Type I error rate means the test is conservative: under the null hypothesis it rejects less often than the nominal significance level. With the equal-variance t-test this happens when the larger sample has the larger variance, as in the 0.0000 result above. A conservative test also has lower power, i.e. an increased rate of [https://www.scribbr.com/statistics/type-i-and-type-ii-errors/ Type II errors], or false negatives, where the null hypothesis is not rejected even though it is false.

</ul> | |||
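The inflated case described in the list above (the smaller sample has the larger variance) can be simulated in the same spirit. This is a minimal sketch; the helper <code>reject_rate()</code>, the sample sizes and the standard deviations are illustrative assumptions, not part of the original simulations.

<syntaxhighlight lang='r'>
set.seed(1)

# Proportion of null simulations rejected at the 0.05 level
reject_rate <- function(n1, n2, sd1, sd2, var.equal, n_sim = 2000) {
  p <- replicate(n_sim,
                 t.test(rnorm(n1, 0, sd1), rnorm(n2, 0, sd2),
                        var.equal = var.equal)$p.value)
  mean(p < 0.05)
}

# Small group (n = 10) has the larger SD; both population means are 0
rate_student <- reject_rate(10, 100, sd1 = 3, sd2 = 1, var.equal = TRUE)
rate_welch   <- reject_rate(10, 100, sd1 = 3, sd2 = 1, var.equal = FALSE)
c(student = rate_student, welch = rate_welch)
</syntaxhighlight>

Under these settings the equal-variance test should reject far more often than 5%, while the Welch test should stay close to the nominal level.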

=== Compare to unequal variance === | |||

{| class="wikitable" | |||

|- | |||

! | |||

! denominator | |||

! df | |||

|- | |||

| Same variance<br />Student t-test | |||

| <math>\begin{align} & S_p\sqrt{1/n_1+1/n_2}\\ &S_p=\sqrt{\frac{(n_1-1)s_1^2+(n_2-1)s_2^2}{n_1+n_2-2}}\end{align}</math> <br>(complicated)

| <math>n_1+n_2-2</math> <br>(simple) | |||

|- | |||

| Unequal variance<br />Welch t-test | |||

| <math>\sqrt{{s_1^2 \over n_1} + {s_2^2 \over n_2}} </math> (simple) | |||

| Satterthwaite's<br > approximation (complicated) | |||

|} | |||
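Both rows of the table can be reproduced by hand and compared with <code>t.test()</code>. The sketch below uses simulated data chosen only for illustration; the Welch degrees of freedom use the Satterthwaite approximation.

<syntaxhighlight lang='r'>
set.seed(1)
x <- rnorm(10, mean = 0, sd = 1)
y <- rnorm(15, mean = 0.5, sd = 2)
n1 <- length(x); n2 <- length(y)

# Student: pooled SD, n1 + n2 - 2 degrees of freedom
sp <- sqrt(((n1 - 1) * var(x) + (n2 - 1) * var(y)) / (n1 + n2 - 2))
t_student <- (mean(x) - mean(y)) / (sp * sqrt(1 / n1 + 1 / n2))

# Welch: unpooled standard error, Satterthwaite degrees of freedom
v1 <- var(x) / n1; v2 <- var(y) / n2
t_welch  <- (mean(x) - mean(y)) / sqrt(v1 + v2)
df_welch <- (v1 + v2)^2 / (v1^2 / (n1 - 1) + v2^2 / (n2 - 1))

student <- t.test(x, y, var.equal = TRUE)
welch   <- t.test(x, y)   # var.equal = FALSE is the default

all.equal(t_student, unname(student$statistic))
all.equal(t_welch,   unname(welch$statistic))
all.equal(df_welch,  unname(welch$parameter))
</syntaxhighlight>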

== Two sample test assuming unequal variance ==

| Line 40: | Line 166: | ||

:<math>df = { ({s_1^2 \over n_1} + {s_2^2 \over n_2})^2 \over {({s_1^2 \over n_1})^2 \over n_1-1} + {({s_2^2 \over n_2})^2 \over n_2-1} }. </math>

See an example [https://www.statology.org/satterthwaite-approximation/ The Satterthwaite Approximation: Definition & Example].

== Different versions of t-test == | |||

[https://statsandr.com/blog/student-s-t-test-in-r-and-by-hand-how-to-compare-two-groups-under-different-scenarios/ Student's t-test in R and by hand: how to compare two groups under different scenarios?] | |||

== Paired test, Wilcoxon signed-rank test == | |||

<ul> | |||

<li><syntaxhighlight lang='r' inline = T>t.test(, paired = T), wilcox.test(, paired = T) </syntaxhighlight>. [https://www.rdocumentation.org/packages/stats/versions/3.6.2/topics/t.test ?t.test], [https://www.rdocumentation.org/packages/stats/versions/3.6.2/topics/wilcox.test ?wilcox.test] | |||

<li>[https://www.rdatagen.net/post/thinking-about-the-run-of-the-mill-pre-post-analysis/ Have you ever asked yourself, "how should I approach the classic pre-post analysis?"] | |||

<li>[https://stats.stackexchange.com/a/74105 How to best visualize one-sample test?] | |||

<li>[https://stackoverflow.com/a/60657543 Can R visualize the t.test or other hypothesis test results?], [https://cran.r-project.org/web/packages/gginference/index.html gginference] package | |||

<li>[https://www.datanovia.com/en/lessons/wilcoxon-test-in-r/ Wilcoxon Test in R] | |||

<li>[https://www.tandfonline.com/doi/full/10.1080/00031305.2022.2115552?af=R How Do We Perform a Paired t-Test When We Don’t Know How to Pair?] 2022 | |||

</ul> | |||

=== Relation to ANOVA === | |||

<ul> | |||

<li>[http://sthda.com/english/wiki/paired-samples-t-test-in-r#what-is-paired-samples-t-test Paired Samples T-test in R], [https://bioconductor.org/packages/release/bioc/vignettes/DESeq2/inst/doc/DESeq2.html#can-i-use-deseq2-to-analyze-paired-samples Can I use DESeq2 to analyze paired samples?] from DESeq2 vignette | |||

<syntaxhighlight lang='r'> | |||

# Weight of the mice before treatment | |||

before <-c(200.1, 190.9, 192.7, 213, 241.4, 196.9, 172.2, 185.5, 205.2, 193.7) | |||

# Weight of the mice after treatment | |||

after <-c(392.9, 393.2, 345.1, 393, 434, 427.9, 422, 383.9, 392.3, 352.2) | |||

my_data <- data.frame( | |||

group = rep(c("before", "after"), each = 10), | |||

weight = c(before, after), | |||

subject = factor(rep(1:10, 2))) | |||

aov(weight ~ subject + group, data = my_data) |> summary() | |||

# Df Sum Sq Mean Sq F value Pr(>F) | |||

# subject 9 6946 772 1.78 0.202 | |||

# group 1 189132 189132 436.11 6.2e-09 *** | |||

# Residuals 9 3903 434 | |||

# --- | |||

# Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1 | |||

t.test(weight ~ group, data = my_data, paired = TRUE) | |||

# Paired t-test | |||

# | |||

# data: weight by group | |||

# t = 20.883, df = 9, p-value = 6.2e-09 | |||

t.test(after-before) | |||

# One Sample t-test | |||

# | |||

# data: after - before | |||

# t = 20.883, df = 9, p-value = 6.2e-09 | |||

</syntaxhighlight> | |||

Or using [[#Repeated_measure|repeated measure ANOVA]] with the [https://www.rdocumentation.org/packages/stats/versions/3.6.2/topics/aov Error()] term to incorporate a random factor. See [https://www.statology.org/nested-anova-in-r/ How to Perform a Nested ANOVA in R (Step-by-Step)] and [https://www.statology.org/repeated-measures-anova-in-r/ How to Perform a Repeated Measures ANOVA in R].

<syntaxhighlight lang='r'> | |||

aov(weight ~ group + Error(subject), data = my_data) |> summary() | |||

# Assume same 10 random subjects for both group1 and group2 | |||

# | |||

# Error: subject | |||

# Df Sum Sq Mean Sq F value Pr(>F) | |||

# Residuals 9 6946 771.8 | |||

# | |||

# Error: Within | |||

# Df Sum Sq Mean Sq F value Pr(>F) | |||

# group 1 189132 189132 436.1 6.2e-09 *** | |||

# Residuals 9 3903 434 | |||

# Below is a nested design, not repeated measure. | |||

aov(weight ~ group + Error(subject/group), data = my_data) |> summary() | |||

# Assume subjects 1-10 and 11-20 in group1 and group2 are different. | |||

# So this model is not right in this case. | |||

# | |||

# Error: subject | |||

# Df Sum Sq Mean Sq F value Pr(>F) | |||

# Residuals 9 6946 771.8 | |||

# | |||

# Error: subject:group | |||

# Df Sum Sq Mean Sq F value Pr(>F) | |||

# group 1 189132 189132 436.1 6.2e-09 *** | |||

# Residuals 9 3903 434 | |||

</syntaxhighlight> | |||

<li>[https://stats.stackexchange.com/q/23276 Paired t-test as a special case of linear mixed-effect modeling] | |||

</ul> | |||

=== na.action = "na.pass" === | |||

[https://bugs.r-project.org/show_bug.cgi?id=14359 error-prone t.test(<formula>, paired = TRUE)] | |||

== [http://en.wikipedia.org/wiki/Standard_score Z-value/Z-score] ==

If the population parameters are known, then rather than computing the t-statistic, one can compute the z-score.
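As a minimal sketch, assume a population with known mean 100 and known standard deviation 15 (both values are made up for illustration). The z-statistic for a sample mean uses the known sigma, whereas the t-statistic replaces it with the sample standard deviation.

<syntaxhighlight lang='r'>
set.seed(1)
mu0   <- 100   # assumed known population mean under the null
sigma <- 15    # assumed known population standard deviation
x <- rnorm(25, mean = 105, sd = sigma)

# z-statistic: known sigma, standard normal reference distribution
z   <- (mean(x) - mu0) / (sigma / sqrt(length(x)))
p_z <- 2 * pnorm(-abs(z))

# t-statistic: sigma estimated by sd(x), t reference distribution
t_res <- t.test(x, mu = mu0)

c(z = z, p_z = p_z, t = unname(t_res$statistic), p_t = t_res$p.value)
</syntaxhighlight>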

== Check assumptions, Shapiro-Wilk test ==

* [https://bmcmedresmethodol.biomedcentral.com/articles/10.1186/1471-2288-12-81 To test or not to test: Preliminary assessment of normality when comparing two independent samples] 2012 | |||

* Parametric tests are used when the data being analyzed meet certain assumptions, such as normality and homogeneity of variance. | |||

** To check for normality, you can use graphical methods such as a histogram or a Q-Q plot. You can also use statistical tests such as the Shapiro-Wilk test or the Anderson-Darling test. The shapiro.test() function can be used to perform a Shapiro-Wilk test in R. | |||

** To check for homogeneity of variance, you can use graphical methods such as a box plot or a scatter plot. You can also use statistical tests such as Levene’s test or Bartlett’s test. The leveneTest() function from the car package can be used to perform Levene’s test in R.

** [https://www.r-bloggers.com/2024/03/here-is-why-i-dont-care-about-the-levenes-test/ Here is why I don’t care about the Levene’s test] | |||

:<syntaxhighlight lang='r'> | |||

mydata <- PlantGrowth | |||

# Check for normality | |||

shapiro.test(mydata$weight) | |||

# Check for homogeneity of variance

library(car) | |||

leveneTest(weight ~ group, data = mydata) | |||

</syntaxhighlight> | |||
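The graphical check and Bartlett's test mentioned above need only base R. The sketch below is one possible variant, under the assumption that normality is better judged within each group (or on model residuals) than on the pooled response.

<syntaxhighlight lang='r'>
mydata <- PlantGrowth

# Shapiro-Wilk test within each group rather than on the pooled weights
shapiro_p <- tapply(mydata$weight, mydata$group,
                    function(w) shapiro.test(w)$p.value)
shapiro_p

# Q-Q plot of the residuals from the group-means model
fit <- lm(weight ~ group, data = mydata)
qqnorm(residuals(fit)); qqline(residuals(fit))

# Bartlett's test for homogeneity of variance (stats package)
bartlett_p <- bartlett.test(weight ~ group, data = mydata)$p.value
bartlett_p
</syntaxhighlight>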

== Nonparametric test: Wilcoxon rank-sum test/Mann–Whitney U test for 2-sample data == | |||

* [https://en.wikipedia.org/wiki/Mann%E2%80%93Whitney_U_test Mann–Whitney U test/Wilcoxon rank-sum test or Wilcoxon–Mann–Whitney test] | |||

* Sensitive to differences in location | |||

* [https://stat.ethz.ch/R-manual/R-patched/library/stats/html/wilcox.test.html wilcox.test()] | |||

* [https://www.statsandr.com/blog/wilcoxon-test-in-r-how-to-compare-2-groups-under-the-non-normality-assumption/ Wilcoxon test in R: how to compare 2 groups under the non-normality assumption] | |||

* [https://www.blopig.com/blog/2013/10/wilcoxon-mann-whitney-test-and-a-small-sample-size/ Wilcoxon-Mann-Whitney test and a small sample size]. A WMW test comparing S1=(1,2) with S2=(100,300) gives the same result as one comparing S1=(1,2) with S2=(4,5), because only the ranks are used. With a small sample size this is a great loss of information.
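
The small-sample point in the last item can be reproduced directly; this sketch uses the same toy samples quoted there.

<syntaxhighlight lang='r'>
s1 <- c(1, 2)

# Only the ranks matter, so both comparisons give the same exact p-value
p_far  <- wilcox.test(s1, c(100, 300))$p.value
p_near <- wilcox.test(s1, c(4, 5))$p.value
c(far = p_far, near = p_near)   # both equal 1/3

# The t-test, by contrast, uses the magnitudes of the observations
t_far  <- t.test(s1, c(100, 300))$p.value
t_near <- t.test(s1, c(4, 5))$p.value
c(far = t_far, near = t_near)
</syntaxhighlight>

With two observations per group the smallest attainable two-sided exact p-value is 1/3, so the rank-sum test cannot reach significance at the 0.05 level however far apart the samples are.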

=== Kruskal-Wallis tests for multiple samples === | |||

[[T-test#Kruskal-Wallis_one-way_analysis_of_variance|Kruskal-Wallis tests]] | |||

== Nonparametric test: Kolmogorov-Smirnov test ==

| Line 72: | Line 295: | ||

== Limma: Empirical Bayes method ==

<ul> | |||

<li>Some Bioconductor packages: limma, RnBeads, IMA, minfi packages. | |||

<li>The '''moderated t-statistic''' used in Limma is defined in Limma's [https://bioconductor.org/packages/release/bioc/vignettes/limma/inst/doc/usersguide.pdf#page=63 user guide].

* Limma assumes that the gene-wise variances come from a common prior distribution (a scaled inverse chi-square; equivalently, the precisions follow a Gamma distribution) and estimates this distribution from the data. The variance of each gene is then ''' "shrunk" ''' towards the prior value. The shrinkage is strongest when the residual degrees of freedom are small, which stabilizes the variance estimates in small experiments.

* '''The moderated t-statistics are then calculated from these shrunken variances'''; the log fold changes themselves are ordinary least squares coefficients and are not shrunk. This makes the t-statistics and p-values more stable, which matters when many genes are tested and multiple testing correction is applied, as is often the case in differential expression analysis.

<li>Moderated t-statistic

<math>
t_g = \frac{\hat{\beta}_g}{\sqrt{s_{g,\text{post}}^2 \cdot u_g^2}}, \quad
s_{g,\text{post}}^2 = \frac{d_0 s_0^2 + d_g s_g^2}{d_0 + d_g}
</math> with df = <math>d_0 + d_g</math>

where | |||

* <math>s_g^2</math>: observed sample variance for gene 𝑔 | |||

* <math>d_g</math>: residual degrees of freedom for gene 𝑔 | |||

* <math>s_0^2</math>: prior (pooled) variance across all genes | |||

* <math>d_0</math>: prior degrees of freedom, estimated by eBayes() using empirical Bayes | |||

* <math>u_g^2</math>: unscaled variance of the coefficient estimate (from design matrix) | |||

<li>Moderated t-statistic will become regular t-statistic if all genes have a common variance | |||

<math> | |||

t_g = \frac{\hat{\beta}_g}{\sqrt{s^2 \cdot u_g^2}} | |||

</math> | |||

with df = <math>d_g</math> | |||

<li>[https://online.stat.psu.edu/stat555/node/46/ From PennStat] | |||

<li>[https://support.bioconductor.org/p/6124/ Interpretation of the moderated t-statistics and B-statistics] | |||

<li>不同方法的结果比较 [https://zouhua.top/post/math_statistics/2022-10-27-da-methods-comparsion/#不同方法的结果比较 转录组差异分析(DESeq2+limma+edgeR+t-test/wilcox-test)总结]. | |||

<li>Diagram of usage [https://www.rdocumentation.org/packages/limma/versions/3.28.14/topics/makeContrasts ?makeContrasts], [https://www.rdocumentation.org/packages/limma/versions/3.28.14/topics/contrasts.fit ?contrasts.fit], [https://www.rdocumentation.org/packages/limma/versions/3.28.14/topics/ebayes ?eBayes] | |||

<pre>
                 lmFit         contrasts.fit          eBayes       topTable
              x ------> fit -------------------> fit2 -----> fit2 --------->
                   ^                    ^
                   |                    |
      model.matrix |      makeContrasts |
class ---------> design ----------> contrasts
</pre>

<li>Moderated t-test [https://www.rdocumentation.org/packages/MKmisc/versions/1.6/topics/mod.t.test mod.t.test()] from [https://cran.r-project.org/web/packages/MKmisc/index.html MKmisc] package

<li>[https://support.bioconductor.org/p/67984/ Correct assumptions of using limma moderated t-test] and the paper [http://journals.plos.org/plosone/article?id=10.1371/journal.pone.0012336 Should We Abandon the t-Test in the Analysis of Gene Expression Microarray Data: A Comparison of Variance Modeling Strategies]. | |||

* Evaluation: statistical power (figure 3, 4, 5), false-positive rate (table 2), execution time and ease of use (table 3) | |||

* Limma presents several advantages | |||

* RVM inflates the expected number of false-positives when sample size is small. On the other hand, RVM is very close to Limma, judging from either their formulas (p3 of the supporting info) or the hierarchical clustering (figure 2) of two examples.

* [https://www.slideshare.net/nahla0tammam/b4-jeanmougin Slides] | |||

<li>Comparison of ordinary T-statistic, RVM T-statistic and Limma/eBayes moderated T-statistic. | |||

{| class="wikitable" | |||

|- | |||

! !! Test statistic for gene g !! Variance estimate

|-

| [https://en.wikipedia.org/wiki/Student%27s_t-test#Equal_or_unequal_sample_sizes,_equal_variance Ordinary T-test] || <math> \frac{\overline{y}_{g1} - \overline{y}_{g2}}{S_g^{Pooled}\sqrt{1/n_1 + 1/n_2}}</math> || <math>(S_g^{Pooled})^2 = \frac{(n_1-1)S_{g1}^2 + (n_2-1)S_{g2}^2}{n_1+n_2-2} </math>

|-

| [https://academic.oup.com/bioinformatics/article/19/18/2448/194552 RVM] || <math> \frac{\overline{y}_{g1} - \overline{y}_{g2}}{S_g^{RVM}\sqrt{1/n_1 + 1/n_2}}</math> || <math>(S_g^{RVM})^2 = \frac{(n_1+n_2-2)S_{g}^2 + 2a(ab)^{-1}}{n_1+n_2-2+2a} </math>

|-

| Limma || <math> \frac{\overline{y}_{g1} - \overline{y}_{g2}}{S_g^{Limma}\sqrt{1/n_1 + 1/n_2}}</math> || <math>(S_g^{Limma})^2 = \frac{d_0 S_0^2 + d_g S_g^2}{d_0 + d_g} </math>

|} | |||

<li>In Limma, | |||

* <math>\sigma_g^2</math> assumes an inverse Chi-square distribution with mean <math>S_0^2</math> and <math>d_0</math> degrees of freedom | |||

* <math>d_0</math> (fit$df.prior) and <math>d_g</math> are, respectively, prior and residual/empirical degrees of freedom. | |||

* <math>S_0^2</math> (fit$s2.prior) is the prior variance and <math>S_g^2</math> (fit$sigma^2) is the pooled sample variance for gene g.

* <math>(S_g^{Limma})^2</math> can be obtained from fit$s2.post. | |||

<li>[https://arxiv.org/abs/1901.10679 Empirical Bayes estimation of normal means, accounting for uncertainty in estimated standard errors] Lu 2019 | |||

</ul> | |||
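The posterior variance and the moderated t-statistic defined above can be recomputed from the components of a fitted limma object. This is a sketch on simulated data (the data and variable names are illustrative assumptions); it relies on eBayes() estimating a finite prior degrees of freedom, which is expected here because the simulated gene-wise variances differ.

<syntaxhighlight lang='r'>
library(limma)
set.seed(1)

n_genes <- 200
group   <- factor(rep(c("A", "B"), each = 3))
design  <- model.matrix(~ group)

# Simulated log-expression with gene-specific variances (illustrative only)
sigma2 <- 0.25 * 5 / rchisq(n_genes, df = 5)
y <- matrix(rnorm(n_genes * 6, sd = rep(sqrt(sigma2), 6)), nrow = n_genes)

fit <- eBayes(lmFit(y, design))

d0   <- fit$df.prior      # prior degrees of freedom
s0sq <- fit$s2.prior      # prior variance
dg   <- fit$df.residual   # residual degrees of freedom per gene
sgsq <- fit$sigma^2       # gene-wise residual variance

# Posterior (shrunken) variance and moderated t for the group coefficient
s2_post <- (d0 * s0sq + dg * sgsq) / (d0 + dg)
t_mod   <- fit$coefficients[, 2] / (fit$stdev.unscaled[, 2] * sqrt(s2_post))

all.equal(s2_post, fit$s2.post, check.attributes = FALSE)
all.equal(t_mod, fit$t[, 2], check.attributes = FALSE)
</syntaxhighlight>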

=== Examples === | |||

<ul> | |||

<li>Examples of contrasts (search '''contrasts.fit''' and/or '''model.matrix''' from the user guide) | |||

<syntaxhighlight lang='r'> | |||

# Ex 1 (Single channel design):

design <- model.matrix(~ 0+factor(c(1,1,1,2,2,3,3,3))) # number of samples x number of groups

| Line 136: | Line 420: | ||

fit2 <- eBayes(fit2)

</syntaxhighlight>

<li>Example from user guide 17.3 (Mammary progenitor cell populations) | |||

<syntaxhighlight lang='r'> | |||

setwd("~/Downloads/IlluminaCaseStudy")

url <- c("http://bioinf.wehi.edu.au/marray/IlluminaCaseStudy/probe%20profile.txt.gz",

| Line 184: | Line 470: | ||

# [1] 24691 8

</syntaxhighlight>

<li>Three groups comparison (What is the difference of A vs Other AND A vs (B+C)/2?). [https://grokbase.com/t/r/bioconductor/092bnp4147/bioc-limma-contrasts-comparing-one-factor-to-multiple-others Contrasts comparing one factor to multiple others] | |||

<syntaxhighlight lang='r'> | |||

library(limma)

set.seed(1234)

| Line 231: | Line 519: | ||

# [1] 0.9640699

</syntaxhighlight> As we can see, the p-values returned from the first contrast are very small (large mean but small variance) but the p-values returned from the 2nd contrast are large (still large mean but very large variance). The variance from the "Other" group can be calculated from a mixture distribution ( pdf = .4 N(12, 1) + .6 N(5, 1), VarY = E(Y^2) - (EY)^2 where E(Y^2) = .4 (VarX1 + (EX1)^2) + .6 (VarX2 + (EX2)^2) = 73.6 and EY = .4 * 12 + .6 * 5 = 7.8; so VarY = 73.6 - 7.8^2 = 12.76).


</ul> | |||

=== logFC (= coefficient): single gene example === | |||

<ul> | |||

<li>logFC is just the '''coefficient''' for the group variable from the least squares estimate of a linear regression. However, the SE ('''moderated SE''') of logFC is calculated with an '''empirical Bayes''' method, in which the prior is estimated from the data rather than specified in advance.

* On the volcano plot generated by the RBiomirGS package, x-axis is labelled as "model coefficient". | |||

<li>limma method. Limma assumes you're analyzing log2-transformed expression data (like log2 intensity values from microarrays, or logCPM from RNA-seq with '''voom'''). If your expression matrix is on the raw scale, then the logFC output is '''not a log2 fold change'''. | |||

<pre> | |||

expr <- matrix(c(5, 6, 9, 10), nrow = 1) | |||

colnames(expr) <- c("Ctrl1", "Ctrl2", "Trt1", "Trt2") | |||

rownames(expr) <- "GeneA" | |||

group <- factor(c("Control", "Control", "Treatment", "Treatment")) | |||

design <- model.matrix(~ group) | |||

expr_log2 <- log2(expr) | |||

fit <- lmFit(expr_log2, design) | |||

fit <- eBayes(fit) | |||

topTable(fit) | |||

# Removing intercept from test coefficients | |||

# logFC AveExpr t P.Value adj.P.Val B | |||

# 1 0.7924813 2.849686 5.217193 0.03483078 0.03483078 -2.858822 | |||

</pre> | |||

<li>By hand method 1 (biologist): logFC = log2(mean(intensities in Trt) / mean(intensities in Ctrl) ) | |||

<pre> | |||

log2(mean(c(9, 10)) / mean(c(5, 6))) # [1] 0.7884959 | |||

</pre> | |||

<li>By hand method 2 (statistician): logFC = mean(log2(intensities in Trt)) - mean(log2(intensities in Ctrl)). <span style="color: red"><math>Y=\beta_0 + \beta_1 * \text{Treatment} + \epsilon </math></span>. The least squares coefficient estimate is <span style="color: red"><math>\log \text{FC} = \hat{\beta_1} = \bar{Y}_{Treatment} - \bar{Y}_{Control}</math></span>. In general, the least squares solution is <math>\hat{\boldsymbol{\beta}} = (X^\top X)^{-1} X^\top \boldsymbol{y}</math>.

<pre> | |||

mean(log2(c(9, 10))) - mean(log2(c(5, 6))) # [1] 0.7924813 | |||

</pre> | |||

<li>Why doesn’t limma use log2(mean_Treatment / mean_Control) as logFC? | |||

* Because limma is built around linear modeling of log-transformed data, and within that framework, the natural output is: <math>\log FC = \bar{Y}_{Treatment} - \bar{Y}_{Control}</math>. On log scale, the difference of means is the log2 fold change. | |||

* On raw scale, expression values often have heteroscedasticity (variance depends on mean). | |||

* The log2 of the ratio of means: <math>\log_2(\frac{\bar{Y}_{treatment}}{\bar{Y}_{control}}) </math> is non-linear and biased when data is skewed or noisy. Recall: <math>\log_2(\bar{y}) \neq \overline{\log_2(y)} </math>. By '''Jensen's inequality''', log(mean) <math>\geq</math> mean of logs; for example, log2(mean(c(9, 10)))=3.2479 and mean(log2(c(9, 10)))=3.2459. Because the inequality applies to both groups, the direction of the difference between the two logFC definitions after subtraction is not determined in general.

* In contrast, the difference of mean log2 values: <math>\overline{\log_2(Y_{treatment})} - \overline{\log_2(Y_{control})} </math> is linear, has better statistical properties (robust to skew and extreme values, unbiased under the normal-errors assumption), and is what regression models estimate directly.

<li>AveExpr: | |||

<pre> | |||

mean(log2(c(5, 6, 9, 10))) # [1] 2.849686 | |||

</pre> | |||

</ul> | |||
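The least squares formula quoted above can be applied directly to the GeneA values. This is a small base R sketch; the design matrix is written out by hand and is assumed to match what <code>model.matrix(~ group)</code> produces for this layout.

<syntaxhighlight lang='r'>
y <- log2(c(5, 6, 9, 10))                       # Ctrl1, Ctrl2, Trt1, Trt2
X <- cbind(Intercept = 1, Treatment = c(0, 0, 1, 1))

# beta_hat = (X'X)^{-1} X'y
beta_hat <- drop(solve(crossprod(X), crossprod(X, y)))
beta_hat

# The treatment coefficient equals the difference of the mean log2 values
all.equal(unname(beta_hat[2]), mean(y[3:4]) - mean(y[1:2]))
</syntaxhighlight>

The second coefficient is the logFC reported by limma for this gene (0.7924813), and the first is the mean log2 expression of the control group.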

=== logFC: multiple genes example === | |||

<ul> | |||

<li>limma | |||

<pre> | |||

# Raw expression matrix (genes × samples) | |||

expr <- matrix(c( | |||

# GeneA: Upregulated | |||

5, 6, 9, 10, | |||

# GeneB: No change | |||

5, 5, 5, 5, | |||

# GeneC: Downregulated | |||

10, 11, 5, 4 | |||

), nrow = 3, byrow = TRUE) | |||

rownames(expr) <- c("GeneA", "GeneB", "GeneC") | |||

colnames(expr) <- c("Ctrl1", "Ctrl2", "Trt1", "Trt2") | |||

# Group labels and design matrix | |||

group <- factor(c("Control", "Control", "Treatment", "Treatment")) | |||

design <- model.matrix(~ group) | |||

library(limma) | |||

expr_log2 <- log2(expr) | |||

fit <- lmFit(expr_log2, design) | |||

fit <- eBayes(fit) | |||

topTable(fit, number = Inf) | |||

# Removing intercept from test coefficients | |||

# logFC AveExpr t P.Value adj.P.Val B | |||

# GeneC -1.2297158 2.775822 -7.546268 0.01129495 0.0327472 -2.910716 | |||

# GeneA 0.7924813 2.849686 5.603761 0.02183147 0.0327472 -3.831430 | |||

# GeneB 0.0000000 2.321928 0.000000 1.00000000 1.0000000 -8.251181 | |||

</pre> | |||

<li>By hand

{| class="wikitable" | |||

|- | |||

! | |||

! mean(log2) difference [limma] | |||

! log2(mean ratio) | |||

|- | |||

| geneA | |||

| .792 =<br />mean(log2(c(9, 10))) - mean(log2(c(5, 6)))

| .788 = <br />log2(mean(c(9,10)) / mean(c(5,6))) | |||

|- | |||

| geneB | |||

| 0 | |||

| 0 | |||

|- | |||

| geneC | |||

| -1.229 =<br />mean(log2(c(5, 4))) - mean(log2(c(10, 11))) | |||

| -1.222 = <br />log2(mean(c(5, 4)) / mean(c(10, 11))) | |||

|} | |||

</ul> | |||
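Both columns of the table can be computed for all genes at once with row means. The sketch below rebuilds the same expression matrix; the index vectors <code>ctrl</code> and <code>trt</code> are illustrative names.

<syntaxhighlight lang='r'>
expr <- matrix(c( 5,  6, 9, 10,
                  5,  5, 5,  5,
                 10, 11, 5,  4),
               nrow = 3, byrow = TRUE,
               dimnames = list(c("GeneA", "GeneB", "GeneC"),
                               c("Ctrl1", "Ctrl2", "Trt1", "Trt2")))
ctrl <- 1:2
trt  <- 3:4

# limma-style logFC: difference of the mean log2 values
mean_log_diff <- rowMeans(log2(expr[, trt])) - rowMeans(log2(expr[, ctrl]))

# "Biologist" logFC: log2 of the ratio of the raw means
log_mean_ratio <- log2(rowMeans(expr[, trt]) / rowMeans(expr[, ctrl]))

round(cbind(mean_log_diff, log_mean_ratio), 4)
</syntaxhighlight>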

=== Use Limma to run ordinary T tests === | |||

[https://support.bioconductor.org/p/80398/ Use Limma to run ordinary T tests], [https://sahirbhatnagar.com/blog/2017/02/07/limma-moderated-and-ordinary-t-statistics/ Limma Moderated and Ordinary t-statistics] | |||

<syntaxhighlight lang='r'> | |||

# where 'fit' is the output from lmFit() or contrasts.fit().

unmod.t <- fit$coefficients/fit$stdev.unscaled/fit$sigma

| Line 267: | Line 648: | ||

# [1] 3.561284

</syntaxhighlight>

=== Very large logFC === | |||

<ul> | |||

<li>Low expression levels → unstable ratios. Filter out lowly expressed genes using filterByExpr() (recommended before voom()) | |||

<pre> | |||

boxplot(log2expr["GeneX", ] ~ group, main = "Expression of GeneX") | |||

</pre> | |||

<li>Small sample size → unstable estimates. With only 2–3 replicates per group, the regression coefficient (logFC) can be inflated due to sampling noise, and an underestimated standard error can make small differences look significant. Also look at '''fit$sigma["GeneX"]^2''', the gene-wise residual variance.

<pre> | |||

fit$stdev.unscaled["GeneX", ] # Unscaled standard deviation of the coefficients; multiply by fit$sigma["GeneX"] to get the unmoderated standard error

</pre> | |||

* | <li>Outliers | ||

** | <pre> | ||

** | plot(log2expr["GeneX", ], main = "GeneX Expression") | ||

text(1:length(group), log2expr["GeneX", ], labels = group) | |||

</pre> | |||

<li>Annotation or duplication artifacts. Multiple probes or genes may map to the same feature → some may be broken or misaligned. | |||

<li>Run the standard pipeline with filtering and voom weighting: | |||

<pre> | |||

keep <- filterByExpr(counts, group = group) | |||

counts_filtered <- counts[keep, ] | |||

v <- voom(counts_filtered, design) | |||

fit <- lmFit(v, design) | |||

fit <- eBayes(fit) | |||

topTable(fit) | |||

</pre> | |||

</ul> | |||

=== voom === | |||

voom() is part of the limma pipeline for RNA-seq count data, and it handles zero counts safely. | |||

What voom() does: | |||

# Takes raw count data (including zeros) | |||

# Applies a log2 transformation with precision weights | |||

# Automatically adds a small offset (prior count) to avoid log(0) | |||

# Returns log2-counts per million (logCPM) + weights | |||

# Enables use of lmFit() as usual | |||

What voom Adds Internally | |||

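* Adds a small offset before taking logs: log2((count + 0.5) / (library size + 1) * 1e6)

* Does not normalize between samples by default (normalize.method = "none"); quantile normalization is optional, and edgeR normalization factors are used when a DGEList is supplied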

* Computes observation-level weights to stabilize variance | |||

These features make voom robust to: | |||

* Low or zero counts | |||

* Mean–variance relationships | |||

* Heteroscedasticity (non-constant variance) | |||
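The offset step can be reproduced in base R. This is a minimal sketch, assuming plain column sums as library sizes (no TMM normalization factors) and the transformation documented for voom(), log2((count + 0.5) / (lib.size + 1) * 1e6). The count matrix and the names <code>lib_size</code> and <code>log_cpm</code> are illustrative only.

<syntaxhighlight lang="r">
# Toy count matrix (genes x samples) containing zeros; values are illustrative
counts <- matrix(c(0, 10, 5, 8,
                   3, 0, 2, 4,
                   100, 120, 90, 110),
                 nrow = 3, byrow = TRUE,
                 dimnames = list(c("GeneA", "GeneB", "GeneC"),
                                 c("Ctrl1", "Ctrl2", "Trt1", "Trt2")))

# Library size per sample (assumption: no normalization factors applied)
lib_size <- colSums(counts)

# Offsets of 0.5 (counts) and 1 (library size) keep log2() finite for zeros.
# t() is used so that each sample is divided by its own library size.
log_cpm <- t(log2(t(counts + 0.5) / (lib_size + 1) * 1e6))

log_cpm
all(is.finite(log_cpm))   # zero counts no longer produce -Inf
</syntaxhighlight>

The result should correspond to the E component returned by voom() under the same assumptions; the precision weights are a separate step that this sketch does not cover.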

<pre> | |||

library(limma) | |||

library(edgeR) | |||

# Sample count matrix (genes × samples) with zeroes | |||

counts <- matrix(c( | |||

0, 10, 5, 8, | |||

3, 0, 2, 4, | |||

100, 120, 90, 110 | |||

), nrow = 3, byrow = TRUE) | |||

rownames(counts) <- c("GeneA", "GeneB", "GeneC") | |||

colnames(counts) <- c("Ctrl1", "Ctrl2", "Trt1", "Trt2") | |||

# Define groups | |||

group <- factor(c("Control", "Control", "Treatment", "Treatment")) | |||

design <- model.matrix(~ group) | |||

# Convert to DGEList | |||

dge <- DGEList(counts = counts) | |||

# Apply voom | |||

v <- voom(dge, design) | |||

# Now use limma as usual | |||

fit <- lmFit(v, design) | |||

fit <- eBayes(fit) | |||

topTable(fit) | |||

# Removing intercept from test coefficients | |||

# logFC AveExpr t P.Value adj.P.Val B | |||

# GeneA 1.85057066 15.09360 1.1386362 0.2982669 0.4677039 -4.594564 | |||

# GeneB 1.40826333 14.18360 0.7751266 0.4677039 0.4677039 -4.595725 | |||

# GeneC -0.05108744 19.82172 -1.0416138 0.3377309 0.4677039 -4.978014 | |||

</pre> | |||

We can draw a plot of the voom mean-variance trend, where x = average log2(count size + 0.5) and y = square root of the residual standard deviation. | |||

<pre> | |||

v <- voom(dge, design, plot = TRUE) | |||

</pre> | |||

We expect to see a decreasing trend because random noise is proportionally larger in low-count data. That’s why '''voom() down-weights low-expression genes''' when fitting the linear model — they’re less reliable. | |||

Lowly expressed genes → higher estimated variance → lower weight. This reduces their influence on: | |||

* Estimated coefficients (logFC) | |||

* Standard errors | |||

* p-values | |||

* Multiple testing correction | |||

== t.test() vs aov() vs sva::f.pvalue() vs genefilter::rowttests() == | |||

* t.test(), aov(), sva::f.pvalue() and the ArrayTools (AT) function give equivalent p-values if we assume equal variances in the groups (not the default in t.test()). | |||

* See examples in [https://gist.github.com/arraytools/d2e07a5df3377465c39ecc98551f0c0f gist]. | |||

<pre> | |||

# Method 1: | |||

tmp <- data.frame(groups=groups.gem, | |||

x=combat_edata3["TDRD7", 29:40]) | |||

anova(aov(x ~ groups, data = tmp)) | |||

# Analysis of Variance Table | |||

# | |||

# Response: x | |||

# Df Sum Sq Mean Sq F value Pr(>F) | |||

# groups 1 0.0659 0.06591 0.1522 0.7047 | |||

# Method 2: | |||

t.test(combat_edata3["TDRD7", 29:40][groups.gem == "CR"], | |||

combat_edata3["TDRD7", 29:40][groups.gem == "PD"]) | |||

# 0.7134 | |||

t.test(combat_edata3["TDRD7", 29:40][groups.gem == "CR"], | |||

combat_edata3["TDRD7", 29:40][groups.gem == "PD"], var.equal = TRUE) | |||

# 0.7047 | |||

# Method 3: | |||

require(sva) | |||

pheno <- data.frame(groups.gem = groups.gem) | |||

mod = model.matrix(~as.factor(groups.gem), data = pheno) | |||

mod0 = model.matrix(~1, data = pheno) | |||

f.pvalue(combat_edata3[c("TDRD7", "COX7A2"), 29:40], | |||

mod, mod0) | |||

# TDRD7 COX7A2 | |||

# 0.7046624 0.2516682 | |||

# Method 4: | |||

# load some functions from AT randomForest plugin | |||

tmp2 <- Vat2(combat_edata3[c("TDRD7", "COX7A2"), 29:40], | |||

ifelse(groups.gem == "CR", 0, 1), | |||

grp=c(0,1), rvm = FALSE) | |||

tmp2$tp | |||

# TDRD7 COX7A2 | |||

# 0.7046624 0.2516682 | |||

# Method 5: https://stats.stackexchange.com/a/474335 | |||

# library(genefilter) | |||

# Method 6: | |||

# library(limma) | |||

</pre> | |||
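The objects used in the block above (combat_edata3, groups.gem, Vat2) belong to a specific analysis and are not available here. The following self-contained sketch uses simulated data with made-up group labels to check the same equivalence in base R: the one-way ANOVA F-test, the equal-variance t-test and the slope t-test from lm() give the same p-value, while Welch's t-test generally does not.

<syntaxhighlight lang="r">
set.seed(1)

# Simulated expression values for one gene in two groups (illustrative data)
grp <- factor(rep(c("CR", "PD"), each = 6))
x   <- rnorm(12, mean = ifelse(grp == "CR", 0, 0.5))

# One-way ANOVA p-value
p_aov <- anova(aov(x ~ grp))[["Pr(>F)"]][1]

# Equal-variance (pooled) two-sample t-test p-value
p_t <- t.test(x ~ grp, var.equal = TRUE)$p.value

# t-test of the group coefficient in a simple linear model
p_lm <- summary(lm(x ~ grp))$coefficients["grpPD", "Pr(>|t|)"]

c(aov = p_aov, t.test = p_t, lm = p_lm)   # all three agree

# Welch's t-test (the t.test() default) generally gives a different p-value
t.test(x ~ grp)$p.value
</syntaxhighlight>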

== sapply + lm() == | |||

[https://stackoverflow.com/a/12481500 using lm() in R for a series of independent fits]. Gene expression is the response (Y), with one column per gene (nG columns in total). We fit a separate regression for each column of Y against the same X. | |||
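A minimal sketch of this idea with simulated data follows. The sizes, the variable names (<code>Y</code>, <code>x</code>, <code>res</code>) and the two-group covariate are assumptions made for illustration. It also shows that lm() accepts a matrix response, which fits all columns in one call and returns the same coefficients.

<syntaxhighlight lang="r">
set.seed(1)
n  <- 10                                  # number of samples
nG <- 5                                   # number of genes (illustrative size)
x  <- rep(c(0, 1), each = n / 2)          # common covariate, e.g. a group indicator
Y  <- matrix(rnorm(n * nG), nrow = n,
             dimnames = list(NULL, paste0("gene", seq_len(nG))))

# One lm() per column of Y; keep the slope estimate and its p-value
res <- sapply(colnames(Y), function(g) {
  fit <- lm(Y[, g] ~ x)
  summary(fit)$coefficients["x", c("Estimate", "Pr(>|t|)")]
})
t(res)

# lm() with a matrix response fits every column against the same X at once
fit_all <- lm(Y ~ x)
coef(fit_all)["x", ]
</syntaxhighlight>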

== Treatment effect estimation == | == Treatment effect estimation == | ||

[https://projecteuclid.org/euclid.aos/1590480035 Model-assisted inference for treatment effects using regularized calibrated estimation with high-dimensional data] | [https://projecteuclid.org/euclid.aos/1590480035 Model-assisted inference for treatment effects using regularized calibrated estimation with high-dimensional data] | ||

== Permutation test | == A/B testing == | ||

* https://en.wikipedia.org/wiki/A/B_testing. See [https://www.reddit.com/r/ChatGPT/comments/1302yqn/anyone_else_seeing_modeltextdavinci002_in_the_url/ Anyone else seeing "model=text-davinci-002" in the URL whenever they try to use ChatGPT?] | |||

* [https://iyarlin.github.io/2024/07/10/better_ab_testing_with_survival/ Better A/B testing with survival analysis] | |||

== Degrees of freedom == | |||

* https://en.wikipedia.org/wiki/Degrees_of_freedom, [https://en.wikipedia.org/wiki/Degrees_of_freedom_(statistics) Degrees of freedom (statistics)] | |||

* t distribution. When the sample size is large, the df becomes very large and t distribution approximates the normal distribution. | |||

* Chi-squared distribution. When the degrees of freedom are large, the standardized chi-squared distribution approximates the normal distribution. | |||

* The degrees of freedom for a [https://en.wikipedia.org/wiki/Chi-squared_test chi-squared test] depend on the type of test being performed. Here are some common examples: | |||

** [https://www.statology.org/chi-square-goodness-of-fit-test-in-r/ Chi-squared goodness-of-fit test]: The degrees of freedom are equal to the number of categories minus 1. For example, if you are testing whether a six-sided die is fair, there are six categories (one for each side of the die), so the degrees of freedom would be 6 - 1 = 5. | |||

** Chi-squared test of independence: The degrees of freedom are equal to (number of rows - 1) * (number of columns - 1). For example, if you have a contingency table with 3 rows and 4 columns, the degrees of freedom would be (3 - 1) * (4 - 1) = 6. | |||

** Chi-squared test for homogeneity: The degrees of freedom are equal to (number of groups - 1) * (number of categories - 1). For example, if you have three groups and four categories, the degrees of freedom would be (3 - 1) * (4 - 1) = 6. | |||

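* By definition, the chi-squared distribution with ''k'' degrees of freedom is the distribution of the sum of squares of ''k'' independent standard normal random variables. | |||

* The square of a t-distributed random variable with df degrees of freedom follows F(1, df). An F random variable is the ratio of two independent chi-squared random variables, each divided by its degrees of freedom. | |||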
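These relationships can be checked numerically with the quantile functions in base R. The sketch below is illustrative only: the choice df = 10 and the count tables passed to chisq.test() are made up.

<syntaxhighlight lang="r">
df <- 10

# The square of a t quantile equals the matching F(1, df) quantile
all.equal(qt(0.975, df)^2, qf(0.95, 1, df))

# For large df, the t quantile approaches the normal quantile
c(t = qt(0.975, 1e6), normal = qnorm(0.975))

# Chi-squared with 1 df is the distribution of a squared standard normal
all.equal(qchisq(0.95, 1), qnorm(0.975)^2)

# Degrees of freedom reported by chisq.test()
gof <- chisq.test(c(8, 12, 9, 11, 10, 10))          # goodness of fit, 6 categories
gof$parameter                                       # df = 6 - 1

tab <- matrix(c(10, 12, 9, 11, 13, 8,
                9, 10, 12, 14, 8, 11), nrow = 3)    # 3 x 4 contingency table
chisq.test(tab)$parameter                           # df = (3 - 1) * (4 - 1)
</syntaxhighlight>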

= Permutation test = | |||

<ul> | <ul> | ||

<li>https://en.wikipedia.org/wiki/Permutation_test | |||

<li>[https://link.springer.com/book/10.1007/978-3-030-74361-1 Permutation Statistical Methods with R] by Berry, et al. | |||

<li>[https://modernstatisticswithr.com/modchapter.html 7 Modern classical statistics] from "Modern Statistics with R" by Thulin | |||

<li> | |||

<math>p_{value} = \frac{\# \{ |t^*| \ge |t_{obs}|\}}{\text{Total number of permutations}} </math> | |||

<li>Run a permutation test in R to compare the means of two groups. See also [https://faculty.washington.edu/kenrice/sisg/SISG-08-06.pdf Permutation tests] notes by Rice & Lumley | |||

<syntaxhighlight lang='R'> | |||

# example data | |||

group1 <- c(1, 2, 3, 4, 5) | |||

group2 <- c(6, 7, 8, 9, 10) | |||

# Calculate observed test statistic, assuming unequal variances, Welch's t-test | |||

calculate_t_statistic <- function(x, y) { | |||

n1 <- length(x) | |||

n2 <- length(y) | |||

mean_diff <- mean(x) - mean(y) | |||

var1 <- var(x) | |||

var2 <- var(y) | |||

stderr <- sqrt((var1 / n1) + (var2 / n2)) | |||

return(mean_diff / stderr) | |||

} | |||

observed_t <- calculate_t_statistic(group1, group2) | |||

# combine data into one vector | |||

combined_data <- c(group1, group2) | |||

# number of permutations | |||

n_permutations <- 1000 | |||

# initialize vector to store permuted test statistics | |||

permuted_stats <- numeric(n_permutations) | |||

set.seed(1) | |||

# run permutation test | |||

for (i in seq_len(n_permutations)) { | |||

# permute combined data | |||

permuted_data <- sample(combined_data) | |||

# split permuted data into two groups | |||

permuted_group1 <- permuted_data[seq_along(group1)] | |||

permuted_group2 <- permuted_data[(length(group1) + 1):length(combined_data)] | |||

# calculate permuted test statistic | |||

permuted_stats[i] <- calculate_t_statistic(permuted_group1, permuted_group2) | |||

} | |||

# calculate p-value | |||

p_value <- mean(abs(permuted_stats) >= abs(observed_t)) | |||

p_value | |||

# [1] 0.013 | |||

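# One-sided Welch t-test p-value (two-sided p-value halved), for comparison; | |||

# note that the permutation p-value above is two-sided because it uses abs() | |||

t.test(group1, group2)$p.value * 0.5 | |||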

# [1] 0.0005264129 | |||

</syntaxhighlight> | |||

Permutation tests may not always be as powerful as parametric t-tests when the assumptions of the t-test are met. This means that they may require larger sample sizes to achieve the same level of statistical power. | |||

<li>If the observed test statistic is counted as one of the permutations, the smallest possible p-value is 1 / (number of permutations + 1), where the +1 accounts for the observed test statistic; see [https://stats.stackexchange.com/a/112352 observed test statistic is included in one of permutations]. For example, if 1000 permutations are performed, the smallest possible p-value is 1 / (1000 + 1) ≈ 0.000999. See also [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC2687965/ Fewer permutations, more accurate P-values] (pseudo count). | |||

<li>Could permutation test p-value be zero? [https://arxiv.org/pdf/1603.05766.pdf Permutation P-values Should Never Be Zero: Calculating Exact P-values When Permutations Are Randomly Drawn] by Phipson and Smyth | |||

<li>[https://www.tandfonline.com/doi/abs/10.1080/00031305.2021.1902856?journalCode=utas20 When Your Permutation Test is Doomed to Fail]</li> | <li>[https://www.tandfonline.com/doi/abs/10.1080/00031305.2021.1902856?journalCode=utas20 When Your Permutation Test is Doomed to Fail]</li> | ||

<li>[https://pubmed.ncbi.nlm.nih.gov/21044043/ Permutation P-values should never be zero: calculating exact P-values when permutations are randomly drawn] Phipson & Smyth 2010</li> | <li>[https://pubmed.ncbi.nlm.nih.gov/21044043/ Permutation P-values should never be zero: calculating exact P-values when permutations are randomly drawn] Phipson & Smyth 2010</li> | ||

| Line 313: | Line 895: | ||

<li>In ArrayTools, LS score (= -sum (log p_j)) is always > 0. So the permutation p-value is calculated using one-sided. </li> | <li>In ArrayTools, LS score (= -sum (log p_j)) is always > 0. So the permutation p-value is calculated using one-sided. </li> | ||

<li>GSA package. [https://support.bioconductor.org/p/24160/ Correct p-value in GSA (Gene set enrichment) permutation tests?] Why it uses one-sided? </li> | <li>GSA package. [https://support.bioconductor.org/p/24160/ Correct p-value in GSA (Gene set enrichment) permutation tests?] Why it uses one-sided? </li> | ||

<li>[https://stats.stackexchange.com/questions/34052/two-sided-permutation-test-vs-two-one-sided Two-sided permutation test vs. two one-sided] </li> | <li>[https://stats.stackexchange.com/questions/34052/two-sided-permutation-test-vs-two-one-sided Two-sided permutation test vs. two one-sided] | ||

* [https://stats.oarc.ucla.edu/other/mult-pkg/faq/general/faq-what-are-the-differences-between-one-tailed-and-two-tailed-tests/ FAQ: What are the differences between one-tailed and two-tailed tests?] | |||

* [https://stats.stackexchange.com/a/73993 Why do we use a one-tailed test F-test in analysis of variance (ANOVA)?] | |||

</li> | |||

<li>https://faculty.washington.edu/kenrice/sisg/SISG-08-06.pdf </li> | <li>https://faculty.washington.edu/kenrice/sisg/SISG-08-06.pdf </li> | ||

<li>How about the [https://bioconductor.org/packages/release/bioc/html/fgsea.html fgsea] package? </li> | <li>How about the [https://bioconductor.org/packages/release/bioc/html/fgsea.html fgsea] package? </li> | ||

<li>Roughly estimate the number of permutations based on the desired FDR [https://support.bioconductor.org/p/111949/#112128 GSEA for RNA-seq analysis]</li> | <li>Roughly estimate the number of permutations based on the desired FDR [https://support.bioconductor.org/p/111949/#112128 GSEA for RNA-seq analysis]</li> | ||

</ul> | </ul> | ||

== How to choose B (number of random permutations) == | |||

[https://en.wikipedia.org/wiki/Binomial_proportion_confidence_interval Binomial proportion confidence interval] | |||

When you do a Monte-Carlo permutation test with '''B''' random permutations, the permutation p-value | |||

<math>\hat p=(r+1)/(B+1)</math> (where ''r'' is the number of permuted test statistics as or more extreme | |||

than observed) has sampling variability. A useful approximation (Wald interval/normal approximation) for the standard error of | |||

<math>\hat p</math> is: | |||

<math> | |||

\mathrm{SE}(\hat p) \approx \sqrt{\frac{p(1-p)}{B}} | |||

</math> | |||

(so ''r'' behaves like a binomial with parameters ''B'' and true ''p''). | |||

Rearrange to solve for ''B'': | |||

<math> | |||

B \approx \frac{p(1-p)}{\mathrm{SE}^2}. | |||

</math> | |||

=== Examples === | |||

Use ''p ≈ 0.05'' for planning around the significance threshold: | |||

<ul> | |||

<li>If you want '''SE ≤ 0.01''' (precision ±0.01): | |||

<math>B \approx 0.05\cdot0.95 / 0.01^2 = 475</math>. | |||

→ ~500 permutations. | |||

<li>If you want '''SE ≤ 0.005''': | |||

<math>B \approx 1900</math>. | |||

→ ~2,000 permutations. | |||

<li>If you want '''SE ≤ 0.001''' (very tight): | |||

<math>B \approx 47{,}500</math>. | |||

→ ~50,000 permutations. | |||

</ul> | |||
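The arithmetic in these examples can be wrapped in a small helper. The function name <code>perm_B</code> is hypothetical, and the result should be rounded up in practice.

<syntaxhighlight lang="r">
# Approximate number of permutations B such that SE(p_hat) is about `se`,
# planning around an anticipated p-value `p` (normal approximation)
perm_B <- function(p, se) p * (1 - p) / se^2

B_needed <- perm_B(p = 0.05, se = c(0.01, 0.005, 0.001))
B_needed

# Conversely, the standard error achieved with a given B
sqrt(0.05 * 0.95 / 5000)
</syntaxhighlight>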

=== Other practical rules === | |||

* '''Resolution rule:''' your smallest nonzero p-value is <math>1/(B+1)</math>. If you want to report p-values as small as 0.001, you need ''B ≥ 999''. | |||

* If you expect very small true p-values (e.g. <math><10^{-4}</math>), you need ''B'' in the tens or hundreds of thousands. | |||

=== Recommended practical strategy (adaptive & efficient) === | |||

# Start moderate: run '''B = 5,000''' permutations. | |||

# If <math>\hat p</math> is far from your threshold (e.g. <math>\hat p > 0.1</math> or <math>\hat p < 0.01</math>), that may be enough. | |||

# If <math>\hat p</math> is near the decision boundary (around 0.05), increase ''B'' to 20k–50k. | |||

# When you need very small p-values (e.g. <0.001), increase to 100k–200k (or perform exhaustive permutation if feasible). | |||

Because you have only <math>\binom{28}{15}\approx 37</math> million possible permutations, you can sample randomly without replacement from that finite set. If you can afford it computationally, you could also do all 37M (exact permutations), but usually random sampling with ''B'' as above is adequate. | |||
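The count of distinct splits can be verified with choose(). For small samples, exhaustive enumeration is straightforward with combn(). The sketch below is an illustration, not the method used elsewhere on this page: it uses the difference in means as the test statistic (an assumption made for simplicity, instead of Welch's t) and two toy groups of five values each.

<syntaxhighlight lang="r">
# Number of distinct two-group splits for group sizes 15 and 13
choose(28, 15)

# Exact (exhaustive) permutation test for a small toy example
g1 <- c(1, 2, 3, 4, 5)
g2 <- c(6, 7, 8, 9, 10)
pooled <- c(g1, g2)
obs <- mean(g1) - mean(g2)

# Each column of idx holds the indices assigned to group 1 in one split
idx <- combn(length(pooled), length(g1))
perm_diff <- apply(idx, 2, function(i) mean(pooled[i]) - mean(pooled[-i]))

# Two-sided exact p-value; the observed split is one of the enumerated splits
p_exact <- mean(abs(perm_diff) >= abs(obs))
p_exact
</syntaxhighlight>

With all 252 splits enumerated there is no Monte Carlo error, so no confidence interval for the p-value is needed.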

=== R code snippet === | |||

<syntaxhighlight lang="r"> | |||

# r = number of permuted statistics >= observed | |||

# B = number of permutations | |||

r <- 12 | |||

B <- 5000 | |||

# Monte Carlo permutation p-value | |||

p_hat <- (r + 1) / (B + 1) | |||

# Exact 95% CI for the true p using Clopper-Pearson interval | |||

ci <- binom.test(r, B)$conf.int | |||

p_hat; ci | |||

# [1] 0.00259948 | |||

# [1] 0.001240711 0.004188560 | |||

# attr(,"conf.level") | |||

# [1] 0.95 | |||

c(p_hat -1.96 * sqrt(p_hat * (1-p_hat)/B), p_hat + 1.96 * sqrt(p_hat * (1-p_hat)/B)) | |||

# [1] 0.001188083 0.004010877 | |||

</syntaxhighlight> | |||

* Check whether the CI overlaps α=0.05 (suppose your nominal α=0.05). | |||

* If the entire CI < 0.05 → strong evidence that <math>p_{true} < \alpha</math>. You can reject the null. | |||

* If the CI includes 0.05 → you can’t be certain; the true p-value could be slightly above 0.05. | |||

* If the entire CI > 0.05 → clearly non-significant. | |||

=== How to report uncertainty === | |||

When you report permutation results, include either: | |||

* the Monte Carlo p-value <math>(r+1)/(B+1)</math> '''and''' a confidence interval for the true p (e.g. from <code>binom.test(r, B)</code> in R), or | |||

* state the number of permutations ''B'', so readers know the resolution (e.g. “p = 0.003 (based on 10,000 permutations, smallest possible nonzero p = 1/10001 ≈ 1.0e-4)”). | |||

=== Short recommendation for your case (n = 15 and 13, exhaustive = 37M) === | |||

* If you only care about whether p < 0.05 with reasonable precision, '''B = 5,000–10,000''' is fine. | |||

* If your observed <math>\hat p</math> is near 0.05, increase to '''20k–50k'''. | |||

* If you need very small p-values (e.g. <0.001) or high precision, try '''50k–200k''' (or more), or perform exhaustive permutation if you can afford it. | |||

= ANOVA = | = ANOVA = | ||

| Line 333: | Line 1,004: | ||

== Partition of sum of squares == | == Partition of sum of squares == | ||

https://en.wikipedia.org/wiki/Partition_of_sums_of_squares | https://en.wikipedia.org/wiki/Partition_of_sums_of_squares | ||

== Extract p-values == | |||

<syntaxhighlight lang='r'> | |||

my_anova <- aov(response_variable ~ factor_variable, data = my_data) | |||

# Extract the p-value | |||

p_value <- summary(my_anova)[[1]]$'Pr(>F)'[1] | |||

</syntaxhighlight> | |||

aov() allows multiple response variables. In a single response variable case, we still need to use <nowiki>[[1]]</nowiki> to subset the result. | |||
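A runnable version of the same idea, using the PlantGrowth data set that ships with R (the object names <code>fit</code> and <code>p_value</code> are illustrative). It also shows an equivalent route through anova(), which returns a data frame directly and therefore needs no <nowiki>[[1]]</nowiki>.

<syntaxhighlight lang="r">
# PlantGrowth: weight (numeric) and group (factor with 3 levels)
fit <- aov(weight ~ group, data = PlantGrowth)

# summary() returns a list with one table per response; [[1]] selects it
p_value <- summary(fit)[[1]][["Pr(>F)"]][1]
p_value

# Equivalent: anova() returns the table as a data frame
p_value2 <- anova(fit)[["Pr(>F)"]][1]
p_value2
</syntaxhighlight>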

== F-test in anova == | == F-test in anova == | ||

[https://statisticaloddsandends.wordpress.com/2020/11/03/how-is-the-f-statistic-computed-in-anova-when-there-are-multiple-models/ How is the F-statistic computed in anova() when there are multiple models?] | <ul> | ||

<li>[https://statisticaloddsandends.wordpress.com/2020/11/03/how-is-the-f-statistic-computed-in-anova-when-there-are-multiple-models/ How is the F-statistic computed in anova() when there are multiple models?] | |||

<li>With | |||

<syntaxhighlight lang='r'> | |||

set.seed(123) | |||

n <- 20 | |||

x1 <- rnorm(n) | |||

x2 <- rnorm(n) | |||

x3 <- rnorm(n) | |||

x4 <- rnorm(n) | |||

# true model: y depends only on x1 and x2 | |||

y <- 3 + 2*x1 - 1.5*x2 + rnorm(n, sd = 1) | |||

toy <- data.frame(y, x1, x2, x3, x4) | |||

toy | |||

m1 <- lm(y ~ x1 + x2, data = toy) # df1=n-p1=n-3 | |||

m2 <- lm(y ~ x1 + x2 + x3 + x4, data = toy) # df2=n-p2=n-5, the larger model | |||

anova(m1, m2) | |||

</syntaxhighlight> | |||

you are testing: | |||

* H₀: β₃ = β₄ = 0 (the extra predictors x3 and x4 add no linear effect) | |||

* H₁: at least one of β₃, β₄ ≠ 0 | |||

Note that you need to restrict both models to observations where x1, x2, x3, x4, and y are all non-missing. | |||

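The F statistic is <math>F = \frac{(RSS_1 - RSS_2)/(df_1 - df_2)}{RSS_2/df_2} </math>, where <math>RSS_i</math> and <math>df_i</math> are the residual sum of squares and residual degrees of freedom of model ''i''. | |||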

In R, | |||

<syntaxhighlight lang='r'> | |||

RSS1 <- sum(resid(m1)^2) | |||

RSS2 <- sum(resid(m2)^2) | |||

df1_df2 <- df.residual(m1) - df.residual(m2) # numerator df | |||

df2 <- df.residual(m2) # denominator df | |||

F_value <- ((RSS1 - RSS2)/df1_df2) / (RSS2/df2) | |||

F_value | |||

pf(F_value, df1_df2, df2, lower.tail = FALSE) # 0.08039, same as anova() result | |||

</syntaxhighlight> | |||

lm() fits by ordinary least squares (OLS), not maximum likelihood (ML). anova() computes an extra sum-of-squares F-test, not a likelihood ratio test. Cf. the LRT follows a χ²(df difference) distribution. The LRT statistic can be calculated by '''lrt <- 2 * (logLik(m2) - logLik(m1))''' and p-value '''pchisq(lrt, df = df.residual(m1) - df.residual(m2), lower.tail = FALSE) ''' | |||

<syntaxhighlight lang='r'> | |||

lrt <- 2*(logLik(m2) - logLik(m1)) | |||

df_diff <- attr(logLik(m2), "df") - attr(logLik(m1), "df") | |||

p_LRT <- pchisq(lrt, df = df_diff, lower.tail = FALSE) | |||

lrt # 'log Lik.' 6.722214 (df=6) | |||

p_LRT # 'log Lik.' 0.03469683 (df=6) | |||

</syntaxhighlight> | |||

For small sample sizes, the F reference distribution (which accounts for estimating the error variance through its denominator df) is heavier-tailed than the χ² approximation. This means: | |||

* F-test p-value tends to be larger | |||

* LRT p-value tends to be smaller | |||

For large samples, they become nearly identical. | |||

</ul> | |||

== F-test and likelihood ratio test == | |||

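Since F is a monotone function of the likelihood ratio statistic, the F-test is equivalent to an exact likelihood ratio test; see [https://en.wikipedia.org/wiki/F-test Wikipedia]. The χ² reference distribution for the LRT is only a large-sample approximation, which is why the two p-values can differ in small samples. | |||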
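For nested Gaussian linear models the monotone relationship can be written explicitly. With ''n'' observations, ''q'' extra parameters and residual degrees of freedom <math>df_2</math> in the larger model, <math>LRT = n \log(RSS_1/RSS_2) = n \log(1 + qF/df_2)</math>. The sketch below checks this identity numerically on simulated data; the variable names are illustrative.

<syntaxhighlight lang="r">
set.seed(123)
n  <- 20
x1 <- rnorm(n); x2 <- rnorm(n); x3 <- rnorm(n); x4 <- rnorm(n)
y  <- 3 + 2 * x1 - 1.5 * x2 + rnorm(n)

m1 <- lm(y ~ x1 + x2)                 # smaller model
m2 <- lm(y ~ x1 + x2 + x3 + x4)       # larger model

F_value <- anova(m1, m2)$F[2]
q   <- df.residual(m1) - df.residual(m2)   # number of extra parameters
df2 <- df.residual(m2)                     # residual df of the larger model

# LRT statistic recovered from F, and computed directly from the log-likelihoods
lrt_from_F <- n * log(1 + q * F_value / df2)
lrt_direct <- as.numeric(2 * (logLik(m2) - logLik(m1)))

all.equal(lrt_from_F, lrt_direct)
</syntaxhighlight>

Because the map from F to the LRT statistic is strictly increasing, both statistics order the data identically; only the reference distribution (exact F versus asymptotic χ²) differs.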

== Common tests are linear models == | == Common tests are linear models == | ||

| Line 342: | Line 1,084: | ||

== ANOVA Vs Multiple Comparisons == | == ANOVA Vs Multiple Comparisons == | ||

[https://predictivehacks.com/anova-vs-multiple-comparisons/ ANOVA Vs Multiple Comparisons] | [https://predictivehacks.com/anova-vs-multiple-comparisons/ ANOVA Vs Multiple Comparisons] | ||

== Descriptive Analysis by Groups == | |||

[https://cran.r-project.org/web/packages/compareGroups/index.html compareGroups]: Descriptive Analysis by Groups. Cf tableone package. | |||

== Post-hoc test == | == Post-hoc test == | ||

Determine which levels have significantly different means. | Determine which levels have significantly different means. | ||

<ul> | |||

<li>http://jamesmarquezportfolio.com/one_way_anova_with_post_hocs_in_r.html | |||

<li>[https://stats.idre.ucla.edu/r/faq/how-can-i-do-post-hoc-pairwise-comparisons-in-r/ pairwise.t.test()] for one-way ANOVA | |||

<li>[https://www.r-bloggers.com/post-hoc-pairwise-comparisons-of-two-way-anova/ Post-hoc Pairwise Comparisons of Two-way ANOVA] using TukeyHSD(). | |||

<li>The "TukeyHSD()" function is then used to perform a post-hoc multiple comparisons test to compare the treatment means, taking into account the effect of the block variable. | |||

<pre> | |||

summary(fm1 <- aov(breaks ~ wool + tension, data = warpbreaks)) | |||

# Df Sum Sq Mean Sq F value Pr(>F) | |||

# wool 1 451 450.7 3.339 0.07361 . | |||

# tension 2 2034 1017.1 7.537 0.00138 ** | |||

# Residuals 50 6748 135.0 | |||

TukeyHSD(fm1, "tension", ordered = TRUE) | |||

# Tukey multiple comparisons of means | |||

# 95% family-wise confidence level | |||

# factor levels have been ordered | |||

# | |||

# Fit: aov(formula = breaks ~ wool + tension, data = warpbreaks) | |||

# | |||

# $tension | |||

# diff lwr upr p adj | |||

# M-H 4.722222 -4.6311985 14.07564 0.4474210 | |||

# L-H 14.722222 5.3688015 24.07564 0.0011218 | |||

# L-M 10.000000 0.6465793 19.35342 0.0336262 | |||

tapply(warpbreaks$breaks, warpbreaks$tension, mean) | |||

# L M H | |||

# 36.38889 26.38889 21.66667 | |||

26.38889 - 21.66667 # [1] 4.72222 # M-H | |||

36.38889 - 21.66667 # [1] 14.72222 # L-H | |||

36.38889 - 26.38889 # [1] 10 # L-M | |||

plot(TukeyHSD(fm1, "tension")) | |||

</pre> | |||

<li>post-hoc tests: pairwise.t.test versus TukeyHSD test | |||

<li>[https://finnstats.com/index.php/2021/08/27/how-to-perform-dunnetts-test-in-r/ How to Perform Dunnett’s Test in R] | |||

<li>[https://datasciencetut.com/how-to-do-pairwise-comparisons-in-r/ How to do Pairwise Comparisons in R?] | |||

<li>https://www.statology.org/tukey-vs-bonferroni-vs-scheffe/ | |||

</ul> | |||
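To compare pairwise.t.test() with TukeyHSD() side by side, here is a minimal sketch on the built-in warpbreaks data. It uses a one-way model in tension only, and the Bonferroni adjustment is an arbitrary choice for illustration; both are assumptions, not a recommendation.

<syntaxhighlight lang="r">
# One-way model on the built-in warpbreaks data
fit <- aov(breaks ~ tension, data = warpbreaks)

# Tukey HSD: adjusted p-values based on the studentized range distribution
tk <- TukeyHSD(fit, "tension")
tk$tension[, "p adj"]

# Pairwise t-tests with a pooled SD and Bonferroni-adjusted p-values
pw <- pairwise.t.test(warpbreaks$breaks, warpbreaks$tension,
                      p.adjust.method = "bonferroni")
pw$p.value
</syntaxhighlight>

Both approaches test the same three pairwise differences; they differ in how the family-wise error rate is controlled.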

== TukeyHSD (Honestly Significant Difference), diagnostic checking == | == TukeyHSD (Honestly Significant Difference), diagnostic checking == | ||

| Line 359: | Line 1,137: | ||

** compute something analogous to a t score for each pair of means, but you don’t compare it to the Student’s t distribution. Instead, you use a new distribution called the '''[https://en.wikipedia.org/wiki/Studentized_range_distribution studentized range]''' (from Wikipedia) or '''q distribution'''. | ** compute something analogous to a t score for each pair of means, but you don’t compare it to the Student’s t distribution. Instead, you use a new distribution called the '''[https://en.wikipedia.org/wiki/Studentized_range_distribution studentized range]''' (from Wikipedia) or '''q distribution'''. | ||

** Suppose that we take a sample of size ''n'' from each of ''k'' populations with the same normal distribution ''N''(''μ'', ''σ'') and suppose that <math>\bar{y}</math><sub>min</sub> is the smallest of these sample means and <math>\bar{y}</math><sub>max</sub> is the largest of these sample means, and suppose ''S''<sup>2</sup> is the pooled sample variance from these samples. Then the following random variable has a Studentized range distribution: <math>q = \frac{\overline{y}_{\max} - \overline{y}_{\min}}{S/\sqrt{n}}</math> | ** Suppose that we take a sample of size ''n'' from each of ''k'' populations with the same normal distribution ''N''(''μ'', ''σ'') and suppose that <math>\bar{y}</math><sub>min</sub> is the smallest of these sample means and <math>\bar{y}</math><sub>max</sub> is the largest of these sample means, and suppose ''S''<sup>2</sup> is the pooled sample variance from these samples. Then the following random variable has a Studentized range distribution: <math>q = \frac{\overline{y}_{\max} - \overline{y}_{\min}}{S/\sqrt{n}}</math> | ||

** [http://www.sthda.com/english/wiki/one-way-anova-test-in-r#tukey-multiple-pairwise-comparisons One-Way ANOVA Test in R] from sthda.com. <syntaxhighlight lang=' | ** [http://www.sthda.com/english/wiki/one-way-anova-test-in-r#tukey-multiple-pairwise-comparisons One-Way ANOVA Test in R] from sthda.com. <syntaxhighlight lang='r'> | ||

res.aov <- aov(weight ~ group, data = PlantGrowth) | res.aov <- aov(weight ~ group, data = PlantGrowth) | ||

summary(res.aov) | summary(res.aov) | ||

| Line 393: | Line 1,171: | ||

) | ) | ||

</syntaxhighlight> | </syntaxhighlight> | ||

** Or we can use Benjamini-Hochberg method for p-value adjustment in pairwise comparisons <syntaxhighlight lang=' | ** Or we can use Benjamini-Hochberg method for p-value adjustment in pairwise comparisons <syntaxhighlight lang='r'> | ||

library(multcomp) | library(multcomp) | ||

pairwise.t.test(my_data$weight, my_data$group, | pairwise.t.test(my_data$weight, my_data$group, | ||

| Line 403: | Line 1,181: | ||

# P value adjustment method: BH | # P value adjustment method: BH | ||

</syntaxhighlight> | </syntaxhighlight> | ||

* Shapiro-Wilk test for normality <syntaxhighlight lang=' | * Shapiro-Wilk test for normality <syntaxhighlight lang='r'> | ||

# Extract the residuals | # Extract the residuals | ||

aov_residuals <- residuals(object = res.aov ) | aov_residuals <- residuals(object = res.aov ) | ||

| Line 412: | Line 1,190: | ||

== Repeated measure == | == Repeated measure == | ||

<ul> | |||

<li>[https://neuropsychology.github.io/psycho.R//2018/05/01/repeated_measure_anovas.html How to do Repeated Measures ANOVAs in R] | |||

<li>[https://onlinecourses.science.psu.edu/stat502/node/206 Cross-over Repeated Measure Designs] | |||

<li>[https://www.rdatagen.net/post/when-the-research-question-doesn-t-fit-nicely-into-a-standard-study-design/ Cross-over study design with a major constraint] | |||

<li>[https://finnstats.com/index.php/2021/04/06/repeated-measures-of-anova-in-r/ Repeated Measures of ANOVA in R Complete Tutorial] | |||

<li>[https://stats.stackexchange.com/a/287671 How to write the error term in repeated measures ANOVA in R: Error(subject) vs Error(subject/time)] | |||

<li>[https://stats.stackexchange.com/a/51525 Specifying the Error() term in repeated measures ANOVA in R] | |||

<li>Simple example of using Error(). | |||

<pre> | |||

aov(y ~ x + Error(random_factor), data=mydata) | |||

# y=yield of a crop (measured in bushels per acre) | |||

# x=fertilizer: fertilizer treatment (1 = control, 2 = treatment A, 3 = treatment B) | |||

# random_factor=field (1, 2, 3, ...) | |||

# H1: there is a difference in yield due to fertilizer treatment, | |||

# while accounting for the fact that the fields may have different yields. | |||

</pre> | |||

* The Error() term specifies an error stratum for the random factor. Observations that share a level of the random factor (here, the same field) are not assumed to be independent of one another, and the fixed effects are tested against the error term of the appropriate stratum. | |||

* This aov() model assumes that the yield from different fields might be different, and the error term should be adjusted accordingly. By including the random factor "field" in the Error() function, we are accounting for the fact that the fields may have different yields. The analysis of variance will test whether there is a significant difference in yield due to fertilizer treatment, while adjusting for the difference in yields between fields. | |||

<li>A more complicated example | |||

<pre> | |||

aov(y ~ x + Error(subject/x) ) | |||

# y: test scores (measured in percentage) | |||

# x: teaching method (1 = traditional, 2 = online) | |||

# subject: student ID | |||

# H1: We want to test whether there is a difference in test scores due to teaching method, | |||

# while accounting for the fact that different students may have different baseline test scores. | |||

</pre> | |||

* The formula "y ~ x + Error(subject/x)" has a fixed factor "x" and a random factor "subject". The term "subject/x" expands to subject + subject:x, i.e. "x" within "subject": each subject is measured under every level of "x" (here, each student is tested under both teaching methods). | |||

* By including "subject" in Error(), we account for the fact that different students may have different baseline test scores, so observations from the same student are not treated as independent. | |||

* The subject:x stratum lets the effect of the teaching method vary from student to student; the effect of "x" is tested against this within-subject error term rather than against the between-subject variation. | |||

<li>[https://www.statology.org/nested-anova-in-r/ How to Perform a Nested ANOVA in R (Step-by-Step)]. Note the number of subjects (technicians, the random factor, nested within fertilizer, the fixed factor) are different for each level of the fixed factor. | |||

</ul> | |||
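The two Error() patterns discussed in this section can be tried on runnable data. The sketch below is illustrative only. The first model uses the npk data set shipped with R, with block as the random factor. The second uses simulated test scores in which every student is measured under both teaching methods; the data, effect sizes and object names are made up.

<syntaxhighlight lang="r">
# 1. Random factor as an error stratum: nitrogen effect on pea yield within blocks
fit_block <- aov(yield ~ N + Error(block), data = npk)
summary(fit_block)

# 2. Within-subject factor: each student is tested under both methods
set.seed(1)
n_subj  <- 8
subject <- factor(rep(seq_len(n_subj), times = 2))
method  <- factor(rep(c("traditional", "online"), each = n_subj))
baseline <- rep(rnorm(n_subj, sd = 5), times = 2)       # student-specific level
score   <- 70 + baseline + ifelse(method == "online", 3, 0) +
           rnorm(2 * n_subj, sd = 2)
d <- data.frame(subject, method, score)

fit_rm <- aov(score ~ method + Error(subject/method), data = d)
summary(fit_rm)

# With only two methods this corresponds to a paired t-test on the same data
t.test(d$score[d$method == "online"],
       d$score[d$method == "traditional"], paired = TRUE)$p.value
</syntaxhighlight>

In the second model the method effect appears in the subject:method stratum, so it is judged against within-student variation and not against differences between students.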

== Nested == | |||

See the [[#Repeated_measure|repeated measure ANOVA]] section. | |||

== Combining insignificant factor levels == | == Combining insignificant factor levels == | ||

| Line 426: | Line 1,240: | ||

== One-way ANOVA == | == One-way ANOVA == | ||

https://www.mathstat.dal.ca/~stat2080/Fall14/Lecturenotes/anova1.pdf | https://www.mathstat.dal.ca/~stat2080/Fall14/Lecturenotes/anova1.pdf | ||

== Randomized block design == | |||

<ul> | |||

<li>What is a '''randomized block design'''? | |||

* In a randomized block design, the subjects are first divided into blocks based on their similarities, and then they are randomly assigned to the treatment groups within each block. | |||

<li>How to interpret the result from a randomized block design? | |||

* If the results are statistically significant, it means that there is a significant difference between the treatment groups in terms of the response variable. This indicates that the treatment had an effect on the response variable, and that this effect is not likely due to chance alone. | |||

<li>How to incorporate the block variable in the interpretation? | |||

* In a randomized block design, the block variable is a characteristic that is used to group the subjects or experimental units into blocks. The goal of using a block variable is to control for the effects of this characteristic, so that the effects of the experimental variables can be more accurately measured. | |||

* To incorporate the block variable into the interpretation of the results, you will need to consider whether the block variable had an effect on the response variable. | |||

* A significant block effect means that the blocks differ in their average response, which is exactly the variation that blocking is meant to remove from the error term. Because every treatment appears in every block in a complete block design, the block variable is balanced across treatments, so a significant block effect does not by itself mean that the treatment comparison is '''confounded''' by the block variable. It may still be worth considering whether the treatment effects differ between blocks, that is, whether the block variable interacts with the treatment.

<li>What does it mean when the result is '''confounded''' by a variable?

* If the results of an experiment are '''confounded''' by a variable, it means that the variable is influencing the results in some way and making it difficult to interpret the effects of the experimental treatment. This can occur when the variable is correlated with both the treatment and the response variable, and it can lead to incorrect conclusions about the relationship between the treatment and the response. | |||

* For example, consider an experiment in which the treatment is a drug that is being tested for its ability to lower blood pressure. Suppose the subjects were not randomized and younger subjects were more likely to receive the drug than older subjects. If the treated group shows lower blood pressure, this could be because the drug works, or it could be because blood pressure tends to be higher in older subjects, who are under-represented in the treated group. In this case, the results would be confounded by age, and it would be difficult to draw conclusions about the effectiveness of the drug without taking age into account. (If the drug is simply more effective in younger subjects than in older subjects, that is an interaction between age and treatment rather than confounding.)

* To avoid confounding, it is important to carefully control for any variables that could potentially influence the results of the experiment. This may involve stratifying the sample, using a matching or blocking design, or controlling for the variable in the statistical analysis. By controlling for confounding variables, you can more accurately interpret the results of the experiment and draw valid conclusions about the relationship between the treatment and the response. | |||
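A small simulation can make the idea concrete. The sketch below assumes a non-randomized study in which younger subjects are more likely to receive the drug; all numbers (a true drug effect of -5, an age effect of +15) are invented for illustration.

<pre>
set.seed(10)
n    <- 2000
old  <- rbinom(n, 1, 0.5)                          # 1 = older subject
# Assumption: younger subjects are far more likely to be treated
drug <- rbinom(n, 1, ifelse(old == 1, 0.2, 0.8))
bp   <- 120 + 15 * old - 5 * drug + rnorm(n, sd = 5)

naive    <- unname(coef(lm(bp ~ drug))["drug"])        # ignores age
adjusted <- unname(coef(lm(bp ~ drug + old))["drug"])  # controls for age
c(naive = naive, adjusted = adjusted)
</pre>

The naive estimate mixes the drug effect with the age difference between the groups, while the adjusted estimate should be close to the assumed true effect of -5.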

<li>What are the advantages of a randomized block design? | |||

* Control for extraneous variables: By grouping subjects into blocks based on a similar characteristic, a randomized block design can help to control for the effects of this characteristic on the response variable. This makes it easier to accurately measure the effects of the experimental variables. | |||

* Increased precision: Comparing treatments within blocks removes the block-to-block variation from the error term, which reduces the residual variance. This increases the power of the statistical analysis and makes it more likely to detect true differences between the treatment groups.

* Reduced sample size: When the blocking variable is strongly related to the response, a randomized block design typically requires a smaller sample size than a completely randomized design to achieve the same level of precision. This makes the research more cost-effective and logistically easier to conduct.

* Identify the characteristics that affect the response: The block design can help to identify the characteristics of subjects or experimental units that affect the response variable. This can help to identify important factors that should be controlled for in future experiments or can be used to improve the understanding of the phenomena being studied. | |||

* Multiple comparisons: After a significant treatment effect, the treatment groups can be compared pairwise (for example with Tukey's HSD) using the block-adjusted error term, which can help to identify the specific groups that contribute to the overall result.
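The precision argument can be sketched by fitting the same simulated data with and without the block term and comparing the residual mean squares. The block effects (sd = 3) and the treatment effect (+2 for B) are assumptions chosen for illustration.

<pre>
set.seed(1)
block     <- gl(5, 3)                               # 5 blocks, 3 units each
treatment <- factor(rep(c("A", "B", "C"), 5))
block_eff <- rnorm(5, sd = 3)                       # assumed block-to-block variation
yield <- 10 + block_eff[as.integer(block)] +
  ifelse(treatment == "B", 2, 0) + rnorm(15)

crd <- aov(yield ~ treatment)           # ignores the blocks
rbd <- aov(yield ~ treatment + block)   # removes block variation from the error

# Residual mean square = residual sum of squares / residual df
ms_resid <- function(fit) deviance(fit) / df.residual(fit)
c(CRD = ms_resid(crd), RBD = ms_resid(rbd))
</pre>

When the blocks really differ, the residual mean square of the blocked model is usually much smaller, which is what makes the treatment F test more sensitive.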

<li>What are the disadvantages of a randomized block design?

* Complexity | |||

* Difficulty in identifying blocks | |||

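* Loss of error degrees of freedom: the extra experimental control comes at the cost of degrees of freedom spent on the block term, which can reduce power when the blocks do not actually differ.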

* Difficulty in interpretation: When multiple blocks are used it can be difficult to interpret the results of the experiment and it's crucial to have sufficient sample size within each block to detect the effects of the experimental variable. | |||

<li>Does the power of the test change with the number of levels in the block variable in randomized block design analysis? | |||

* The form of the analysis for a randomized block design does not change with the number of levels in the block variable (that is, the number of blocks), but the number of blocks does affect the power of the analysis. With each treatment appearing in every block, adding blocks adds replicates of each treatment and residual degrees of freedom, so the power of the test to detect differences among treatments increases. However, more blocks also means more experimental units, which can make the study more complex and costly to conduct.
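One way to explore this is a small simulation. The helper sim_power() below is hypothetical, and the effect size, block variation, and number of simulations are arbitrary choices; it estimates how often the treatment F test rejects at the 5% level for a given number of blocks.

<pre>
# Hypothetical helper: estimated power of the treatment F test
sim_power <- function(n_blocks, n_sim = 200, effect = 2, alpha = 0.05) {
  treatment <- factor(rep(c("A", "B", "C"), n_blocks))
  block     <- gl(n_blocks, 3)
  p <- replicate(n_sim, {
    y <- rnorm(n_blocks)[as.integer(block)] +       # random block effects
      ifelse(treatment == "B", effect, 0) +         # assumed treatment effect
      rnorm(3 * n_blocks)                           # residual noise
    # First row of the ANOVA table is the treatment term
    summary(aov(y ~ treatment + block))[[1]][["Pr(>F)"]][1]
  })
  mean(p < alpha)
}

set.seed(123)
power_est <- sapply(c(3, 5, 10), sim_power)
power_est
</pre>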

<li>How does the block variable affect the interpretation of the significance of the main effect in a randomized block design?

* In a randomized block design, the block variable is used to control for sources of variation that are not of interest in the study, but that may affect the response variable. By blocking, we are trying to make sure that any differences in the response variable among the treatments are due to the treatment and not due to other sources of variation. | |||

* The main effect of the block variable represents the difference in the response variable among the blocks, regardless of the treatment. If the main effect of the block variable is significant, it means that there are systematic differences in the response variable among the blocks, and that the blocks are not exchangeable. This can affect the interpretation of the main effect of the treatment variable. | |||

* A significant main effect of the block variable means that the blocks are not homogeneous. It does not by itself imply that the treatment effect differs among the blocks; that question is answered by the interaction between the block and treatment variables. If the interaction is not significant, there is no evidence that the treatment effect varies among the blocks, and the treatment main effect can be read as a consistent effect on the response variable. If the interaction is significant, the treatment effect varies among the blocks, and the main effect alone does not describe it. Note that the interaction can only be tested separately from the residual error when there is more than one observation per block-treatment combination.

* In summary, a significant main effect of the block variable means that the blocks are not homogeneous and that blocking has removed real variation from the error term. To understand whether the treatment effect is consistent across all blocks, examine the interaction between the block and treatment variables.
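A sketch of how the block-by-treatment interaction could be examined is given below. It assumes two replicates per block-treatment combination (without replication there are no residual degrees of freedom left to test the interaction), and the simulated effects are arbitrary.

<pre>
set.seed(2)
# 4 blocks x 3 treatments x 2 replicates (hypothetical layout)
d <- expand.grid(rep = 1:2,
                 treatment = factor(c("A", "B", "C")),
                 block = factor(1:4))
d$yield <- 10 + as.integer(d$block) +           # assumed block effect
  ifelse(d$treatment == "B", 2, 0) +            # assumed treatment effect
  rnorm(nrow(d))

# treatment * block expands to treatment + block + treatment:block
fit_int <- aov(yield ~ treatment * block, data = d)
summary(fit_int)
</pre>

The treatment:block row of the table is the test of whether the treatment effect is the same in every block.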

<li>[https://www.real-statistics.com/design-of-experiments/completely-randomized-design/randomized-complete-block-design/ Visualize the data]. Both treatment and block variables are significant. | |||

<li>Examples | |||

* Random data | |||

<pre>
library(ggplot2)

# Create a data frame: 5 blocks, 6 observations per block (2 per treatment)
set.seed(1234)
block <- factor(rep(1:5, each = 6))
treatment <- factor(rep(c("A", "B", "C"), 10))  # 30 values, same length as block and yield
yield <- rnorm(30, mean = 10, sd = 2)
data <- data.frame(block, treatment, yield)

summary(aov(yield ~ treatment + block, data = data))

# Create the box plot
# By using the group argument with the interaction(block, treatment) function,
# we are grouping the data by both the block and treatment variables, which means that
# we will have a separate box plot for each unique combination of block and treatment.
ggplot(data, aes(x = treatment, y = yield)) +
  geom_boxplot(aes(group = interaction(block, treatment), color = block)) +
  ggtitle("Main Effect of Treatment") +
  xlab("Treatment") +
  ylab("Yield")
</pre>

* Create a randomized block design data where the block variable is not significant but the treatment variable is significant: | |||

<pre>
set.seed(1234)
block <- factor(rep(1:5, each = 6))
# factor() so that TukeyHSD() treats treatment as a factor
treatment <- factor(rep(c("A", "B", "C"), 10))
yield <- rnorm(30, mean = 10, sd = 2) + ifelse(treatment == "B", 2, 0)
data <- data.frame(block, treatment, yield)

summary(fm1 <- aov(yield ~ treatment + block, data = data))
#             Df Sum Sq Mean Sq F value   Pr(>F)
# treatment    2  69.92   34.96  13.163 0.000155 ***
# block        4  11.94    2.99   1.124 0.369624
# Residuals   23  61.09    2.66

TukeyHSD(fm1, "treatment", ordered = TRUE)
# ordered = TRUE: A logical value indicating if the levels of the factor should be ordered
# according to increasing average in the sample before taking differences. If ordered
# is true then the calculated differences in the means will all be positive.
#
#   Tukey multiple comparisons of means
#     95% family-wise confidence level
#     factor levels have been ordered
# $treatment
#         diff        lwr      upr     p adj
# C-A 1.851551 0.02630618 3.676796 0.0463529
# B-A 3.739487 1.91424233 5.564732 0.0000968
# B-C 1.887936 0.06269108 3.713181 0.0417048

TukeyHSD(fm1, "treatment")
#          diff         lwr         upr     p adj
# B-A  3.739487  1.91424233  5.56473246 0.0000968
# C-A  1.851551  0.02630618  3.67679631 0.0463529
# C-B -1.887936 -3.71318121 -0.06269108 0.0417048
</pre>

* An example where both the block and treatment variables are significant (the code that generated this data is not shown):

<pre>
summary(fm1 <- aov(yield ~ treatment + block, data = data))
#             Df Sum Sq Mean Sq F value   Pr(>F)
# treatment    2  92.09   46.05   9.555 0.000954 ***
# block        4 140.45   35.11   7.286 0.000607 ***
# Residuals   23 110.84    4.82
</pre>

Base R style: | |||

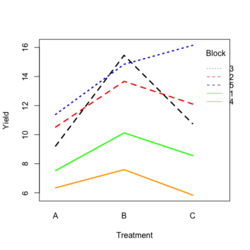

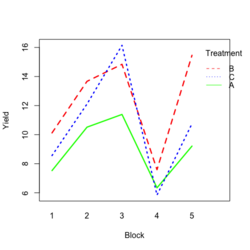

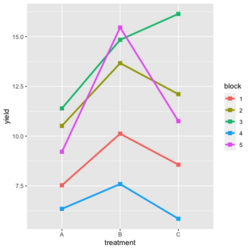

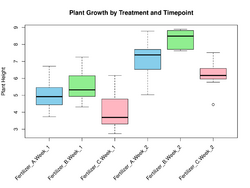

[[File:RbdTreat.png|250px]] [[File:RbdBlock.png|250px]] | |||

ggplot2 style: | |||

[[File:RbdGeom.png|250px]] | |||
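The data behind the table in which both block and treatment are significant is not shown on this page. The sketch below is one possible way to simulate such data by adding a block shift to the earlier example; block_shift is an assumed value, so the output will not reproduce the numbers in that table.

<pre>
set.seed(1234)
block     <- factor(rep(1:5, each = 6))
treatment <- factor(rep(c("A", "B", "C"), 10))
block_shift <- c(0, 1, 2, 3, 4)     # assumed mean shift for blocks 1 to 5

yield <- rnorm(30, mean = 10, sd = 2) +
  ifelse(treatment == "B", 2, 0) +  # treatment effect, as in the earlier example
  block_shift[as.integer(block)]    # added block effect
data2 <- data.frame(block, treatment, yield)

summary(fm2 <- aov(yield ~ treatment + block, data = data2))
</pre>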

</ul> | |||

== gl(): Generate Factor Levels == | |||

* [https://www.r-tutor.com/elementary-statistics/analysis-variance/randomized-block-design Randomized Block Design] | |||

* [https://www.rdocumentation.org/packages/base/versions/3.6.2/topics/gl gl()] | |||

* [https://davidlindelof.com/controlling-for-covariates-is-not-the-same-as-slicing/ Controlling for covariates is not the same as “slicing”] | |||
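As a short illustration, gl(n, k) generates a factor with n levels, each repeated k times, which is convenient for building the block and treatment columns of a randomized block design. The variable names below are made up for the example.

<pre>
# 5 blocks with 6 consecutive observations each;
# same values as factor(rep(1:5, each = 6))
block <- gl(5, 6)

# 3 treatment levels cycling A, B, C, ... up to length 30
treatment <- gl(3, 1, length = 30, labels = c("A", "B", "C"))

# Every block contains each treatment twice
table(block, treatment)
</pre>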

== Two-way ANOVA ==

<ul> | |||

<li>[https://www.theanalysisfactor.com/interactions-main-effects-not-significant/ Interpreting Interactions when Main Effects are Not Significant] | |||

<li>[https://www.theanalysisfactor.com/interpret-main-effects-interaction/ Actually, you can interpret some main effects in the presence of an interaction] | |||

<li>[https://support.bioconductor.org/p/9138302/ Confusion about Deseq2 wording in the vignette (additive model with main effects only vs interaction)] | |||