Statistics


Revision as of 09:28, 21 August 2017

Statistics for biologists

http://www.nature.com/collections/qghhqm

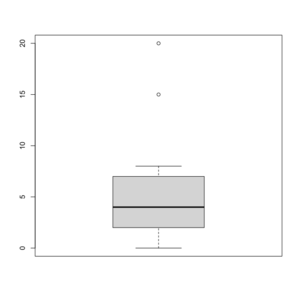

Box plot in R

See http://msenux.redwoods.edu/math/R/boxplot.php for a numerical explanation of how boxplot() in R works.

> x=c(0,4,15, 1, 6, 3, 20, 5, 8, 1, 3)

> summary(x)

Min. 1st Qu. Median Mean 3rd Qu. Max.

0 2 4 6 7 20

> sort(x)

[1] 0 1 1 3 3 4 5 6 8 15 20

- The lower and upper edges of the box are the first and third quartiles (2 and 7 in the above example). Strictly, R uses Tukey's hinges from fivenum(), which coincide with the quartiles here.

- The thick dark horizontal line is the median (4 in the example).

- Outliers are observations larger than 3rd quartile + 1.5 * IQR (7+1.5*5=14.5) or smaller than 1st quartile - 1.5 * IQR (2-1.5*5=-5.5). See the empty circles in the plot.

- The upper whisker extends to the largest data point not exceeding 3rd quartile + 1.5 * IQR (8 in this example), and the lower whisker extends to the smallest data point not below 1st quartile - 1.5 * IQR (0 in this example).

Note that Wikipedia lists several possible definitions of a whisker. R uses the 2nd method (Tukey boxplot) to define whiskers.
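The numbers above can be reproduced with base R's boxplot.stats(). This is the function boxplot() calls to get the hinges, whiskers and outliers, with coef = 1.5 by default. Below is a minimal sketch that recomputes the fences by hand and compares the result:

```r
x <- c(0, 4, 15, 1, 6, 3, 20, 5, 8, 1, 3)

h <- fivenum(x)                  # Tukey's five-number summary: min, hinges, median, max
iqr <- h[4] - h[2]               # 7 - 2 = 5
upper_fence <- h[4] + 1.5 * iqr  # 14.5
lower_fence <- h[2] - 1.5 * iqr  # -5.5

outliers <- x[x > upper_fence | x < lower_fence]
inside <- x[x <= upper_fence & x >= lower_fence]
whiskers <- range(inside)        # most extreme non-outlying points

bs <- boxplot.stats(x)
bs$stats   # lower whisker, lower hinge, median, upper hinge, upper whisker
bs$out     # points drawn as empty circles
```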

BoxCox transformation

Finding transformation for normal distribution

The Holy Trinity (LRT, Wald, Score tests)

Don't invert that matrix

- http://www.johndcook.com/blog/2010/01/19/dont-invert-that-matrix/

- http://civilstat.com/2015/07/dont-invert-that-matrix-why-and-how/
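In R, this advice means computing A^{-1}b with solve(A, b) rather than forming solve(A) and then multiplying. When A is symmetric positive definite, a Cholesky factorization also works. Here is a minimal sketch on a made-up positive definite matrix:

```r
set.seed(1)
M <- matrix(rnorm(25), 5, 5)
A <- crossprod(M) + diag(5)   # symmetric positive definite by construction
b <- rnorm(5)

x_solve <- solve(A, b)                 # solves A x = b without forming the inverse
x_inv   <- drop(solve(A) %*% b)        # explicit inverse: more work, less accurate in general
R <- chol(A)                           # A = t(R) %*% R with R upper triangular
x_chol  <- backsolve(R, forwardsolve(t(R), b))  # two triangular solves
```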

Linear Regression

Regression Models for Data Science in R (https://leanpub.com/regmods) by Brian Caffo

Different models (in R)

http://www.quantide.com/raccoon-ch-1-introduction-to-linear-models-with-r/

dummy.coef.lm() in R

Extracts coefficients in terms of the original levels of the factors rather than the coded (contrast) variables.

Contrasts in linear regression

- Page 147 of Modern Applied Statistics with S (4th ed)

- https://biologyforfun.wordpress.com/2015/01/13/using-and-interpreting-different-contrasts-in-linear-models-in-r/ This explains the meanings of 'treatment', 'helmert' and 'sum' contrasts.
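The small illustration below uses the built-in warpbreaks data, chosen only as an example. It shows the contrast matrices R builds for a 3-level factor. It also shows how dummy.coef() maps the coded coefficients back to one effect per level.

```r
contr.treatment(3)  # level 1 is the baseline (a row of zeros)
contr.sum(3)        # last level coded -1, so the level effects sum to zero
contr.helmert(3)    # each level contrasted with the mean of the previous levels

fit_trt <- lm(breaks ~ tension, data = warpbreaks)   # default treatment contrasts
fit_sum <- lm(breaks ~ tension, data = warpbreaks,
              contrasts = list(tension = "contr.sum"))

dc_trt <- dummy.coef(fit_trt)
dc_sum <- dummy.coef(fit_sum)
dc_trt$tension  # L = 0; M and H are differences from L
dc_sum$tension  # deviations from the intercept; they sum to zero
```

The two codings give identical fitted values. Only the meaning of the individual coefficients changes.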

Confounders

Non- and semi-parametric regression

Cubic and Smoothing Splines in R

Principal component analysis

R source code

> stats:::prcomp.default

function (x, retx = TRUE, center = TRUE, scale. = FALSE, tol = NULL,

...)

{

x <- as.matrix(x)

x <- scale(x, center = center, scale = scale.)

cen <- attr(x, "scaled:center")

sc <- attr(x, "scaled:scale")

if (any(sc == 0))

stop("cannot rescale a constant/zero column to unit variance")

s <- svd(x, nu = 0)

s$d <- s$d/sqrt(max(1, nrow(x) - 1))

if (!is.null(tol)) {

rank <- sum(s$d > (s$d[1L] * tol))

if (rank < ncol(x)) {

s$v <- s$v[, 1L:rank, drop = FALSE]

s$d <- s$d[1L:rank]

}

}

dimnames(s$v) <- list(colnames(x), paste0("PC", seq_len(ncol(s$v))))

r <- list(sdev = s$d, rotation = s$v, center = if (is.null(cen)) FALSE else cen,

scale = if (is.null(sc)) FALSE else sc)

if (retx)

r$x <- x %*% s$v

class(r) <- "prcomp"

r

}

<bytecode: 0x000000003296c7d8>

<environment: namespace:stats>

PCA and SVD

Using the SVD to perform PCA makes much better sense numerically than forming the covariance matrix to begin with, since the formation of XX⊤ can cause loss of precision.

http://math.stackexchange.com/questions/3869/what-is-the-intuitive-relationship-between-svd-and-pca
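Below is a quick numerical check using the built-in USArrests data as an example. prcomp()'s standard deviations are the singular values of the centered data matrix divided by sqrt(n - 1). Their squares are the eigenvalues of the covariance matrix.

```r
X <- as.matrix(USArrests)
pc <- prcomp(X)                                # centered, unscaled PCA via SVD
Xc <- scale(X, center = TRUE, scale = FALSE)   # column-centered data
s <- svd(Xc)
sdev_svd <- s$d / sqrt(nrow(X) - 1)            # same rescaling as in prcomp.default
ev <- eigen(cov(X), symmetric = TRUE)$values   # covariance-matrix route
scores_svd <- Xc %*% s$v                       # principal component scores
```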

Related to Factor Analysis

- http://www.aaronschlegel.com/factor-analysis-introduction-principal-component-method-r/.

- http://support.minitab.com/en-us/minitab/17/topic-library/modeling-statistics/multivariate/principal-components-and-factor-analysis/differences-between-pca-and-factor-analysis/

In short,

- In Principal Components Analysis, the components are calculated as linear combinations of the original variables. In Factor Analysis, the original variables are defined as linear combinations of the factors.

- In Principal Components Analysis, the goal is to explain as much of the total variance in the variables as possible. The goal in Factor Analysis is to explain the covariances or correlations between the variables.

- Use Principal Components Analysis to reduce the data into a smaller number of components. Use Factor Analysis to understand what constructs underlie the data.

Calculated by Hand

http://strata.uga.edu/software/pdf/pcaTutorial.pdf

Do not scale your matrix

https://privefl.github.io/blog/(Linear-Algebra)-Do-not-scale-your-matrix/

Visualization based on simulated data

http://oracledmt.blogspot.com/2007/06/way-cooler-pca-and-visualization-linear.html

What does it do if we choose center=FALSE in prcomp()?

In the USArrests data, using center=FALSE gives a better scatter plot of the first 2 PCA components.

x1 = prcomp(USArrests)

x2 = prcomp(USArrests, center = FALSE)

plot(x1$x[,1], x1$x[,2]) # looks random

dev.new(); plot(x2$x[,1], x2$x[,2]) # looks good in some sense; dev.new() opens a new plot window on any platform

Relation to Multidimensional scaling/MDS

With no missing data, classical MDS (Euclidean distance metric) is the same as PCA.
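Here is a minimal check of this statement, again using USArrests as an example. Classical MDS via cmdscale() on Euclidean distances reproduces the first two PCA scores, up to the sign of each column.

```r
X <- as.matrix(USArrests)
mds <- cmdscale(dist(X), k = 2)   # classical (Torgerson) MDS on Euclidean distances
pca <- prcomp(X)$x[, 1:2]         # first two principal component scores
```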

Comparisons are here.

Differences are asked/answered on stackexchange.com. The post also answered the question when these two are the same.

Matrix factorization methods

http://joelcadwell.blogspot.com/2015/08/matrix-factorization-comes-in-many.html Review of principal component analysis (PCA), K-means clustering, nonnegative matrix factorization (NMF) and archetypal analysis (AA).

Independent component analysis

ICA is another dimensionality reduction method.

ICA vs PCA

ICA vs FA

Correspondence analysis

https://francoishusson.wordpress.com/2017/07/18/multiple-correspondence-analysis-with-factominer/ and the book Exploratory Multivariate Analysis by Example Using R

Visualize the random effects

http://www.quantumforest.com/2012/11/more-sense-of-random-effects/

Sensitivity/Specificity/Accuracy

| True / Predicted | 1 | 0 | |
|---|---|---|---|
| 1 | TP | FN | Sens = TP/(TP+FN) |
| 0 | FP | TN | Spec = TN/(FP+TN) |

N = TP + FP + FN + TN

- Sensitivity = TP / (TP + FN)

- Specificity = TN / (TN + FP)

- Accuracy = (TP + TN) / N
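The three formulas can be written as a small R function. The truth and prediction vectors are made up for illustration, with 1 = positive and 0 = negative.

```r
confusion_metrics <- function(truth, pred) {
  tp <- sum(truth == 1 & pred == 1)
  fn <- sum(truth == 1 & pred == 0)
  fp <- sum(truth == 0 & pred == 1)
  tn <- sum(truth == 0 & pred == 0)
  c(sensitivity = tp / (tp + fn),
    specificity = tn / (tn + fp),
    accuracy    = (tp + tn) / (tp + fn + fp + tn))
}

truth <- c(1, 1, 1, 1, 0, 0, 0, 0, 0, 0)
pred  <- c(1, 1, 1, 0, 0, 0, 0, 0, 1, 1)   # TP = 3, FN = 1, FP = 2, TN = 4
m <- confusion_metrics(truth, pred)
table(truth, pred)   # same counts; note table() sorts levels, so 0 comes first
```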

ROC curve and Brier score

- Introduction to the ROCR package.

- http://freakonometrics.hypotheses.org/9066, http://freakonometrics.hypotheses.org/20002

- Illustrated Guide to ROC and AUC

- ROC Curves in Two Lines of R Code
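Both summaries take only a few lines of base R; packages such as ROCR give the full curve.

- The AUC is the probability that a randomly chosen positive scores higher than a randomly chosen negative, i.e. the normalized Mann-Whitney statistic.
- The Brier score is the mean squared difference between the predicted probabilities and the 0/1 outcomes.

The scores in this sketch are made up.

```r
auc_mw <- function(score, label) {
  r <- rank(score)                  # midranks handle ties
  n1 <- sum(label == 1)
  n0 <- sum(label == 0)
  (sum(r[label == 1]) - n1 * (n1 + 1) / 2) / (n1 * n0)
}

brier <- function(prob, label) mean((prob - label)^2)

prob  <- c(0.9, 0.8, 0.7, 0.4, 0.3, 0.2)
label <- c(1,   1,   0,   1,   0,   0)
auc_mw(prob, label)   # 8 of the 9 positive/negative pairs are ordered correctly
brier(prob, label)
```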

genefilter package and rowpAUCs function

- rowpAUCs function in genefilter package. The aim is to find potential biomarkers whose expression level is able to distinguish between two groups.

# source("http://www.bioconductor.org/biocLite.R")

# biocLite("genefilter")

library(Biobase) # sample.ExpressionSet data

data(sample.ExpressionSet)

library(genefilter)

r2 = rowpAUCs(sample.ExpressionSet, "sex", p=0.1)

plot(r2[1]) # first gene; partial AUC up to a false positive rate of 0.1 (specificity >= 0.9)

r2 = rowpAUCs(sample.ExpressionSet, "sex", p=1.0)

plot(r2[1]) # it won't show pAUC

r2 = rowpAUCs(sample.ExpressionSet, "sex", p=.999)

plot(r2[1]) # pAUC is very close to AUC now

Maximum likelihood

Difference of partial likelihood, profile likelihood and marginal likelihood

Generalized Linear Model

Lectures from a course in Simon Fraser University Statistics.

Doing magic and analyzing seasonal time series with GAM (Generalized Additive Model) in R

Quasi Likelihood

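Quasi-likelihood behaves like a log-likelihood but only requires the mean and the variance function (up to a dispersion parameter) to be specified. The quasi-score function (the first derivative of the quasi-likelihood function) plays the role of the score, and setting it to zero gives the estimating equation.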

- Original paper by Peter McCullagh.

- Lecture 20 from SFU.

- U. Washington and another lecture focuses on overdispersion.

- This lecture contains a table of quasi likelihood from common distributions.
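For reference, the standard form is given below for mean μ, variance function V(μ) and dispersion φ. It shows the quasi-likelihood of one observation and its quasi-score. Summing the quasi-scores over observations, chained through the link, and setting the sum to zero gives the estimating equations for β. For V(μ) = μ this recovers the Poisson log-likelihood y log μ - μ up to a constant.

```latex
Q(\mu; y) = \int_{y}^{\mu} \frac{y - t}{\phi\, V(t)}\, dt,
\qquad
U(\mu; y) = \frac{\partial Q}{\partial \mu} = \frac{y - \mu}{\phi\, V(\mu)},
\qquad
\sum_{i=1}^{n} \frac{\partial \mu_i}{\partial \beta}\, \frac{y_i - \mu_i}{\phi\, V(\mu_i)} = 0 .
```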

Plot

Simulate data from a specified density

Multiple comparisons

- http://www.gs.washington.edu/academics/courses/akey/56008/lecture/lecture10.pdf

- Book 'Multiple Comparison Using R' by Bretz, Hothorn and Westfall, 2011.

- Plot a histogram of p-values, a post from varianceexplained.org.

- Comparison of different ways of multiple-comparison in R.

Take an example: suppose 550 out of 10,000 genes are significant at the .05 level.

- P-value < .05 ==> If no gene were truly differentially expressed, we would expect .05*10,000 = 500 false positives

- False discovery rate < .05 ==> Expect .05*550 = 27.5 false positives among the 550 calls

- Family-wise error rate < .05 ==> The probability of at least 1 false positive is < .05
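In R, p.adjust() implements these corrections. This minimal sketch uses ten made-up p-values. "bonferroni" controls the FWER, and "BH" (Benjamini-Hochberg) controls the FDR.

```r
p <- c(0.001, 0.008, 0.039, 0.041, 0.042, 0.060, 0.074, 0.205, 0.212, 0.216)

raw  <- sum(p < 0.05)                                   # unadjusted calls
fwer <- sum(p.adjust(p, method = "bonferroni") < 0.05)  # family-wise error rate < .05
fdr  <- sum(p.adjust(p, method = "BH") < 0.05)          # false discovery rate < .05
c(raw = raw, bonferroni = fwer, BH = fdr)
```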

False Discovery Rate

- Definition by Benjamini and Hochberg in JRSS B 1995.

- A comic

- Statistical significance for genomewide studies by Storey and Tibshirani.

- What’s the probability that a significant p-value indicates a true effect?

- http://onetipperday.sterding.com/2015/12/my-note-on-multiple-testing.html

q-value

q-value is defined as the minimum FDR that can be attained when calling that feature significant (i.e., expected proportion of false positives incurred when calling that feature significant).

If gene X has a q-value of 0.013, it means that an estimated 1.3% of the genes with p-values at least as small as that of gene X are false positives.

SAM/Significance Analysis of Microarrays

The percentile option is used to define the number of falsely called genes based on 'B' permutations. If we use the 90th percentile, the number of significant genes will be smaller than if we use the 50th percentile (median).

In the BRCA dataset, using the 90th percentile gives 29 genes vs 183 genes if we use the median.

Multivariate permutation test

In the BRCA dataset, using 80% confidence gives 116 genes vs 237 genes if we use 50% confidence (assuming a maximum proportion of false discoveries of 10%). The method is published in EL Korn, JF Troendle, LM McShane and R Simon, Controlling the number of false discoveries: Application to high dimensional genomic data, Journal of Statistical Planning and Inference, vol 124, 379-398 (2004).

String Permutations Algorithm

Bayes

Bayes factor

Empirical Bayes method

Overdispersion

https://en.wikipedia.org/wiki/Overdispersion

Var(Y) = phi * E(Y). If phi > 1, the data are overdispersed relative to the Poisson. If phi < 1, they are underdispersed (rare).

Heterogeneity

The Poisson model fit is not good: residual deviance/df >> 1. The lack of fit may be due to missing data, omitted covariates or overdispersion.

Subjects within each covariate combination still differ greatly.

- https://onlinecourses.science.psu.edu/stat504/node/169.

- https://onlinecourses.science.psu.edu/stat504/node/162

Consider Quasi-Poisson or negative binomial.
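This minimal simulation assumes negative binomial counts with mean around 10 and size 2, so Var(Y) is roughly 10 + 10^2/2 = 60 and phi is about 6. The mean model is hypothetical. The Pearson estimate of phi from a Poisson fit matches the dispersion reported by a quasi-Poisson fit. The quasi-Poisson fit keeps the same coefficients but inflates the standard errors by sqrt(phi).

```r
set.seed(42)
n <- 500
x <- runif(n)
mu <- exp(log(10) + 0.5 * (x - 0.5))     # hypothetical log-linear mean
y <- rnbinom(n, size = 2, mu = mu)       # overdispersed counts

fit_pois  <- glm(y ~ x, family = poisson)
fit_quasi <- glm(y ~ x, family = quasipoisson)

phi_hat <- sum(residuals(fit_pois, type = "pearson")^2) / df.residual(fit_pois)
deviance(fit_pois) / df.residual(fit_pois)   # the "residual deviance/df >> 1" check

se_ratio <- summary(fit_quasi)$coefficients[, "Std. Error"] /
            summary(fit_pois)$coefficients[, "Std. Error"]
```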

Test of overdispersion or underdispersion in Poisson models

Negative Binomial

The mean of the Poisson distribution can itself be thought of as a random variable drawn from the gamma distribution, thereby introducing an additional free parameter.
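The gamma-Poisson mixture can be checked in R. The values mu = 10 and size = 2 are assumed for illustration. A gamma mean with shape = size and rate = size/mu has E = mu and Var = mu^2/size. Mixing the Poisson over it gives the negative binomial with Var(Y) = mu + mu^2/size. The sketch checks the pmf by numerical integration and the moments by simulation.

```r
mu <- 10; size <- 2                     # assumed parameter values

# P(Y = k) = integral of Poisson(k | lambda) * Gamma(lambda) d lambda
mix_pmf <- sapply(0:5, function(k)
  integrate(function(l) dpois(k, l) * dgamma(l, shape = size, rate = size / mu),
            lower = 0, upper = Inf)$value)
nb_pmf <- dnbinom(0:5, size = size, mu = mu)

set.seed(1)
lambda <- rgamma(1e5, shape = size, rate = size / mu)   # random Poisson means
y_mix <- rpois(1e5, lambda)
c(mean = mean(y_mix), var = var(y_mix), theory_var = mu + mu^2 / size)  # theory: 60
```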

Cox Regression

Let Yi denote the observed time (either censoring time or event time) for subject i, and let Ci be the indicator that the time corresponds to an event (i.e. if Ci = 1 the event occurred and if Ci = 0 the time is a censoring time). The hazard function for the Cox proportional hazard model has the form

[math]\displaystyle{ \lambda(t|X) = \lambda_0(t)\exp(\beta_1X_1 + \cdots + \beta_pX_p) = \lambda_0(t)\exp(X \beta^\prime). }[/math]

This expression gives the hazard at time t for an individual with covariate vector (explanatory variables) X. Based on this hazard function, a partial likelihood can be constructed from the datasets as

[math]\displaystyle{ L(\beta) = \prod_{i:C_i=1}\frac{\theta_i}{\sum_{j:Y_j\ge Y_i}\theta_j}, }[/math]

where θj = exp(Xj β′) and X1, ..., Xn are the covariate vectors for the n independently sampled individuals in the dataset (treated here as column vectors).

The corresponding log partial likelihood is

[math]\displaystyle{ \ell(\beta) = \sum_{i:C_i=1} \left(X_i \beta^\prime - \log \sum_{j:Y_j\ge Y_i}\theta_j\right). }[/math]

This function can be maximized over β to produce maximum partial likelihood estimates of the model parameters.

The partial score function is [math]\displaystyle{ \ell^\prime(\beta) = \sum_{i:C_i=1} \left(X_i - \frac{\sum_{j:Y_j\ge Y_i}\theta_jX_j}{\sum_{j:Y_j\ge Y_i}\theta_j}\right), }[/math]

and the Hessian matrix of the partial log likelihood is

[math]\displaystyle{ \ell^{\prime\prime}(\beta) = -\sum_{i:C_i=1} \left(\frac{\sum_{j:Y_j\ge Y_i}\theta_jX_jX_j^\prime}{\sum_{j:Y_j\ge Y_i}\theta_j} - \frac{\sum_{j:Y_j\ge Y_i}\theta_jX_j\times \sum_{j:Y_j\ge Y_i}\theta_jX_j^\prime}{[\sum_{j:Y_j\ge Y_i}\theta_j]^2}\right). }[/math]

Using this score function and Hessian matrix, the partial likelihood can be maximized using the Newton-Raphson algorithm. The inverse of the negative Hessian matrix (the observed information), evaluated at the estimate of β, can be used as an approximate variance-covariance matrix for the estimate, and used to produce approximate standard errors for the regression coefficients.
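Below is a bare-bones Newton-Raphson implementation of the score and Hessian above for a single covariate. It is checked against coxph() on the lung data used elsewhere on this page. Two assumptions apply:

- Ties are handled exactly as the formula is written, with every subject having Y_j ≥ Y_i in the risk set. This corresponds to coxph(..., ties = "breslow").
- The covariate is centered first, which changes neither β nor its standard error.

```r
library(survival)

cox_newton <- function(time, event, x, iter = 20) {
  x <- x - mean(x)                      # centering only improves numerical stability
  beta <- 0
  for (k in seq_len(iter)) {
    theta <- exp(beta * x)
    score <- 0
    info <- 0                           # minus the Hessian (observed information)
    for (i in which(event == 1)) {
      risk <- time >= time[i]           # risk set {j : Y_j >= Y_i}
      s0 <- sum(theta[risk])
      s1 <- sum(theta[risk] * x[risk])
      s2 <- sum(theta[risk] * x[risk]^2)
      score <- score + x[i] - s1 / s0
      info <- info + s2 / s0 - (s1 / s0)^2
    }
    beta <- beta + score / info         # Newton-Raphson step
  }
  c(beta = beta, se = sqrt(1 / info))
}

event <- as.integer(lung$status == 2)   # lung codes status as 1 = censored, 2 = dead
est <- cox_newton(lung$time, event, lung$age)
fit <- coxph(Surv(time, status) ~ age, data = lung, ties = "breslow")
rbind(newton = est, coxph = c(coef(fit), sqrt(vcov(fit))))
```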

Hazard

A hazard is the rate at which events happen, so that the probability of an event happening in a short time interval, given survival to the start of the interval, is approximately the length of the interval multiplied by the hazard.

[math]\displaystyle{ h(t) = \lim_{\Delta t \to 0} \frac{P(t \leq T \lt t+\Delta t|T \geq t)}{\Delta t} = \frac{f(t)}{S(t)} }[/math]

Hazards may vary with time; the assumption in proportional hazards models for survival is that the ratio of the hazards of any two individuals is constant over time.

If h(t)=c, S(t) is exponential. If [math]\displaystyle{ \log h(t) = c + \rho t }[/math], the survival time follows a Gompertz distribution. If [math]\displaystyle{ \log h(t)=c + \rho \log (t) }[/math], it follows a Weibull distribution.
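Below is a numerical check of h(t) = f(t)/S(t) using base R's dweibull/pweibull. The shape k and scale λ are chosen arbitrarily. For a Weibull, h(t) = (k/λ)(t/λ)^(k-1), so log h(t) is linear in log t with slope ρ = k - 1. For the exponential, the ratio is the constant rate.

```r
k <- 1.5; lambda <- 2                 # hypothetical shape and scale
t <- seq(0.1, 5, by = 0.1)

h_ratio <- dweibull(t, shape = k, scale = lambda) /
           pweibull(t, shape = k, scale = lambda, lower.tail = FALSE)  # f(t) / S(t)
h_closed <- (k / lambda) * (t / lambda)^(k - 1)

slope <- coef(lm(log(h_ratio) ~ log(t)))[[2]]   # rho in log h(t) = c + rho * log(t)

h_exp <- dexp(t, rate = 0.7) / pexp(t, rate = 0.7, lower.tail = FALSE)  # constant hazard
```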

For Cox regression, the survival function can be shown to be [math]\displaystyle{ S(t|X) = S_0(t) ^ {\exp(X\beta)} }[/math].

Hazard Ratio

A hazard ratio is often reported as a “reduction in risk of death or progression”. This reduction is calculated as 1 minus the hazard ratio, e.g., an HR of 0.84 corresponds to a 16% reduction in risk. See www.time4epi.com and stackexchange.com.

Hazard ratio is not the same as the relative risk ratio. See medicine.ox.ac.uk.

Interpreting risks and ratios in therapy trials from australianprescriber.com is useful too.

Compute hazard ratios from coxph() in R (Hint: exp(beta)).

Basics of the Cox proportional hazards model. Bad prognostic factor (b>0 or HR>1, higher hazard) and good prognostic factor (b<0 or HR<1, lower hazard).
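In code, the hint amounts to exponentiating the coefficients and their confidence limits from coxph(). summary() reports the same numbers. The lung data with age and sex is just one choice of example.

```r
library(survival)
fit <- coxph(Surv(time, status) ~ age + sex, data = lung)

hr <- exp(coef(fit))           # hazard ratio per unit change in each covariate
hr_ci <- exp(confint(fit))     # 95% Wald limits, exponentiated
cbind(HR = hr, hr_ci)

# A CI containing 1 is what a forest plot shows as a line crossing 1.0
crosses_one <- hr_ci[, 1] < 1 & hr_ci[, 2] > 1
```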

Hazard Ratio Forest Plot

The forest plot quickly summarizes the hazard ratio data across multiple variables. If the confidence interval line crosses the 1.0 value, the hazard ratio is not statistically significant and there is no clear advantage for either arm.

Estimate baseline hazard

Google: how to estimate baseline hazard rate

- stackexchange.com

- ?basehaz from stackexchange.com
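This is a minimal sketch with survival::basehaz(), assuming the lung data and an age-only model. With centered = FALSE, basehaz() returns the cumulative baseline hazard H0(t) at covariate value 0. The cumulative hazard for a subject aged 60 is then H0(t) exp(60 β), and survfit() at that covariate value should reproduce it.

```r
library(survival)
fit <- coxph(Surv(time, status) ~ age, data = lung)

bh <- basehaz(fit, centered = FALSE)      # columns: hazard (= H0(t)) and time
sf <- survfit(fit, newdata = data.frame(age = 60))

H_60 <- bh$hazard * exp(coef(fit)[["age"]] * 60)   # cumulative hazard at age 60
S_60 <- exp(-H_60)                                  # S(t | age = 60) = S0(t)^exp(60 beta)
```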

predict.coxph

- http://stats.stackexchange.com/questions/44896/how-to-interpret-the-output-of-predict-coxph

- http://www.togaware.com/datamining/survivor/Lung1.html

library(survival)

fit <- coxph(Surv(time, status) ~ age, lung)

fit

# Call:

# coxph(formula = Surv(time, status) ~ age, data = lung)

# coef exp(coef) se(coef) z p

# age 0.0187 1.02 0.0092 2.03 0.042

#

# Likelihood ratio test=4.24 on 1 df, p=0.0395 n= 228, number of events= 165

# type = "lp" (linear predictor)

as.numeric(predict(fit, type = "lp"))[1:10]

# [1] 0.21626733 0.10394626 -0.12069589 -0.10197571 -0.04581518 0.21626733

# [7] 0.10394626 0.16010680 -0.17685643 -0.02709500

0.0187 * (lung$age[1:10] - fit$means)

# [1] 0.21603421 0.10383421 -0.12056579 -0.10186579 -0.04576579 0.21603421

# [7] 0.10383421 0.15993421 -0.17666579 -0.02706579

# type = "risk" (risk score)

as.numeric(predict(fit, type = "risk"))[1:10]

# [1] 1.2414342 1.1095408 0.8863035 0.9030515 0.9552185 1.2414342 1.1095408

# [8] 1.1736362 0.8379001 0.9732688

(exp(lung$age * 0.0187) / exp(mean(lung$age) * 0.0187))[1:10]

# [1] 1.2411448 1.1094165 0.8864188 0.9031508 0.9552657 1.2411448 1.1094165

# [8] 1.1734337 0.8380598 0.9732972

Simulate data

More

- This pdf file from data.princeton.edu contains estimation, hypothesis testing, time varying covariates and baseline survival estimation.

- Survival analysis: basic terms, the exponential model, censoring, examples in R and JAGS

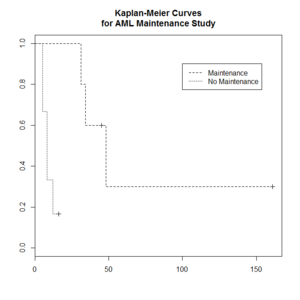

Kaplan–Meier curve

The Kaplan–Meier estimator (the product limit estimator) is a nonparametric estimator of the survival function from lifetime data. In medical research, it is often used to measure the fraction of patients living for a certain amount of time after treatment.

The "+" sign marks censored observations, and a vertical drop in the curve (not a '+') means there is at least one death at that time.

When the treatment is effective, the KM curve of the treatment group usually lies above that of the control group.

The Y-axis (the probability that a member of a given population will have a lifetime exceeding time t) is often called

- Cumulative probability

- Cumulative survival

- Percent survival

- Probability without event

- Proportion alive/surviving

- Survival

- Survival probability

> library(survival)

> plot(leukemia.surv <- survfit(Surv(time, status) ~ x, data = aml[7:17,] ) , lty=2:3) # a (small) subset

> aml[7:17,]

time status x

7 31 1 Maintained

8 34 1 Maintained

9 45 0 Maintained

10 48 1 Maintained

11 161 0 Maintained

12 5 1 Nonmaintained

13 5 1 Nonmaintained

14 8 1 Nonmaintained

15 8 1 Nonmaintained

16 12 1 Nonmaintained

17 16 0 Nonmaintained

> legend(100, .9, c("Maintenance", "No Maintenance"), lty = 2:3)

> title("Kaplan-Meier Curves\nfor AML Maintenance Study")

- Kaplan-Meier estimator from the wikipedia.

- Two papers (this and this) describe the steps to calculate the KM estimate.
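Below is the product-limit calculation for the "Maintained" rows shown above (aml rows 7 to 11). The rows are typed in by hand so the sketch is self-contained. At each distinct death time, S(t) is multiplied by (1 - d/n), where d is the number of deaths and n the number still at risk.

```r
library(survival)

km_by_hand <- function(time, status) {
  ev <- sort(unique(time[status == 1]))                        # distinct death times
  n_risk  <- sapply(ev, function(t) sum(time >= t))            # at risk just before t
  n_event <- sapply(ev, function(t) sum(time == t & status == 1))
  data.frame(time = ev, n.risk = n_risk, n.event = n_event,
             surv = cumprod(1 - n_event / n_risk))
}

time   <- c(31, 34, 45, 48, 161)   # aml[7:11, ], Maintained group
status <- c(1, 1, 0, 1, 0)         # 0 = censored ("+" on the plot)

km <- km_by_hand(time, status)     # 4/5 = 0.8, then 0.8 * 3/4 = 0.6, then 0.6 * 1/2 = 0.3
sf <- summary(survfit(Surv(time, status) ~ 1))
```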

Estimate survival probability

See this post.

library(survival)

km <- survfit(Surv(time, status) ~ 1, data = veteran)

survest <- stepfun(km$time, c(1, km$surv))

# Now survest is a function that can be evaluated at any time.

survest(0:100)

plot(survest) # dispatches to plot.stepfun()

We can also use the plot() function with type = "s" to visualize a step function.

# Assume x and y have the same length.

plot(y ~ x, type = "s")

Elements of Statistical Learning

- Book homepage

- From Linear Models to Machine Learning by Norman Matloff

Bagging

Chapter 8 of the book.

- Bootstrap mean is approximately a posterior average.

- Bootstrap aggregation or bagging average: Average the prediction over a collection of bootstrap samples, thereby reducing its variance. The bagging estimate is defined by

- [math]\displaystyle{ \hat{f}_{bag}(x) = \frac{1}{B}\sum_{b=1}^B \hat{f}^{*b}(x). }[/math]
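This minimal sketch implements the bagging estimate with B regression trees from rpart, a recommended package that ships with R. The data are simulated. The data-generating function and B = 50 are arbitrary choices for illustration.

```r
library(rpart)

bag_predict <- function(formula, data, newdata, B = 50) {
  preds <- replicate(B, {
    idx <- sample(nrow(data), replace = TRUE)   # bootstrap sample Z*b
    fit <- rpart(formula, data = data[idx, ])   # f*b fitted on Z*b
    predict(fit, newdata = newdata)
  })
  rowMeans(preds)                               # (1/B) * sum over b of f*b(x)
}

set.seed(1)
d <- data.frame(x = runif(200, 0, 2 * pi))
d$y <- sin(d$x) + rnorm(200, sd = 0.3)
grid <- data.frame(x = seq(0, 2 * pi, length.out = 50))

f_bag <- bag_predict(y ~ x, d, grid)
f_one <- predict(rpart(y ~ x, data = d), newdata = grid)   # single tree, for comparison
```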

Where Bagging Might Work Better Than Boosting

Boosting

- Ch8.2 Bagging, Random Forests and Boosting of An Introduction to Statistical Learning and the code.

- An Attempt To Understand Boosting Algorithm

- gbm package. An implementation of extensions to Freund and Schapire's AdaBoost algorithm and Friedman's gradient boosting machine. Includes regression methods for least squares, absolute loss, t-distribution loss, quantile regression, logistic, multinomial logistic, Poisson, Cox proportional hazards partial likelihood, AdaBoost exponential loss, Huberized hinge loss, and Learning to Rank measures (LambdaMart).

- https://www.biostat.wisc.edu/~kendzior/STAT877/illustration.pdf

- http://www.is.uni-freiburg.de/ressourcen/business-analytics/10_ensemblelearning.pdf and exercise

AdaBoost

AdaBoost.M1 by Freund and Schapire (1997):

The error rate on the training sample is [math]\displaystyle{ \bar{err} = \frac{1}{N} \sum_{i=1}^N I(y_i \neq G(x_i)), }[/math]

Sequentially apply the weak classification algorithm to repeatedly modified versions of the data, thereby producing a sequence of weak classifiers [math]\displaystyle{ G_m(x), m=1,2,\dots,M. }[/math]

The predictions from all of them are combined through a weighted majority vote to produce the final prediction: [math]\displaystyle{ G(x) = sign[\sum_{m=1}^M \alpha_m G_m(x)]. }[/math] Here [math]\displaystyle{ \alpha_1,\alpha_2,\dots,\alpha_M }[/math] are computed by the boosting algorithm and weight the contribution of each respective [math]\displaystyle{ G_m(x) }[/math]. Their effect is to give higher influence to the more accurate classifiers in the sequence.
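This compact AdaBoost.M1 sketch follows the description above, using the weight update as given in The Elements of Statistical Learning. Labels are coded -1/+1, and decision stumps serve as the weak classifier. Both the stump learner and the toy data are illustrative assumptions. The toy data can be separated by one stump, so the example only checks the mechanics.

```r
# Weak learner: the decision stump with the smallest weighted error
fit_stump <- function(X, y, w) {
  best <- list(err = Inf)
  for (j in seq_len(ncol(X))) {
    for (s in unique(X[, j])) {
      for (dir in c(1, -1)) {
        pred <- ifelse(X[, j] <= s, dir, -dir)
        err <- sum(w * (pred != y)) / sum(w)
        if (err < best$err) best <- list(j = j, s = s, dir = dir, err = err)
      }
    }
  }
  best
}

predict_stump <- function(st, X) ifelse(X[, st$j] <= st$s, st$dir, -st$dir)

adaboost_m1 <- function(X, y, M = 20) {
  w <- rep(1 / nrow(X), nrow(X))               # initialize observation weights
  stumps <- vector("list", M)
  alpha <- numeric(M)
  for (m in seq_len(M)) {
    st <- fit_stump(X, y, w)                   # fit G_m using weights w
    err <- min(max(st$err, 1e-10), 1 - 1e-10)  # weighted error, kept away from 0 and 1
    alpha[m] <- log((1 - err) / err)           # alpha_m
    miss <- predict_stump(st, X) != y
    w <- w * exp(alpha[m] * miss)              # up-weight misclassified points
    w <- w / sum(w)
    stumps[[m]] <- st
  }
  list(stumps = stumps, alpha = alpha)
}

predict_adaboost <- function(model, X) {
  votes <- sapply(seq_along(model$alpha), function(m)
    model$alpha[m] * predict_stump(model$stumps[[m]], X))
  sign(rowSums(votes))                         # G(x) = sign(sum_m alpha_m G_m(x))
}

X <- matrix(c(1:10, 3, 1, 4, 1, 5, 9, 2, 6, 5, 3), ncol = 2)   # toy features
y <- ifelse(X[, 1] > 5, 1, -1)                                 # toy labels
model <- adaboost_m1(X, y, M = 10)
train_err <- mean(predict_adaboost(model, X) != y)
```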

Dropout regularization

DART: Dropout Regularization in Boosting Ensembles

Gradient descent

- An overview of Gradient descent optimization algorithms

- Simple Gradient Descent on R for linear regression
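This is a minimal batch gradient descent for least squares. It minimizes (1/n)||y - Xβ||² with a fixed learning rate of 0.1, an assumed value that is stable for this simulated predictor. The result is checked against lm().

```r
gd_lm <- function(x, y, lr = 0.1, iters = 5000) {
  X <- cbind(1, x)                      # add intercept column
  beta <- rep(0, ncol(X))
  n <- nrow(X)
  for (k in seq_len(iters)) {
    grad <- -2 / n * crossprod(X, y - X %*% beta)   # gradient of the mean squared error
    beta <- beta - lr * drop(grad)
  }
  beta
}

set.seed(1)
x <- rnorm(100)
y <- 1 + 2 * x + rnorm(100)
b_gd <- gd_lm(x, y)
b_lm <- unname(coef(lm(y ~ x)))
```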

Classification and Regression Trees (CART)

Construction of the tree classifier

- Node proportion

- [math]\displaystyle{ p(1|t) + \dots + p(6|t) =1 }[/math] where [math]\displaystyle{ p(j|t) }[/math] define the node proportions (the proportion of class j in node t). Here we assume there are 6 classes.

- Impurity of node t

- [math]\displaystyle{ i(t) }[/math] is a nonnegative function [math]\displaystyle{ \phi }[/math] of [math]\displaystyle{ p(1|t), \dots, p(6|t) }[/math] such that [math]\displaystyle{ \phi(1/6,1/6,\dots,1/6) }[/math] is the maximum and [math]\displaystyle{ \phi(1,0,\dots,0)=0, \phi(0,1,0,\dots,0)=0, \dots, \phi(0,0,0,0,0,1)=0 }[/math]. That is, the node impurity is largest when all classes are equally mixed together in it, and smallest when the node contains only one class.

- Gini index of impurity

- [math]\displaystyle{ i(t) = \sum_{j \neq k} p(j|t)\, p(k|t) = 1 - \sum_{j=1}^6 p(j|t)^2. }[/math] An alternative is the entropy impurity [math]\displaystyle{ i(t) = - \sum_{j=1}^6 p(j|t) \log p(j|t). }[/math]

- Goodness of the split s on node t

- [math]\displaystyle{ \Delta i(s, t) = i(t) -p_Li(t_L) - p_Ri(t_R), }[/math] where a proportion [math]\displaystyle{ p_L }[/math] of the cases in t go into the left node [math]\displaystyle{ t_L }[/math] and a proportion [math]\displaystyle{ p_R }[/math] go into the right node [math]\displaystyle{ t_R }[/math].

A tree was grown in the following way: At the root node [math]\displaystyle{ t_1 }[/math], a search was made through all candidate splits to find that split [math]\displaystyle{ s^* }[/math] which gave the largest decrease in impurity;

- [math]\displaystyle{ \Delta i(s^*, t_1) = \max_{s} \Delta i(s, t_1). }[/math]

- Class character of a terminal node was determined by the plurality rule. Specifically, if [math]\displaystyle{ p(j_0|t)=\max_j p(j|t) }[/math], then t was designated as a class [math]\displaystyle{ j_0 }[/math] terminal node.
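These definitions translate directly into R: the Gini and entropy impurities, the goodness of split Δi(s, t), and a search over the cut points "x <= c" of one numeric variable for s*. The toy data are made up.

```r
gini    <- function(p) 1 - sum(p^2)                        # = sum_{j != k} p(j|t) p(k|t)
entropy <- function(p) { p <- p[p > 0]; -sum(p * log(p)) }

node_props <- function(y) as.numeric(table(y)) / length(y)  # p(j|t) for classes present

# Goodness of split: Delta i(s, t) = i(t) - p_L i(t_L) - p_R i(t_R)
split_gain <- function(y, go_left, impurity = gini) {
  p_L <- mean(go_left)
  impurity(node_props(y)) -
    p_L * impurity(node_props(y[go_left])) -
    (1 - p_L) * impurity(node_props(y[!go_left]))
}

# Largest decrease in impurity over all cut points of x
best_split <- function(x, y, impurity = gini) {
  vals <- sort(unique(x))
  cuts <- vals[-length(vals)]            # the largest value would send every case left
  gains <- sapply(cuts, function(cut_pt) split_gain(y, x <= cut_pt, impurity))
  c(cut = cuts[which.max(gains)], gain = max(gains))
}

x <- c(1, 2, 3, 4, 5, 6)
y <- c("a", "a", "a", "b", "b", "b")
best_split(x, y)   # cutting at 3 separates the classes: gain = 0.5 - 0 - 0
```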

R packages

Supervised Classification, Logistic and Multinomial

Variable selection and variable importance plot

Variable selection and cross-validation

- http://freakonometrics.hypotheses.org/19925

- http://ellisp.github.io/blog/2016/06/05/bootstrap-cv-strategies/

Variable selection for mode regression

http://www.tandfonline.com/doi/full/10.1080/02664763.2017.1342781 Chen & Zhou, Journal of Applied Statistics, June 2017

Neural network

- Build your own neural network in R

- (Video) 10.2: Neural Networks: Perceptron Part 1 - The Nature of Code from the Coding Train. The book THE NATURE OF CODE by DANIEL SHIFFMAN

Support vector machine (SVM)

- Improve SVM tuning through parallelism by using the foreach and doParallel packages.

Quadratic Discriminant Analysis (qda), KNN

Machine Learning. Stock Market Data, Part 3: Quadratic Discriminant Analysis and KNN

glmnet

- Glmnet Vignette

- https://www.rdocumentation.org/packages/glmnet/versions/2.0-10/topics/glmnet

- glmnetUtils: quality of life enhancements for elastic net regression with glmnet

- When the LASSO fails???

- Mixing parameter: alpha=1 is the lasso penalty, and alpha=0 the ridge penalty.

Ridge regression
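Ridge regression has a closed form: for centered data, β̂ = (XᵀX + λI)⁻¹Xᵀy. Below is a minimal base-R sketch; the λ values and the mtcars predictors are arbitrary choices. glmnet scales its penalty differently (alpha = 0 above), so its lambda is not directly comparable to this one.

```r
ridge <- function(X, y, lambda) {
  Xc <- scale(X, center = TRUE, scale = FALSE)   # center so the intercept is not penalized
  yc <- y - mean(y)
  beta <- solve(crossprod(Xc) + lambda * diag(ncol(Xc)), crossprod(Xc, yc))
  b0 <- mean(y) - sum(colMeans(X) * beta)
  c(`(Intercept)` = b0, setNames(drop(beta), colnames(X)))
}

X <- as.matrix(mtcars[, c("wt", "hp", "disp")])
y <- mtcars$mpg
ridge(X, y, lambda = 0)     # no penalty: ordinary least squares
ridge(X, y, lambda = 100)   # coefficients shrunk toward zero
```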

Cross Validation

.632 bootstrap

Create partitions

set.seed(), sample.split() (caTools package), createDataPartition() and createFolds() (caret package) functions.

k-fold cross validation with modelr and broom
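This is a base-R sketch of k-fold cross-validation for lm(), used here instead of the modelr/broom approach linked above, with mtcars as an example. With k = n it reduces to leave-one-out CV. For lm, LOOCV has the closed form mean((e_i/(1 - h_ii))^2), which gives a convenient check.

```r
kfold_mse <- function(formula, data, k = 5) {
  folds <- sample(rep(seq_len(k), length.out = nrow(data)))   # random fold labels
  resp <- all.vars(formula)[1]
  sq_err <- numeric(nrow(data))
  for (i in seq_len(k)) {
    test <- folds == i
    fit <- lm(formula, data = data[!test, ])                  # train on the other folds
    sq_err[test] <- (data[[resp]][test] - predict(fit, newdata = data[test, ]))^2
  }
  mean(sq_err)   # pooled out-of-fold mean squared error
}

set.seed(1)
kfold_mse(mpg ~ wt, mtcars, k = 5)
kfold_mse(mpg ~ wt + hp, mtcars, k = 5)
```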

Clustering

k-means clustering

- Assumptions, a post from varianceexplained.org.

Hierarchical clustering

For the mth cluster, define the Error Sum of Squares [math]\displaystyle{ ESS_m }[/math] as the sum of squared deviations (squared Euclidean distances) from the cluster centroid: [math]\displaystyle{ ESS_m = \sum_{l=1}^{n_m}\sum_{k=1}^p (x_{ml,k} - \bar{x}_{m,k})^2 }[/math], in which [math]\displaystyle{ \bar{x}_{m,k} = (1/n_m) \sum_{l=1}^{n_m} x_{ml,k} }[/math] is the mean of the mth cluster for the kth variable, [math]\displaystyle{ x_{ml,k} }[/math] being the score on the kth variable [math]\displaystyle{ (k=1,\dots,p) }[/math] for the lth object [math]\displaystyle{ (l=1,\dots,n_m) }[/math] in the mth cluster [math]\displaystyle{ (m=1,\dots,g) }[/math].

If there are g clusters, define the Total Error Sum of Squares as [math]\displaystyle{ ESS = \sum_{m=1}^{g} ESS_m }[/math].

At each step, consider the union of every possible pair of clusters.

Combine the 2 clusters whose combination results in the smallest increase in ESS.

Comments:

- Ward's method tends to join clusters with a small number of observations, and it is strongly biased toward producing clusters with the same shape and with roughly the same number of observations.

- It is also very sensitive to outliers. See Milligan (1980).
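The merge rule can be checked numerically in R on simulated points. With method = "ward.D2" on Euclidean distances, hclust() reports each merge height h so that h^2/2 is the increase in ESS caused by that merge. Summing h^2/2 over the first n - g merges therefore gives the ESS of the g-cluster cut. Summing over all merges gives the total sum of squares at the root.

```r
ess <- function(X, cl) {
  # sum over clusters of squared Euclidean distances to the cluster centroid
  sum(sapply(split(seq_len(nrow(X)), cl), function(idx)
    sum(scale(X[idx, , drop = FALSE], center = TRUE, scale = FALSE)^2)))
}

set.seed(1)
X <- matrix(rnorm(40), ncol = 2)                 # 20 simulated points
hc <- hclust(dist(X), method = "ward.D2")

total_ss <- ess(X, rep(1, nrow(X)))              # ESS with a single cluster
ess3 <- ess(X, cutree(hc, k = 3))                # ESS of the 3-cluster solution
```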

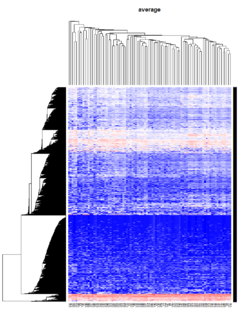

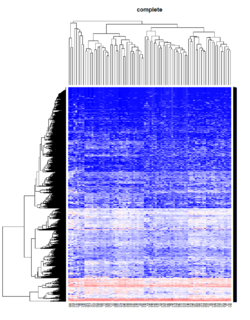

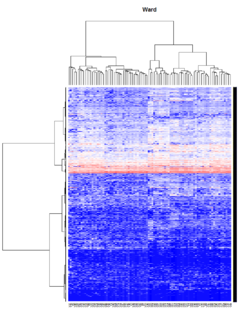

Take pomeroy data (7129 x 90) for an example:

library(gplots)

lr = read.table("C:/ArrayTools/Sample datasets/Pomeroy/Pomeroy -Project/NORMALIZEDLOGINTENSITY.txt")

lr = as.matrix(lr)

method = "average" # method <- "complete"; method <- "ward.D"; method <- "ward.D2"

hclust1 <- function(x) hclust(x, method= method)

heatmap.2(lr, col=bluered(75), hclustfun = hclust1, distfun = dist,

density.info="density", scale = "none",

key=FALSE, symkey=FALSE, trace="none",

main = method)

Density based clustering

http://www.r-exercises.com/2017/06/10/density-based-clustering-exercises/

Optimal number of clusters

Mixed Effect Model

- Paper by Laird and Ware 1982

- John Fox's Linear Mixed Models Appendix to An R and S-PLUS Companion to Applied Regression. Very clear. It provides 2 typical examples (hierarchical data and longitudinal data) of using the mixed effects model. It also uses Trellis plots to examine the data.

- Chapter 10 Random and Mixed Effects from Modern Applied Statistics with S by Venables and Ripley.

- (Book) lme4: Mixed-effects modeling with R by Douglas Bates.

- (Book) Mixed-effects modeling in S and S-Plus by José Pinheiro and Douglas Bates.

- Simulation and power analysis of generalized linear mixed models

Model selection criteria

Assessing the Accuracy of our models (R Squared, Adjusted R Squared, RMSE, MAE, AIC)

Overfitting

How to judge if a supervised machine learning model is overfitting or not?

Entropy

Definition

Entropy is defined by [math]\displaystyle{ H = -\sum_i p_i \log_2 p_i }[/math], the average of -log2(p) over the outcomes, where p_i is the probability of outcome i. Higher entropy represents higher unpredictability of an event.

Some examples:

- Fair 2-sided die (a fair coin): Entropy = -.5*log2(.5) - .5*log2(.5) = 1.

- Fair 6-sided die: Entropy = -6*1/6*log2(1/6) = 2.58

- Weighted 6-sided die: Consider pi=.1 for i=1,..,5 and p6=.5. Entropy = -5*.1*log2(.1) - .5*log2(.5) = 2.16 (more predictable than a fair 6-sided die).
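The three examples can be computed with H = -sum(p log2 p):

```r
entropy2 <- function(p) {
  p <- p[p > 0]            # 0 * log2(0) is taken as 0
  -sum(p * log2(p))
}

entropy2(c(0.5, 0.5))          # fair 2-sided die: 1 bit
entropy2(rep(1/6, 6))          # fair 6-sided die: log2(6)
entropy2(c(rep(0.1, 5), 0.5))  # weighted 6-sided die
```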

Use

When entropy is applied to variable selection, we want to select the class variable that gives the largest entropy difference between no class variable (entropy computed using the response only) and that class variable (entropy computed as the average of the entropies within each class level, weighted by the proportion of observations in each level). This variable is the most discriminative and gives the most information gain. For example,

- entropy (without any class)=.94,

- entropy(var 1) = .69,

- entropy(var 2)=.91,

- entropy(var 3)=.725.

We will choose variable 1 since it gives the largest gain (.94 - .69) compared to the other variables (.94 -.91, .94 -.725).

Why is picking the attribute with the most information gain beneficial? It reduces entropy the most, and a decrease in entropy means a decrease in unpredictability, i.e. an increase in predictability.

Consider a split of a continuous variable. Where should we cut the continuous variable to create a binary partition with the highest gain? Suppose cut point c1 creates an entropy .9 and another cut point c2 creates an entropy .1. We should choose c2.
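Information gain is the entropy of the response minus the weighted average of the within-level entropies. The counts below are the classic 14-day "play tennis" toy example, with Outlook values sunny (2 yes/3 no), overcast (4/0) and rain (3/2). They are used here only because they reproduce the .94 and .69 figures above; the source does not say which data produced those numbers.

```r
entropy2 <- function(p) { p <- p[p > 0]; -sum(p * log2(p)) }
H <- function(y) entropy2(as.numeric(table(y)) / length(y))

# Weighted entropy after splitting y by the levels of a class variable g
cond_entropy <- function(y, g) {
  w <- as.numeric(table(g)) / length(g)      # proportion of observations per level
  h <- as.numeric(tapply(y, g, H))           # entropy within each level
  sum(w * h)
}

play    <- c(rep("yes", 2), rep("no", 3), rep("yes", 4), rep("yes", 3), rep("no", 2))
outlook <- c(rep("sunny", 5), rep("overcast", 4), rep("rain", 5))

H(play)                                   # about 0.940
cond_entropy(play, outlook)               # about 0.694
H(play) - cond_entropy(play, outlook)     # information gain, about 0.247
```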

Related

In addition to information gain, the Gini (dʒiːni) index is another metric used in decision trees. See the Wikipedia page on decision tree learning.

Ensembles

Combining classifiers. Pro: better classification performance. Con: time consuming.

Bagging

Draw N bootstrap samples and summarize the results (averaging for regression problems, majority vote for classification problems). Bagging decreases variance without changing bias, so it does not help much with underfit (high-bias) models.

Random forest

Variable importance: if you scramble the values of a variable and the accuracy of the forest does not change much, then the variable is not very important.

Why is it useful to compute variable importance? It makes the model's predictions easier to interpret; it does not improve the prediction performance.

Random forests have the advantages of being easy to run in parallel and suitable for small n, large p problems.
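The scrambling idea can be sketched for any model with a predict() method. To stay in base R, this sketch uses lm() on mtcars plus a pure-noise column instead of a random forest; randomForest's own permutation importance is computed on out-of-bag samples. The model, data and number of repetitions are illustrative assumptions.

```r
perm_importance <- function(fit, data, response, vars, n_rep = 20) {
  mse <- function(d) mean((d[[response]] - predict(fit, newdata = d))^2)
  base <- mse(data)
  sapply(vars, function(v) {
    mean(replicate(n_rep, {
      d <- data
      d[[v]] <- sample(d[[v]])       # scramble one variable
      mse(d)
    })) - base                       # average increase in error after scrambling
  })
}

set.seed(1)
dat <- mtcars
dat$noise <- rnorm(nrow(dat))        # unrelated to mpg by construction
fit <- lm(mpg ~ wt + hp + noise, data = dat)
imp <- perm_importance(fit, dat, "mpg", c("wt", "hp", "noise"))
sort(imp, decreasing = TRUE)
```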

Boosting

Instead of selecting data points randomly with the bootstrap, boosting favors the misclassified points.

Algorithm:

- Initialize the weights

- Repeat

- resample with respect to weights

- retrain the model

- recompute weights

Since boosting is inherently sequential while bagging can be run in parallel, bagging has an advantage over boosting when the data are very large.

p-values

p-values

- Prob(data at least as extreme as observed | H0), not Prob(H0 | data)

- https://en.wikipedia.org/wiki/P-value

- THE ASA SAYS NO TO P-VALUES The problem is that with large samples, significance tests pounce on tiny, unimportant departures from the null hypothesis. We have the opposite problem with small samples: The power of the test is low, and we will announce that there is “no significant effect” when in fact we may have too little data to know whether the effect is important.

- It’s not the p-values’ fault

- Exploring P-values with Simulations in R from Stable Markets.

- p-value and effect size. http://journals.sagepub.com/doi/full/10.1177/1745691614553988
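One quick simulation in that spirit uses arbitrary sample sizes and replicate counts. When H0 is true, p-values are uniformly distributed, so about 5% of them fall below .05. When H0 is false, the rejection rate is the power.

```r
set.seed(123)
p_null <- replicate(2000, t.test(rnorm(10), rnorm(10))$p.value)   # H0 true: equal means
mean(p_null < 0.05)                    # close to 0.05
hist(p_null, breaks = 20)              # roughly flat

p_alt <- replicate(2000, t.test(rnorm(10), rnorm(10, mean = 1))$p.value)  # H0 false
mean(p_alt < 0.05)                     # the power, well above 0.05
```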

Distribution of p values in medical abstracts

http://www.ncbi.nlm.nih.gov/pubmed/26608725

T-statistic

Let [math]\displaystyle{ \scriptstyle\hat\beta }[/math] be an estimator of parameter β in some statistical model. Then a t-statistic for this parameter is any quantity of the form

- [math]\displaystyle{ t_{\hat{\beta}} = \frac{\hat\beta - \beta_0}{\mathrm{s.e.}(\hat\beta)}, }[/math]

where β0 is a non-random, known constant, and [math]\displaystyle{ \scriptstyle s.e.(\hat\beta) }[/math] is the standard error of the estimator [math]\displaystyle{ \scriptstyle\hat\beta }[/math].

Two sample test assuming equal variance

The t statistic to test whether the means are different can be calculated as follows:

- [math]\displaystyle{ t = \frac{\bar {X}_1 - \bar{X}_2}{s_{X_1X_2} \cdot \sqrt{\frac{1}{n_1}+\frac{1}{n_2}}} }[/math]

where

- [math]\displaystyle{ s_{X_1X_2} = \sqrt{\frac{(n_1-1)s_{X_1}^2+(n_2-1)s_{X_2}^2}{n_1+n_2-2}}. }[/math]

[math]\displaystyle{ s_{X_1X_2} }[/math] is an estimator of the common/pooled standard deviation of the two samples. The square-root of a pooled variance estimator is known as a pooled standard deviation.

The degrees of freedom are [math]\displaystyle{ n_1 + n_2 - 2. }[/math]
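Below is the pooled t statistic computed by hand on two made-up samples and compared with t.test(var.equal = TRUE).

```r
x1 <- c(5.1, 4.9, 6.2, 5.8, 6.0, 5.5)        # made-up sample 1
x2 <- c(6.3, 6.9, 7.1, 6.0, 7.4, 6.8, 7.0)   # made-up sample 2
n1 <- length(x1); n2 <- length(x2)

s_p <- sqrt(((n1 - 1) * var(x1) + (n2 - 1) * var(x2)) / (n1 + n2 - 2))   # pooled SD
t_pooled <- (mean(x1) - mean(x2)) / (s_p * sqrt(1 / n1 + 1 / n2))
df_pooled <- n1 + n2 - 2
p_pooled <- 2 * pt(-abs(t_pooled), df_pooled)                           # two-sided p-value

tt <- t.test(x1, x2, var.equal = TRUE)
```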

Two sample test assuming unequal variance

The t statistic (Behrens-Welch test statistic) to test whether the population means are different is calculated as:

- [math]\displaystyle{ t = {\overline{X}_1 - \overline{X}_2 \over s_{\overline{X}_1 - \overline{X}_2}} }[/math]

where

- [math]\displaystyle{ s_{\overline{X}_1 - \overline{X}_2} = \sqrt{{s_1^2 \over n_1} + {s_2^2 \over n_2}}. }[/math]

Here [math]\displaystyle{ s_i^2 }[/math] is the unbiased estimator of the variance of sample i (i = 1, 2).

The degrees of freedom are approximated using the Welch-Satterthwaite equation

- [math]\displaystyle{ df = { ({s_1^2 \over n_1} + {s_2^2 \over n_2})^2 \over {({s_1^2 \over n_1})^2 \over n_1-1} + {({s_2^2 \over n_2})^2 \over n_2-1} }. }[/math]
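A sketch with made-up samples follows. It computes the Welch statistic and the Satterthwaite degrees of freedom by hand and compares them with `t.test()`, whose default (`var.equal = FALSE`) is the unequal-variance version.

```r
# Two hypothetical samples with visibly different spreads (illustrative numbers only)
x1 <- c(5.1, 4.8, 6.0, 5.7, 5.3, 4.9)
x2 <- c(6.2, 5.9, 6.8, 6.1, 7.0, 8.4, 3.9)
n1 <- length(x1); n2 <- length(x2)

# Squared standard errors of each sample mean, s_i^2 / n_i
v1 <- var(x1) / n1
v2 <- var(x2) / n2

# Welch t statistic and Satterthwaite degrees of freedom
t_welch  <- (mean(x1) - mean(x2)) / sqrt(v1 + v2)
df_welch <- (v1 + v2)^2 / (v1^2 / (n1 - 1) + v2^2 / (n2 - 1))

# t.test() uses the Welch version by default (var.equal = FALSE)
tt <- t.test(x1, x2)
print(rbind(manual = c(t = t_welch, df = df_welch),
            t.test = c(unname(tt$statistic), unname(tt$parameter))))
```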

Unpooled vs pooled methods

Z-value/Z-score

If the population parameters are known, then rather than computing the t-statistic, one can compute the z-score.
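A minimal one-sample z-test sketch follows. It assumes the null mean `mu0` and the population standard deviation `sigma` are known; both values, and the data, are hypothetical. The p-value then comes from the standard normal distribution instead of a t distribution.

```r
# Hypothetical sample; mu0 and sigma are assumed known
x     <- c(5.1, 4.9, 5.3, 5.0, 5.2)
mu0   <- 5
sigma <- 0.2

# z statistic: standardize the sample mean with the known sigma
z <- (mean(x) - mu0) / (sigma / sqrt(length(x)))
p_two_sided <- 2 * pnorm(-abs(z))
print(c(z = z, p = p_two_sided))
```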

Nonparametric test: Wilcoxon rank sum test

Sensitive to differences in location

Nonparametric test: Kolmogorov-Smirnov test

Sensitive to differences in both the shape and the location of the distribution functions of the two groups
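The contrast between the two tests can be sketched with simulated data: two groups with the same location (mean 0) but different spreads (SD 1 vs. 3). The settings are arbitrary illustrations. One would typically expect `ks.test()` to flag the difference, since it compares whole empirical distribution functions, while the rank sum test, which targets a location shift, often does not. With a single seed, the exact p-values are not guaranteed.

```r
set.seed(1)
x <- rnorm(200, mean = 0, sd = 1)
y <- rnorm(200, mean = 0, sd = 3)   # same location, wider spread

p_wilcox <- wilcox.test(x, y)$p.value   # Wilcoxon rank sum test
p_ks     <- ks.test(x, y)$p.value       # two-sample Kolmogorov-Smirnov test
print(c(wilcoxon = p_wilcox, ks = p_ks))
```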

Empirical Bayes method

See Bioconductor's limma, RnBeads, IMA, minfi packages.

ANOVA

- A simple ANOVA

- Repeated measures ANOVA in R Exercises

- Mixed models for ANOVA designs with one observation per unit of observation and cell of the design

- afex package

- Experiment designs for Agriculture

Sample Size

- Why Within-Subject Designs Require Fewer Participants than Between-Subject Designs

- Calculating required sample size in R and SAS

- Power analysis and sample size calculation for Agriculture

Goodness of fit

Chi-square tests

Contingency Tables

Odds ratio and Risk ratio

The ratio of the odds of an event occurring in one group to the odds of it occurring in another group

|  | drawn | not drawn | total |
|---|---|---|---|
| white | A | B | Wh |
| black | C | D | Bk |

- Odds Ratio = (A / B) / (C / D) = (AD) / (BC)

- Risk Ratio = (A / Wh) / (C / Bk)
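Both ratios can be computed from a 2x2 table laid out as above. The sketch below uses hypothetical counts. Note that `fisher.test()`, used further down, reports a conditional maximum-likelihood estimate of the odds ratio. That estimate is generally close to, but not identical to, the cross-product ratio AD/BC.

```r
# Hypothetical counts: rows = white/black, columns = drawn/not drawn
tab <- matrix(c(20, 10, 80, 90), nrow = 2,
              dimnames = list(c("white", "black"), c("drawn", "not drawn")))
A <- tab["white", "drawn"]; B <- tab["white", "not drawn"]
C <- tab["black", "drawn"]; D <- tab["black", "not drawn"]

odds_ratio <- (A / B) / (C / D)               # equals (A*D)/(B*C)
risk_ratio <- (A / (A + B)) / (C / (C + D))   # Wh = A + B, Bk = C + D
print(c(OR = odds_ratio, RR = risk_ratio))
```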

Hypergeometric, One-tailed Fisher exact test

- https://www.bioconductor.org/help/course-materials/2009/SeattleApr09/gsea/ (Are interesting features over-represented? or are selected genes more often in the GO category than expected by chance?)

- https://en.wikipedia.org/wiki/Hypergeometric_distribution. In a test for over-representation of successes in the sample, the hypergeometric p-value is calculated as the probability of randomly drawing k or more successes from the population in n total draws. In a test for under-representation, the p-value is the probability of randomly drawing k or fewer successes.

- http://stats.stackexchange.com/questions/62235/one-tailed-fishers-exact-test-and-the-hypergeometric-distribution

- Two sided hypergeometric test

- https://www.biostars.org/p/90662/ When computing the p-value (upper-tail probability) for a discrete distribution, use 1 - P(X ≤ observed - 1) = P(X ≥ observed) rather than 1 - P(X ≤ observed), which would exclude the observed value itself.

- https://stat.ethz.ch/R-manual/R-devel/library/stats/html/Hypergeometric.html p(x) = choose(m, x) choose(n, k-x) / choose(m+n, k).

|  | drawn | not drawn | total |
|---|---|---|---|
| white | x |  | m |
| black | k-x |  | n |
| total | k |  | m+n |

For example, with k=100, m=100 and m+n=1000:

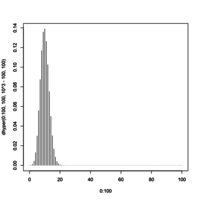

> 1 - phyper(10, 100, 10^3-100, 100, log.p=F)

[1] 0.4160339

> a <- dhyper(0:100, 100, 10^3-100, 100)

> cumsum(rev(a))

[1] 1.566158e-140 1.409558e-135 3.136408e-131 3.067025e-127 1.668004e-123 5.739613e-120 1.355765e-116

[8] 2.325536e-113 3.018276e-110 3.058586e-107 2.480543e-104 1.642534e-101 9.027724e-99 4.175767e-96

[15] 1.644702e-93 5.572070e-91 1.638079e-88 4.210963e-86 9.530281e-84 1.910424e-81 3.410345e-79

[22] 5.447786e-77 7.821658e-75 1.013356e-72 1.189000e-70 1.267638e-68 1.231736e-66 1.093852e-64

[29] 8.900857e-63 6.652193e-61 4.576232e-59 2.903632e-57 1.702481e-55 9.240350e-54 4.650130e-52

[36] 2.173043e-50 9.442985e-49 3.820823e-47 1.441257e-45 5.074077e-44 1.669028e-42 5.134399e-41

[43] 1.478542e-39 3.989016e-38 1.009089e-36 2.395206e-35 5.338260e-34 1.117816e-32 2.200410e-31

[50] 4.074043e-30 7.098105e-29 1.164233e-27 1.798390e-26 2.617103e-25 3.589044e-24 4.639451e-23

[57] 5.654244e-22 6.497925e-21 7.042397e-20 7.198582e-19 6.940175e-18 6.310859e-17 5.412268e-16

[64] 4.377256e-15 3.338067e-14 2.399811e-13 1.626091e-12 1.038184e-11 6.243346e-11 3.535115e-10

[71] 1.883810e-09 9.442711e-09 4.449741e-08 1.970041e-07 8.188671e-07 3.193112e-06 1.167109e-05

[78] 3.994913e-05 1.279299e-04 3.828641e-04 1.069633e-03 2.786293e-03 6.759071e-03 1.525017e-02

[85] 3.196401e-02 6.216690e-02 1.120899e-01 1.872547e-01 2.898395e-01 4.160339e-01 5.550192e-01

[92] 6.909666e-01 8.079129e-01 8.953150e-01 9.511926e-01 9.811343e-01 9.942110e-01 9.986807e-01

[99] 9.998018e-01 9.999853e-01 1.000000e+00

# Density plot

plot(0:100, dhyper(0:100, 100, 10^3-100, 100), type='h')

Moreover,

1 - phyper(q=10, m, n, k)

= 1 - sum_{x=0}^{x=10} dhyper(x, m, n, k)

= 1 - sum(a[1:11]) # R's index starts from 1, so a[1:11] holds x = 0, ..., 10.
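The identity above can be checked numerically; the variable names are illustrative. The last line uses `lower.tail = FALSE`, which returns P(X > 10) directly. That is generally preferable for very small tail probabilities, where computing 1 - P(X ≤ 10) loses precision.

```r
m <- 100; n <- 10^3 - 100; k <- 100
a <- dhyper(0:100, m, n, k)      # P(X = x) for x = 0, ..., 100

p1 <- 1 - phyper(10, m, n, k)                  # P(X >= 11) = 1 - P(X <= 10)
p2 <- 1 - sum(a[1:11])                         # a[1:11] holds x = 0..10
p3 <- sum(a[12:101])                           # sum the upper tail directly
p4 <- phyper(10, m, n, k, lower.tail = FALSE)  # no subtraction from 1
print(c(p1, p2, p3, p4))
```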

Another example is the data from the functional annotation tool in DAVID.

|  | gene list | not gene list | total |
|---|---|---|---|
| pathway | 3 (q) |  | 40 (m) |
| not in pathway | 297 |  | 29960 (n) |
| total | 300 (k) |  | 30000 |

The one-tailed p-value from the hypergeometric test is calculated as 1 - phyper(3-1, 40, 29960, 300) = 0.0074.
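The `3-1` in the call above is the discrete-distribution adjustment mentioned earlier: P(X ≥ 3) = 1 - P(X ≤ 2). The sketch below contrasts it with the off-by-one version. It also checks the result against a direct sum of the point probabilities.

```r
q <- 3; m <- 40; n <- 29960; k <- 300

p_upper <- phyper(q - 1, m, n, k, lower.tail = FALSE)  # P(X >= 3), includes the observed count
p_off   <- phyper(q, m, n, k, lower.tail = FALSE)      # P(X >= 4), wrongly excludes it
p_sum   <- sum(dhyper(q:min(m, k), m, n, k))           # P(X = 3) + ... + P(X = 40)
print(c(correct = p_upper, off_by_one = p_off, direct = p_sum))
```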

Fisher's exact test

Following the above example from the DAVID website, the following R commands calculate Fisher's exact test for independence in 2x2 contingency tables. Note that this matrix uses the row totals 40 and 29960 of the table above as cell counts.

> fisher.test(matrix(c(3, 40, 297, 29960), nr=2)) # alternative = "two.sided" by default

Fisher's Exact Test for Count Data

data: matrix(c(3, 40, 297, 29960), nr = 2)

p-value = 0.008853

alternative hypothesis: true odds ratio is not equal to 1

95 percent confidence interval:

1.488738 23.966741

sample estimates:

odds ratio

7.564602

> fisher.test(matrix(c(3, 40, 297, 29960), nr=2), alternative="greater")

Fisher's Exact Test for Count Data

data: matrix(c(3, 40, 297, 29960), nr = 2)

p-value = 0.008853

alternative hypothesis: true odds ratio is greater than 1

95 percent confidence interval:

1.973 Inf

sample estimates:

odds ratio

7.564602

> fisher.test(matrix(c(3, 40, 297, 29960), nr=2), alternative="less")

Fisher's Exact Test for Count Data

data: matrix(c(3, 40, 297, 29960), nr = 2)

p-value = 0.9991

alternative hypothesis: true odds ratio is less than 1

95 percent confidence interval:

0.00000 20.90259

sample estimates:

odds ratio

7.564602

From the documentation of fisher.test

Usage:

fisher.test(x, y = NULL, workspace = 200000, hybrid = FALSE,

control = list(), or = 1, alternative = "two.sided",

conf.int = TRUE, conf.level = 0.95,

simulate.p.value = FALSE, B = 2000)

- For 2 by 2 cases, p-values are obtained directly using the (central or non-central) hypergeometric distribution.

- For 2 by 2 tables, the null of conditional independence is equivalent to the hypothesis that the odds ratio equals one.

- The alternative for a one-sided test is based on the odds ratio, so ‘alternative = "greater"’ is a test of the odds ratio being bigger than ‘or’.

- Two-sided tests are based on the probabilities of the tables, and take as ‘more extreme’ all tables with probabilities less than or equal to that of the observed table, the p-value being the sum of such probabilities.
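The first point can be checked against the hypergeometric calculation above. This sketch builds the 2x2 table from the cells implied by the DAVID margins: 40 - 3 = 37 genes are in the pathway but not in the gene list, and 29960 - 297 = 29663 are in neither. With that table, the one-sided (`"greater"`) Fisher p-value is exactly the hypergeometric upper tail 1 - phyper(3-1, 40, 29960, 300).

```r
# Cells implied by the margins of the DAVID table
#                    in pathway   not in pathway
# in gene list            3            297         (k = 300)
# not in gene list       37          29663
#                     (m = 40)     (n = 29960)
tab <- matrix(c(3, 37, 297, 29663), nrow = 2,
              dimnames = list(c("in gene list", "not in gene list"),
                              c("in pathway", "not in pathway")))

p_fisher <- fisher.test(tab, alternative = "greater")$p.value
p_hyper  <- phyper(3 - 1, 40, 29960, 300, lower.tail = FALSE)
print(c(fisher = p_fisher, hypergeometric = p_hyper))
```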

Confidence vs Credibility Intervals

http://freakonometrics.hypotheses.org/18117

Confidence interval vs prediction interval

Confidence intervals tell you how well you have determined the mean E(Y|X). Prediction intervals tell you where you can expect to see the next data point sampled. That is, with Ŷ denoting the estimated mean E(Y|X), the CI is computed from Var(Ŷ), while the PI is computed from Var(Ŷ + e) = Var(Ŷ) + σ², which adds the variance of the error e of a new observation.

- http://www.graphpad.com/support/faqid/1506/

- http://en.wikipedia.org/wiki/Prediction_interval

- http://robjhyndman.com/hyndsight/intervals/

- https://stat.duke.edu/courses/Fall13/sta101/slides/unit7lec3H.pdf
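A sketch using the built-in `cars` data set and two hypothetical new `speed` values shows the difference between the two intervals. `predict()` returns both intervals. Rebuilding their half-widths from `se.fit` shows that the prediction interval simply adds the residual variance σ² under the square root.

```r
# Simple linear regression on the built-in cars data set
fit <- lm(dist ~ speed, data = cars)
new_speed <- data.frame(speed = c(10, 20))   # hypothetical new x values

conf_int <- predict(fit, new_speed, interval = "confidence", level = 0.95)
pred_int <- predict(fit, new_speed, interval = "prediction", level = 0.95)
print(conf_int)
print(pred_int)

# Rebuild both half-widths from the standard error of the fitted mean
pr        <- predict(fit, new_speed, se.fit = TRUE)
tcrit     <- qt(0.975, df = df.residual(fit))
sigma_hat <- summary(fit)$sigma                        # residual standard error
half_ci   <- tcrit * pr$se.fit                         # Var(Y-hat) only
half_pi   <- tcrit * sqrt(pr$se.fit^2 + sigma_hat^2)   # Var(Y-hat) + error variance
print(cbind(half_ci, half_pi))
```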

Power Analysis

Power analysis for default Bayesian t-tests

http://daniellakens.blogspot.com/2016/01/power-analysis-for-default-bayesian-t.html

Using simulation for power analysis: an example based on a stepped wedge study design

https://www.rdatagen.net/post/using-simulation-for-power-analysis-an-example/

Power analysis and sample size calculation for Agriculture

http://r-video-tutorial.blogspot.com/2017/07/power-analysis-and-sample-size.html
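The simulation idea from the linked posts can be reduced to its simplest case, a two-sample t-test. Generate many data sets under an assumed effect, and the power is the fraction of tests that reject H0. The settings below (20 per group, difference 1, SD 1) are illustrative assumptions, not taken from the posts. `power.t.test()` gives the analytic answer for comparison.

```r
set.seed(42)
n_per_group <- 20     # illustrative settings, not from the linked posts
delta       <- 1      # assumed true difference in means
sd_pop      <- 1      # assumed common standard deviation
alpha       <- 0.05
n_sim       <- 2000

# Simulate many experiments and record whether the t-test rejects H0
reject <- replicate(n_sim, {
  g1 <- rnorm(n_per_group, mean = delta, sd = sd_pop)
  g2 <- rnorm(n_per_group, mean = 0, sd = sd_pop)
  t.test(g1, g2, var.equal = TRUE)$p.value < alpha
})
power_sim <- mean(reject)

power_analytic <- power.t.test(n = n_per_group, delta = delta, sd = sd_pop,
                               sig.level = alpha)$power
print(c(simulated = power_sim, analytic = power_analytic))
```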

Counter/Special Examples

Suppose X is a normally-distributed random variable with zero mean. Let Y = X^2. Clearly X and Y are not independent: if you know X, you also know Y. And if you know Y, you know the absolute value of X.

The covariance of X and Y is

Cov(X,Y) = E(XY) - E(X)E(Y) = E(X^3) - 0*E(Y) = E(X^3) = 0,

because the distribution of X is symmetric around zero. Thus the correlation r(X,Y) = Cov(X,Y)/sqrt(Var(X)Var(Y)) = 0, and we have a situation where the variables are not independent, yet have (linear) correlation r(X,Y) = 0.

This example shows that a linear correlation coefficient captures nothing about the quadratic dependence of Y on X.

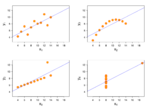
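A quick simulation reproduces the example, with an arbitrary seed and sample size. The Pearson correlation between X and X^2 comes out near zero. Correlating |X| with Y, a relationship that is monotone, shows that the dependence is still there.

```r
set.seed(2017)
x <- rnorm(1e5)          # X ~ N(0, 1)
y <- x^2                 # Y is a deterministic function of X

r_xy    <- cor(x, y)       # near 0: no linear association
r_abs_y <- cor(abs(x), y)  # strongly positive: Y still depends on X
print(c(r_xy = r_xy, r_abs_y = r_abs_y))
```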

Anscombe quartet

Four datasets with nearly identical summary statistics: the same mean of x, the same mean of y, the same variance of x, (almost) the same variance of y, the same correlation between x and y, and the same fitted linear regression line.
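R ships the quartet as the built-in `anscombe` data set (columns `x1`..`x4`, `y1`..`y4`), so the claim above can be checked directly. This sketch tabulates the summary statistics and regression coefficients for each of the four sets.

```r
# Summary statistics and least-squares fit for each of the four data sets
stats <- sapply(1:4, function(i) {
  x <- anscombe[[paste0("x", i)]]
  y <- anscombe[[paste0("y", i)]]
  fit <- lm(y ~ x)
  c(mean_x = mean(x), mean_y = mean(y), var_x = var(x), var_y = var(y),
    cor_xy = cor(x, y),
    intercept = unname(coef(fit)[1]), slope = unname(coef(fit)[2]))
})
colnames(stats) <- paste0("set", 1:4)
print(round(stats, 3))
```

Plotting the four sets, for example with `plot(anscombe$x2, anscombe$y2)`, shows how different they look despite these matching numbers.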

The real meaning of spurious correlations

https://nsaunders.wordpress.com/2017/02/03/the-real-meaning-of-spurious-correlations/

library(ggplot2)

library(magrittr) # provides the %>% pipe used below

set.seed(123)

spurious_data <- data.frame(x = rnorm(500, 10, 1),

y = rnorm(500, 10, 1),

z = rnorm(500, 30, 3))

cor(spurious_data$x, spurious_data$y)

# [1] -0.05943856

spurious_data %>% ggplot(aes(x, y)) + geom_point(alpha = 0.3) +

theme_bw() + labs(title = "Plot of y versus x for 500 observations with N(10, 1)")

cor(spurious_data$x / spurious_data$z, spurious_data$y / spurious_data$z)

# [1] 0.4517972

spurious_data %>% ggplot(aes(x/z, y/z)) + geom_point(aes(color = z), alpha = 0.5) +

theme_bw() + geom_smooth(method = "lm") +

scale_color_gradientn(colours = c("red", "white", "blue")) +

labs(title = "Plot of y/z versus x/z for 500 observations with x,y N(10, 1); z N(30, 3)")

spurious_data$z <- rnorm(500, 30, 6)

cor(spurious_data$x / spurious_data$z, spurious_data$y / spurious_data$z)

# [1] 0.8424597

spurious_data %>% ggplot(aes(x/z, y/z)) + geom_point(aes(color = z), alpha = 0.5) +

theme_bw() + geom_smooth(method = "lm") +

scale_color_gradientn(colours = c("red", "white", "blue")) +

labs(title = "Plot of y/z versus x/z for 500 observations with x,y N(10, 1); z N(30, 6)")

Time series

Structural change

Structural Changes in Global Warming

Dictionary

- Prognosis is the probability that an event or diagnosis will result in a particular outcome.