ICC

Revision as of 13:43, 7 December 2021

Basic

ICC: intra-class correlation

- https://en.wikipedia.org/wiki/Intraclass_correlation (in the one-way random model there, the random effect <math>\alpha_j</math> should correspond to subjects, not raters)

- (Early definition of ICC) For paired data, the key difference between this ICC and the interclass (Pearson) correlation is that the data are pooled to estimate a single mean and variance. This is because, in settings where an intraclass correlation is desired, the pairs are considered unordered.

- Intraclass Correlation from Statistics How To

- Shrout, P.E., Fleiss, J.L. (1979), Intraclass correlation: uses in assessing rater reliability, Psychological Bulletin, 86, 420-428.

- ICC(1,1): each subject is measured by a different set of k randomly selected raters;

- ICC(2,1): a random sample of k raters is selected, and each subject is measured by the same k raters;

- ICC(3,1): as ICC(2,1), but the k raters are fixed.

- (Modern ICC definitions) <math>Y_{ij} = \mu + \alpha_i + \varepsilon_{ij}</math>, where <math>\alpha_i</math> is the random effect of subject i (variance <math>\sigma_\alpha^2</math>) and <math>\varepsilon_{ij}</math> is the residual error (variance <math>\sigma_\varepsilon^2</math>).

- <math>ICC(1,1) = \frac{\sigma_\alpha^2}{\sigma_\alpha^2+\sigma_\varepsilon^2}.</math>

- See also the formula and estimation here. For intuition, compare examples where the first case has a large <math>\sigma_\alpha^2</math> and the other does not.

- Why ICC(1,1) as defined above equals the intraclass correlation <math>Corr(Y_{ij}, Y_{ik})</math>, <math>j \neq k</math>, is derived in Calculating the Intraclass Correlation Coefficient (ICC) in SAS. Excellent!!

- Intraclass correlation – A discussion and demonstration of basic features, Liljequist et al., PLOS One 2019. A good, comprehensive review with formulas (including a derivation of the expected mean square relations) and some simulation studies. Its table also shows the differences between agreement and consistency.

- A Guideline of Selecting and Reporting Intraclass Correlation Coefficients for Reliability Research Koo 2016.

- Intraclass correlation coefficient vs. F-test (one-way ANOVA)?

- Good ICC, bad CV or vice-versa, how to interpret? Two examples show the difference between between-sample variability (think of using one value, such as the average, to represent a sample) and within-sample variability.

- Intraclass Correlation Coefficient in R: the functions icc() [irr package] and ICC() [psych package] are compared on a simple example.

- Simulation: https://stats.stackexchange.com/q/135345; see also chapter 4 of Headrick, T. C. (2010). Statistical simulation: Power method polynomials and other transformations. Boca Raton, FL: Chapman & Hall.

- https://bookdown.org/roback/bookdown-bysh/ch-corrdata.html

- https://www.sas.com/content/dam/SAS/en_ca/User%20Group%20Presentations/Health-User-Groups/Maki-InterraterReliability-Apr2014.pdf
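The derivation behind ICC(1,1) = Corr(Y_ij, Y_ik) is short. A sketch, assuming the usual one-way random-effects conditions (not spelled out above): <math>\alpha_i</math> and <math>\varepsilon_{ij}</math> are mutually independent with mean 0 and variances <math>\sigma_\alpha^2</math> and <math>\sigma_\varepsilon^2</math>. The constant <math>\mu</math> drops out of every covariance.

```latex
\begin{aligned}
\operatorname{Cov}(Y_{ij}, Y_{ik})
  &= \operatorname{Cov}(\alpha_i + \varepsilon_{ij},\ \alpha_i + \varepsilon_{ik})
   = \operatorname{Var}(\alpha_i) = \sigma_\alpha^2, \qquad j \neq k,\\
\operatorname{Var}(Y_{ij})
  &= \operatorname{Var}(\alpha_i) + \operatorname{Var}(\varepsilon_{ij})
   = \sigma_\alpha^2 + \sigma_\varepsilon^2,\\
\operatorname{Corr}(Y_{ij}, Y_{ik})
  &= \frac{\sigma_\alpha^2}{\sqrt{\operatorname{Var}(Y_{ij})\,\operatorname{Var}(Y_{ik})}}
   = \frac{\sigma_\alpha^2}{\sigma_\alpha^2+\sigma_\varepsilon^2} = ICC(1,1).
\end{aligned}
```

Two ratings of the same subject share only <math>\alpha_i</math>, so a large <math>\sigma_\alpha^2</math> relative to <math>\sigma_\varepsilon^2</math> means a high ICC.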
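To make the early (paired-data) definition concrete, here is a minimal base-R sketch; the function name early_icc and the toy numbers are illustrative, not from any package. It pools both members of each pair into one mean and one variance, checks that swapping members within pairs leaves the estimate unchanged while the Pearson correlation changes, and then, assuming pairs simulated from the one-way model with k = 2, shows the estimate landing near <math>\sigma_\alpha^2/(\sigma_\alpha^2+\sigma_\varepsilon^2)</math>.

```r
# Early intraclass correlation for N pairs (x_n, y_n):
# both members of a pair share one pooled mean and one pooled variance.
early_icc <- function(x, y) {
  N <- length(x)
  m <- (sum(x) + sum(y)) / (2 * N)                   # pooled mean
  s2 <- (sum((x - m)^2) + sum((y - m)^2)) / (2 * N)  # pooled variance
  sum((x - m) * (y - m)) / (N * s2)
}

# 1) Order within a pair does not matter for the early ICC,
#    but it does matter for the Pearson (interclass) correlation.
x <- c(3, 5, 6, 8, 9)
y <- c(4, 5, 8, 7, 10)
swap <- c(TRUE, FALSE, TRUE, FALSE, FALSE)  # swap members of pairs 1 and 3
x2 <- ifelse(swap, y, x)
y2 <- ifelse(swap, x, y)
c(early = early_icc(x, y), early_swapped = early_icc(x2, y2))  # identical
c(pearson = cor(x, y), pearson_swapped = cor(x2, y2))          # differ

# 2) Pairs from Y_ij = mu + alpha_i + eps_ij (k = 2 measurements per subject):
#    the early ICC estimates sigma_a^2 / (sigma_a^2 + sigma_e^2).
set.seed(2021)
N <- 5000
sigma_a <- 2; sigma_e <- 1                  # true ICC = 4 / (4 + 1) = 0.8
alpha <- rnorm(N, 0, sigma_a)
z1 <- 10 + alpha + rnorm(N, 0, sigma_e)
z2 <- 10 + alpha + rnorm(N, 0, sigma_e)
early_icc(z1, z2)                           # close to 0.8
```

Because the pooled mean ignores which member came first, the early ICC is also slightly smaller than Pearson's r on the toy data, where the two columns have different means.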

R packages

The main input is a matrix of n subjects x p raters (subjects in rows, raters in columns). In the intraclass sense each subject is a class/group: the ICC measures how similar the ratings of the same subject are.

- psych: ICC()

- irr: icc() for one-way or two-way models. This works on my 30k x 58 data. The default (model = "oneway") gives ICC(1). It can also compute ICC(A,1) (agreement) and ICC(C,1) (consistency).

- psy: icc(). No options are provided. On the 30k x 58 data it failed with the error "vector memory exhausted (limit reached?)".

- rptR:

Negative ICC

- Can ICC values be negative?

- Negative Values of the Intraclass Correlation Coefficient Are Not Theoretically Possible

- What to do with negative ICC values? Adjust the test or interpret it differently?

- Intraclass correlation – A discussion and demonstration of basic features on PLOS One. A negative ICC(1) is simply a bad (unfortunate) estimate. This may occur by chance, especially if the sample size (number of subjects n) is small.
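A small base-R simulation of the last point, as a sketch: the parameter values (k = 3, sigma_a = 1, sigma_e = 3, n = 10 versus n = 200) and the helper names icc1_hat and sim_once are arbitrary choices for illustration. The true ICC(1) is 0.1, yet the one-way ANOVA estimate (BMS - WMS) / (BMS + (k-1)WMS) is negative in a sizable share of replicates with 10 subjects and only rarely with 200.

```r
# One-way ANOVA estimate of ICC(1) from an n x k matrix (rows = subjects)
icc1_hat <- function(y) {
  n <- nrow(y); k <- ncol(y)
  msb <- k * sum((rowMeans(y) - mean(y))^2) / (n - 1)  # between subjects
  msw <- sum((y - rowMeans(y))^2) / (n * (k - 1))      # within subjects
  (msb - msw) / (msb + (k - 1) * msw)
}

# One replicate of Y_ij = mu + alpha_i + eps_ij with
# true ICC = sigma_a^2 / (sigma_a^2 + sigma_e^2) = 1 / (1 + 9) = 0.1
sim_once <- function(n, k = 3, sigma_a = 1, sigma_e = 3) {
  alpha <- rnorm(n, 0, sigma_a)  # recycled down each column: row i gets alpha[i]
  y <- 10 + alpha + matrix(rnorm(n * k, 0, sigma_e), nrow = n, ncol = k)
  icc1_hat(y)
}

set.seed(42)
est_small <- replicate(2000, sim_once(n = 10))
est_large <- replicate(2000, sim_once(n = 200))

# Share of negative estimates for each sample size
c(n10 = mean(est_small < 0), n200 = mean(est_large < 0))
```

A negative estimate here says nothing about the population ICC; it only means the between-subject mean square happened to fall below the within-subject one.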

Examples

psych package data

This shows that ICC1 from psych::ICC() equals ICC(1) from irr::icc(model = "oneway"), i.e., ICC(1,1).

R> library(psych)

R> (o <- ICC(anxiety, lmer=FALSE) )

Call: ICC(x = anxiety, lmer = FALSE)

Intraclass correlation coefficients

                         type  ICC   F df1 df2     p lower bound upper bound
Single_raters_absolute   ICC1 0.18 1.6  19  40 0.094     -0.0405        0.44
Single_random_raters     ICC2 0.20 1.8  19  38 0.056     -0.0045        0.45
Single_fixed_raters      ICC3 0.22 1.8  19  38 0.056     -0.0073        0.48
Average_raters_absolute ICC1k 0.39 1.6  19  40 0.094     -0.1323        0.70
Average_random_raters   ICC2k 0.43 1.8  19  38 0.056     -0.0136        0.71
Average_fixed_raters    ICC3k 0.45 1.8  19  38 0.056     -0.0222        0.73

Number of subjects = 20 Number of Judges = 3

R> library(irr)

R> (o2 <- icc(anxiety, model="oneway")) # subjects are treated as random effects

Single Score Intraclass Correlation

Model: oneway

Type : consistency

Subjects = 20

Raters = 3

ICC(1) = 0.175

F-Test, H0: r0 = 0 ; H1: r0 > 0

F(19,40) = 1.64 , p = 0.0939

95%-Confidence Interval for ICC Population Values:

-0.077 < ICC < 0.484

R> o$results["Single_raters_absolute", "ICC"]

[1] 0.1750224

R> o2$value

[1] 0.1750224

R> icc(anxiety, model="twoway", type = "consistency")

Single Score Intraclass Correlation

Model: twoway

Type : consistency

Subjects = 20

Raters = 3

ICC(C,1) = 0.216

F-Test, H0: r0 = 0 ; H1: r0 > 0

F(19,38) = 1.83 , p = 0.0562

95%-Confidence Interval for ICC Population Values:

-0.046 < ICC < 0.522

R> icc(anxiety, model="twoway", type = "agreement")

Single Score Intraclass Correlation

Model: twoway

Type : agreement

Subjects = 20

Raters = 3

ICC(A,1) = 0.198

F-Test, H0: r0 = 0 ; H1: r0 > 0

F(19,39.7) = 1.83 , p = 0.0543

95%-Confidence Interval for ICC Population Values:

-0.039 < ICC < 0.494

library(magrittr)
library(tidyr)
library(ggplot2)
library(irr)    # icc()
library(psych)  # ICC()

set.seed(1)

r1 <- round(rnorm(20, 10, 4))

r2 <- round(r1 + 10 + rnorm(20, 0, 2))

r3 <- round(r1 + 20 + rnorm(20, 0, 2))

df <- data.frame(r1, r2, r3) %>% pivot_longer(cols=1:3)

df %>% ggplot(aes(x=name, y=value)) + geom_point()

df0 <- cbind(r1, r2, r3)

icc(df0, model="oneway") # ICC(1) = -0.262 --> negative

# Systematic shifts between raters can mess up ICC(1); see Wikipedia.

icc(df0, model="twoway", type = "consistency") # ICC(C,1) = 0.846 --> makes sense

icc(df0, model="twoway", type = "agreement") # ICC(A,1) = 0.106 --> low: absolute agreement also penalizes the rater shifts

ICC(df0)

Call: ICC(x = df0, lmer = T)

Intraclass correlation coefficients

                         type   ICC     F df1 df2       p lower bound upper bound
Single_raters_absolute   ICC1 -0.26  0.38  19  40 9.9e-01     -0.3613      -0.085
Single_random_raters     ICC2  0.11 17.43  19  38 2.9e-13      0.0020       0.293
Single_fixed_raters      ICC3  0.85 17.43  19  38 2.9e-13      0.7353       0.920
Average_raters_absolute ICC1k -1.65  0.38  19  40 9.9e-01     -3.9076      -0.307
Average_random_raters   ICC2k  0.26 17.43  19  38 2.9e-13      0.0061       0.555
Average_fixed_raters    ICC3k  0.94 17.43  19  38 2.9e-13      0.8929       0.972

Number of subjects = 20 Number of Judges = 3
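One way to see why ICC(A,1) is so low in this example is to estimate the variance components from the two-way mean squares. A base-R sketch that re-creates the same simulated data (variable names are illustrative): the systematic +10/+20 rater shifts produce a large rater variance, which ICC(C,1) leaves out of its denominator and ICC(A,1) includes. ICC(1) is negative because the one-way model folds those shifts into the within-subject mean square, which then exceeds the between-subject mean square. Since irr uses these same mean-square formulas, the values should match the output above.

```r
# Re-create the shifted-rater data from the example above
set.seed(1)
r1 <- round(rnorm(20, 10, 4))
r2 <- round(r1 + 10 + rnorm(20, 0, 2))
r3 <- round(r1 + 20 + rnorm(20, 0, 2))
x <- cbind(r1, r2, r3)
n <- nrow(x); k <- ncol(x)

# Mean squares: between subjects (BMS), between raters (JMS),
# two-way residual (EMS) and one-way within subjects (WMS)
grand <- mean(x)
ss_total <- sum((x - grand)^2)
bms <- k * sum((rowMeans(x) - grand)^2) / (n - 1)
jms <- n * sum((colMeans(x) - grand)^2) / (k - 1)
ems <- (ss_total - (n - 1) * bms - (k - 1) * jms) / ((n - 1) * (k - 1))
wms <- (ss_total - (n - 1) * bms) / (n * (k - 1))

# Moment estimates of the variance components
var_subj  <- (bms - ems) / k   # subjects; population value 4^2 = 16
var_rater <- (jms - ems) / n   # raters; driven by the 0 / +10 / +20 shifts
var_resid <- ems               # residual noise
round(c(subject = var_subj, rater = var_rater, residual = var_resid), 2)

icc1  <- (bms - wms) / (bms + (k - 1) * wms)            # shifts inflate WMS
icc_c <- var_subj / (var_subj + var_resid)              # ignores rater variance
icc_a <- var_subj / (var_subj + var_rater + var_resid)  # penalizes it
round(c(ICC1 = icc1, ICC_C1 = icc_c, ICC_A1 = icc_a), 3)
```

Written this way, icc_c and icc_a are algebraically the same as (BMS - EMS)/(BMS + (k-1)EMS) and (BMS - EMS)/(BMS + (k-1)EMS + k(JMS - EMS)/n).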

Wine rating

Intraclass Correlation: Multiple Approaches from David C. Howell. The data appeared in the paper by Shrout and Fleiss (1979).

library(magrittr)
library(ggplot2)
library(psych); library(lme4)

rating <- matrix(c(9, 2, 5, 8,

6, 1, 3, 2,

8, 4, 6, 8,

7, 1, 2, 6,

10, 5, 6, 9,

6, 2, 4, 7), ncol=4, byrow=TRUE)

(o <- ICC(rating))

o$results[, 1:2]

#                          type       ICC
# Single_raters_absolute   ICC1 0.1657423  # matches icc(rating, "oneway")
# Single_random_raters     ICC2 0.2897642  # matches icc(rating, "twoway", "agreement")
# Single_fixed_raters      ICC3 0.7148415  # matches icc(rating, "twoway", "consistency")
# Average_raters_absolute ICC1k 0.4427981
# Average_random_raters   ICC2k 0.6200510
# Average_fixed_raters    ICC3k 0.9093159

# Plot

rating2 <- data.frame(rating) %>%

dplyr::bind_cols(data.frame(subj = paste0("s", 1:nrow(rating)))) %>%

tidyr::pivot_longer(1:4, names_to="group", values_to="y")

rating2 %>% ggplot(aes(x=group, y=y)) + geom_point()

library(irr)
icc(rating, "oneway")
# Single Score Intraclass Correlation
#
# Model: oneway
# Type : consistency
#
# Subjects = 6
# Raters = 4
# ICC(1) = 0.166
#
# F-Test, H0: r0 = 0 ; H1: r0 > 0
# F(5,18) = 1.79 , p = 0.165
#
# 95%-Confidence Interval for ICC Population Values:
# -0.133 < ICC < 0.723
icc(rating, "twoway", "agreement")
# Single Score Intraclass Correlation
#
# Model: twoway
# Type : agreement
#
# Subjects = 6
# Raters = 4
# ICC(A,1) = 0.29
#
# F-Test, H0: r0 = 0 ; H1: r0 > 0
# F(5,4.79) = 11 , p = 0.0113
#
# 95%-Confidence Interval for ICC Population Values:
# 0.019 < ICC < 0.761
icc(rating, "twoway", "consistency")
# Single Score Intraclass Correlation
#
# Model: twoway
# Type : consistency
#
# Subjects = 6
# Raters = 4
# ICC(C,1) = 0.715
#
# F-Test, H0: r0 = 0 ; H1: r0 > 0
# F(5,15) = 11 , p = 0.000135
#
# 95%-Confidence Interval for ICC Population Values:
# 0.342 < ICC < 0.946

anova(aov(y ~ subj + group, rating2))

# Analysis of Variance Table

#

# Response: y

# Df Sum Sq Mean Sq F value Pr(>F)

# subj 5 56.208 11.242 11.027 0.0001346 ***

# group 3 97.458 32.486 31.866 9.454e-07 ***

# Residuals 15 15.292 1.019

# ---

# Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

(11.242 - (97.458+15.292)/18) / (11.242 + 3*(97.458+15.292)/18)

# [1] 0.165751 # ICC(1) = (BMS - WMS) / (BMS + (k-1)WMS)

# k = number of raters; WMS = (SS_group + SS_residual) / (n(k-1)) = (97.458 + 15.292)/18

(11.242 - 1.019) / (11.242 + 3*1.019 + 4*(32.486-1.019)/6)

# [1] 0.2897922 # ICC(2,1) = (BMS - EMS) / (BMS + (k-1)EMS + k(JMS-EMS)/n)

# n = number of subjects/targets

(11.242 - 1.019) / (11.242 + 3*1.019)

# [1] 0.7149451 # ICC(3,1) = (BMS - EMS) / (BMS + (k-1)EMS)
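The hand calculations above can be wrapped into one function. A minimal base-R sketch, assuming a complete n x k matrix with subjects in rows and raters in columns; icc_from_ms is a hypothetical helper, not a psych or irr function. It works directly from row and column means and never builds a long-format data frame, which may help with large inputs such as the 30k x 58 matrix mentioned earlier. On the Shrout and Fleiss data it agrees with ICC1, ICC2 and ICC3 from psych to five decimal places (ICC(2,1) is irr's ICC(A,1), ICC(3,1) is irr's ICC(C,1)).

```r
# Single-rater ICCs from the ANOVA mean squares of an n x k ratings matrix
# (rows = subjects/targets, columns = raters); complete data assumed.
icc_from_ms <- function(x) {
  x <- as.matrix(x)
  n <- nrow(x); k <- ncol(x)
  grand <- mean(x)
  ss_total <- sum((x - grand)^2)
  ss_subj  <- k * sum((rowMeans(x) - grand)^2)   # between subjects
  ss_rater <- n * sum((colMeans(x) - grand)^2)   # between raters
  ss_resid <- ss_total - ss_subj - ss_rater      # two-way residual
  bms <- ss_subj / (n - 1)                       # BMS
  jms <- ss_rater / (k - 1)                      # JMS
  ems <- ss_resid / ((n - 1) * (k - 1))          # EMS
  wms <- (ss_rater + ss_resid) / (n * (k - 1))   # WMS (one-way within subjects)
  c(ICC1   = (bms - wms) / (bms + (k - 1) * wms),
    ICC_A1 = (bms - ems) / (bms + (k - 1) * ems + k * (jms - ems) / n),
    ICC_C1 = (bms - ems) / (bms + (k - 1) * ems))
}

# Shrout and Fleiss (1979) wine rating data from above
rating <- matrix(c(9, 2, 5, 8,
                   6, 1, 3, 2,
                   8, 4, 6, 8,
                   7, 1, 2, 6,
                   10, 5, 6, 9,
                   6, 2, 4, 7), ncol = 4, byrow = TRUE)
round(icc_from_ms(rating), 5)
# ICC1 0.16574, ICC_A1 0.28976, ICC_C1 0.71484
```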

Wine rating2

Intraclass correlation (from Real Statistics Using Excel) with a simple example.

R> wine <- cbind(c(1,1,3,6,6,7,8,9), c(2,3,8,4,5,5,7,9),

c(0,3,1,3,5,6,7,9), c(1,2,4,3,6,2,9,8))

R> icc(wine, model="oneway")

Single Score Intraclass Correlation

Model: oneway

Type : consistency

Subjects = 8

Raters = 4

ICC(1) = 0.728

F-Test, H0: r0 = 0 ; H1: r0 > 0

F(7,24) = 11.7 , p = 2.18e-06

95%-Confidence Interval for ICC Population Values:

0.434 < ICC < 0.927

# For the one-way random model, the order of the raters within a subject does not matter

R> wine2 <- wine

R> for(j in 1:8) wine2[j, ] <- sample(wine[j,])

R> icc(wine2, model="oneway")

Single Score Intraclass Correlation

Model: oneway

Type : consistency

Subjects = 8

Raters = 4

ICC(1) = 0.728

F-Test, H0: r0 = 0 ; H1: r0 > 0

F(7,24) = 11.7 , p = 2.18e-06

95%-Confidence Interval for ICC Population Values:

0.434 < ICC < 0.927

R> icc(wine, model="twoway", type="agreement")

Single Score Intraclass Correlation

Model: twoway

Type : agreement

Subjects = 8

Raters = 4

ICC(A,1) = 0.728

F-Test, H0: r0 = 0 ; H1: r0 > 0

F(7,24) = 11.8 , p = 2.02e-06

95%-Confidence Interval for ICC Population Values:

0.434 < ICC < 0.927

R> icc(wine, model="twoway", type="consistency")

Single Score Intraclass Correlation

Model: twoway

Type : consistency

Subjects = 8

Raters = 4

ICC(C,1) = 0.729

F-Test, H0: r0 = 0 ; H1: r0 > 0

F(7,21) = 11.8 , p = 5.03e-06

95%-Confidence Interval for ICC Population Values:

0.426 < ICC < 0.928

Two-way fixed effects model

R> wine3 <- data.frame(wine) %>%

dplyr::bind_cols(data.frame(subj = paste0("s", 1:8))) %>%

tidyr::pivot_longer(1:4, names_to="group", values_to="y")

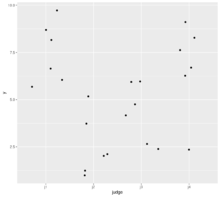

R> wine3 %>% ggplot(aes(x=group, y=y)) + geom_point()

R> anova(aov(y ~ subj + group, data = wine3))

Analysis of Variance Table

Response: y

Df Sum Sq Mean Sq F value Pr(>F)

subj 7 188.219 26.8884 11.7867 5.026e-06 ***

group 3 7.344 2.4479 1.0731 0.3818

Residuals 21 47.906 2.2813

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

R> anova(aov(y ~ group + subj, data = wine3))

Analysis of Variance Table

Response: y

Df Sum Sq Mean Sq F value Pr(>F)

group 3 7.344 2.4479 1.0731 0.3818

subj 7 188.219 26.8884 11.7867 5.026e-06 ***

Residuals 21 47.906 2.2812

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

R> library(car)

R> Anova(aov(y ~ subj + group, data = wine3))

Anova Table (Type II tests)

Response: y

Sum Sq Df F value Pr(>F)

subj 188.219 7 11.7867 5.026e-06 ***

group 7.344 3 1.0731 0.3818

Residuals 47.906 21

---

Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1
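The same mean squares reproduce the irr results for this data set. A short base-R check, with the mean squares copied from the ANOVA table above (variable names are illustrative). Because every subject is rated by every rater (a balanced design), the sequential and Type II tables agree regardless of term order. ICC(A,1) nearly equals ICC(C,1) here because the rater mean square (2.45) barely exceeds the residual mean square (2.28), so the extra term k(JMS - EMS)/n in the agreement denominator is tiny.

```r
# Mean squares copied from the two-way ANOVA table above
bms <- 26.8884   # subjects (BMS), df = 7
jms <- 2.4479    # raters / group (JMS), df = 3
ems <- 2.2813    # residuals (EMS), df = 21
n <- 8           # subjects
k <- 4           # raters

# One-way within-subject mean square pools the group and residual sums of squares
wms <- (7.344 + 47.906) / (n * (k - 1))

icc1  <- (bms - wms) / (bms + (k - 1) * wms)                        # irr: ICC(1)
icc_a <- (bms - ems) / (bms + (k - 1) * ems + k * (jms - ems) / n)  # irr: ICC(A,1)
icc_c <- (bms - ems) / (bms + (k - 1) * ems)                        # irr: ICC(C,1)
round(c(ICC1 = icc1, ICC_A1 = icc_a, ICC_C1 = icc_c), 3)
# gives 0.728, 0.728 and 0.729
```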