Statistics

Box-Cox transformation

Finding a transformation that makes the data approximately normal
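The sketch below is a minimal base-R way to choose the Box-Cox parameter λ. It transforms positive data with y(λ) = (y^λ - 1)/λ for λ ≠ 0 and log(y) for λ = 0. It then picks the λ on a grid that maximizes the profile log-likelihood, assuming the transformed values are i.i.d. normal (an intercept-only model). The helper names and the grid range are illustrative assumptions. `MASS::boxcox()` is the packaged alternative for fitted linear models.

```r
# Box-Cox transform of a positive vector y for a given lambda
boxcox_transform <- function(y, lambda) {
  if (abs(lambda) < 1e-8) log(y) else (y^lambda - 1) / lambda
}

# Profile log-likelihood of lambda (up to a constant), intercept-only normal model
boxcox_loglik <- function(y, lambda) {
  n <- length(y)
  z <- boxcox_transform(y, lambda)
  sigma2 <- mean((z - mean(z))^2)                     # MLE of the variance of z
  -n / 2 * log(sigma2) + (lambda - 1) * sum(log(y))   # second term is the Jacobian
}

# Grid search for the maximizing lambda (the grid is an assumption)
boxcox_lambda <- function(y, grid = seq(-2, 2, by = 0.01)) {
  stopifnot(all(y > 0))
  ll <- vapply(grid, function(l) boxcox_loglik(y, l), numeric(1))
  grid[which.max(ll)]
}

set.seed(123)
y <- rlnorm(500, meanlog = 0, sdlog = 1)   # log(y) is exactly normal, so lambda near 0
lambda_hat <- boxcox_lambda(y)
y_t <- boxcox_transform(y, lambda_hat)
```

For log-normal data the estimate should land near λ = 0, which corresponds to the log transform.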

Visualize the random effects

http://www.quantumforest.com/2012/11/more-sense-of-random-effects/
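The sketch below is independent of the linked post and is not its method. It assumes the nlme package, which ships with R as a recommended package, and its built-in Orthodont data. It fits a random-intercept model and draws a dot plot of the predicted random intercepts, one point per subject.

```r
library(nlme)  # assumption: nlme is installed (it ships with standard R builds)

# Random-intercept model: each Subject gets its own deviation from the common intercept
fit <- lme(distance ~ age, random = ~ 1 | Subject, data = Orthodont)

re <- ranef(fit)                    # data frame of predicted random effects (BLUPs)
re_int <- re[["(Intercept)"]]
names(re_int) <- rownames(re)       # subject labels

# Sorted dot plot; the dashed line marks zero deviation
dotchart(sort(re_int), xlab = "Predicted random intercept",
         main = "Random effects by Subject")
abline(v = 0, lty = 2)
```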

Sensitivity/Specificity/Accuracy

| | Predicted 1 | Predicted 0 | |
|---|---|---|---|
| True 1 | TP | FN | Sens = TP/(TP+FN) |
| True 0 | FP | TN | Spec = TN/(FP+TN) |

N = TP + FP + FN + TN

- Sensitivity = TP / (TP + FN)

- Specificity = TN / (TN + FP)

- Accuracy = (TP + TN) / N
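The three formulas above can be checked with a small R function. `classification_rates` is a hypothetical helper, and it assumes both the true labels and the predictions are coded 0/1.

```r
# Count the confusion-matrix cells and return sensitivity, specificity and accuracy
classification_rates <- function(truth, predicted) {
  tp <- sum(truth == 1 & predicted == 1)
  fn <- sum(truth == 1 & predicted == 0)
  fp <- sum(truth == 0 & predicted == 1)
  tn <- sum(truth == 0 & predicted == 0)
  n  <- tp + fp + fn + tn
  c(sensitivity = tp / (tp + fn),
    specificity = tn / (tn + fp),
    accuracy    = (tp + tn) / n)
}

truth     <- c(1, 1, 1, 1, 0, 0, 0, 0, 0, 0)
predicted <- c(1, 1, 1, 0, 0, 0, 0, 0, 1, 1)
rates <- classification_rates(truth, predicted)
# TP = 3, FN = 1, FP = 2, TN = 4, so sensitivity 0.75, specificity 4/6, accuracy 0.7
```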

ROC curve and Brier score
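The following is a minimal sketch that assumes predicted probabilities `p` for a 0/1 outcome `y`:

- **ROC curve.** It traces (false positive rate, true positive rate), that is (1 - specificity, sensitivity), as the classification threshold moves from high to low.
- **AUC.** It is computed with the rank (Mann-Whitney) formula, which equals the area under that curve.
- **Brier score.** It is the mean squared difference between the predicted probability and the observed outcome.

The function names are illustrative.

```r
# ROC points: one (fpr, tpr) pair per distinct threshold, starting at (0, 0)
roc_points <- function(p, y) {
  thresholds <- sort(unique(p), decreasing = TRUE)
  tpr <- vapply(thresholds, function(t) mean(p[y == 1] >= t), numeric(1))
  fpr <- vapply(thresholds, function(t) mean(p[y == 0] >= t), numeric(1))
  data.frame(threshold = c(Inf, thresholds), fpr = c(0, fpr), tpr = c(0, tpr))
}

# AUC via ranks (ties get average ranks)
auc <- function(p, y) {
  r <- rank(p)
  n1 <- sum(y == 1)
  n0 <- sum(y == 0)
  (sum(r[y == 1]) - n1 * (n1 + 1) / 2) / (n1 * n0)
}

# Brier score: mean squared error of the probability forecasts
brier <- function(p, y) mean((p - y)^2)

p <- c(0.1, 0.4, 0.35, 0.8)
y <- c(0, 0, 1, 1)
roc <- roc_points(p, y)
auc_val <- auc(p, y)       # 3 of the 4 positive/negative pairs are ranked correctly: 0.75
brier_val <- brier(p, y)   # (0.01 + 0.16 + 0.4225 + 0.04) / 4 = 0.158125

plot(roc$fpr, roc$tpr, type = "l", xlab = "1 - Specificity", ylab = "Sensitivity")
abline(0, 1, lty = 2)      # chance line
```

A lower Brier score is better (0 is a perfect forecast). The AUC only measures ranking and ignores calibration.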

Elements of Statistical Learning

Bagging

Chapter 8 of the book.

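- The bootstrap mean is approximately a posterior average.

- Bootstrap aggregation (bagging): for each bootstrap sample [math]\displaystyle{ Z^{*b}, b = 1, \dots, B }[/math], fit the model to obtain the prediction [math]\displaystyle{ \hat{f}^{*b}(x) }[/math]. Then average these predictions over the collection of bootstrap samples, which reduces variance. The bagging estimate is defined by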

- [math]\displaystyle{ \hat{f}_{bag}(x) = \frac{1}{B}\sum_{b=1}^B \hat{f}^{*b}(x). }[/math]
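The bagging estimate above can be sketched in R as follows. The choice of base learner is an assumption: a cubic polynomial fitted with `lm()`, whereas the book illustrates bagging mainly with trees. Any fitting function with a `predict()` method could be substituted. `bag_predict` is a hypothetical helper.

```r
# Bagging: fit the base learner on B bootstrap samples and average the predictions
bag_predict <- function(x, y, x_new, B = 100, degree = 3) {
  n <- length(x)
  preds <- matrix(NA_real_, nrow = length(x_new), ncol = B)
  for (b in seq_len(B)) {
    idx <- sample.int(n, n, replace = TRUE)                    # bootstrap sample Z*b
    fit <- lm(y ~ poly(x, degree, raw = TRUE),
              data = data.frame(x = x[idx], y = y[idx]))
    preds[, b] <- predict(fit, newdata = data.frame(x = x_new))  # f*b(x_new)
  }
  rowMeans(preds)                                              # f_bag = (1/B) sum_b f*b
}

set.seed(1)
x <- runif(100, -2, 2)
y <- sin(x) + rnorm(100, sd = 0.3)
x_new <- seq(-2, 2, length.out = 5)
f_bag <- bag_predict(x, y, x_new, B = 200)
```

Averaging changes the fit little for a smooth, stable learner like this one. The variance reduction matters most for unstable learners such as deep trees.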

Boosting

AdaBoost.M1 by Freund and Schapire (1997):

The error rate on the training sample is [math]\displaystyle{ \overline{\mathrm{err}} = \frac{1}{N} \sum_{i=1}^N I(y_i \neq G(x_i)), }[/math]

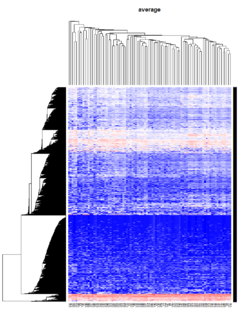

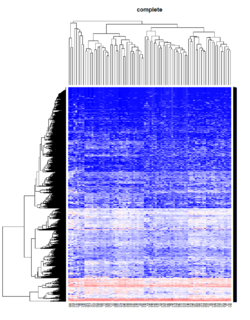

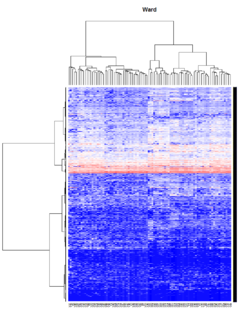
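The following is a sketch of AdaBoost.M1 as described in the book (Algorithm 10.1). It assumes decision stumps as the weak classifier [math]\displaystyle{ G_m }[/math] and labels coded -1/+1. The steps are:

1. Start with equal weights.
2. Fit a stump to the weighted data.
3. Compute its weighted error [math]\displaystyle{ \mathrm{err}_m }[/math].
4. Set [math]\displaystyle{ \alpha_m = \log((1 - \mathrm{err}_m)/\mathrm{err}_m) }[/math].
5. Multiply the weights of the misclassified points by [math]\displaystyle{ \exp(\alpha_m) }[/math].

The final classifier is the sign of the weighted vote. Rescaling the weights each round and clamping the error away from 0 and 1 are numerical safeguards added here. The helper names are hypothetical.

```r
# Weighted decision stump: search every feature j, threshold t and polarity s
fit_stump <- function(X, y, w) {
  best <- list(err = Inf)
  for (j in seq_len(ncol(X))) {
    for (t in unique(X[, j])) {
      for (s in c(1, -1)) {
        pred <- ifelse(X[, j] > t, s, -s)
        err <- sum(w[pred != y]) / sum(w)
        if (err < best$err) best <- list(j = j, t = t, s = s, err = err)
      }
    }
  }
  best
}

predict_stump <- function(stump, X) ifelse(X[, stump$j] > stump$t, stump$s, -stump$s)

adaboost_m1 <- function(X, y, M = 100) {
  N <- nrow(X)
  w <- rep(1 / N, N)                          # step 1: equal observation weights
  stumps <- vector("list", M)
  alpha <- numeric(M)
  for (m in seq_len(M)) {
    stumps[[m]] <- fit_stump(X, y, w)         # fit G_m using weights w
    miss <- predict_stump(stumps[[m]], X) != y
    err <- sum(w[miss]) / sum(w)              # weighted error err_m
    err <- min(max(err, 1e-10), 1 - 1e-10)    # guard log() against err = 0 or 1
    alpha[m] <- log((1 - err) / err)          # alpha_m
    w <- w * exp(alpha[m] * miss)             # up-weight misclassified points
    w <- w / sum(w)                           # rescale; err_m is unaffected
  }
  list(stumps = stumps, alpha = alpha)
}

# G(x) = sign(sum_m alpha_m G_m(x)); a zero score is mapped to +1
predict_adaboost <- function(model, X) {
  score <- numeric(nrow(X))
  for (m in seq_along(model$alpha)) {
    score <- score + model$alpha[m] * predict_stump(model$stumps[[m]], X)
  }
  ifelse(score >= 0, 1, -1)
}

set.seed(2)
X <- matrix(rnorm(400), ncol = 2)             # 200 points in two dimensions
y <- ifelse(X[, 1] + X[, 2] > 0, 1, -1)       # diagonal boundary: hard for one stump
model <- adaboost_m1(X, y, M = 100)
train_err <- mean(predict_adaboost(model, X) != y)   # training error rate, err-bar
```

A single stump misclassifies roughly a quarter of these points. The boosted vote builds an additive step-function approximation to the diagonal boundary.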

Hierarchical clustering

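For the kth cluster, define the Error Sum of Squares as the sum of squared deviations (squared Euclidean distances) from the cluster centroid, [math]\displaystyle{ ESS_k = \sum_{i \in S_k} \lVert x_i - \bar{x}_k \rVert^2, }[/math] where [math]\displaystyle{ S_k }[/math] is the set of observations in cluster k and [math]\displaystyle{ \bar{x}_k }[/math] is their centroid.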

If there are C clusters, define the Total Error Sum of Squares as [math]\displaystyle{ ESS = \sum_{k=1}^{C} ESS_k. }[/math]

At each step of Ward's method, consider the union of every possible pair of clusters.

Merge the two clusters whose union results in the smallest increase in ESS.
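The merge rule can be sketched directly from the ESS definitions in a naive form that is only suitable for tiny data. For real use, `hclust(d, method = "ward.D2")` on Euclidean distances is the standard route. The helper names and the toy data are illustrative. The example also checks a known identity: merging clusters A and B increases ESS by [math]\displaystyle{ \frac{n_A n_B}{n_A + n_B} \lVert \bar{x}_A - \bar{x}_B \rVert^2 }[/math].

```r
# ESS of the rows of X: squared Euclidean deviations from their centroid
ess <- function(X) sum(sweep(X, 2, colMeans(X))^2)

# Increase in total ESS if clusters A and B (vectors of row indices) are merged
delta_ess <- function(X, A, B) {
  ess(X[c(A, B), , drop = FALSE]) - ess(X[A, , drop = FALSE]) - ess(X[B, , drop = FALSE])
}

# Naive Ward agglomeration down to k clusters, starting from singletons
ward_naive <- function(X, k = 1) {
  clusters <- as.list(seq_len(nrow(X)))
  while (length(clusters) > k) {
    best <- list(inc = Inf)
    n_cl <- length(clusters)
    for (a in seq_len(n_cl - 1)) {
      for (b in (a + 1):n_cl) {                # every pair of current clusters
        inc <- delta_ess(X, clusters[[a]], clusters[[b]])
        if (inc < best$inc) best <- list(inc = inc, a = a, b = b)
      }
    }
    clusters[[best$a]] <- c(clusters[[best$a]], clusters[[best$b]])  # merge b into a
    clusters[[best$b]] <- NULL
  }
  clusters
}

X <- rbind(c(0, 0), c(0, 1), c(1, 0), c(10, 10), c(10, 11), c(11, 10))
cl <- ward_naive(X, k = 2)
inc <- delta_ess(X, 1:3, 4:6)   # 1.5 * ||(10, 10)||^2 = 300
```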

Comments:

- Ward's method tends to join clusters with a small number of observations, and it is strongly biased toward producing clusters with the same shape and with roughly the same number of observations.

- It is also very sensitive to outliers. See Milligan (1980).

Take the Pomeroy data (7129 x 90) as an example:

```r
library(gplots)  # provides heatmap.2() and bluered()

lr <- read.table("C:/ArrayTools/Sample datasets/Pomeroy/Pomeroy -Project/NORMALIZEDLOGINTENSITY.txt")
lr <- as.matrix(lr)

# Alternatives: "complete", "ward.D2". In R >= 3.1.0 method = "ward" is renamed "ward.D";
# "ward.D2" implements Ward's criterion on unsquared Euclidean distances.
method <- "average"
hclust1 <- function(x) hclust(x, method = method)

heatmap.2(lr, col = bluered(75), hclustfun = hclust1, distfun = dist,
          density.info = "density", scale = "none",
          key = FALSE, symkey = FALSE, trace = "none",
          main = method)
```
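If gplots or the Pomeroy file is not at hand, the same comparison of linkage methods can be sketched with `stats::heatmap()` from base R on simulated data. The matrix size, the planted block and the blue-white-red palette, which approximates `bluered()`, are all assumptions made for illustration.

```r
set.seed(42)
x <- matrix(rnorm(40 * 8), nrow = 40)
x[1:20, 1:4] <- x[1:20, 1:4] + 3     # planted block of "up-regulated" rows
rownames(x) <- paste0("g", 1:40)
colnames(x) <- paste0("s", 1:8)

blue_white_red <- colorRampPalette(c("blue", "white", "red"))(75)

# One heatmap per linkage method; Euclidean distances via dist()
for (method in c("average", "complete", "ward.D2")) {
  hm <- heatmap(x, col = blue_white_red, scale = "none",
                distfun = dist,
                hclustfun = function(d) hclust(d, method = method),
                main = method)
}
```

`heatmap()` invisibly returns the row and column orderings (`rowInd`, `colInd`), which can be compared across methods.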