R: Difference between revisions

| Line 4,700: | Line 4,700: | ||

<syntaxhighlight lang='rsplus'> | <syntaxhighlight lang='rsplus'> | ||

source("https://bioconductor.org/biocLite.R") | source("https://bioconductor.org/biocLite.R") | ||

biocLite("survcomp") | biocLite("survcomp") # this has to be run before the next command of installing a bunch of packages from CRAN | ||

install.packages("https://cran.r-project.org/src/contrib/Archive/biospear/biospear_1.0.1.tar.gz", | |||

repos = NULL, type="source") | |||

# ERROR: dependencies ‘pkgconfig’, ‘cobs’, ‘corpcor’, ‘devtools’, ‘glmnet’, ‘grplasso’, ‘mboost’, ‘plsRcox’, | |||

# ‘pROC’, ‘PRROC’, ‘RCurl’, ‘survAUC’ are not available for package ‘biospear’ | |||

install.packages("https://cran.r-project.org/src/contrib/Archive/biospear/biospear_1.0.1.tar.gz", repos = NULL, type="source") | |||

# ERROR: dependencies ‘pkgconfig’, ‘cobs’, ‘corpcor’, ‘devtools’, ‘glmnet’, ‘grplasso’, ‘mboost’, ‘plsRcox’, ‘pROC’, ‘PRROC’, ‘RCurl’, ‘survAUC’ | |||

install.packages(c("pkgconfig", "cobs", "corpcor", "devtools", "glmnet", "grplasso", "mboost", | install.packages(c("pkgconfig", "cobs", "corpcor", "devtools", "glmnet", "grplasso", "mboost", | ||

"plsRcox", "pROC", "PRROC", "RCurl", "survAUC")) | "plsRcox", "pROC", "PRROC", "RCurl", "survAUC")) | ||

install.packages("https://cran.r-project.org/src/contrib/Archive/biospear/biospear_1.0.1.tar.gz", | install.packages("https://cran.r-project.org/src/contrib/Archive/biospear/biospear_1.0.1.tar.gz", | ||

repos = NULL, type="source") | repos = NULL, type="source") | ||

# OR | |||

# devtools::install_github("cran/biospear") | |||

library(biospear) # verify | library(biospear) # verify | ||

</syntaxhighlight> | </syntaxhighlight> | ||

| Line 4,732: | Line 4,729: | ||

# install.packages("~/Downloads/inSilicoDb", repos = NULL) | # install.packages("~/Downloads/inSilicoDb", repos = NULL) | ||

# install.packages("~/Downloads/inSilicoMerging", repos = NULL) | # install.packages("~/Downloads/inSilicoMerging", repos = NULL) | ||

install.packages("http://www.bioconductor.org/packages/3.3/bioc/src/contrib/inSilicoDb_2.7.0.tar.gz", repos = NULL, type = "source") | install.packages("http://www.bioconductor.org/packages/3.3/bioc/src/contrib/inSilicoDb_2.7.0.tar.gz", | ||

install.packages("http://www.bioconductor.org/packages/3.3/bioc/src/contrib/inSilicoMerging_1.15.0.tar.gz", repos = NULL, type = "source") | repos = NULL, type = "source") | ||

install.packages("http://www.bioconductor.org/packages/3.3/bioc/src/contrib/inSilicoMerging_1.15.0.tar.gz", | |||

repos = NULL, type = "source") | |||

</syntaxhighlight> | </syntaxhighlight> | ||

Revision as of 15:22, 8 June 2018

Install Rtools for Windows users

See http://goo.gl/gYh6C for step-by-step instructions (based on Rtools30.exe) with screenshots. Note that in the 'Select Components' step, the default is 'Package authoring installation'. But we want 'Full installation to build 32 or 64 bit R'; that is, check all available components (including tcl/tk). The "extra" files will be stored in subdirectories of the R source home directory. These files are not needed to build packages, only to build R itself. By default, the 32-bit R source home is C:\R and the 64-bit source home is C:\R64. After the installation, these two directories will contain a new directory 'Tcl'.

My preferred way is not to check the option of setting the PATH environment variable. Instead, I manually add the following to PATH (based on Rtools v3.2.2)

c:\Rtools\bin; c:\Rtools\gcc-4.6.3\bin; C:\Program Files\R\R-3.2.2\bin\i386;

We can make our life easy by creating a file <Rcommand.bat> with the content (also useful if you have C:\cygwin\bin in your PATH although cygwin setup will not do it automatically for you.)

PS. I put <Rcommand.bat> under C:\Program Files\R folder. I create a shortcut called 'Rcmd' on desktop. I enter C:\Windows\System32\cmd.exe /K "Rcommand.bat" in the Target entry and "C:\Program Files\R" in Start in entry.

@echo off
set PATH=C:\Rtools\bin;c:\Rtools\gcc-4.6.3\bin
set PATH=C:\Program Files\R\R-3.2.2\bin\i386;%PATH%
set PKG_LIBS=`Rscript -e "Rcpp:::LdFlags()"`
set PKG_CPPFLAGS=`Rscript -e "Rcpp:::CxxFlags()"`
echo Setting environment for using R cmd

So we can open the Command Prompt anywhere and run <Rcommand.bat> to get all environment variables ready! On Windows Vista, 7 and 8, we need to run it as administrator. Alternatively, we can change the security settings in the file's properties so the current user has execute permission.

Windows Toolset

Note that R on Windows supports Mingw-w64 (not Mingw which is a separate project). See here for the issue of developing a Qt application that links against R using Rcpp. And http://qt-project.org/wiki/MinGW is the wiki for compiling Qt using MinGW and MinGW-w64.

Build R from its source on Windows OS (not cross compile on Linux)

Reference: https://cran.r-project.org/doc/manuals/R-admin.html#Installing-R-under-Windows

First we try to build 32-bit R (tested on R 3.2.2 using Rtools33). At the end I will see how to build a 64-bit R.

Download https://www.stats.ox.ac.uk/pub/Rtools/goodies/multilib/local320.zip (read https://www.stats.ox.ac.uk/pub/Rtools/libs.html). Create an empty directory, say c:/R/extsoft, and unpack the zip file into that directory, e.g.

unzip local320.zip -d c:/R/extsoft

Tcl: two methods

- Download tcl file from http://www.stats.ox.ac.uk/pub/Rtools/R_Tcl_8-5-8.zip. Unzip and put 'Tcl' into R_HOME folder.

- If you have chosen a full installation when running Rtools, then copy C:/R/Tcl or C:/R64/Tcl (not the same) to R_HOME folder.

Open a command prompt as Administrator:

set PATH=c:\Rtools\bin;c:\Rtools\gcc-4.6.3\bin
set PATH=%PATH%;C:\Users\brb\Downloads\R-3.2.2\bin\i386;c:\windows;c:\windows\system32
set TMPDIR=C:/tmp
tar --no-same-owner -xf R-3.2.2.tar.gz
cp -R c:\R64\Tcl c:\Users\brb\Downloads\R-3.2.2
cd R-3.2.2\src\gnuwin32
cp MkRules.dist MkRules.local
# Modify MkRules.local file; specifically uncomment + change the following 2 flags.
# LOCAL_SOFT = c:/R/extsoft
# EXT_LIBS = $(LOCAL_SOFT)
make

If we see an error of texi2dvi() complaining pdflatex is not available, it means a vanilla R is successfully built.

If we want to build the recommended packages (MASS, lattice, Matrix, ...) as well, run the following (see <R_HOME\src\gnuwin32\Makefile> for all make options)

make recommended

If we need to rebuild R for whatever reason, run

make clean

If we want to build R with debug information, run

make DEBUG=T

NB:

1. The above works for building 32-bit R from its source. If we want to build 64-bit R from its source, we need to modify the MkRules.local file to turn on the MULTI flag

MULTI = 64

and reset the PATH variable

set PATH=c:\Rtools\bin;c:\Rtools\gcc-4.6.3\bin
set PATH=%PATH%;C:\Users\brb\Downloads\R-3.2.2\bin\x64;c:\windows;c:\windows\system32

I don't need to touch other flags like BINPREF64, M_ARCH, AS_ARCH, RC_ARCH, DT_ARCH or even WIN. The note http://www.stat.yale.edu/~jay/Admin3.3.pdf is rather old and is not needed.

2. If we have already built 32-bit R and want to go on to build 64-bit R, it is not enough to run 'make clean' before running 'make' again, since the build fails with the error message "incompatible ./libR.dll.a when searching for -lR" while building Rgraphapp.dll. In fact, libR.dll.a can be cleaned up by running 'make distclean', but that will also wipe out the /bin/i386 folder :(
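The MkRules.local edits described above (LOCAL_SOFT, EXT_LIBS, MULTI) can also be scripted with the sed that ships in Rtools' bin folder. The following is only a sketch: the helper name set_mkrule is made up for illustration, and it assumes every setting sits on its own line in the form "# KEY = value" or "KEY = value", which should be checked against the actual MkRules.dist first.

```shell
# set_mkrule FILE KEY VALUE  (hypothetical helper, not part of R or Rtools)
# Replace a possibly commented-out "KEY = ..." line in FILE with "KEY = VALUE".
# Assumption: one setting per line, optionally prefixed by "#".
# VALUE must not contain "|" or "&", which are special in this sed expression.
set_mkrule() (
  file=$1 key=$2 value=$3
  tmp="$file.tmp.$$"
  sed -E "s|^#?[[:space:]]*${key}[[:space:]]*=.*\$|${key} = ${value}|" "$file" > "$tmp" &&
    mv "$tmp" "$file"
)

# Usage (run inside src/gnuwin32 after "cp MkRules.dist MkRules.local"):
#   set_mkrule MkRules.local LOCAL_SOFT 'c:/R/extsoft'
#   set_mkrule MkRules.local EXT_LIBS '$(LOCAL_SOFT)'
#   set_mkrule MkRules.local MULTI 64
```

Lines that do not match the given key are left untouched, so the helper can be called once per flag.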

See also Create_a_standalone_Rmath_library below about how to create and use a standalone Rmath library in your own C/C++/Fortran program. For example, if you want to know the 95th percentile of a t distribution or generate a bunch of random variables, you don't need to search the internet for a library; you can just use the Rmath library.

Build R from its source on Linux (cross compile)

Compile and install an R package

Command line

cd C:\Documents and Settings\brb
wget http://www.bioconductor.org/packages/2.11/bioc/src/contrib/affxparser_1.30.2.tar.gz
C:\progra~1\r\r-2.15.2\bin\R CMD INSTALL --build affxparser_1.30.2.tar.gz

N.B. the --build flag is used to create a binary package (i.e. affxparser_1.30.2.zip). In the above example, the command will both install the package and create a binary version of it. If we don't want the binary package, we can omit the flag.

R console

install.packages("C:/Users/USERNAME/Downloads/DESeq2paper_1.3.tar.gz", repos=NULL, type="source")

See Chapter 6 of R Installation and Administration

Check/Upload to CRAN

http://win-builder.r-project.org/

64 bit toolchain

See January 2010 email https://stat.ethz.ch/pipermail/r-devel/2010-January/056301.html and R-Admin manual.

From R 2.11.0 there is 64 bit Windows binary for R.

Install R using binary package on Linux OS

Ubuntu/Debian

https://cran.rstudio.com/bin/linux/ubuntu/. For more info about GPG stuff, see GPG Authentication_key.

sudo apt-key adv --keyserver keyserver.ubuntu.com --recv-keys E084DAB9
# Some people have reported difficulties using this approach. The issue is usually related to a firewall blocking port 11371
# So alternatively (no sudo is needed in front of the gpg command)
# gpg --keyserver keyserver.ubuntu.com --recv-key E084DAB9
# gpg -a --export E084DAB9 | sudo apt-key add -
sudo nano /etc/apt/sources.list
# For Ubuntu 14.04 (codename is trusty; https://wiki.ubuntu.com/Releases)
# deb https://cran.rstudio.com/bin/linux/ubuntu trusty/
# deb-src https://cran.rstudio.com/bin/linux/ubuntu trusty/
sudo apt-get update
sudo apt-get install r-base

Manually create the public key file if the gpg command failed.
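Instead of typing the deb and deb-src lines into the editor by hand, they can be generated from the release codename. This is a minimal sketch; the function name cran_apt_lines and the cran.list file name in the usage comment are my own choices, and the default mirror is simply the one used above.

```shell
# cran_apt_lines CODENAME [MIRROR]  (hypothetical helper)
# Print the deb and deb-src lines for a CRAN Ubuntu repository.
cran_apt_lines() {
  codename=$1
  mirror=${2:-https://cran.rstudio.com}
  printf 'deb %s/bin/linux/ubuntu %s/\n' "$mirror" "$codename"
  printf 'deb-src %s/bin/linux/ubuntu %s/\n' "$mirror" "$codename"
}

# Usage (not run here; lsb_release -cs prints the codename, e.g. trusty):
#   cran_apt_lines "$(lsb_release -cs)" | sudo tee /etc/apt/sources.list.d/cran.list
#   sudo apt-get update
```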

Ubuntu/Debian goodies

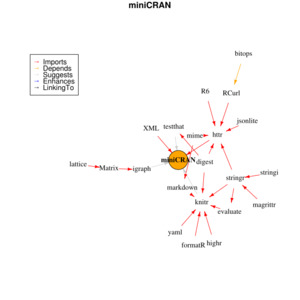

Since the R packages XML, RCurl and httr are frequently used by other packages (e.g. miniCRAN), it is useful to run the following so that install.packages(c("RCurl", "XML", "httr")) can work without hiccups.

sudo apt-get update
sudo apt-get install libxml2-dev
sudo apt-get install curl libcurl4-openssl-dev
sudo apt-get install libssl-dev

See also Simple bash script for a fresh install of R and its dependencies in Linux.

To find out the exact package names (in case the version number in the name changes, although that is unlikely for xml and curl), consider the following approach

# Search 'curl' but keep only matches containing both 'lib' and 'dev'
> apt-cache search curl | awk '/lib/ && /dev/'
libcurl4-gnutls-dev - development files and documentation for libcurl (GnuTLS flavour)
libcurl4-nss-dev - development files and documentation for libcurl (NSS flavour)
libcurl4-openssl-dev - development files and documentation for libcurl (OpenSSL flavour)
libcurl-ocaml-dev - OCaml libcurl bindings (Development package)
libcurlpp-dev - c++ wrapper for libcurl (development files)
libflickcurl-dev - C library for accessing the Flickr API - development files
libghc-curl-dev - GHC libraries for the libcurl Haskell bindings
libghc-hxt-curl-dev - LibCurl interface for HXT
libghc-hxt-http-dev - Interface to native Haskell HTTP package HTTP
libresource-retriever-dev - Robot OS resource_retriever library - development files
libstd-rust-dev - Rust standard libraries - development files
lua-curl-dev - libcURL development files for the Lua language
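To see what the awk condition does without touching apt at all, the same filter can be fed a few sample lines. The sketch below wraps it in a function (the name filter_lib_dev is made up); the sample descriptions are shortened placeholders, not real apt-cache output.

```shell
# filter_lib_dev: keep only the input lines that contain both "lib" and "dev".
# This is the same awk condition as in the apt-cache pipeline.
filter_lib_dev() {
  awk '/lib/ && /dev/'
}

# Sample input (placeholder descriptions); only the second line passes the filter.
printf '%s\n' \
  'curl - sample command line tool' \
  'libcurl4-openssl-dev - sample headers' \
  'libcurl3 - sample runtime' |
  filter_lib_dev
```

Note that the match is made on the whole line, so a package whose description (rather than its name) contains 'lib' or 'dev' is kept as well.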

If we need to install 'rgl' and related packages,

sudo apt install libcgal-dev libglu1-mesa-dev
sudo apt install libfreetype6-dev

Windows Subsystem for Linux

http://blog.revolutionanalytics.com/2017/12/r-in-the-windows-subsystem-for-linux.html

Redhat el6

It should be pretty easy to install via the EPEL: http://fedoraproject.org/wiki/EPEL

Just follow the instructions to enable the EPEL, or use the command line

sudo rpm -ivh https://dl.fedoraproject.org/pub/epel/epel-release-latest-7.noarch.rpm
sudo yum update # not sure if this is necessary

and then from the CLI:

sudo yum install R

Install R from source (ix86, x86_64 and arm platforms, Linux system)

Debian system (focus on arm architecture with notes from x86 system)

Simplest configuration

<Method 1 of installing requirements>

On my debian system in Pogoplug (armv5), Raspberry Pi (armv6) OR Beaglebone Black & Udoo(armv7), I can compile R. See R's admin manual. If the OS needs x11, I just need to install 2 required packages.

- install gfortran: apt-get install build-essential gfortran (gfortran is not part of build-essential)

- install readline library: apt-get install libreadline5-dev (pogoplug), apt-get install libreadline6-dev (raspberry pi/BBB), apt-get install libreadline-dev (Ubuntu)

Note: if I need X11, I should install

- libX11 and libX11-devel, libXt, libXt-devel (for fedora)

- libx11-dev (for debian) or xorg-dev (for pogoplug/raspberry pi/BBB/Odroid debian). See here for the difference of x11 and Xorg.

and optional

- texinfo (to fix 'WARNING: you cannot build info or HTML versions of the R manuals')

<Method 2 of installing requirements (recommended)>

Note that it is also safe to install the required tools with apt-get build-dep. First run sudo nano /etc/apt/sources.list to include the source repository of your favorite R mirror (such as deb-src https://cran.rstudio.com/bin/linux/ubuntu xenial/), then run sudo apt-get update, and finally

sudo apt-get build-dep r-base

The above command will install R's build dependencies such as jdk, tcl, tex, the X11 libraries, etc. For some reason, apt-get build-dep gave a more complete list than apt-get install r-base-dev.
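Since apt-get build-dep only works when a source repository is enabled, it can help to check for an active deb-src line before running it. A minimal sketch (the function name has_deb_src is hypothetical) that only looks at one file:

```shell
# has_deb_src FILE  (hypothetical helper)
# Succeeds if FILE contains at least one deb-src line that is not commented out.
has_deb_src() {
  grep -Eq '^[[:space:]]*deb-src[[:space:]]' "$1"
}

# Usage (not run here):
#   has_deb_src /etc/apt/sources.list || echo "enable a deb-src line first"
```

Files under /etc/apt/sources.list.d/ are not inspected by this sketch; they would need the same check.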

[Arm architecture] I also run apt-get install readline-common. I don't know if this is necessary. If x11 is not needed or not available (eg Pogoplug), I can add --with-x=no option in ./configure command. If R will be called from other applications such as Rserve, I can add --enable-R-shlib option in ./configure command. Check out ./configure --help to get a complete list of all options.
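The two choices mentioned above (X11 or not, shared library or not) map directly to ./configure options. The sketch below just assembles the option string; the function name r_configure_flags and its yes/no arguments are invented for illustration, while the two options themselves are the ones named in the text.

```shell
# r_configure_flags WANT_X11 WANT_SHLIB  (hypothetical helper; arguments are yes/no)
# Print the ./configure options discussed above:
#   --with-x=no       when X11 is not needed or not available
#   --enable-R-shlib  when R will be called from other applications (e.g. Rserve)
r_configure_flags() (
  want_x11=$1 want_shlib=$2
  flags=
  [ "$want_x11" = yes ] || flags="$flags --with-x=no"
  [ "$want_shlib" = yes ] && flags="$flags --enable-R-shlib"
  printf '%s\n' "${flags# }"
)

# Usage (not run here), e.g. on a headless Pogoplug that should also serve Rserve:
#   ./configure $(r_configure_flags no yes)
```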

After running

wget https://cran.rstudio.com/src/base/R-3/R-3.2.3.tar.gz
tar xzvf R-3.2.3.tar.gz
cd R-3.2.3
./configure --enable-R-shlib

(--enable-R-shlib option will create a shared R library libR.so in $RHOME/lib subdirectory. This allows R to be embedded in other applications. See Embedding R.) I got

R is now configured for armv5tel-unknown-linux-gnueabi
Source directory: .
Installation directory: /usr/local
C compiler: gcc -std=gnu99 -g -O2
Fortran 77 compiler: gfortran -g -O2
C++ compiler: g++ -g -O2
Fortran 90/95 compiler: gfortran -g -O2
Obj-C compiler:
Interfaces supported:
External libraries: readline
Additional capabilities: NLS
Options enabled: shared R library, shared BLAS, R profiling
Recommended packages: yes
configure: WARNING: you cannot build info or HTML versions of the R manuals
configure: WARNING: you cannot build PDF versions of the R manuals
configure: WARNING: you cannot build PDF versions of vignettes and help pages
configure: WARNING: I could not determine a browser
configure: WARNING: I could not determine a PDF viewer

After that, we can run make to create R binary. If the computer has multiple cores, we can run make in parallel by using the -j flag (for example, '-j4' means to run 4 jobs simultaneously). We can also add time command in front of make to report the make time (useful for benchmark).

make
# make -j4
# time make
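Rather than hard-coding the number after -j, the job count can be taken from the number of online processors. This is a sketch with a made-up function name (make_jobs); it tries nproc first, then getconf, and falls back to 1 if neither is available.

```shell
# make_jobs  (hypothetical helper)
# Print the number of online processors, or 1 if it cannot be determined.
make_jobs() {
  n=$(nproc 2>/dev/null) || n=$(getconf _NPROCESSORS_ONLN 2>/dev/null) || n=1
  case $n in
    ''|*[!0-9]*) n=1 ;;   # anything that is not a plain number
  esac
  printf '%s\n' "$n"
}

# Usage (not run here):
#   time make -j"$(make_jobs)"
```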

PS 1. On my raspberry pi machine, it shows R is now configured for armv6l-unknown-linux-gnueabihf and on Beaglebone black it shows R is now configured for armv7l-unknown-linux-gnueabihf.

PS 2. On my Beaglebone black, it took 2 hours to run 'make', Raspberry Pi 2 took 1 hour, Odroid XU4 took 23 minutes and it only took 5 minutes to run 'make -j 12' on my Xeon W3690 @ 3.47Ghz (6 cores with hyperthread) based on R 3.1.2. The timing is obtained by using 'time' command as described above.

PS 3. On my x86 system, it shows

R is now configured for x86_64-unknown-linux-gnu
Source directory: .
Installation directory: /usr/local
C compiler: gcc -std=gnu99 -g -O2
Fortran 77 compiler: gfortran -g -O2
C++ compiler: g++ -g -O2
Fortran 90/95 compiler: gfortran -g -O2
Obj-C compiler:
Interfaces supported: X11, tcltk
External libraries: readline, lzma
Additional capabilities: PNG, JPEG, TIFF, NLS, cairo
Options enabled: shared R library, shared BLAS, R profiling, Java
Recommended packages: yes

[arm] However, make gave errors for recommended packages like KernSmooth, MASS, boot, class, cluster, codetools, foreign, lattice, mgcv, nlme, nnet, rpart, spatial, and survival. The error stems from

gcc: SHLIB_LIBADD: No such file or directory

Note that I get this error message even when I try install.packages("MASS", type="source"). A suggested fix is here: add perl = TRUE to the sub() call on two lines of the src/library/tools/R/install.R file. However, I then got another error, shared object 'MASS.so' not found. See also http://ftp.debian.org/debian/pool/main/r/r-base/. To build R without recommended packages, run ./configure --without-recommended-packages.

make[1]: Entering directory `/mnt/usb/R-2.15.2/src/library/Recommended'

make[2]: Entering directory `/mnt/usb/R-2.15.2/src/library/Recommended'

begin installing recommended package MASS

* installing *source* package 'MASS' ...

** libs

make[3]: Entering directory `/tmp/Rtmp4caBfg/R.INSTALL1d1244924c77/MASS/src'

gcc -std=gnu99 -I/mnt/usb/R-2.15.2/include -DNDEBUG -I/usr/local/include -fpic -g -O2 -c MASS.c -o MASS.o

gcc -std=gnu99 -I/mnt/usb/R-2.15.2/include -DNDEBUG -I/usr/local/include -fpic -g -O2 -c lqs.c -o lqs.o

gcc -std=gnu99 -shared -L/usr/local/lib -o MASSSHLIB_EXT MASS.o lqs.o SHLIB_LIBADD -L/mnt/usb/R-2.15.2/lib -lR

gcc: SHLIB_LIBADD: No such file or directory

make[3]: *** [MASSSHLIB_EXT] Error 1

make[3]: Leaving directory `/tmp/Rtmp4caBfg/R.INSTALL1d1244924c77/MASS/src'

ERROR: compilation failed for package 'MASS'

* removing '/mnt/usb/R-2.15.2/library/MASS'

make[2]: *** [MASS.ts] Error 1

make[2]: Leaving directory `/mnt/usb/R-2.15.2/src/library/Recommended'

make[1]: *** [recommended-packages] Error 2

make[1]: Leaving directory `/mnt/usb/R-2.15.2/src/library/Recommended'

make: *** [stamp-recommended] Error 2

root@debian:/mnt/usb/R-2.15.2#

root@debian:/mnt/usb/R-2.15.2# bin/R

R version 2.15.2 (2012-10-26) -- "Trick or Treat"

Copyright (C) 2012 The R Foundation for Statistical Computing

ISBN 3-900051-07-0

Platform: armv5tel-unknown-linux-gnueabi (32-bit)

R is free software and comes with ABSOLUTELY NO WARRANTY.

You are welcome to redistribute it under certain conditions.

Type 'license()' or 'licence()' for distribution details.

R is a collaborative project with many contributors.

Type 'contributors()' for more information and

'citation()' on how to cite R or R packages in publications.

Type 'demo()' for some demos, 'help()' for on-line help, or

'help.start()' for an HTML browser interface to help.

Type 'q()' to quit R.

> library(MASS)

Error in library(MASS) : there is no package called 'MASS'

> library()

Packages in library '/mnt/usb/R-2.15.2/library':

base The R Base Package

compiler The R Compiler Package

datasets The R Datasets Package

grDevices The R Graphics Devices and Support for Colours

and Fonts

graphics The R Graphics Package

grid The Grid Graphics Package

methods Formal Methods and Classes

parallel Support for Parallel computation in R

splines Regression Spline Functions and Classes

stats The R Stats Package

stats4 Statistical Functions using S4 Classes

tcltk Tcl/Tk Interface

tools Tools for Package Development

utils The R Utils Package

> Sys.info()["machine"]

machine

"armv5tel"

> gc()

used (Mb) gc trigger (Mb) max used (Mb)

Ncells 170369 4.6 350000 9.4 350000 9.4

Vcells 163228 1.3 905753 7.0 784148 6.0

See http://bugs.debian.org/cgi-bin/bugreport.cgi?bug=679180

PS 4. The complete log of building R from source is in here File:Build R log.txt

Full configuration

Interfaces supported: X11, tcltk
External libraries: readline
Additional capabilities: PNG, JPEG, TIFF, NLS, cairo
Options enabled: shared R library, shared BLAS, R profiling, Java

Update: R 3.0.1 on Beaglebone Black (armv7a) + Ubuntu 13.04

See the page here.

Update: R 3.1.3 & R 3.2.0 on Raspberry Pi 2

It took 134m to run 'make -j 4' on RPi 2 using R 3.1.3.

But I got an error when I ran './configure; make -j 4' using R 3.2.0. The errors start from compiling <main/connections.c> file with 'undefined reference to ....'. The gcc version is 4.6.3.

Install all dependencies for building R

This is a comprehensive list. This list is even larger than r-base-dev.

root@debian:/mnt/usb/R-2.15.2# apt-get build-dep r-base
Reading package lists... Done
Building dependency tree
Reading state information... Done
The following packages will be REMOVED:
libreadline5-dev
The following NEW packages will be installed:
bison ca-certificates ca-certificates-java debhelper defoma ed file fontconfig gettext gettext-base html2text intltool-debian java-common libaccess-bridge-java libaccess-bridge-java-jni libasound2 libasyncns0 libatk1.0-0 libaudit0 libavahi-client3 libavahi-common-data libavahi-common3 libblas-dev libblas3gf libbz2-dev libcairo2 libcairo2-dev libcroco3 libcups2 libdatrie1 libdbus-1-3 libexpat1-dev libflac8 libfontconfig1-dev libfontenc1 libfreetype6-dev libgif4 libglib2.0-dev libgtk2.0-0 libgtk2.0-common libice-dev libjpeg62-dev libkpathsea5 liblapack-dev liblapack3gf libnewt0.52 libnspr4-0d libnss3-1d libogg0 libopenjpeg2 libpango1.0-0 libpango1.0-common libpango1.0-dev libpcre3-dev libpcrecpp0 libpixman-1-0 libpixman-1-dev libpng12-dev libpoppler5 libpulse0 libreadline-dev libreadline6-dev libsm-dev libsndfile1 libthai-data libthai0 libtiff4-dev libtiffxx0c2 libunistring0 libvorbis0a libvorbisenc2 libxaw7 libxcb-render-util0 libxcb-render-util0-dev libxcb-render0 libxcb-render0-dev libxcomposite1 libxcursor1 libxdamage1 libxext-dev libxfixes3 libxfont1 libxft-dev libxi6 libxinerama1 libxkbfile1 libxmu6 libxmuu1 libxpm4 libxrandr2 libxrender-dev libxss-dev libxt-dev libxtst6 luatex m4 openjdk-6-jdk openjdk-6-jre openjdk-6-jre-headless openjdk-6-jre-lib openssl pkg-config po-debconf preview-latex-style shared-mime-info tcl8.5-dev tex-common texi2html texinfo texlive-base texlive-binaries texlive-common texlive-doc-base texlive-extra-utils texlive-fonts-recommended texlive-generic-recommended texlive-latex-base texlive-latex-extra texlive-latex-recommended texlive-pictures tk8.5-dev tzdata-java whiptail x11-xkb-utils x11proto-render-dev x11proto-scrnsaver-dev x11proto-xext-dev xauth xdg-utils xfonts-base xfonts-encodings xfonts-utils xkb-data xserver-common xvfb zlib1g-dev
0 upgraded, 136 newly installed, 1 to remove and 0 not upgraded.
Need to get 139 MB of archives.
After this operation, 410 MB of additional disk space will be used.
Do you want to continue [Y/n]?

Instruction of installing a development version of R under Ubuntu

https://github.com/wch/r-source/wiki (works on Ubuntu 12.04)

Note that texi2dvi has to be installed first to avoid the following error. It is better to follow the Ubuntu instruction (https://github.com/wch/r-source/wiki/Ubuntu-build-instructions) when we work on Ubuntu OS.

$ (cd doc/manual && make front-matter html-non-svn)
creating RESOURCES
/bin/bash: number-sections: command not found
make: [../../doc/RESOURCES] Error 127 (ignored)

To build R, run the following script. To run the built R, type 'bin/R'.

# Get recommended packages if necessary

tools/rsync-recommended

R_PAPERSIZE=letter \

R_BATCHSAVE="--no-save --no-restore" \

R_BROWSER=xdg-open \

PAGER=/usr/bin/pager \

PERL=/usr/bin/perl \

R_UNZIPCMD=/usr/bin/unzip \

R_ZIPCMD=/usr/bin/zip \

R_PRINTCMD=/usr/bin/lpr \

LIBnn=lib \

AWK=/usr/bin/awk \

CC="ccache gcc" \

CFLAGS="-ggdb -pipe -std=gnu99 -Wall -pedantic" \

CXX="ccache g++" \

CXXFLAGS="-ggdb -pipe -Wall -pedantic" \

FC="ccache gfortran" \

F77="ccache gfortran" \

MAKE="make" \

./configure \

--prefix=/usr/local/lib/R-devel \

--enable-R-shlib \

--with-blas \

--with-lapack \

--with-readline

#CC="clang -O3" \

#CXX="clang++ -O3" \

# Workaround for explicit SVN check introduced by

# https://github.com/wch/r-source/commit/4f13e5325dfbcb9fc8f55fc6027af9ae9c7750a3

# Need to build FAQ

(cd doc/manual && make front-matter html-non-svn)

rm -f non-tarball

# Get current SVN revision from git log and save in SVN-REVISION

echo -n 'Revision: ' > SVN-REVISION

git log --format=%B -n 1 \

| grep "^git-svn-id" \

| sed -E 's/^git-svn-id: https:\/\/svn.r-project.org\/R\/.*?@([0-9]+).*$/\1/' \

>> SVN-REVISION

echo -n 'Last Changed Date: ' >> SVN-REVISION

git log -1 --pretty=format:"%ad" --date=iso | cut -d' ' -f1 >> SVN-REVISION

# End workaround

# Set this to the number of cores on your computer

make --jobs=4

If we DO NOT use the --depth option in the git clone command, we can use git checkout SHA1 (40 characters) to get a certain version of the code.

git checkout f1d91a0b34dbaa6ac807f3852742e3d646fbe95e # plot(<dendrogram>): Bug 15215 fixed 5/2/2015
git checkout trunk # switch back to trunk

The svn revision number for a certain git revision can be found in the blue box on the github website (git-svn-id). For example, this revision has svn revision number 68302 even though the current trunk is at 68319.
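The same number can be pulled out of a commit message on the command line, since the message carries a git-svn-id line. The sketch below uses a simplified sed pattern (not the exact one from the build script) and a made-up function name; the sample line is illustrative, and its repository UUID is a placeholder.

```shell
# svn_revision  (hypothetical helper)
# Read a commit message on stdin and print the number that follows "@"
# on the git-svn-id line. Prints nothing if there is no such line.
svn_revision() {
  sed -n -E 's/^git-svn-id: .*@([0-9]+) .*$/\1/p'
}

# Sample git-svn-id line (the UUID at the end is a made-up placeholder).
echo 'git-svn-id: https://svn.r-project.org/R/trunk@68302 00000000-0000-0000-0000-000000000000' |
  svn_revision
# prints 68302

# Usage on a real checkout (not run here):
#   git log --format=%B -n 1 | svn_revision
```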

Now suppose we have run 'git checkout trunk' and built a devel version of R successfully. If we want to build R for a certain svn or git revision, we run 'git checkout SHA1', 'make distclean', the code that generates the SVN-REVISION file (it will update this number) and finally './configure' & 'make'.

time (./configure --with-recommended-packages=no && make --jobs=5)

The timing is 4m36s if I skip recommended packages and 7m37s if I don't skip. This is based on Xeon W3690 @ 3.47GHz.

The full bash script is available on Github Gist.

Install multiple versions of R on Ubuntu

- Installing multiple versions of R on Linux especially on RStudio Server, Mar 13, 2018.

- Some common locations are /usr/lib/R/bin, /usr/local/bin (create softlinks for the binaries here), /usr/bin.

- When building R from source, specify a prefix. In the following example, the RStudio IDE can detect R.

$ ./configure --prefix=/opt/R/3.5.0 --enable-R-shlib

$ make

$ sudo make install

$ which R

$ tree -L 3 /opt/R/3.5.0/

/opt/R/3.5.0/
├── bin
│   ├── R
│   └── Rscript
├── lib
│   ├── pkgconfig
│   │   └── libR.pc
│   └── R
│       ├── bin
│       ├── COPYING
│       ├── doc
│       ├── etc
│       ├── include
│       ├── lib
│       ├── library
│       ├── modules
│       ├── share
│       └── SVN-REVISION
└── share
    └── man
        └── man1

- http://r.789695.n4.nabble.com/Installing-different-versions-of-R-simultaneously-on-Linux-td879536.html

- Instruction_of_installing_a_development_version_of_R_under_Ubuntu. You can launch the devel version of R using 'RD' command.

- Use 'export PATH'

- http://stackoverflow.com/questions/24019503/installing-multiple-versions-of-r

- http://stackoverflow.com/questions/8343686/how-to-install-2-different-r-versions-on-debian

To install the devel version of R alongside the current version of R, see this post. For example, you need a script that will build r-devel but install it in a location different from the stable version of R (e.g. use --prefix=/usr/local/R-X.Y.Z in the configure command). Note that the executable is installed in "/usr/local/lib/R-devel/bin", but that can be changed to another location such as "/usr/local/bin".
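When every version is installed under its own prefix, as in the --prefix=/opt/R/3.5.0 example above, the installed versions can be listed by looking for bin/R under a common root. This is only a sketch: the function name list_r_versions is made up, and it assumes the ROOT/VERSION/bin/R layout.

```shell
# list_r_versions [ROOT]  (hypothetical helper; ROOT defaults to /opt/R)
# Print one version per line for every ROOT/VERSION/bin/R that is executable.
list_r_versions() (
  root=${1:-/opt/R}
  for r in "$root"/*/bin/R; do
    [ -x "$r" ] || continue     # also skips the unexpanded pattern when nothing matches
    v=${r%/bin/R}
    printf '%s\n' "${v##*/}"
  done
)

# Usage (not run here): pick one version for the current shell session.
#   list_r_versions
#   export PATH="/opt/R/3.5.0/bin:$PATH"
```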

Another fancy way is to use docker.

Minimal installation of R from source

Assume we have installed g++ (or build-essential) and gfortran (Ubuntu has only gcc pre-installed, but not g++),

sudo apt-get install build-essential gfortran

we can go ahead to build a minimal R.

wget http://cran.rstudio.com/src/base/R-3/R-3.1.1.tar.gz
tar -xzvf R-3.1.1.tar.gz; cd R-3.1.1
./configure --with-x=no --with-recommended-packages=no --with-readline=no

See ./configure --help. This still builds the essential packages like base, compiler, datasets, graphics, grDevices, grid, methods, parallel, splines, stats, stats4, tcltk, tools, and utils.

Note that at the end of 'make', it shows an error of 'cannot find any java interpreter. Please make sure java is on your PATH or set JAVA_HOME correspondingly'. Even with the error message, we can use R by typing bin/R.

To check whether we have Java installed, type 'java -version'.

$ java -version
java version "1.6.0_32"
OpenJDK Runtime Environment (IcedTea6 1.13.4) (6b32-1.13.4-4ubuntu0.12.04.2)
OpenJDK 64-Bit Server VM (build 23.25-b01, mixed mode)

Recommended packages

R can be installed without recommended packages. Keep it in mind. Some people have assumed that a `recommended' package can safely be used unconditionally, but this is not so.

Run R commands on bash terminal

http://pacha.hk/2017-10-20_r_on_ubuntu_17_10.html

# Install R

sudo apt-get update

sudo apt-get install gdebi libxml2-dev libssl-dev libcurl4-openssl-dev r-base r-base-dev

# install common packages

R --vanilla << EOF

install.packages(c("tidyverse","data.table","dtplyr","devtools","roxygen2"), repos = "https://cran.rstudio.com/")

q()

EOF

# Export to HTML/Excel

R --vanilla << EOF

install.packages(c("htmlTable","openxlsx"), repos = "https://cran.rstudio.com/")

q()

EOF

# Blog tools

R --vanilla << EOF

install.packages(c("knitr","rmarkdown"), repos='http://cran.us.r-project.org')

q()

EOF
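The three here-documents above differ only in the package list and the repository, so the R expression can be generated instead of repeated. A minimal sketch, assuming a made-up helper name (r_install_expr); it only prints the install.packages() call and leaves running R to the caller.

```shell
# r_install_expr REPOS PKG...  (hypothetical helper)
# Print an install.packages() call for the given packages and repository.
r_install_expr() (
  repos=$1; shift
  pkgs=
  for p in "$@"; do
    pkgs="$pkgs${pkgs:+, }\"$p\""   # builds "a", "b", "c"
  done
  printf 'install.packages(c(%s), repos = "%s")\n' "$pkgs" "$repos"
)

# Usage (not run here): feed the generated expression to R on stdin.
#   r_install_expr https://cran.rstudio.com/ knitr rmarkdown | R --vanilla
```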

R CMD

- R CMD build someDirectory - create a package

- R CMD check somePackage_1.2-3.tar.gz - check a package

- R CMD INSTALL somePackage_1.2-3.tar.gz - install a package from its source
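R CMD build names its output Package_Version.tar.gz, using the Package and Version fields of the DESCRIPTION file, which is where a name like somePackage_1.2-3.tar.gz comes from. The sketch below predicts that name so a script can chain build, check and INSTALL; the function name pkg_tarball_name is made up, and it assumes each field sits on a single line.

```shell
# pkg_tarball_name DIR  (hypothetical helper)
# Print the tarball name R CMD build would produce for the package in DIR,
# based on the Package and Version fields of DIR/DESCRIPTION.
pkg_tarball_name() (
  desc=$1/DESCRIPTION
  pkg=$(sed -n 's/^Package:[[:space:]]*//p' "$desc" | head -n 1)
  ver=$(sed -n 's/^Version:[[:space:]]*//p' "$desc" | head -n 1)
  [ -n "$pkg" ] && [ -n "$ver" ] || exit 1   # exit leaves only this subshell
  printf '%s_%s.tar.gz\n' "$pkg" "$ver"
)

# Usage (not run here):
#   R CMD build someDirectory
#   R CMD check "$(pkg_tarball_name someDirectory)"
#   R CMD INSTALL "$(pkg_tarball_name someDirectory)"
```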

bin/R (shell script) and bin/exec/R (binary executable) on Linux OS

bin/R is just a shell script to launch bin/exec/R program. So if we try to run the following program

# test.R

cat("-- reading arguments\n", sep = "");

cmd_args = commandArgs();

for (arg in cmd_args) cat(" ", arg, "\n", sep="");

from command line like

$ R --slave --no-save --no-restore --no-environ --silent --args arg1=abc < test.R # OR using Rscript
-- reading arguments
 /home/brb/R-3.0.1/bin/exec/R
 --slave
 --no-save
 --no-restore
 --no-environ
 --silent
 --args
 arg1=abc

we can see that R actually calls the bin/exec/R program.
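A quick way to confirm which of the two files is the wrapper is to look at the first two bytes: a shell script starts with "#!", a compiled executable does not. This is a small sketch with a made-up function name; head -c is assumed to be available, as it is with GNU coreutils.

```shell
# is_shell_script FILE  (hypothetical helper)
# Succeeds if FILE starts with the two characters "#!".
is_shell_script() {
  [ "$(head -c 2 "$1" 2>/dev/null)" = '#!' ]
}

# Usage (not run here; R RHOME prints the R home directory):
#   is_shell_script "$(R RHOME)/bin/R"      && echo "bin/R is a script"
#   is_shell_script "$(R RHOME)/bin/exec/R" || echo "bin/exec/R is a binary"
```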

CentOS 6.x

Install build-essential (make, gcc, gdb, ...).

su
yum groupinstall "Development Tools"
yum install kernel-devel kernel-headers

Install readline and X11 (probably not necessary if we use ./configure --with-x=no)

yum install readline-devel
yum install libX11 libX11-devel libXt libXt-devel

Install libpng (already there) and libpng-devel library. This is for web application purpose because png (and possibly svg) is a standard and preferred graphics format. If we want to output different graphics formats, we have to follow the guide in R-admin manual to install extra graphics libraries in Linux.

yum install libpng-devel
rpm -qa | grep "libpng" # make sure both libpng and libpng-devel exist.

Install Java. One possibility is to download from Oracle. We want to download jdk-7u45-linux-x64.rpm and jre-7u45-linux-x64.rpm (assume 64-bit OS).

rpm -Uvh jdk-7u45-linux-x64.rpm
rpm -Uvh jre-7u45-linux-x64.rpm
# Check
java -version

Now we are ready to build R by using "./configure" and then "make" commands.

We can make R accessible from any directory either by running the "make install" command or by creating an R_HOME environment variable and adding it to the PATH environment variable, such as

export R_HOME="path to R"
export PATH=$PATH:$R_HOME/bin
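If these export lines live in a startup file that is sourced more than once, PATH picks up the same entry repeatedly. A small guard avoids that; the function name path_append is made up, and the directory in the usage comment is just the $R_HOME/bin from above.

```shell
# path_append DIR  (hypothetical helper)
# Append DIR to PATH unless it is already listed.
path_append() {
  case ":$PATH:" in
    *":$1:"*) ;;              # already present, nothing to do
    *) PATH="$PATH:$1" ;;
  esac
}

# Usage (not run here):
#   export R_HOME="path to R"
#   path_append "$R_HOME/bin"
#   export PATH
```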

Install R on Mac

A binary version of R is available on Mac OS X.

Note that personal R packages will be installed to the ~/Library/R directory. More specifically, packages for R 3.3.x will be installed in ~/Library/R/3.3/library.

For R 3.4.x, the R packages go to /Library/Frameworks/R.framework/Versions/3.4/Resources/library. The advantages of using this folder are that (1) the folder is writable by anyone and (2) even the built-in packages can be upgraded by users.
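The personal library path follows a simple rule: drop the patch level from the R version and insert the result under ~/Library/R. A sketch of that rule, with a made-up function name and the assumption that the version is given as x.y.z:

```shell
# r_user_lib_mac VERSION  (hypothetical helper; VERSION is assumed to be x.y.z)
# Print the personal library directory described above,
# e.g. 3.3.2 gives $HOME/Library/R/3.3/library.
r_user_lib_mac() (
  ver=$1
  minor=${ver%.*}             # strip the patch level: 3.3.2 -> 3.3
  printf '%s/Library/R/%s/library\n' "$HOME" "$minor"
)

# Usage (not run here):
#   ls "$(r_user_lib_mac 3.3.2)"
```

Within R itself, .libPaths() shows the library directories actually in use, which is the authoritative answer.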

gfortran

macOS does not include gfortran. So we cannot compile packages like quantreg, which is required by the car package. Another example is the robustbase package.

See Development Tools and Libraries for R on Mac OS X.

For now, I am using gfortran 6.1 downloaded from https://gcc.gnu.org/wiki/GFortranBinaries#MacOS on my OS X El Capitan (10.11).

Upgrade R

Online Editor

We can run R in a web browser without installing it on the local machine (similar to Ideone.com for C++). It does not require an account either (cf. RStudio).

rstudio.cloud

RDocumentation

The interactive engine is based on DataCamp Light

For example, tbl_df function from dplyr package.

The DataCamp website allows us to run library() in the Script window. After that, we can use the packages in the R Console.

Here is a list of (common) R packages that users can use on the web.

The packages on RDocumentation may be outdated. For example, the current stringr on CRAN is v1.2.0 (2/18/2017) but RDocumentation has v1.1.0 (8/19/2016).

Web Applications

See also CRAN Task View: Web Technologies and Services

TexLive

TexLive can be installed in two ways:

- Ubuntu repository; it does not include the tlmgr package manager utility.

- Official website

texlive-latex-extra

https://packages.debian.org/sid/texlive-latex-extra

For example, framed and titling packages are included.

tlmgr - TeX Live package manager

https://www.tug.org/texlive/tlmgr.html

TinyTex

https://github.com/yihui/tinytex

pkgdown: create a website for your package

Building a website with pkgdown: a short guide

Create HTML5 web and slides using knitr, rmarkdown and pandoc

- http://rmarkdown.rstudio.com/html_document_format.html

- https://www.rstudio.com/wp-content/uploads/2015/02/rmarkdown-cheatsheet.pdf

- https://www.rstudio.com/wp-content/uploads/2015/03/rmarkdown-reference.pdf

HTML5 slides examples

- http://yihui.name/slides/knitr-slides.html

- http://yihui.name/slides/2012-knitr-RStudio.html

- http://yihui.name/slides/2011-r-dev-lessons.html#slide1

- http://inundata.org/R_talks/BARUG/#intro

Software requirement

- Rstudio

- knitr, XML, RCurl (See omegahat or this internal link for installation on Ubuntu)

- pandoc. This is a command-line tool. I am testing it on Windows 7.

Slide #22 gives instructions to create

- regular html file by using RStudio -> Knit HTML button

- HTML5 slides by using pandoc from command line.

Files:

- Rmd source: 009-slides.Rmd. Note that IE 8 was not supported by github. For IE 9, be sure to turn off "Compatibility View".

- markdown output: 009-slides.md

- HTML output: 009-slides.html

We can create an Rmd source file in RStudio via File -> New -> R Markdown.

There are 4 ways to produce slides with pandoc

- S5

- DZSlides

- Slidy

- Slideous

Use the markdown file (md) and convert it with pandoc

pandoc -s -S -i -t dzslides --mathjax html5_slides.md -o html5_slides.html

If we are comfortable with HTML and CSS code, open the html file (generated by pandoc) and modify the CSS style at will.

Built-in examples from rmarkdown

# This is done on my ODroid xu4 running Ubuntu Mate 15.10 (Wily)

# I used sudo apt-get install pandoc in shell

# and install.packages("rmarkdown") in R 3.2.3

library(rmarkdown)

rmarkdown::render("~/R/armv7l-unknown-linux-gnueabihf-library/3.2/rmarkdown/rmarkdown/templates/html_vignette/skeleton/skeleton.Rmd")

# the output <skeleton.html> is located under the same dir as <skeleton.Rmd>

Note that the images in the HTML output are embedded in the html file itself as Base64 data. See

- http://stackoverflow.com/questions/1207190/embedding-base64-images

- Data URI scheme

- http://www.r-bloggers.com/embed-images-in-rd-documents/

- How to not embed Base64 images in RMarkdown

- Upload plots as PNG file to your wordpress

Templates

- https://github.com/rstudio/rticles/tree/master/inst/rmarkdown/templates

- https://github.com/rstudio/rticles/blob/master/inst/rmarkdown/templates/jss_article/resources/template.tex

Knit button

- It calls rmarkdown::render()

- R Markdown = knitr + Pandoc

- rmarkdown::render() = knitr::knit() + a system() call to pandoc

Pandoc's Markdown

Original Markdown was designed primarily for HTML output; Pandoc's Markdown goes further.

Extensions

- YAML metadata

- LaTeX math

- syntax highlighting

- embed raw HTML/LaTeX (raw HTML only works for HTML output and raw LaTeX only for LaTeX/pdf output)

- tables

- footnotes

- citations

Types of output documents

- LaTeX/pdf, HTML, Word

- beamer, ioslides, Slidy, reveal.js

- Ebooks

- ...

Some examples:

pandoc test.md -o test.html

pandoc test.md -s --mathjax -o test.html

pandoc test.md -o test.docx

pandoc test.md -o test.pdf

pandoc test.md --latex-engine=xelatex -o test.pdf

pandoc test.md -o test.epub

Check out ?rmarkdown::pandoc_convert.
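To run several of these conversions in one go, a small shell loop may help. This is only a sketch: convert_all and the PANDOC override variable are names made up here, and setting PANDOC=echo merely prints each argument list instead of calling pandoc.

```shell
# Convert one Markdown file to several output formats with pandoc.
# PANDOC can be overridden (e.g. PANDOC=echo) to preview the arguments
# without needing pandoc to be installed.
convert_all() {
  input=$1
  shift
  base=${input%.md}                      # "test.md" -> "test"
  for ext in "$@"; do
    "${PANDOC:-pandoc}" "$input" -o "$base.$ext" || return 1
  done
}

# Preview only: print the arguments instead of running pandoc.
PANDOC=echo convert_all test.md html docx epub
```

Dropping the PANDOC=echo prefix would run the real conversions, assuming pandoc is on the PATH.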

When you click the Knit button in RStudio, you will see the actual command that is executed.

Global options

Suppose I want to create a simple Markdown-only document without worrying about executing code. Instead of adding eval = FALSE to each code chunk, I can insert the following between the YAML header and the content. Even bash chunks will not be executed.

```{r setup, include=FALSE}

knitr::opts_chunk$set(echo = TRUE, eval = FALSE)

```

Examples/gallery

Some examples of creating papers (with references) based on knitr can be found on the Papers and reports section of the knitr website.

- https://rmarkdown.rstudio.com/gallery.html

- https://github.com/EBI-predocs/knitr-example

- https://github.com/timchurches/meta-analyses

- http://www.gastonsanchez.com/depot/knitr-slides

Read the docs Sphinx theme and journal article formats

http://blog.rstudio.org/2016/03/21/r-markdown-custom-formats/

- rticles package

- rmdformats package

rmarkdown news

Useful tricks when including images in Rmarkdown documents

http://blog.revolutionanalytics.com/2017/06/rmarkdown-tricks.html

Converting Rmarkdown to F1000Research LaTeX Format

BiocWorkflowTools package and paper

icons for rmarkdown

https://ropensci.org/technotes/2018/05/15/icon/

Reproducible data analysis

Automatic document production with R

https://itsalocke.com/improving-automatic-document-production-with-r/

Documents with logos, watermarks, and corporate styles

http://ellisp.github.io/blog/2017/09/09/rmarkdown

rticles and pinp for articles

- https://cran.r-project.org/web/packages/rticles/index.html

- http://dirk.eddelbuettel.com/code/pinp.html

Markdown language

According to wikipedia:

Markdown is a lightweight markup language, originally created by John Gruber with substantial contributions from Aaron Swartz, allowing people “to write using an easy-to-read, easy-to-write plain text format, then convert it to structurally valid XHTML (or HTML)”.

- Markup is a general term for content formatting - such as HTML - but markdown is a library that generates HTML markup.

- Nice summary from stackoverflow.com and more complete list from github.

- An example https://gist.github.com/jeromyanglim/2716336

- Convert mediawiki to markdown using online conversion tool from pandoc.

- Create an R Markdown file and use it in RStudio. Customizing chunk options is covered on the knitr page and rpubs.com.

RStudio

RStudio is the best editor.

Markdown has two drawbacks: 1. it does not support TOC natively. 2. RStudio cannot show headers in the editor.

Therefore, use rmarkdown format instead of markdown.

HTTP protocol

- http://en.wikipedia.org/wiki/File:Http_request_telnet_ubuntu.png

- Query string

- How to capture http header? Use curl -i en.wikipedia.org.

- Web Inspector. Built into Chrome. Right-click on any page and choose 'Inspect Element'.

- Web server

- Simple TCP/IP web server

- HTTP Made Really Easy

- Illustrated Guide to HTTP

- nweb: a tiny, safe Web server with 200 lines

- Tiny HTTPd

An HTTP server is conceptually simple:

- Open port 80 for listening

- When contact is made, gather a little information (mainly the GET line; you can ignore the rest for now)

- Translate the request into a file request

- Open the file and spit it back at the client

It gets more difficult depending on how much of HTTP you want to support - POST is a little more complicated, scripts, handling multiple requests, etc.
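The "translate the request into a file request" step can be sketched in shell as follows. The function name request_to_file is made up for this illustration, and the handling of "/", query strings and ".." is an assumption about what a minimal server might want, not a description of any of the servers linked here.

```shell
# Map an HTTP request line to a file under a document root.
# Prints the file path; returns non-zero for anything that is not a safe GET.
request_to_file() {
  root=$1
  line=$2
  # Split "GET /index.html HTTP/1.1" into method, target and version.
  read -r method target _version <<EOF
$line
EOF
  [ "$method" = "GET" ] || return 1      # only GET is handled in this sketch
  target=${target%%\?*}                  # drop any query string
  case $target in
    *..*) return 1 ;;                    # refuse paths that climb out of root
  esac
  if [ "$target" = "/" ]; then
    target="/index.html"                 # default document
  fi
  printf '%s%s\n' "$root" "$target"
}

request_to_file /home/brb/Downloads "GET /index.html HTTP/1.1"
# prints /home/brb/Downloads/index.html
```

A real server would then open that file and write it back after a status line and headers; that part is left out here.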

Example in R

> co <- socketConnection(port=8080, server=TRUE, blocking=TRUE)

> # Now open a web browser and type http://localhost:8080/index.html

> readLines(co,1)

[1] "GET /index.html HTTP/1.1"

> readLines(co,1)

[1] "Host: localhost:8080"

> readLines(co,1)

[1] "User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:23.0) Gecko/20100101 Firefox/23.0"

> readLines(co,1)

[1] "Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8"

> readLines(co,1)

[1] "Accept-Language: en-US,en;q=0.5"

> readLines(co,1)

[1] "Accept-Encoding: gzip, deflate"

> readLines(co,1)

[1] "Connection: keep-alive"

> readLines(co,1)

[1] ""

Example in C (Very simple http server written in C, 187 lines)

Create a simple hello world html page and save it as <index.html> in the current directory (/home/brb/Downloads/)

Launch the server program (assume we have done gcc http_server.c -o http_server)

$ ./http_server -p 50002

Server started at port no. 50002 with root directory as /home/brb/Downloads

Then open a browser and type http://localhost:50002/index.html. The server will print

GET /index.html HTTP/1.1

Host: localhost:50002

User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:23.0) Gecko/20100101 Firefox/23.0

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

Accept-Language: en-US,en;q=0.5

Accept-Encoding: gzip, deflate

Connection: keep-alive

file: /home/brb/Downloads/index.html

GET /favicon.ico HTTP/1.1

Host: localhost:50002

User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:23.0) Gecko/20100101 Firefox/23.0

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

Accept-Language: en-US,en;q=0.5

Accept-Encoding: gzip, deflate

Connection: keep-alive

file: /home/brb/Downloads/favicon.ico

GET /favicon.ico HTTP/1.1

Host: localhost:50003

User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:23.0) Gecko/20100101 Firefox/23.0

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

Accept-Language: en-US,en;q=0.5

Accept-Encoding: gzip, deflate

Connection: keep-alive

file: /home/brb/Downloads/favicon.ico

The browser will show the page from <index.html> in server.

The only bad thing is that the code does not close the port. For example, if I use Ctrl+C to close the program and try to re-launch it with the same port, it will complain: socket() or bind(): Address already in use.

Another Example in C (55 lines)

http://mwaidyanatha.blogspot.com/2011/05/writing-simple-web-server-in-c.html

The response is embedded in the C code.

If we test the server program by opening a browser and typing "http://localhost:15000/", the server receives the following 7 lines

GET / HTTP/1.1

Host: localhost:15000

User-Agent: Mozilla/5.0 (X11; Ubuntu; Linux i686; rv:23.0) Gecko/20100101 Firefox/23.0

Accept: text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8

Accept-Language: en-US,en;q=0.5

Accept-Encoding: gzip, deflate

Connection: keep-alive

If we include a non-executable file's name in the url, we will be able to download that file. Try "http://localhost:15000/client.c".

If we use the telnet program to test, we can type anything we want

$ telnet localhost 15000

Trying 127.0.0.1...

Connected to localhost.

Escape character is '^]'.

ThisCanBeAnything <=== This is what I typed in the client and it is also shown on server

HTTP/1.1 200 OK <=== From here is what I got from server

Content-length: 37Content-Type: text/html

HTML_DATA_HERE_AS_YOU_MENTIONED_ABOVE <=== The html tags are not passed from server, interesting!

Connection closed by foreign host.

$

See also more examples under C page.

Others

- http://rosettacode.org/wiki/Hello_world/ (Different languages)

- http://kperisetla.blogspot.com/2012/07/simple-http-web-server-in-c.html (Windows web server)

- http://css.dzone.com/articles/web-server-c (handling HTTP GET requests, handling content types (txt, html, jpg, zip, rar, pdf, php etc.), sending proper HTTP error codes, serving files from a web root, changing the web root in a config file, zero-copy optimization using the sendfile method, and php file handling.)

- https://github.com/gtungatkar/Simple-HTTP-server

- https://github.com/davidmoreno/onion

shiny

See Shiny.

Docker

- Using Docker as a Personal Productivity Tool – Running Command Line Apps Bundled in Docker Containers

- Dockerized RStudio server from Duke University. 110 containers were set up on a cloud server (4 cores, 28GB RAM, 400GB disk). Each container has its own port number. Each student is mapped to a single container. https://github.com/mccahill/docker-rstudio

- RStudio in the cloud with Amazon Lightsail and docker

- Mark McCahill (RStudio + Docker)

- BiocImageBuilder

- Why Use Docker with R? A DevOps Perspective

httpuv

http and WebSocket library.

See also the servr package which can start an HTTP server in R to serve static files, or dynamic documents that can be converted to HTML files (e.g., R Markdown) under a given directory.

RApache

gWidgetsWWW

- http://www.jstatsoft.org/v49/i10/paper

- gWidgetsWWW2 gWidgetsWWW based on Rook

- Compare shiny with gWidgetsWWW2.rapache

Rook

Since R 2.13, the internal web server has been exposed.

Tutorial from useR2012 and Jeffrey Horner

Here is another one from http://www.rinfinance.com.

Rook is also supported by rApache. See http://rapache.net/manual.html.

Google group. https://groups.google.com/forum/?fromgroups#!forum/rrook

Advantage

- the web applications are created on desktop, whether it is Windows, Mac or Linux.

- No Apache is needed.

- create multiple applications at the same time. This makes up for a limitation of rApache.

A short code example.

library(Rook)

s <- Rhttpd$new()

s$start(quiet=TRUE)

s$print()

s$browse(1) # OR s$browse("RookTest")

Notice that after the s$browse() command, the cursor returns to R because the command is just a shortcut to open the web page http://127.0.0.1:10215/custom/RookTest.

We can add Rook application to the server; see ?Rhttpd.

s$add(

app=system.file('exampleApps/helloworld.R',package='Rook'),name='hello'

)

s$add(

app=system.file('exampleApps/helloworldref.R',package='Rook'),name='helloref'

)

s$add(

app=system.file('exampleApps/summary.R',package='Rook'),name='summary'

)

s$print()

#Server started on 127.0.0.1:10221

#[1] RookTest http://127.0.0.1:10221/custom/RookTest

#[2] helloref http://127.0.0.1:10221/custom/helloref

#[3] summary http://127.0.0.1:10221/custom/summary

#[4] hello http://127.0.0.1:10221/custom/hello

# Stops the server but doesn't uninstall the app

## Not run:

s$stop()

## End(Not run)

s$remove(all=TRUE)

rm(s)

For example, the interface and the source code of summary app are given below

app <- function(env) {

req <- Rook::Request$new(env)

res <- Rook::Response$new()

res$write('Choose a CSV file:\n')

res$write('<form method="POST" enctype="multipart/form-data">\n')

res$write('<input type="file" name="data">\n')

res$write('<input type="submit" name="Upload">\n</form>\n<br>')

if (!is.null(req$POST())){

data <- req$POST()[['data']]

res$write("<h3>Summary of Data</h3>");

res$write("<pre>")

res$write(paste(capture.output(summary(read.csv(data$tempfile,stringsAsFactors=FALSE)),file=NULL),collapse='\n'))

res$write("</pre>")

res$write("<h3>First few lines (head())</h3>");

res$write("<pre>")

res$write(paste(capture.output(head(read.csv(data$tempfile,stringsAsFactors=FALSE)),file=NULL),collapse='\n'))

res$write("</pre>")

}

res$finish()

}

More examples:

- http://lamages.blogspot.com/2012/08/rook-rocks-example-with-googlevis.html

- Self-organizing map

- Deploy Rook apps with rApache. First one and two.

- A Simple Prediction Web Service Using the New fiery Package

sumo

Sumo is a fully-functional web application template that exposes an authenticated user's R session within java server pages. See the paper http://journal.r-project.org/archive/2012-1/RJournal_2012-1_Bergsma+Smith.pdf.

Stockplot

FastRWeb

http://cran.r-project.org/web/packages/FastRWeb/index.html

Rwui

CGHWithR and WebDevelopR

CGHwithR still works with old versions of R although it has been removed from CRAN. Its successor is WebDevelopR. The vignette (year 2013) provides a review of several available methods.

manipulate from RStudio

This is not a web application, but the manipulate package can be used to easily create interactive plots within the R(Studio) environment. Its source is available here.

Mathematica also has manipulate function for plotting; see here.

RCloud

RCloud is an environment for collaboratively creating and sharing data analysis scripts. RCloud lets you mix analysis code in R, HTML5, Markdown, Python, and others. Much like Sage, iPython notebooks and Mathematica, RCloud provides a notebook interface that lets you easily record a session and annotate it with text, equations, and supporting images.

See also the Talk in UseR 2014.

Dropbox access

rdrop2 package

Web page scraping

http://www.slideshare.net/schamber/web-data-from-r#btnNext

xml2 package

rvest package depends on xml2.

purrr

- https://purrr.tidyverse.org/

- Getting started with the purrr package in R, especially the map() function.

rvest

On Ubuntu, we need to install two packages first!

sudo apt-get install libcurl4-openssl-dev # OR libcurl4-gnutls-dev

sudo apt-get install libxml2-dev

- https://github.com/hadley/rvest

- Visualizing obesity across United States by using data from Wikipedia

- rvest tutorial: scraping the web using R

- https://renkun.me/pipeR-tutorial/Examples/rvest.html

- http://zevross.com/blog/2015/05/19/scrape-website-data-with-the-new-r-package-rvest/

- Google scholar scraping with rvest package

- Animating Changes in Football Kits using R: rvest, tidyverse, xml2, purrr & magick

V8: Embedded JavaScript Engine for R

R⁶ — General (Attys) Distributions: V8, rvest, ggbeeswarm, hrbrthemes and tidyverse packages are used.

pubmed.mineR

Text mining of PubMed Abstracts (http://www.ncbi.nlm.nih.gov/pubmed). The algorithms are designed for two formats (text and XML) from PubMed.

R code for scraping the P-values from pubmed, calculating the Science-wise False Discovery Rate, et al (Jeff Leek)

Diving Into Dynamic Website Content with splashr

https://rud.is/b/2017/02/09/diving-into-dynamic-website-content-with-splashr/

Send email

mailR

Easiest. Requires the rJava package (not trivial to install, see rJava). mailR is an interface to Apache Commons Email to send emails from within R. See also send bulk email

Before using the mailR package, we followed the instructions here to set Allow less secure apps: 'ON'; otherwise you might get the error Error: EmailException (Java): Sending the email to the following server failed : smtp.gmail.com:465. Once we turn on this option, we may get an email notifying us of the change. Note that the recipient does not have to be a gmail address.

> send.mail(from = "[email protected]",

to = c("[email protected]", "Recipient 2 <[email protected]>"),

replyTo = c("Reply to someone else <[email protected]>"),

subject = "Subject of the email",

body = "Body of the email",

smtp = list(host.name = "smtp.gmail.com", port = 465, user.name = "gmail_username", passwd = "password", ssl = TRUE),

authenticate = TRUE,

send = TRUE)

[1] "Java-Object{org.apache.commons.mail.SimpleEmail@7791a895}"

gmailr

More complicated. gmailr provides access to Google's gmail.com RESTful API. See the vignette and an example here. Note that it does not use a password; it uses a json file, downloaded from https://console.cloud.google.com/, for oauth authentication. See also https://github.com/jimhester/gmailr/issues/1.

library(gmailr)

gmail_auth('mysecret.json', scope = 'compose')

test_email <- mime() %>%

to("[email protected]") %>%

from("[email protected]") %>%

subject("This is a subject") %>%

html_body("<html><body>I wish <b>this</b> was bold</body></html>")

send_message(test_email)

sendmailR

sendmailR provides a simple SMTP client. It is not clear how to use the package (i.e. where to enter the password).

GEO (Gene Expression Omnibus)

See this internal link.

Interactive html output

sendplot

RIGHT

The supported plot types include scatterplot, barplot, box plot, line plot and pie plot.

In addition to tooltip boxes, the package can create a table showing all information about selected nodes.

d3Network

- http://christophergandrud.github.io/d3Network/ (old)

- https://christophergandrud.github.io/networkD3/ (new)

library(d3Network)

Source <- c("A", "A", "A", "A", "B", "B", "C", "C", "D")

Target <- c("B", "C", "D", "J", "E", "F", "G", "H", "I")

NetworkData <- data.frame(Source, Target)

d3SimpleNetwork(NetworkData, height = 800, width = 1024, file="tmp.html")

htmlwidgets for R

Embed widgets in R Markdown documents and Shiny web applications.

- Official website http://www.htmlwidgets.org/.

- How to write a useful htmlwidgets in R: tips and walk-through a real example

networkD3

This is a port of Christopher Gandrud's d3Network package to the htmlwidgets framework.

scatterD3

scatterD3 is an HTML R widget for interactive scatter plots visualization. It is based on the htmlwidgets R package and on the d3.js javascript library.

d3heatmap

A package that generates interactive heatmaps using d3.js and htmlwidgets. The following screenshots show 3 features.

- Shows the row/column/value under the mouse cursor

- Zoom in on a region (clicking on the zoom-in image will bring back the original heatmap)

- Highlight a row or a column (clicking the label of another row will highlight that row; clicking the same label again will bring back the original image)

svgPanZoom

This 'htmlwidget' provides pan and zoom interactivity to R graphics, including 'base', 'lattice', and 'ggplot2'. The interactivity is provided through the 'svg-pan-zoom.js' library.

DT: An R interface to the DataTables library

plotly

- Power curves and ggplot2.

- TIME SERIES CHARTS BY THE ECONOMIST IN R USING PLOTLY & FIVE INTERACTIVE R VISUALIZATIONS WITH D3, GGPLOT2, & RSTUDIO

- Filled chord diagram

- DASHBOARDS IN R WITH SHINY & PLOTLY

- Plotly Graphs in Shiny

- How to plot basic charts with plotly

Amazon

Download product information and reviews from Amazon.com

sudo apt-get install libxml2-dev

sudo apt-get install libcurl4-openssl-dev

and in R

install.packages("devtools")

install.packages("XML")

install.packages("pbapply")

install.packages("dplyr")

devtools::install_github("56north/Rmazon")

product_info <- Rmazon::get_product_info("1593273843")

reviews <- Rmazon::get_reviews("1593273843")

reviews[1,6] # only show partial characters from the 1st review

nchar(reviews[1,6])

as.character(reviews[1,6]) # show the complete text from the 1st review

gutenbergr

OCR

Tesseract package: High Quality OCR in R

Creating local repository for CRAN and Bioconductor (focus on Windows binary packages only)

How to set up a local repository

- CRAN specific: http://cran.r-project.org/mirror-howto.html

- Bioconductor specific: http://www.bioconductor.org/about/mirrors/mirror-how-to/

General guide: http://cran.r-project.org/doc/manuals/R-admin.html#Setting-up-a-package-repository

Utilities such as install.packages can be pointed at any CRAN-style repository, and R users may want to set up their own. The ‘base’ of a repository is a URL such as http://www.omegahat.org/R/: this must be an URL scheme that download.packages supports (which also includes ‘ftp://’ and ‘file://’, but not on most systems ‘https://’). Under that base URL there should be directory trees for one or more of the following types of package distributions:

- "source": located at src/contrib and containing .tar.gz files. Other forms of compression can be used, e.g. .tar.bz2 or .tar.xz files.

- "win.binary": located at bin/windows/contrib/x.y for R versions x.y.z and containing .zip files for Windows.

- "mac.binary.leopard": located at bin/macosx/leopard/contrib/x.y for R versions x.y.z and containing .tgz files.

Each terminal directory must also contain a PACKAGES file. This can be a concatenation of the DESCRIPTION files of the packages separated by blank lines, but only a few of the fields are needed. The simplest way to set up such a file is to use function write_PACKAGES in the tools package, and its help explains which fields are needed. Optionally there can also be a PACKAGES.gz file, a gzip-compressed version of PACKAGES—as this will be downloaded in preference to PACKAGES it should be included for large repositories. (If you have a mis-configured server that does not report correctly non-existent files you will need PACKAGES.gz.)

To add your repository to the list offered by setRepositories(), see the help file for that function.

A repository can contain subdirectories, when the descriptions in the PACKAGES file of packages in subdirectories must include a line of the form

Path: path/to/subdirectory

—once again write_PACKAGES is the simplest way to set this up.
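The directory layout quoted above can be sketched in shell. The helper name repo_dirs is made up here, and it assumes a full x.y.z version string is passed in; it simply prints the terminal directories, relative to the repository base, that would each need a PACKAGES file.

```shell
# Print the terminal directories (relative to the repository base) of a
# CRAN-style repository for a given R version x.y.z.
repo_dirs() {
  version=$1
  minor=${version%.*}                    # "2.15.2" -> "2.15"
  printf '%s\n' \
    "src/contrib" \
    "bin/windows/contrib/$minor" \
    "bin/macosx/leopard/contrib/$minor"
}

repo_dirs 2.15.2
```

The output could be fed to mkdir -p under a local base directory when preparing a partial mirror.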

Space requirement if we want to mirror WHOLE repository

- Whole CRAN takes about 92GB (rsync -avn cran.r-project.org::CRAN > ~/Downloads/cran).

- Bioconductor is big (> 64G for BioC 2.11). Please check the size of what will be transferred with e.g. (rsync -avn bioconductor.org::2.11 > ~/Downloads/bioc) and make sure you have enough room on your local disk before you start.

On the other hand, if we only care about the Windows binary part, the space requirement is greatly reduced.

- CRAN: 2.7GB

- Bioconductor: 28GB.

Misc notes

- If the binary package was built on R 2.15.1, then it cannot be installed on R 2.15.2. But vice versa is OK.

- Remember to use the "--delete" option in rsync; otherwise old versions of packages will be installed.

- The repository still needs the src directory. If it is missing, we will get an error

Warning: unable to access index for repository http://arraytools.no-ip.org/CRAN/src/contrib

Warning message:

package ‘glmnet’ is not available (for R version 2.15.2)

The error was given by available.packages() function.

To bypass the requirement of src directory, I can use

install.packages("glmnet", contriburl = contrib.url(getOption('repos'), "win.binary"))

but there may be a problem when we use biocLite() command.

I found a workaround. Since the error comes from the missing CRAN/src directory, we just need to make sure that the directory CRAN/src/contrib exists AND that either CRAN/src/contrib/PACKAGES or CRAN/src/contrib/PACKAGES.gz exists.
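A quick way to check that condition on a mirror is sketched below. The function name has_src_index is made up for this note, and ~/Rmirror/CRAN is just the mirror location used elsewhere on this page; adjust it as needed.

```shell
# Succeeds if <repo>/src/contrib exists and holds PACKAGES or PACKAGES.gz.
has_src_index() {
  dir="$1/src/contrib"
  [ -d "$dir" ] || return 1
  [ -f "$dir/PACKAGES" ] || [ -f "$dir/PACKAGES.gz" ]
}

# Assumed mirror location, matching the layout used in these notes.
repo="$HOME/Rmirror/CRAN"
if has_src_index "$repo"; then
  echo "source index found in $repo"
else
  echo "source index missing in $repo"
fi
```

The check only looks at the file system; it does not verify that the index file is well formed.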

To create CRAN repository

Before creating a local repository, please do a dry run first. You don't want to be surprised by how long it takes to mirror a directory.

Dry run (-n option). Pipe the output to a text file for examination.

rsync -avn cran.r-project.org::CRAN > crandryrun.txt
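One thing worth pulling out of the saved dry-run output is the total size. The sketch below assumes the file ends with rsync's usual summary line of the form "total size is N  speedup is ..."; the helper name rsync_total_bytes is invented here, and the sample file holds made-up numbers rather than real CRAN figures.

```shell
# Extract the "total size" figure (in bytes) from saved rsync output.
# Thousands separators, if present, are removed.
rsync_total_bytes() {
  awk '/^total size is/ { gsub(",", "", $4); print $4 }' "$1"
}

# Hand-made sample standing in for a real dry-run file (numbers are made up).
sample=$(mktemp)
printf '%s\n' \
  'bin/windows/contrib/2.15/PACKAGES' \
  'total size is 2,899,102,924  speedup is 1,234.56 (DRY RUN)' > "$sample"

rsync_total_bytes "$sample"
rm -f "$sample"
```

For the real file this would be called as rsync_total_bytes crandryrun.txt.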

To mirror only part of the repository, it is necessary to create the directories before running the rsync command.

cd

mkdir -p ~/Rmirror/CRAN/bin/windows/contrib/2.15

rsync -rtlzv --delete cran.r-project.org::CRAN/bin/windows/contrib/2.15/ ~/Rmirror/CRAN/bin/windows/contrib/2.15

# (the rsync command above is one line, with a space before ~/Rmirror)

# src directory is very large (~27GB) since it contains source code for each R version.

# We just need the files PACKAGES and PACKAGES.gz in CRAN/src/contrib. So I comment out the following line.

# rsync -rtlzv --delete cran.r-project.org::CRAN/src/ ~/Rmirror/CRAN/src/

mkdir -p ~/Rmirror/CRAN/src/contrib

rsync -rtlzv --delete cran.r-project.org::CRAN/src/contrib/PACKAGES ~/Rmirror/CRAN/src/contrib/

rsync -rtlzv --delete cran.r-project.org::CRAN/src/contrib/PACKAGES.gz ~/Rmirror/CRAN/src/contrib/

And optionally

library(tools)

write_PACKAGES("~/Rmirror/CRAN/bin/windows/contrib/2.15", type="win.binary")

and if we want to get src directory

rsync -rtlzv --delete cran.r-project.org::CRAN/src/contrib/*.tar.gz ~/Rmirror/CRAN/src/contrib/

rsync -rtlzv --delete cran.r-project.org::CRAN/src/contrib/2.15.3 ~/Rmirror/CRAN/src/contrib/

We can use du -h to check the folder size.

For example (as of 1/7/2013),

$ du -k ~/Rmirror --max-depth=1 --exclude ".*" | sort -nr | cut -f2 | xargs -d '\n' du -sh

30G /home/brb/Rmirror

28G /home/brb/Rmirror/Bioc

2.7G /home/brb/Rmirror/CRAN

To create Bioconductor repository

Dry run

rsync -avn bioconductor.org::2.11 > biocdryrun.txt

Then create the directories before running rsync.

cd

mkdir -p ~/Rmirror/Bioc

wget -N http://www.bioconductor.org/biocLite.R -P ~/Rmirror/Bioc

where -N overwrites the original file if the size or timestamp has changed, and -P in wget specifies an output directory, not a file name.

Optionally, we can add the following in order to see the Bioconductor front page.

rsync -zrtlv --delete bioconductor.org::2.11/BiocViews.html ~/Rmirror/Bioc/packages/2.11/

rsync -zrtlv --delete bioconductor.org::2.11/index.html ~/Rmirror/Bioc/packages/2.11/

The software part (aka bioc directory) installation:

cd

mkdir -p ~/Rmirror/Bioc/packages/2.11/bioc/bin/windows

mkdir -p ~/Rmirror/Bioc/packages/2.11/bioc/src

rsync -zrtlv --delete bioconductor.org::2.11/bioc/bin/windows/ ~/Rmirror/Bioc/packages/2.11/bioc/bin/windows

# Either rsync whole src directory or just essential files

# rsync -zrtlv --delete bioconductor.org::2.11/bioc/src/ ~/Rmirror/Bioc/packages/2.11/bioc/src

rsync -zrtlv --delete bioconductor.org::2.11/bioc/src/contrib/PACKAGES ~/Rmirror/Bioc/packages/2.11/bioc/src/contrib/

rsync -zrtlv --delete bioconductor.org::2.11/bioc/src/contrib/PACKAGES.gz ~/Rmirror/Bioc/packages/2.11/bioc/src/contrib/

# Optionally the html part

mkdir -p ~/Rmirror/Bioc/packages/2.11/bioc/html

rsync -zrtlv --delete bioconductor.org::2.11/bioc/html/ ~/Rmirror/Bioc/packages/2.11/bioc/html

mkdir -p ~/Rmirror/Bioc/packages/2.11/bioc/vignettes

rsync -zrtlv --delete bioconductor.org::2.11/bioc/vignettes/ ~/Rmirror/Bioc/packages/2.11/bioc/vignettes

mkdir -p ~/Rmirror/Bioc/packages/2.11/bioc/news

rsync -zrtlv --delete bioconductor.org::2.11/bioc/news/ ~/Rmirror/Bioc/packages/2.11/bioc/news

mkdir -p ~/Rmirror/Bioc/packages/2.11/bioc/licenses

rsync -zrtlv --delete bioconductor.org::2.11/bioc/licenses/ ~/Rmirror/Bioc/packages/2.11/bioc/licenses

mkdir -p ~/Rmirror/Bioc/packages/2.11/bioc/manuals

rsync -zrtlv --delete bioconductor.org::2.11/bioc/manuals/ ~/Rmirror/Bioc/packages/2.11/bioc/manuals

mkdir -p ~/Rmirror/Bioc/packages/2.11/bioc/readmes

rsync -zrtlv --delete bioconductor.org::2.11/bioc/readmes/ ~/Rmirror/Bioc/packages/2.11/bioc/readmes

and annotation (aka data directory) part:

mkdir -p ~/Rmirror/Bioc/packages/2.11/data/annotation/bin/windows

mkdir -p ~/Rmirror/Bioc/packages/2.11/data/annotation/src/contrib

# one line for each of the following

rsync -zrtlv --delete bioconductor.org::2.11/data/annotation/bin/windows/ ~/Rmirror/Bioc/packages/2.11/data/annotation/bin/windows

rsync -zrtlv --delete bioconductor.org::2.11/data/annotation/src/contrib/PACKAGES ~/Rmirror/Bioc/packages/2.11/data/annotation/src/contrib/

rsync -zrtlv --delete bioconductor.org::2.11/data/annotation/src/contrib/PACKAGES.gz ~/Rmirror/Bioc/packages/2.11/data/annotation/src/contrib/

and experiment directory:

mkdir -p ~/Rmirror/Bioc/packages/2.11/data/experiment/bin/windows/contrib/2.15

mkdir -p ~/Rmirror/Bioc/packages/2.11/data/experiment/src/contrib

# one line for each of the following

# Note that we are cheating by only downloading PACKAGES and PACKAGES.gz files

rsync -zrtlv --delete bioconductor.org::2.11/data/experiment/bin/windows/contrib/2.15/PACKAGES ~/Rmirror/Bioc/packages/2.11/data/experiment/bin/windows/contrib/2.15/

rsync -zrtlv --delete bioconductor.org::2.11/data/experiment/bin/windows/contrib/2.15/PACKAGES.gz ~/Rmirror/Bioc/packages/2.11/data/experiment/bin/windows/contrib/2.15/

rsync -zrtlv --delete bioconductor.org::2.11/data/experiment/src/contrib/PACKAGES ~/Rmirror/Bioc/packages/2.11/data/experiment/src/contrib/

rsync -zrtlv --delete bioconductor.org::2.11/data/experiment/src/contrib/PACKAGES.gz ~/Rmirror/Bioc/packages/2.11/data/experiment/src/contrib/

and extra directory:

mkdir -p ~/Rmirror/Bioc/packages/2.11/extra/bin/windows/contrib/2.15

mkdir -p ~/Rmirror/Bioc/packages/2.11/extra/src/contrib

# one line for each of the following

# Note that we are cheating by only downloading PACKAGES and PACKAGES.gz files

rsync -zrtlv --delete bioconductor.org::2.11/extra/bin/windows/contrib/2.15/PACKAGES ~/Rmirror/Bioc/packages/2.11/extra/bin/windows/contrib/2.15/

rsync -zrtlv --delete bioconductor.org::2.11/extra/bin/windows/contrib/2.15/PACKAGES.gz ~/Rmirror/Bioc/packages/2.11/extra/bin/windows/contrib/2.15/

rsync -zrtlv --delete bioconductor.org::2.11/extra/src/contrib/PACKAGES ~/Rmirror/Bioc/packages/2.11/extra/src/contrib/

rsync -zrtlv --delete bioconductor.org::2.11/extra/src/contrib/PACKAGES.gz ~/Rmirror/Bioc/packages/2.11/extra/src/contrib/

To test local repository

Create soft links in Apache server

su

ln -s /home/brb/Rmirror/CRAN /var/www/html/CRAN

ln -s /home/brb/Rmirror/Bioc /var/www/html/Bioc

ls -l /var/www/html

The soft link mode should be 777.

To test CRAN

Replace the host name arraytools.no-ip.org with the IP address 10.133.2.111 if necessary.

r <- getOption("repos"); r["CRAN"] <- "http://arraytools.no-ip.org/CRAN"

options(repos=r)

install.packages("glmnet")

We can test whether the backup server is working by installing a package that has been removed from CRAN. For example, 'ForImp' was removed from CRAN on 11/8/2012, but I still have a local copy built on R 2.15.2 (rsync was run on 11/6/2012).

r <- getOption("repos"); r["CRAN"] <- "http://cran.r-project.org"

r <- c(r, BRB='http://arraytools.no-ip.org/CRAN')

# CRAN CRANextra BRB

# "http://cran.r-project.org" "http://www.stats.ox.ac.uk/pub/RWin" "http://arraytools.no-ip.org/CRAN"

options(repos=r)

install.packages('ForImp')
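The repos handling above can be wrapped in a small helper that also copes with the "@CRAN@" placeholder R uses before a mirror has been chosen. This is a sketch only; set_cran_mirror is a hypothetical name, not a function from utils.

```r
# Hypothetical helper: fill in a CRAN mirror only when none has been chosen.
# R marks "no mirror chosen yet" with the placeholder string "@CRAN@".
set_cran_mirror <- function(repos, url) {
  if (is.null(repos) || is.na(repos["CRAN"]) || repos[["CRAN"]] == "@CRAN@") {
    repos["CRAN"] <- url
  }
  repos
}

r <- c(CRAN = "@CRAN@", CRANextra = "http://www.stats.ox.ac.uk/pub/RWin")
r <- set_cran_mirror(r, "http://cran.r-project.org")
r <- c(r, BRB = "http://arraytools.no-ip.org/CRAN")  # append the local mirror
print(r)
# To apply the result: options(repos = r)
```

An entry that already points at a real mirror is left untouched, so the helper should be safe to call from a startup file.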

Note that by default the CRAN mirror is selected interactively.

> getOption("repos")

CRAN CRANextra

"@CRAN@" "http://www.stats.ox.ac.uk/pub/RWin"

To test Bioconductor

# CRAN part:

r <- getOption("repos"); r["CRAN"] <- "http://arraytools.no-ip.org/CRAN"

options(repos=r)

# Bioconductor part:

options("BioC_mirror" = "http://arraytools.no-ip.org/Bioc")

source("http://bioconductor.org/biocLite.R")

# This source biocLite.R line can be placed either before or after the previous 2 lines

biocLite("aCGH")

If there is a connection problem, check folder attributes.

chmod -R 755 ~/CRAN/bin

- Note that if a binary package was created for R 2.15.1, then it can be installed under R 2.15.1 but not R 2.15.2. The R console will show package xxx is not available (for R version 2.15.2).

- For binary installs, the function also checks for the availability of a source package on the same repository, and reports if the source package has a later version, or is available but no binary version is.

For example, if the mirror has no contents under the src directory, we need to run the following line before install.packages() can succeed.

options(install.packages.check.source = "no")
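If the option should apply to a single install only, it can be set temporarily and restored afterwards. A minimal sketch follows; with_source_check_off is a hypothetical helper name.

```r
# Hypothetical helper: evaluate an expression with the source check disabled,
# then restore whatever value the option had before.
with_source_check_off <- function(expr) {
  old <- options(install.packages.check.source = "no")
  on.exit(options(old), add = TRUE)
  force(expr)  # the lazily evaluated argument runs here, while the option is "no"
}

before <- getOption("install.packages.check.source")
inside <- with_source_check_off(getOption("install.packages.check.source"))
after  <- getOption("install.packages.check.source")

# Typical use (not run here because it needs a repository):
# with_source_check_off(install.packages("glmnet"))
```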

- If we mirror only the essential directories, biocLite() still runs successfully. However, the R console will give some warnings:

> biocLite("aCGH")

BioC_mirror: http://arraytools.no-ip.org/Bioc

Using Bioconductor version 2.11 (BiocInstaller 1.8.3), R version 2.15.

Installing package(s) 'aCGH'

Warning: unable to access index for repository http://arraytools.no-ip.org/Bioc/packages/2.11/data/experiment/src/contrib

Warning: unable to access index for repository http://arraytools.no-ip.org/Bioc/packages/2.11/extra/src/contrib

Warning: unable to access index for repository http://arraytools.no-ip.org/Bioc/packages/2.11/data/experiment/bin/windows/contrib/2.15

Warning: unable to access index for repository http://arraytools.no-ip.org/Bioc/packages/2.11/extra/bin/windows/contrib/2.15

trying URL 'http://arraytools.no-ip.org/Bioc/packages/2.11/bioc/bin/windows/contrib/2.15/aCGH_1.36.0.zip'

Content type 'application/zip' length 2431158 bytes (2.3 Mb)

opened URL

downloaded 2.3 Mb

package ‘aCGH’ successfully unpacked and MD5 sums checked

The downloaded binary packages are in

C:\Users\limingc\AppData\Local\Temp\Rtmp8IGGyG\downloaded_packages

Warning: unable to access index for repository http://arraytools.no-ip.org/Bioc/packages/2.11/data/experiment/bin/windows/contrib/2.15

Warning: unable to access index for repository http://arraytools.no-ip.org/Bioc/packages/2.11/extra/bin/windows/contrib/2.15

> library()

CRAN repository directory structure

The information below is specific to R 2.15.2. There are linux and macosx subdirectories wherever there is a windows subdirectory.

bin/windows/contrib/2.15

src/contrib

/contrib/2.15.2

/contrib/Archive

web/checks

/dcmeta

/packages

/views

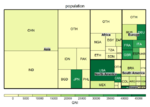

A clickable map [1]
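The bin and src paths in this layout are the ones install.packages() looks up. utils::contrib.url() shows how R derives them from a repository root; the sketch below uses the mirror host from the earlier examples purely as an illustration and makes no network request.

```r
repo <- "http://arraytools.no-ip.org/CRAN"

# Source packages always live under src/contrib
src_url <- utils::contrib.url(repo, type = "source")

# Windows binaries live under bin/windows/contrib/<major.minor of the running R>
win_url <- utils::contrib.url(repo, type = "win.binary")

print(src_url)
print(win_url)
```

The version directory depends on the R version that evaluates the call, which is why a mirror needs one binary directory per R minor series.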

CRAN package download statistics from RStudio

- Daily download statistics http://cran-logs.rstudio.com/. Note the page is split into 'package' downloads and 'R' downloads. It tracks:

- Package: date, time, size, r_version, r_arch, r_os, package, version, country, ip_id.

- R: date, time, size, R version, os (win/src/osx), country, ip_id (reset daily).

- https://www.r-bloggers.com/finally-tracking-cran-packages-downloads/. The code still works.

- https://strengejacke.wordpress.com/2015/03/07/cran-download-statistics-of-any-packages-rstats/
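As a rough sketch of how the daily package logs can be addressed, the helper below builds one URL per day. It assumes the files follow the year/date.csv.gz layout described on the cran-logs page; cran_log_urls is a hypothetical name and nothing is downloaded.

```r
# Hypothetical helper: one log URL per day in the range [from, to].
# Assumes the layout http://cran-logs.rstudio.com/<year>/<yyyy-mm-dd>.csv.gz
cran_log_urls <- function(from, to) {
  days <- seq(as.Date(from), as.Date(to), by = "day")
  sprintf("http://cran-logs.rstudio.com/%s/%s.csv.gz",
          format(days, "%Y"), format(days, "%Y-%m-%d"))
}

urls <- cran_log_urls("2015-03-06", "2015-03-07")
print(urls)

# Downloading and tabulating one day (not run here):
# tmp <- tempfile(fileext = ".csv.gz")
# download.file(urls[1], tmp)
# logs <- read.csv(gzfile(tmp))
# head(sort(table(logs$package), decreasing = TRUE))
```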

Bioconductor package download statistics

http://bioconductor.org/packages/stats/

Bioconductor repository directory structure

The information below is specific to Bioc 2.11 (R 2.15). There are linux and macosx subdirectories wherever there is a windows subdirectory.

bioc/bin/windows/contrib/2.15

/html

/install

/license

/manuals

/news

/src

/vignettes

data/annotation/bin/windows/contrib/2.15

/html

/licenses

/manuals

/src

/vignettes

/experiment/bin/windows/contrib/2.15

/html

/manuals

/src/contrib

/vignettes

extra/bin/windows/contrib

/html

/src

/vignettes

List all R packages from CRAN/Bioconductor

Check my daily result based on R 2.15 and Bioc 2.11 in [2]

See METACRAN for packages hosted on CRAN. The 'https://github.com/metacran/PACKAGES' file contains the latest update.

r-hub: the everything-builder the R community needs

https://github.com/r-hub/proposal

Introducing R-hub, the R package builder service

- https://www.rstudio.com/resources/videos/r-hub-overview/

- http://blog.revolutionanalytics.com/2016/10/r-hub-public-beta.html

Parallel Computing

- Example code for the book Parallel R by McCallum and Weston.

- A gentle introduction to parallel computing in R

- An introduction to distributed memory parallelism in R and C

- Processing: When does it worth?

Windows Security Warning

It seems safe to choose 'Cancel' when Windows Firewall tries to block the R program as makeCluster() creates a socket cluster.

library(parallel)

cl <- makeCluster(2)

clusterApply(cl, 1:2, get("+"), 3)

stopCluster(cl)

To see the current firewall settings, click the Windows Start button, search for 'Firewall' and choose 'Windows Firewall with Advanced Security'. Under 'Inbound Rules', we can see which programs (such as R for Windows GUI front-end or Rserve) have rules. These rules are marked 'private' in the 'Profile' column. Note that each program may appear twice, once for the TCP protocol and once for UDP.

Detect number of cores

parallel::detectCores()

Don't rely on the default getOption("mc.cores", 2L) in mclapply() unless you are developing a package; it returns only 2 unless the mc.cores option has been set.

However, it is a different story when we run R code on an HPC cluster. Read the discussion Whether to use the detectCores function in R to specify the number of cores for parallel processing?

On NIH's biowulf, even if I request an interactive session with 4 cores, parallel::detectCores() returns 56. This number is the same as the output from the bash command grep processor /proc/cpuinfo or (better) lscpu. Likewise, free -hm reports the node's full 125GB instead of my requested size (4GB by default).
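One way around this is to ask the scheduler rather than the hardware. The sketch below assumes a Slurm-managed cluster that exports SLURM_CPUS_PER_TASK for the job (an assumption, check your site's documentation); n_workers is a hypothetical helper name.

```r
library(parallel)

# Hypothetical helper: prefer the scheduler's allocation when it is known,
# otherwise fall back to the hardware count minus one.
n_workers <- function() {
  slurm <- Sys.getenv("SLURM_CPUS_PER_TASK", unset = "")  # assumed Slurm variable
  if (nzchar(slurm)) return(as.integer(slurm))
  n <- detectCores()            # may be NA on some platforms
  if (is.na(n)) 1L else max(1L, n - 1L)
}

# Simulate a 4-core allocation to show the effect
Sys.setenv(SLURM_CPUS_PER_TASK = "4")
allocated <- n_workers()
Sys.unsetenv("SLURM_CPUS_PER_TASK")
fallback <- n_workers()

print(c(allocated = allocated, fallback = fallback))
```

The result can then be passed to makeCluster() or to the mc.cores argument of mclapply().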

parallel package

The parallel package has been included in R since version 2.14.0. It is derived from the snow and multicore packages and provides many of the same functions as those packages.

The parallel package provides several *apply functions for R users to quickly modify their code using parallel computing.

- makeCluster(makePSOCKcluster, makeForkCluster), stopCluster. Other cluster types are passed to package snow.

- clusterCall, clusterEvalQ, clusterSplit

- clusterApply, clusterApplyLB

- clusterExport

- clusterMap

- parLapply, parSapply, parApply, parRapply, parCapply

- parLapplyLB, parSapplyLB (load balance version)

- clusterSetRNGStream, nextRNGStream, nextRNGSubStream

Examples (See ?clusterApply)

library(parallel)

cl <- makeCluster(2, type = "PSOCK") # type = "SOCK" would be passed to the snow package, which must then be installed

clusterApply(cl, 1:2, function(x) x*3) # OR clusterApply(cl, 1:2, get("*"), 3)

# [[1]]

# [1] 3

#

# [[2]]

# [1] 6

parSapply(cl, 1:20, get("+"), 3)

# [1] 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23

stopCluster(cl)

snow package

Supported cluster types are "SOCK", "PVM", "MPI", and "NWS".

multicore package

This package has been removed from CRAN.

Consider using package ‘parallel’ instead.

foreach package

This package needs one of the following packages as a backend:

- doParallel - Foreach parallel adaptor for the parallel package

- doSNOW - Foreach parallel adaptor for the snow package

- doMC - Foreach parallel adaptor for the multicore package. Used in glmnet vignette.

- doMPI - Foreach parallel adaptor for the Rmpi package

- doRedis - Foreach parallel adapter for the rredis package

library(foreach)

library(doParallel)

m <- matrix(rnorm(9), 3, 3)

cl <- makeCluster(2, type = "PSOCK") # "SOCK" would require the snow package

registerDoParallel(cl)

# divide each row by its mean, one row per task

foreach(i=1:nrow(m), .combine=rbind) %dopar% (m[i,] / mean(m[i,]))

stopCluster(cl)

See also the post Updates to the foreach package and its friends (Oct 2015).

Replacing double loops

- https://stackoverflow.com/questions/30927693/how-can-i-parallelize-a-double-for-loop-in-r

- http://www.exegetic.biz/blog/2013/08/the-wonders-of-foreach/

library(foreach)

library(doParallel)

nc <- 4

nr <- 2

cores=detectCores()

cl <- makeCluster(cores[1]-1)

registerDoParallel(cl)

# set.seed(1234) # not work

# set.seed(1234, "L'Ecuyer-CMRG") # not work either

# library("doRNG")

# registerDoRNG(seed = 1985) # not work with nested foreach

# Error in list(e1 = list(args = (1:nr)(), argnames = "i", evalenv = <environment>, :

# nested/conditional foreach loops are not supported yet.

m <- foreach (i = 1:nr, .combine='rbind') %:% # nesting operator

foreach (j = 1:nc) %dopar% {

rnorm(1, i*5, j) # code to parallelise

}

m

stopCluster(cl)

Note that since the random seed (see the next section) does not work with nested loops, it is better to convert a nested loop (two indices) into a single loop (one index).
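A minimal sketch of that conversion is given below. To keep it dependency-free it uses parSapply() and clusterSetRNGStream() from the parallel package instead of foreach/doRNG; the same flattened index could equally drive a single foreach loop. The names grid, draw and run_once are made up for illustration.

```r
library(parallel)

nr <- 2
nc <- 4

# One row per (i, j) pair; i varies fastest, so results fill a matrix by column
grid <- expand.grid(i = seq_len(nr), j = seq_len(nc))

# Work for a single flattened index k (same draw as in the nested loop)
draw <- function(k, g) rnorm(1, g$i[k] * 5, g$j[k])

run_once <- function(seed) {
  cl <- makeCluster(2)
  on.exit(stopCluster(cl))
  clusterSetRNGStream(cl, iseed = seed)  # reproducible per-worker streams
  vals <- parSapply(cl, seq_len(nrow(grid)), draw, g = grid)
  matrix(vals, nrow = nr, ncol = nc)     # m[i, j] is the draw for pair (i, j)
}

m1 <- run_once(1234)
m2 <- run_once(1234)
print(identical(m1, m2))
```

Reproducibility here relies on using the same number of workers in both runs, because each worker has its own stream.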

Random number

- https://cran.r-project.org/web/packages/doRNG/ and its vignette

- doRNG package example

- How to set seed for random simulations with foreach and doMC packages?

- Use clusterSetRNGStream() from the parallel package; see How-to go parallel in R – basics + tips

- http://www.stat.colostate.edu/~scharfh/CSP_parallel/handouts/foreach_handout.html#random-numbers

library("doRNG") # doRNG does not need to be loaded after doParallel

library("doParallel")

cl <- makeCluster(2)

registerDoParallel(cl)

registerDoRNG(seed = 1234) # works for a single loop

m1 <- foreach(i = 1:5, .combine = 'c') %dopar% rnorm(1)

registerDoRNG(seed = 1234)

m2 <- foreach(i = 1:5, .combine = 'c') %dopar% rnorm(1)

identical(m1, m2)

stopCluster(cl)

attr(m1, "rng") <- NULL # remove rng attribute

Export libraries and variables

clusterEvalQ(cl, {

library(biospear)

library(glmnet)

library(survival)

})

clusterExport(cl, c("var1", "foo2")) # varlist is a character vector of object names
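For context, here is a self-contained sketch of the same two calls in a complete session. The objects var1 and foo2 are toy definitions invented for the example, and splines stands in for packages such as glmnet so the code runs on a plain R installation.

```r
library(parallel)

var1 <- 10
foo2 <- function(x) x + var1   # needs var1 to exist on the worker too

cl <- makeCluster(2)

# Copy the named objects from the current environment to every worker
clusterExport(cl, c("var1", "foo2"), envir = environment())

# Attach a package on every worker (stand-in for biospear, glmnet, ...)
invisible(clusterEvalQ(cl, library(splines)))

res <- parSapply(cl, 1:4, function(x) foo2(x))
stopCluster(cl)

print(res)
# [1] 11 12 13 14
```

Without the clusterExport() call the workers would fail with an error that foo2 could not be found, since each worker starts with an empty global environment.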

snowfall package

Rmpi package

Some examples/tutorials

- http://trac.nchc.org.tw/grid/wiki/R-MPI_Install