Statistics: Difference between revisions

| (790 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

= Statisticians = | |||

* [https://en.wikipedia.org/wiki/Karl_Pearson Karl Pearson] (1857-1936): chi-square, p-value, PCA | * [https://en.wikipedia.org/wiki/Karl_Pearson Karl Pearson] (1857-1936): chi-square, p-value, PCA | ||

* [https://en.wikipedia.org/wiki/William_Sealy_Gosset William Sealy Gosset] (1876-1937): Student's t | * [https://en.wikipedia.org/wiki/William_Sealy_Gosset William Sealy Gosset] (1876-1937): Student's t | ||

| Line 5: | Line 5: | ||

* [https://en.wikipedia.org/wiki/Egon_Pearson Egon Pearson] (1895-1980): son of Karl Pearson | * [https://en.wikipedia.org/wiki/Egon_Pearson Egon Pearson] (1895-1980): son of Karl Pearson | ||

* [https://en.wikipedia.org/wiki/Jerzy_Neyman Jerzy Neyman] (1894-1981): type 1 error | * [https://en.wikipedia.org/wiki/Jerzy_Neyman Jerzy Neyman] (1894-1981): type 1 error | ||

* [https://www.youtube.com/playlist?list=PLt_pNkbycxqahVksaNnjz3M6759xHIZ-r Ten Statistical Ideas that Changed the World] | |||

== | == The most important statistical ideas of the past 50 years == | ||

[https://arxiv.org/pdf/2012.00174.pdf What are the most important statistical ideas of the past 50 years?], [https://www.tandfonline.com/doi/full/10.1080/01621459.2021.1938081 JASA 2021] | |||

== | = Some Advice = | ||

https:// | * [http://www.nature.com/collections/qghhqm Statistics for biologists] | ||

* [https://www.bmj.com/content/379/bmj-2022-072883 On the 12th Day of Christmas, a Statistician Sent to Me . . .], [https://tinyurl.com/yzpv2uu6 The abridged 1-page print version]. | |||

= Data = | |||

== | == Rules for initial data analysis == | ||

[https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1009819 Ten simple rules for initial data analysis] | |||

== Types of probabilities == | |||

See this [https://twitter.com/5_utr/status/1688730481171279872?s=20 illustration] | |||

== Exploratory Analysis (EDA) == | |||

* [https://soroosj.netlify.app/2020/09/26/penguins-cluster/ Kmeans Clustering of Penguins] | |||

* [https://cran.r-project.org/web/packages/skimr/index.html skimr] package | |||

** [https://github.com/agstn/dataxray dataxray] package - An interactive table interface (of skimr) for data summaries. [https://www.r-bloggers.com/2023/01/cut-your-eda-time-into-5-minutes-with-exploratory-dataxray-analysis-edxa/ Cut your EDA time into 5 minutes with Exploratory DataXray Analysis (EDXA)] | |||

* [https://medium.com/@jchen001/20-useful-r-packages-you-may-not-know-about-54d57fe604f3 20 Useful R Packages You May Not Know Of] | |||

[ | * [https://twitter.com/ItaiYanai/status/1612627199332433922 12 guidelines for data exploration and analysis with the right attitude for discovery] | ||

== Kurtosis == | |||

[https://finnstats.com/index.php/2021/06/08/kurtosis-in-r/ Kurtosis in R-What do you understand by Kurtosis?] | |||
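The moment-based definition can be sketched in base R. This is only an illustration: <code>kurtosis_moment</code> is a hypothetical helper (not a function from any package), and it assumes the biased moments that divide by n.

<pre>
# Kurtosis as the fourth central moment divided by the squared second central moment
kurtosis_moment <- function(x) {
  m  <- mean(x)
  m2 <- mean((x - m)^2)   # second central moment (divides by n, not n - 1)
  m4 <- mean((x - m)^4)   # fourth central moment
  m4 / m2^2
}

set.seed(1)
k_norm   <- kurtosis_moment(rnorm(1e5))      # close to 3 for normal data
k_excess <- k_norm - 3                       # excess kurtosis, close to 0
k_heavy  <- kurtosis_moment(rt(1e5, df = 5)) # heavier tails, larger than 3
</pre>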

== Phi coefficient == | |||

<ul> | |||

<li>[https://en.wikipedia.org/wiki/Phi_coefficient Phi coefficient]. Its value lies in [-1, 1]. A value of zero means that the two binary variables are neither positively nor negatively associated. | |||

* [https://finnstats.com/index.php/2021/07/24/how-to-calculate-phi-coefficient-in-r/ How to Calculate Phi Coefficient in R]. It is a measurement of the degree of association between two binary variables. | |||

<li>[https://en.wikipedia.org/wiki/Cram%C3%A9r%27s_V Cramér’s V]. Its value is [0, 1]. A value of zero indicates that there is no association between the two variables. This means that knowing the value of one variable does not help predict the value of the other variable. | |||

* [https://www.statology.org/interpret-cramers-v/ How to Interpret Cramer’s V (With Examples)] | |||

<pre> | |||

library(vcd) | |||

cramersV <- assocstats(table(x, y))$cramer | |||

</pre> | |||

</ul> | |||
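For two binary variables the phi coefficient can be computed directly from the 2x2 table. The sketch below uses simulated data (the variables <code>x</code> and <code>y</code> are made up for illustration) and checks two known identities: phi equals the Pearson correlation of the 0/1 codes, and its absolute value equals Cramér's V for a 2x2 table.

<pre>
set.seed(1)
x <- rbinom(200, 1, 0.5)
y <- ifelse(runif(200) < 0.7, x, 1 - x)   # y agrees with x about 70% of the time

tab <- table(x, y)
n00 <- as.numeric(tab[1, 1]); n01 <- as.numeric(tab[1, 2])
n10 <- as.numeric(tab[2, 1]); n11 <- as.numeric(tab[2, 2])

# phi = (n11*n00 - n10*n01) / sqrt(product of the four marginal totals)
phi <- (n11 * n00 - n10 * n01) / sqrt(prod(rowSums(tab)) * prod(colSums(tab)))

# Cramer's V from the uncorrected chi-square statistic; for a 2x2 table V = |phi|
chisq <- unname(chisq.test(tab, correct = FALSE)$statistic)
cramers_v <- sqrt(chisq / sum(tab))

c(phi = phi, pearson = cor(x, y), cramers_v = cramers_v)
</pre>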

== Coefficient of variation (CV) == | |||

[https://en.wikipedia.org/wiki/Coefficient_of_variation Coefficient of variation] | |||

Motivating the coefficient of variation (CV) for beginners: | |||

* Boss: Measure it 5 times. | |||

* You: 8, 8, 9, 6, and 8'' | |||

* B: SD=1. Make it three times more precise! | |||

* Y: 0.20 0.20 0.23 0.15 0.20 meters. SD=0.03! | |||

* B: All you did was change to meters! Report the CV instead! | |||

* Y: Damn it. | |||

<pre> | |||

R> sd(c(8, 8, 9, 6, 8)) | |||

[1] 1.095445 | |||

R> sd(c(8, 8, 9, 6, 8)*2.54/100) | |||

[1] 0.02782431 | |||

</pre> | |||
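Continuing the dialogue, a short sketch of why the CV is the better summary here: the SD changes with the unit, while SD/mean does not. The helper <code>cv</code> is defined only for this illustration.

<pre>
cv <- function(x) sd(x) / mean(x)   # coefficient of variation

inches <- c(8, 8, 9, 6, 8)
meters <- inches * 2.54 / 100

sd(inches); sd(meters)   # differ by the conversion factor
cv(inches); cv(meters)   # the same (about 0.14), whatever the unit
</pre>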

=== | == Agreement == | ||

[ | === Pitfalls === | ||

[https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5654219/ Common pitfalls in statistical analysis: Measures of agreement] 2017 | |||

=== | === Cohen's Kappa statistic (2-class) === | ||

* [https://en.wikipedia.org/wiki/Cohen%27s_kappa Cohen's kappa]. Cohen's kappa measures the agreement between two raters who each classify N items into C mutually exclusive categories. | |||

* [https://stats.stackexchange.com/a/437418 Fleiss kappa vs Cohen kappa]. | |||

* Cohen’s kappa is calculated based on the '''confusion matrix'''. However, in contrast to calculating overall accuracy, Cohen’s kappa takes '''imbalance''' in class distribution into account and can therefore be more complex to interpret. | |||

** [https://towardsdatascience.com/cohens-kappa-what-it-is-when-to-use-it-and-how-to-avoid-its-pitfalls-e42447962bbc Cohen’s Kappa: What it is, when to use it, and how to avoid its pitfalls] | |||

** [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC7019105/ Normalization Methods on Single-Cell RNA-seq Data: An Empirical Survey] Lytal 2020 | |||
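A minimal base-R sketch of computing Cohen's kappa from a confusion matrix, kappa = (p_o - p_e) / (1 - p_e). The function <code>cohen_kappa</code> and both tables are illustrative assumptions. The second table shows the effect of class imbalance: accuracy is 0.9 but kappa is slightly negative.

<pre>
# tab: square table of counts, rows = rater A, columns = rater B
cohen_kappa <- function(tab) {
  tab <- as.matrix(tab)
  n   <- sum(tab)
  p_o <- sum(diag(tab)) / n                       # observed agreement (accuracy)
  p_e <- sum(rowSums(tab) * colSums(tab)) / n^2   # agreement expected by chance
  (p_o - p_e) / (1 - p_e)
}

balanced <- matrix(c(20,  5,
                     10, 15), nrow = 2, byrow = TRUE)
k_balanced <- cohen_kappa(balanced)      # p_o = 0.7, p_e = 0.5, kappa = 0.4

imbalanced <- matrix(c(90, 5,
                        5, 0), nrow = 2, byrow = TRUE)
k_imbalanced <- cohen_kappa(imbalanced)  # p_o = 0.9, p_e = 0.905, kappa < 0
</pre>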

[[ | === Fleiss Kappa statistic (more than two raters) === | ||

* https://en.wikipedia.org/wiki/Fleiss%27_kappa | |||

* Fleiss' kappa (for more than two raters) can be used to test interrater reliability or to evaluate the repeatability and stability of models ('''robustness'''). It was used in [https://bmcbioinformatics.biomedcentral.com/articles/10.1186/s12859-020-03791-0 Cancer prognosis prediction] by Zheng 2020: '' "In our case, each trained model is designed to be a rater to assign the affiliation of each variable (gene or pathway). We conducted 20 replications of fivefold cross validation. As such, we had 100 trained models, or 100 raters in total, among which the agreement was measured by the Fleiss kappa..." '' | |||

* [https://www.datanovia.com/en/lessons/fleiss-kappa-in-r-for-multiple-categorical-variables/ Fleiss’ Kappa in R: For Multiple Categorical Variables]. '''irr::kappam.fleiss()''' was used. | |||

* Kappa statistic vs ICC | |||

** [https://stats.stackexchange.com/a/64997 ICC and Kappa totally disagree] | |||

** [https://www.sciencedirect.com/science/article/pii/S1556086415318876 Measures of Interrater Agreement] by Mandrekar 2011. '' "In certain clinical studies, agreement between the raters is assessed for a clinical outcome that is measured on a continuous scale. In such instances, intraclass correlation is calculated as a measure of agreement between the raters. Intraclass correlation is equivalent to weighted kappa under certain conditions, see the study by Fleiss and Cohen6, 7 for details." '' | |||

== | === ICC: intra-class correlation === | ||

[ | See [[ICC|ICC]] | ||

=== Compare two sets of p-values === | |||

https://stats.stackexchange.com/q/155407 | |||

== Computing different kinds of correlations == | |||

[https://github.com/easystats/correlation correlation] package | |||

=== Partial correlation === | |||

[https://en.wikipedia.org/wiki/Partial_correlation Partial correlation] | |||
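A small sketch of the partial correlation between x and y given a single control variable z, computed two ways: as the correlation of the residuals after regressing each variable on z, and with the closed-form expression in terms of pairwise correlations. The data are simulated for illustration only.

<pre>
set.seed(1)
n <- 500
z <- rnorm(n)
x <- z + rnorm(n)   # x and y are related only through z
y <- z + rnorm(n)

# 1. Correlate the residuals of x ~ z and y ~ z
pc_resid <- cor(resid(lm(x ~ z)), resid(lm(y ~ z)))

# 2. Closed form for a single control variable
r_xy <- cor(x, y); r_xz <- cor(x, z); r_yz <- cor(y, z)
pc_formula <- (r_xy - r_xz * r_yz) / sqrt((1 - r_xz^2) * (1 - r_yz^2))

c(marginal = r_xy, partial_resid = pc_resid, partial_formula = pc_formula)
</pre>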

== Association is not causation == | |||

[ | * [https://rafalab.github.io/dsbook/association-is-not-causation.html Association is not causation] | ||

* [https://www.statology.org/correlation-does-not-imply-causation-examples/ Correlation Does Not Imply Causation: 5 Real-World Examples] | |||

* Reasons Why Correlation Does Not Imply Causation | |||

** Third-Variable Problem: There may be an unseen third variable that is influencing both correlated variables. For example, ice cream sales and drowning incidents might be correlated because both increase during the summer, but neither causes the other. | |||

** Reverse Causation: The direction of cause and effect might be opposite to what we assume. For example, one might assume that stress causes poor health (which it can), but it’s also possible that poor health increases stress. | |||

** Coincidence: Sometimes, correlations occur purely by chance, especially if the sample size is small or if many variables are tested. | |||

** Complex Interactions: The relationship between variables can be influenced by a complex interplay of multiple factors that correlation alone cannot unpack. | |||

* Examples | |||

** Example of Correlation without Causation: There is a correlation between the number of fire trucks at a fire scene and the amount of damage caused by the fire. However, this does not mean that the fire trucks cause the damage; rather, larger fires both require more fire trucks and cause more damage. | |||

** Example of Potential Misinterpretation: Studies might find a correlation between coffee consumption and heart disease. Without further investigation, one might mistakenly conclude that drinking coffee causes heart disease. However, it could be that people who drink a lot of coffee are more likely to smoke, and smoking is the actual cause of heart disease. | |||
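The third-variable problem can be illustrated with a small simulation. All numbers below are invented: temperature drives both ice cream sales and drownings, and neither affects the other. The marginal association is strong, but it disappears once temperature is included in the model.

<pre>
set.seed(42)
n <- 1000
temperature <- rnorm(n, mean = 20, sd = 5)              # the unseen third variable
ice_cream   <- 2.0 * temperature + rnorm(n, sd = 5)
drownings   <- 0.5 * temperature + rnorm(n, sd = 2)

r_marginal <- cor(ice_cream, drownings)                  # clearly positive

# Slope of ice_cream without and with adjustment for temperature
b_naive <- unname(coef(lm(drownings ~ ice_cream))["ice_cream"])
b_adj   <- unname(coef(lm(drownings ~ ice_cream + temperature))["ice_cream"])

c(r_marginal = r_marginal, b_naive = b_naive, b_adjusted = b_adj)
</pre>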

== Predictive power score == | |||

* https://cran.r-project.org/web/packages/ppsr/index.html | |||

* [https://paulvanderlaken.com/2021/03/02/ppsr-live-on-cran/ ppsr live on CRAN!] | |||

== Transform sample values to their percentiles == | |||

<ul> | |||

<li>[https://stat.ethz.ch/R-manual/R-devel/library/stats/html/ecdf.html ecdf()] | |||

<li>[https://stat.ethz.ch/R-manual/R-devel/library/stats/html/quantile.html quantile()] | |||

* An [https://github.com/cran/TreatmentSelection/blob/master/R/evaluate.trtsel.R example] from the TreatmentSelection package where "type = 1" was used. | |||

{{Pre}} | |||

R> x <- c(1,2,3,4,4.5,6,7) | |||

R> Fn <- ecdf(x) | |||

R> Fn # a *function* | |||

Empirical CDF | |||

Call: ecdf(x) | |||

x[1:7] = 1, 2, 3, ..., 6, 7 | |||

R> Fn(x) # returns the percentiles for x | |||

[1] 0.1428571 0.2857143 0.4285714 0.5714286 0.7142857 0.8571429 1.0000000 | |||

R> diff(Fn(x)) | |||

[1] 0.1428571 0.1428571 0.1428571 0.1428571 0.1428571 0.1428571 | |||

R> quantile(x, Fn(x)) | |||

14.28571% 28.57143% 42.85714% 57.14286% 71.42857% 85.71429% 100% | |||

1.857143 2.714286 3.571429 4.214286 4.928571 6.142857 7.000000 | |||

R> quantile(x, Fn(x), type = 1) | |||

14.28571% 28.57143% 42.85714% 57.14286% 71.42857% 85.71429% 100% | |||

1.0 2.0 3.0 4.0 4.5 6.0 7.0 | |||

R> x <- c(2, 6, 8, 10, 20) | |||

R> Fn <- ecdf(x) | |||

R> Fn(x) | |||

[1] 0.2 0.4 0.6 0.8 1.0 | |||

</pre> | |||

<li>[https://www.thoughtco.com/what-is-a-percentile-3126238 Definition of a Percentile in Statistics and How to Calculate It] | |||

<li>https://en.wikipedia.org/wiki/Percentile | |||

<li>[https://www.statology.org/percentile-vs-quartile-vs-quantile/ Percentile vs. Quartile vs. Quantile: What’s the Difference?] | |||

* Percentiles: Range from 0 to 100. | |||

* Quartiles: Range from 0 to 4. | |||

* Quantiles: Range from any value to any other value. | |||

</ul> | |||

== Standardization == | |||

[https://davidlindelof.com/feature-standardization-considered-harmful/ Feature standardization considered harmful] | |||

== Eleven quick tips for finding research data == | |||

http://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1006038 | |||

== An archive of 1000+ datasets distributed with R == | |||

https://vincentarelbundock.github.io/Rdatasets/ | |||

== Data and global == | |||

* Age Structure from [https://ourworldindata.org/age-structure Our World in Data]. '''Our World in Data''' is a non-profit organization that provides free and open access to data and insights on how the world is changing across 115 topics. | |||

= | = Box(Box, whisker & outlier) = | ||

* [https://en.wikipedia.org/wiki/ | * https://en.wikipedia.org/wiki/Box_plot, [https://en.wikipedia.org/wiki/Box_plot#/media/File:Boxplot_vs_PDF.svg Boxplot and a probability density function (pdf) of a Normal Population] for a good annotation. | ||

* | * https://owi.usgs.gov/blog/boxplots/ (ggplot2 is used, graph-assisting explanation) | ||

* https://flowingdata.com/2008/02/15/how-to-read-and-use-a-box-and-whisker-plot/ | |||

* [https://en.wikipedia.org/wiki/Quartile Quartile] from Wikipedia. The quartiles returned from R are the same as the method defined by Method 2 described in Wikipedia. | |||

* [https://www.rforecology.com/post/2022-04-06-how-to-make-a-boxplot-in-r/ How to make a boxplot in R]. The '''whiskers''' of a box and whisker plot are the dotted lines outside of the grey box. These end at the minimum and maximum values of your data set, '''excluding outliers'''. | |||

= | An example for a graphical explanation. [[:File:Boxplot.svg]], [[:File:Geom boxplot.png]] | ||

{{Pre}} | |||

> x=c(0,4,15, 1, 6, 3, 20, 5, 8, 1, 3) | |||

> summary(x) | |||

Min. 1st Qu. Median Mean 3rd Qu. Max. | |||

0 2 4 6 7 20 | |||

> sort(x) | |||

[1] 0 1 1 3 3 4 5 6 8 15 20 | |||

> y <- boxplot(x, col = 'grey') | |||

> t(y$stats) | |||

[,1] [,2] [,3] [,4] [,5] | |||

[1,] 0 2 4 7 8 | |||

# the extreme of the lower whisker, the lower hinge, the median, | |||

# the upper hinge and the extreme of the upper whisker | |||

# https://en.wikipedia.org/wiki/Quartile#Example_1 | |||

> summary(c(6, 7, 15, 36, 39, 40, 41, 42, 43, 47, 49)) | |||

* http:// | Min. 1st Qu. Median Mean 3rd Qu. Max. | ||

6.00 25.50 40.00 33.18 42.50 49.00 | |||

</pre> | |||

* The lower and upper edges of the box (also called the lower/upper '''hinges''') are determined by the 1st and 3rd '''quartiles''' (2 and 7 in the above example). | |||

** 2 = median(c(0, 1, 1, 3, 3, 4)) = (1+3)/2 | |||

** 7 = median(c(4, 5, 6, 8, 15, 20)) = (6+8)/2 | |||

** IQR = 7 - 2 = 5 | |||

* The thick dark horizontal line is the '''median''' (4 in the example). | |||

* '''Outliers''' are defined by (the empty circles in the plot) | |||

** Observations larger than 3rd quartile + 1.5 * IQR (7+1.5*5=14.5) and | |||

** smaller than 1st quartile - 1.5 * IQR (2-1.5*5=-5.5). | |||

** Note that ''the cutoffs are not shown in the Box plot''. | |||

* Whisker (defined using the cutoffs used to define outliers) | |||

** The '''upper whisker''' is '''the largest observation not exceeding 3rd quartile + 1.5 * IQR''' (8 in this example). Note that the upper whisker is NOT defined as 3rd quartile + 1.5 * IQR. | |||

** The '''lower whisker''' is '''the smallest observation not below 1st quartile - 1.5 * IQR''' (0 in this example). Note that the lower whisker is NOT defined as 1st quartile - 1.5 * IQR. | |||

** See another example below where we can see the whiskers fall on observations. | |||

Note the [http://en.wikipedia.org/wiki/Box_plot wikipedia] lists several possible definitions of a whisker. R uses the 2nd method (Tukey boxplot) to define whiskers. | |||
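The numbers in the explanation above can be reproduced by hand and checked against <code>boxplot.stats()</code>, which <code>boxplot()</code> uses internally. This is a sketch for the same vector x; the variable names are chosen only for illustration.

<pre>
x <- c(0, 4, 15, 1, 6, 3, 20, 5, 8, 1, 3)

hinges    <- fivenum(x)[c(2, 4)]           # lower and upper hinge: 2 and 7
iqr_h     <- diff(hinges)                  # 5
upper_cut <- hinges[2] + 1.5 * iqr_h       # 14.5 (not drawn in the plot)
lower_cut <- hinges[1] - 1.5 * iqr_h       # -5.5 (not drawn in the plot)

upper_whisker <- max(x[x <= upper_cut])    # 8, an actual observation
lower_whisker <- min(x[x >= lower_cut])    # 0, an actual observation
outliers      <- x[x > upper_cut | x < lower_cut]   # 15 and 20

bs <- boxplot.stats(x)
bs$stats   # lower whisker, lower hinge, median, upper hinge, upper whisker
bs$out     # points drawn as empty circles
</pre>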

== | == Create boxplots from a list object == | ||

Normally we use a vector to create a single boxplot, or a formula on a data frame to create several boxplots. | |||

But we can also use [https://www.rdocumentation.org/packages/base/versions/3.5.1/topics/split split()] to create a list and then make boxplots. | |||
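A short sketch of the list approach, using the built-in <code>chickwts</code> data as an assumed example: <code>split()</code> returns a named list with one numeric vector per feed type, and <code>boxplot()</code> draws one box per list element.

<pre>
# One element per level of the grouping factor
weights_by_feed <- split(chickwts$weight, chickwts$feed)
str(weights_by_feed)

# boxplot() accepts the list directly; the list names become the axis labels
bp <- boxplot(weights_by_feed, xlab = "Feed type", ylab = "Weight (g)")
bp$names
</pre>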

<syntaxhighlight lang='rsplus'> | == Dot-box plot == | ||

* http://civilstat.com/2012/09/the-grammar-of-graphics-notes-on-first-reading/ | |||

* http://www.r-graph-gallery.com/89-box-and-scatter-plot-with-ggplot2/ | |||

* http://www.sthda.com/english/wiki/ggplot2-box-plot-quick-start-guide-r-software-and-data-visualization | |||

* [https://designdatadecisions.wordpress.com/2015/06/09/graphs-in-r-overlaying-data-summaries-in-dotplots/ Graphs in R – Overlaying Data Summaries in Dotplots]. Note that for some reason, the boxplot will cover the dots when we save the plot to an svg or a png file. So an alternative solution is to change the order <syntaxhighlight lang='rsplus'> | |||

par(cex.main=0.9,cex.lab=0.8,font.lab=2,cex.axis=0.8,font.axis=2,col.axis="grey50") | |||

boxplot(weight ~ feed, data = chickwts, range=0, whisklty = 0, staplelty = 0) | |||

par(new = TRUE) | |||

stripchart(weight ~ feed, data = chickwts, xlim=c(0.5,6.5), vertical=TRUE, method="stack", offset=0.8, pch=19, | |||

main = "Chicken weights after six weeks", xlab = "Feed Type", ylab = "Weight (g)") | |||

</syntaxhighlight> | |||

[[:File:Boxdot.svg]] | |||

== geom_boxplot == | |||

Note the geom_boxplot() does not create crossbars. See | |||

[https://community.rstudio.com/t/how-to-generate-a-boxplot-graph-with-whisker-by-ggplot/15619/4 How to generate a boxplot graph with whisker by ggplot] or [https://stackoverflow.com/a/13003038 this]. A trick is to add the '''stat_boxplot'''() function. | |||

Without jitter | |||

<pre> | |||

ggplot(dfbox, aes(x=sample, y=expr)) + | |||

geom_boxplot() + | |||

theme(axis.text.x=element_text(color = "black", angle=30, vjust=.8, | |||

hjust=0.8, size=6), | |||

plot.title = element_text(hjust = 0.5)) + | |||

labs(title="", y = "", x = "") | |||

</pre> | |||

With jitter | |||

<pre> | |||

ggplot(dfbox, aes(x=sample, y=expr)) + | |||

geom_boxplot(outlier.shape=NA) + #avoid plotting outliers twice | |||

geom_jitter(position=position_jitter(width=.2, height=0)) + | |||

theme(axis.text.x=element_text(color = "black", angle=30, vjust=.8, | |||

hjust=0.8, size=6), | |||

</ | plot.title = element_text(hjust = 0.5)) + | ||

labs(title="", y = "", x = "") | |||

</pre> | |||

[https://stackoverflow.com/a/21794246 Why geom_boxplot identify more outliers than base boxplot?] | |||

[https:// | |||

[https://stackoverflow.com/a/7267364 What do hjust and vjust do when making a plot using ggplot?] The values of hjust and vjust are only defined between 0 and 1: 0 means left-justified, 1 means right-justified. | |||

=== | == Other boxplots == | ||

[[:File:Lotsboxplot.png]] | |||

== | == Annotated boxplot == | ||

https://stackoverflow.com/a/38032281 | |||

= stem and leaf plot = | |||

[https://stat.ethz.ch/R-manual/R-devel/library/graphics/html/stem.html stem()]. See [http://www.r-tutor.com/elementary-statistics/quantitative-data/stem-and-leaf-plot R Tutorial]. | |||

Note that stem plot is useful when there are outliers. | |||

{{Pre}} | |||

> stem(x) | |||

The decimal point is 10 digit(s) to the right of the | | |||

0 | 00000000000000000000000000000000000000000000000000000000000000000000+419 | |||

1 | | |||

2 | | |||

3 | | |||

4 | | |||

5 | | |||

6 | | |||

7 | | |||

8 | | |||

9 | | |||

10 | | |||

11 | | |||

12 | 9 | |||

> max(x) | |||

[1] 129243100275 | |||

> max(x)/1e10 | |||

[1] 12.92431 | |||

> stem(y) | |||

The decimal point is at the | | |||

0 | 014478 | |||

1 | 0 | |||

2 | 1 | |||

3 | 9 | |||

4 | 8 | |||

> | > y | ||

[1] 3.8667356428 0.0001762708 0.7993462430 0.4181079732 0.9541728562 | |||

[6] 4.7791262101 0.6899313108 2.1381289177 0.0541736818 0.3868776083 | |||

> set.seed(1234) | |||

> z <- rnorm(10)*10 | |||

> z | |||

[1] -12.070657 2.774292 10.844412 -23.456977 4.291247 5.060559 | |||

[7] -5.747400 -5.466319 -5.644520 -8.900378 | |||

> stem(z) | |||

The decimal point is 1 digit(s) to the right of the | | |||

-2 | 3 | |||

-1 | 2 | |||

-0 | 9665 | |||

0 | 345 | |||

1 | 1 | |||

</pre> | |||

== | = Box-Cox transformation = | ||

[http:// | * [https://en.wikipedia.org/wiki/Power_transform#Box%E2%80%93Cox_transformation Power transformation] | ||

* [http://denishaine.wordpress.com/2013/03/11/veterinary-epidemiologic-research-linear-regression-part-3-box-cox-and-matrix-representation/ Finding transformation for normal distribution] | |||
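A hedged sketch of estimating the Box-Cox parameter with <code>boxcox()</code> from the MASS package (assumed to be installed; it ships with standard R distributions). The data are simulated so that log(y) is linear in x, so the estimated lambda should be near 0, which corresponds to the log transformation.

<pre>
library(MASS)

set.seed(1)
x <- runif(300, 1, 10)
y <- exp(0.3 * x + rnorm(300, sd = 0.4))   # log(y) is linear in x

# Profile log-likelihood over a grid of lambda values; no plot is drawn
bc <- boxcox(lm(y ~ x), lambda = seq(-2, 2, by = 0.05), plotit = FALSE)
lambda_hat <- bc$x[which.max(bc$y)]
lambda_hat
</pre>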

= | = CLT/Central limit theorem = | ||

https:// | [https://en.wikipedia.org/wiki/Central_limit_theorem Central limit theorem] | ||
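A quick simulation sketch of the theorem, with arbitrary illustrative settings: means of n = 50 draws from a skewed exponential distribution are standardized and compared with the standard normal.

<pre>
set.seed(1)
n <- 50; reps <- 10000; rate <- 2

# Each replicate is the mean of n exponential draws (mean 1/rate, sd 1/rate)
xbar <- replicate(reps, mean(rexp(n, rate)))
z    <- (xbar - 1 / rate) / ((1 / rate) / sqrt(n))

z_mean   <- mean(z)               # near 0
z_sd     <- sd(z)                 # near 1
coverage <- mean(abs(z) < 1.96)   # near 0.95

hist(z, breaks = 50, freq = FALSE, main = "Standardized sample means")
curve(dnorm(x), add = TRUE)
</pre>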

== | == Delta method == | ||

[[Delta|Delta]] | |||

== | == Sample median, x-percentiles == | ||

<ul> | |||

<li>[https://stats.stackexchange.com/questions/45124/central-limit-theorem-for-sample-medians Central limit theorem for sample medians] | |||

<li>In sufficiently large samples, the <math>q</math>-th sample quantile is approximately normally distributed, with mean equal to the <math>q</math>-th population quantile <math>x_q</math> and variance <math>q(1-q)/(n f_X(x_q)^2)</math>. | |||

Hence for the '''median''' (<math>q=1/2</math>), the variance in sufficiently large samples is approximately <math>1/(4 n f_X(m)^2)</math>, where <math>m</math> is the population median. | |||

=== | <li>For example, for an exponential distribution with rate parameter <math>\lambda >0</math>, the pdf is <math>f(x)=\lambda \exp(-\lambda x)</math>. The population median <math>m</math> is the value such that <math>F(m)=.5</math>, so <math>m=\log(2)/\lambda</math>. For large n, the '''sample median''' <math>\tilde{X}</math> is approximately normally distributed around the population median <math>m</math>, with asymptotic variance <math>Var(\tilde{X}) \approx \frac{1}{4nf(m)^2} </math>, where <math>f(m)</math> is the pdf evaluated at the median <math>m=\log(2)/\lambda</math>. For the exponential distribution with rate <math>\lambda</math>, we have <math>f(m) = \lambda e^{-\lambda m} = \lambda/2</math>. Substituting this into the expression for the variance gives <math>Var(\tilde{X}) \approx \frac{1}{n\lambda^2} </math>. | ||

<math>\ | |||

<li>For a normal distribution with mean <math>\mu</math> and variance <math>\sigma^2</math>, the '''sample median''' has a limiting normal distribution with mean <math>\mu</math> and variance <math> \frac{1}{4nf(m)^2} = \frac{\pi \sigma^2}{2n} </math>. | |||

< | |||

> | |||

} | |||

</ | |||

<li>Some references: | |||

* "Mathematical Statistics" by Jun Shao | |||

< | * "Probability and Statistics" by DeGroot and Schervish | ||

* "Order Statistics" by H.A. David and H.N. Nagaraja | |||

</ul> | |||
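The two asymptotic variances above can be checked by simulation. This is a sketch with arbitrarily chosen settings (n = 401, 5000 replicates, rate 2, sigma 3); the ratios of empirical to asymptotic variance should be close to 1.

<pre>
set.seed(1)
n <- 401; reps <- 5000

# Exponential(rate): asymptotic variance of the sample median is 1 / (n * rate^2)
rate    <- 2
med_exp <- replicate(reps, median(rexp(n, rate)))
ratio_exp <- var(med_exp) / (1 / (n * rate^2))

# Normal(mu, sigma^2): asymptotic variance is pi * sigma^2 / (2 * n)
sigma    <- 3
med_norm <- replicate(reps, median(rnorm(n, mean = 0, sd = sigma)))
ratio_norm <- var(med_norm) / (pi * sigma^2 / (2 * n))

c(mean_median_exp = mean(med_exp), population_median = log(2) / rate,
  ratio_exp = ratio_exp, ratio_norm = ratio_norm)
</pre>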

</ | |||

= the Holy Trinity (LRT, Wald, Score tests) = | |||

* https://en.wikipedia.org/wiki/Likelihood_function which includes '''profile likelihood''' and '''partial likelihood''' | |||

* [http://data.princeton.edu/wws509/notes/a1.pdf Review of the likelihood theory] | |||

# | * [http://www.tandfonline.com/doi/full/10.1080/00031305.2014.955212#abstract?ai=rv&mi=3be122&af=R The “Three Plus One” Likelihood-Based Test Statistics: Unified Geometrical and Graphical Interpretations] | ||

* [https://www.ncbi.nlm.nih.gov/pmc/articles/PMC5969114/ Variable selection – A review and recommendations for the practicing statistician] by Heinze et al 2018. | |||

** [https://en.wikipedia.org/wiki/Score_test '''Score test'''] is step-up. The score test is typically used in forward steps to screen covariates not currently included in a model for their ability to improve the model. | |||

** [https://en.wikipedia.org/wiki/Wald_test '''Wald test'''] is step-down. The Wald test starts at the full model. It evaluates the significance of a variable by comparing the ratio of its estimate to its standard error with an appropriate '''T distribution (for linear models)''' or '''standard normal distribution (for logistic or Cox regression)'''. | |||

** [https://en.wikipedia.org/wiki/Likelihood-ratio_test '''Likelihood ratio tests'''] provide the best control over nuisance parameters by maximizing the likelihood over them both in H0 model and H1 model. In particular, if several coefficients are being tested simultaneously, LRTs for model comparison are preferred over Wald or score tests. | |||

* R packages | |||

** [https://cran.r-project.org/web/packages/lmtest/ lmtest] package, [https://www.rdocumentation.org/packages/lmtest/versions/0.9-37/topics/waldtest waldtest()] and [https://www.rdocumentation.org/packages/lmtest/versions/0.9-37/topics/lrtest lrtest()]. [https://finnstats.com/index.php/2021/11/24/likelihood-ratio-test-in-r/ Likelihood Ratio Test in R with Example] | |||

** [https://cran.r-project.org/web/packages/aod/index.html aod] package. [https://www.statology.org/wald-test-in-r/ How to Perform a Wald Test in R] | |||

** [https://cran.r-project.org/web/packages/survey/index.html survey] package. regTermTest() | |||

** [https://cran.r-project.org/web/packages/nlWaldTest/index.html nlWaldTest] package. | |||

* [https://stats.stackexchange.com/a/503720 Likelihood ratio test multiplying by 2]. Hint: Approximate the log-likelihood for the '''true value of the parameter''' using the Taylor expansion around the '''MLE'''. | |||

* Wald statistic relationship to Z-statistic: The Wald statistic is essentially the square of the Z-statistic. In other words, a Wald statistic is computed as Z squared. However, '''there is a key difference in the denominator of these statistics: the Z-statistic uses the null standard error (calculated using the hypothesized value), while the Wald statistic uses the standard error evaluated at the maximum likelihood estimate'''. | |||

[https:// | ** [https://stats.stackexchange.com/questions/60074/wald-test-for-logistic-regression Wald test for logistic regression] | ||

** [https://stats.stackexchange.com/questions/152630/wald-test-and-z-test Wald Test and Z Test] | |||

** [https://stats.stackexchange.com/questions/609613/what-is-the-difference-between-z-value-and-the-wald-statistic-in-the-summary-fun What is the difference between z-value and the Wald statistic in the summary function of the Cox Proportional Hazards model of the “survival” package?] | |||
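The three tests can be compared on one coefficient of a logistic regression using only base R. This is a sketch on simulated data (the model and coefficients are made up). The Wald statistic is the squared z value from <code>summary()</code>, the LRT statistic is the drop in deviance, and the score (Rao) test is requested through <code>anova()</code>.

<pre>
set.seed(1)
n <- 200
x <- rnorm(n)
y <- rbinom(n, 1, plogis(-0.5 + 0.8 * x))

fit0 <- glm(y ~ 1, family = binomial)   # H0 model
fit1 <- glm(y ~ x, family = binomial)   # H1 model

# Wald: estimate / SE evaluated at the MLE; the chi-square version is z^2
ctab       <- coef(summary(fit1))
z_wald     <- ctab["x", "Estimate"] / ctab["x", "Std. Error"]
wald_chisq <- z_wald^2
p_wald     <- pchisq(wald_chisq, df = 1, lower.tail = FALSE)

# Likelihood ratio: difference in deviance between the two fits
lrt   <- deviance(fit0) - deviance(fit1)
p_lrt <- pchisq(lrt, df = 1, lower.tail = FALSE)

# Score test, evaluated at the H0 fit
score_tab <- anova(fit0, fit1, test = "Rao")

c(wald = wald_chisq, lrt = lrt)
score_tab
</pre>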

= | = Don't invert that matrix = | ||

* http://www. | * http://www.johndcook.com/blog/2010/01/19/dont-invert-that-matrix/ | ||

* http:// | * http://civilstat.com/2015/07/dont-invert-that-matrix-why-and-how/ | ||
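A minimal sketch of the advice in the two posts: to compute A^{-1} b, solve the linear system instead of forming the inverse. The matrix below is a random symmetric positive definite matrix made up for illustration, so a Cholesky-based solve is also shown.

<pre>
set.seed(1)
p <- 200
A <- crossprod(matrix(rnorm(p * p), p)) + diag(p)   # symmetric positive definite
b <- rnorm(p)

x_inv   <- drop(solve(A) %*% b)   # explicit inverse: slower and less accurate in general
x_solve <- solve(A, b)            # solves A x = b directly

# For positive definite A = R'R: solve R'w = b, then R x = w
R      <- chol(A)
x_chol <- backsolve(R, forwardsolve(t(R), b))

max(abs(x_solve - x_inv)); max(abs(x_solve - x_chol))
</pre>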

== Different matrix decompositions/factorizations == | |||

* [https://en.wikipedia.org/wiki/QR_decomposition QR decomposition], [https://www.rdocumentation.org/packages/base/versions/3.5.1/topics/qr qr()] | |||

* [https://en.wikipedia.org/wiki/LU_decomposition LU decomposition], [https://www.rdocumentation.org/packages/Matrix/versions/1.2-14/topics/lu lu()] from the 'Matrix' package | |||

* [https://en.wikipedia.org/wiki/Cholesky_decomposition Cholesky decomposition], [https://www.rdocumentation.org/packages/base/versions/3.5.1/topics/chol chol()] | |||

* [https://en.wikipedia.org/wiki/Singular-value_decomposition Singular value decomposition], [https://www.rdocumentation.org/packages/base/versions/3.5.1/topics/svd svd()] | |||

{{Pre}} | |||

set.seed(1234) | |||

x <- matrix(rnorm(10*2), nrow = 10) | |||

cmat <- cov(x); cmat | |||

# [,1] [,2] | |||

# [1,] 0.9915928 -0.1862983 | |||

# [2,] -0.1862983 1.1392095 | |||

# cholesky decom | |||

* [ | d1 <- chol(cmat) | ||

t(d1) %*% d1 # equal to cmat | |||

d1 # upper triangle | |||

# [,1] [,2] | |||

# [1,] 0.9957875 -0.1870864 | |||

# [2,] 0.0000000 1.0508131 | |||

# svd | |||

d2 <- svd(cmat) | |||

d2$u %*% diag(d2$d) %*% t(d2$v) # equal to cmat | |||

d2$u %*% diag(sqrt(d2$d)) | |||

# [,1] [,2] | |||

# [1,] -0.6322816 0.7692937 | |||

# [2,] 0.9305953 0.5226872 | |||

</pre> | |||


== | = Model Estimation with R = | ||

[https://m-clark.github.io/models-by-example/ Model Estimation by Example] Demonstrations with R. Michael Clark | |||

= Regression = | |||

[[Regression|Regression]] | |||

= Non- and semi-parametric regression = | |||

* [https://mathewanalytics.com/2018/03/05/semiparametric-regression-in-r/ Semiparametric Regression in R] | |||

* https://socialsciences.mcmaster.ca/jfox/Courses/Oxford-2005/R-nonparametric-regression.html | |||

== Mean squared error == | |||

* [https://www.statworx.com/de/blog/simulating-the-bias-variance-tradeoff-in-r/ Simulating the bias-variance tradeoff in R] | |||

* [https://alemorales.info/post/variance-estimators/ Estimating variance: should I use n or n - 1? The answer is not what you think] | |||

== | == Splines == | ||

* https://en.wikipedia.org/wiki/B-spline | |||

* [https://www.r-bloggers.com/cubic-and-smoothing-splines-in-r/ Cubic and Smoothing Splines in R]. '''bs()''' is for cubic spline and '''smooth.spline()''' is for smoothing spline. | |||

* [https://www.rdatagen.net/post/generating-non-linear-data-using-b-splines/ Can we use B-splines to generate non-linear data?] | |||

* [https://stats.stackexchange.com/questions/29400/spline-fitting-in-r-how-to-force-passing-two-data-points How to force passing two data points?] ([https://cran.r-project.org/web/packages/cobs/index.html cobs] package) | |||

* https://www.rdocumentation.org/packages/cobs/versions/1.3-3/topics/cobs | |||
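A short sketch contrasting the two functions mentioned above on simulated data (a noisy sine curve, chosen only for illustration): <code>bs()</code> from the splines package builds a cubic B-spline basis for use inside <code>lm()</code>, while <code>smooth.spline()</code> fits a smoothing spline and picks the amount of smoothing itself.

<pre>
library(splines)

set.seed(1)
x <- seq(0, 10, length.out = 200)
y <- sin(x) + rnorm(200, sd = 0.3)

fit_bs <- lm(y ~ bs(x, df = 6))   # regression spline, cubic by default
fit_ss <- smooth.spline(x, y)     # smoothing spline, penalty chosen by GCV

pred_bs <- fitted(fit_bs)
pred_ss <- predict(fit_ss, x)$y

# Root mean squared error against the true curve
rmse_bs <- sqrt(mean((pred_bs - sin(x))^2))
rmse_ss <- sqrt(mean((pred_ss - sin(x))^2))

plot(x, y, col = "grey")
lines(x, pred_bs, col = "blue")
lines(x, pred_ss, col = "red")
</pre>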

== | == k-Nearest neighbor regression == | ||

[https:// | * [https://www.rdocumentation.org/packages/class/versions/7.3-21/topics/knn class::knn()] | ||

* k-NN regression in practice: boundary problem, discontinuities problem. | |||

* Weighted k-NN regression: the weight should be small when the distance is large. A common choice is weight = kernel(xi, x). | |||
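Note that <code>class::knn()</code> performs classification. A k-NN regression for a single numeric predictor can be sketched in a few lines of base R. <code>knn_reg</code> is a hypothetical helper written for this note, and inverse-distance weights are just one possible choice of weight.

<pre>
# Predict at each query point by averaging the responses of the k nearest training points
knn_reg <- function(x_train, y_train, x_query, k = 5, weighted = FALSE) {
  sapply(x_query, function(xq) {
    d   <- abs(x_train - xq)
    idx <- order(d)[seq_len(k)]          # indices of the k nearest neighbours
    if (!weighted) return(mean(y_train[idx]))
    w <- 1 / (d[idx] + 1e-8)             # small weight when the distance is large
    sum(w * y_train[idx]) / sum(w)
  })
}

set.seed(1)
x_train <- seq(0, 1, length.out = 20)
y_train <- sin(2 * pi * x_train) + rnorm(20, sd = 0.2)
x_query <- seq(0, 1, length.out = 101)

fit_plain    <- knn_reg(x_train, y_train, x_query, k = 3)                  # step-like, discontinuous fit
fit_weighted <- knn_reg(x_train, y_train, x_query, k = 3, weighted = TRUE)
</pre>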

== Partial Least Squares (PLS) = | == Kernel regression == | ||

* Instead of weighting NN, weight ALL points. Nadaraya-Watson kernel weighted average: | |||

<math>\hat{y}_q = \sum c_{qi} y_i/\sum c_{qi} = \frac{\sum \text{Kernel}_\lambda(\text{distance}(x_i, x_q))*y_i}{\sum \text{Kernel}_\lambda(\text{distance}(x_i, x_q))} </math>. | |||

* The bandwidth <math>\lambda</math> controls the bias-variance trade-off: a small <math>\lambda</math> over-fits, while a large <math>\lambda</math> gives an over-smoothed fit. Choose <math>\lambda</math> by '''cross-validation'''. | |||

* Kernel regression leads to locally constant fit. | |||

* Issues with high dimensions, data scarcity and computational complexity. | |||
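The Nadaraya-Watson estimator above can be sketched directly in base R. The function <code>nw_kernel</code> is hypothetical, and a Gaussian kernel with a one-dimensional predictor is assumed. Three bandwidths show the range from a wiggly fit to a nearly constant one.

<pre>
# Kernel-weighted average of ALL training responses at each query point
nw_kernel <- function(x_train, y_train, x_query, lambda) {
  sapply(x_query, function(xq) {
    w <- dnorm((x_train - xq) / lambda)   # Gaussian kernel weights
    sum(w * y_train) / sum(w)
  })
}

set.seed(1)
x_train <- sort(runif(100, 0, 10))
y_train <- sin(x_train) + rnorm(100, sd = 0.3)
x_query <- seq(1, 9, length.out = 81)

fit_small <- nw_kernel(x_train, y_train, x_query, lambda = 0.1)   # wiggly, tends to over-fit
fit_mid   <- nw_kernel(x_train, y_train, x_query, lambda = 0.5)
fit_large <- nw_kernel(x_train, y_train, x_query, lambda = 1e6)   # essentially mean(y_train)
</pre>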

= Principal component analysis = | |||

See [[PCA|PCA]]. | |||

= Partial Least Squares (PLS) = | |||

* [https://twitter.com/slavov_n/status/1642570040737402881 Accounting for measurement errors with total least squares]. Demonstrate the bias of the PLS. | |||

* https://en.wikipedia.org/wiki/Partial_least_squares_regression. The general underlying model of multivariate PLS is | * https://en.wikipedia.org/wiki/Partial_least_squares_regression. The general underlying model of multivariate PLS is | ||

:<math>X = T P^\mathrm{T} + E</math> | :<math>X = T P^\mathrm{T} + E</math> | ||

:<math>Y = U Q^\mathrm{T} + F</math> | :<math>Y = U Q^\mathrm{T} + F</math> | ||

where {{mvar|X}} is an <math>n \times m</math> matrix of predictors, {{mvar|Y}} is an <math>n \times p</math> matrix of responses; {{mvar|T}} and {{mvar|U}} are <math>n \times l</math> matrices that are, respectively, '''projections''' of {{mvar|X}} (the X '''score''', ''component'' or '''factor matrix''') and projections of {{mvar|Y}} (the ''Y scores''); {{mvar|P}} and {{mvar|Q}} are, respectively, <math>m \times l</math> and <math>p \times l</math> orthogonal '''loading matrices'''; and matrices {{mvar|E}} and {{mvar|F}} are the error terms, assumed to be independent and identically distributed random normal variables. The decompositions of {{mvar|X}} and {{mvar|Y}} are made so as to maximise the '''covariance''' between {{mvar|T}} and {{mvar|U}} (projection matrices). | :where {{mvar|X}} is an <math>n \times m</math> matrix of predictors, {{mvar|Y}} is an <math>n \times p</math> matrix of responses; {{mvar|T}} and {{mvar|U}} are <math>n \times l</math> matrices that are, respectively, '''projections''' of {{mvar|X}} (the X '''score''', ''component'' or '''factor matrix''') and projections of {{mvar|Y}} (the ''Y scores''); {{mvar|P}} and {{mvar|Q}} are, respectively, <math>m \times l</math> and <math>p \times l</math> orthogonal '''loading matrices'''; and matrices {{mvar|E}} and {{mvar|F}} are the error terms, assumed to be independent and identically distributed random normal variables. The decompositions of {{mvar|X}} and {{mvar|Y}} are made so as to maximise the '''covariance''' between {{mvar|T}} and {{mvar|U}} (projection matrices). | ||

* [https://www.gokhanciflikli.com/post/learning-brexit/ Supervised vs. Unsupervised Learning: Exploring Brexit with PLS and PCA]
* [https://cran.r-project.org/web/packages/pls/index.html pls] R package
* [https://cran.r-project.org/web/packages/plsRcox/index.html plsRcox] R package (archived). See [[R#install_a_tar.gz_.28e.g._an_archived_package.29_from_a_local_directory|here]] for the installation.
* [https://web.stanford.edu/~hastie/ElemStatLearn//printings/ESLII_print12.pdf#page=101 PLS, PCR (principal components regression) and ridge regression tend to behave similarly]. Ridge regression may be preferred because it shrinks smoothly, rather than in discrete steps.
* [https://bmcbioinformatics.biomedcentral.com/articles/10.1186/s12859-019-3310-7 So you think you can PLS-DA?]. Compare PLS with PCA.
* [https://cran.r-project.org/web/packages/plsRglm/index.html plsRglm] package - Partial Least Squares Regression for Generalized Linear Models

= High dimension =

* [https://projecteuclid.org/euclid.aos/1547197242 Partial least squares prediction in high-dimensional regression] Cook and Forzani, 2019
* [https://arxiv.org/pdf/1912.06667v1.pdf#:~:text=Patient-derived High dimensional precision medicine from patient-derived xenografts] JASA 2020

== dimRed package ==

[https://cran.r-project.org/web/packages/dimRed/index.html dimRed] package

== Feature selection ==

* https://en.wikipedia.org/wiki/Feature_selection
* [https://seth-dobson.github.io/a-feature-preprocessing-workflow/ A Feature Preprocessing Workflow]
* [https://doi.org/10.1080/01621459.2020.1783274 Model-Free Feature Screening and FDR Control With Knockoff Features] and [https://arxiv.org/pdf/1908.06597v2.pdf pdf]. The proposed method is based on the '''projection correlation''' which measures the dependence between two random vectors.

== Goodness-of-fit ==

* [https://onlinelibrary.wiley.com/doi/10.1002/sim.8968 A simple yet powerful test for assessing goodness‐of‐fit of high‐dimensional linear models] Zhang 2021
* [https://www.tandfonline.com/doi/full/10.1080/02664763.2021.2017413 Pearson's goodness-of-fit tests for sparse distributions] Chang 2021

= [https://en.wikipedia.org/wiki/Independent_component_analysis Independent component analysis] =

ICA is another dimensionality reduction method.

== ICA vs PCA ==

== ICA vs FA ==

== Robust independent component analysis ==

[https://bmcbioinformatics.biomedcentral.com/articles/10.1186/s12859-022-05043-9 robustica: customizable robust independent component analysis] 2022

= Canonical correlation analysis =

* https://en.wikipedia.org/wiki/Canonical_correlation. If we have two vectors ''X'' = (''X''<sub>1</sub>, ..., ''X''<sub>''n''</sub>) and ''Y'' = (''Y''<sub>1</sub>, ..., ''Y''<sub>''m''</sub>) of random variables, and there are correlations among the variables, then canonical-correlation analysis will find linear combinations of ''X'' and ''Y'' which have maximum correlation with each other.
* [https://stats.idre.ucla.edu/r/dae/canonical-correlation-analysis/ R data analysis examples]
* [https://online.stat.psu.edu/stat505/book/export/html/682 Canonical Correlation Analysis] from psu.edu
* see the [https://www.rdocumentation.org/packages/stats/versions/3.6.2/topics/cancor cancor] function in base R; canocor in the [https://cran.r-project.org/web/packages/calibrate/ calibrate] package; and the [https://cran.r-project.org/web/packages/CCA/index.html CCA] package.
* [https://cmdlinetips.com/2020/12/canonical-correlation-analysis-in-r/ Introduction to Canonical Correlation Analysis (CCA) in R]
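A small sketch of the idea with the base R cancor() function follows. The simulated data (a shared latent variable z hidden in one column of each block) and all variable names are assumptions made for illustration.

<syntaxhighlight lang='rsplus'>
set.seed(1)
n <- 200
z <- rnorm(n)                                   # shared latent signal (simulated)
X <- cbind(z + rnorm(n), rnorm(n), rnorm(n))    # first block, 3 variables
Y <- cbind(rnorm(n), z + rnorm(n))              # second block, 2 variables

cc <- cancor(X, Y)

# first pair of canonical variates: linear combinations of the centred columns
u <- scale(X, center = cc$xcenter, scale = FALSE) %*% cc$xcoef[, 1]
v <- scale(Y, center = cc$ycenter, scale = FALSE) %*% cc$ycoef[, 1]

rho1 <- cc$cor[1]          # first canonical correlation
c(canonical = rho1, variates = cor(u, v)[1, 1], best_pairwise = max(abs(cor(X, Y))))
</syntaxhighlight>

The first canonical correlation is at least as large as any single pairwise correlation between a column of X and a column of Y, since each single column is itself a linear combination.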

== Non-negative CCA ==

* https://cran.r-project.org/web/packages/nscancor/
* [https://www.mdpi.com/2076-3417/12/13/6596/html Pan-Cancer Analysis for Immune Cell Infiltration and Mutational Signatures Using Non-Negative Canonical Correlation Analysis] 2022. The non-negative constraints force all input elements and coefficients to be zero or positive.

= [https://en.wikipedia.org/wiki/Correspondence_analysis Correspondence analysis] =

* [https://en.wikipedia.org/wiki/Principal_component_analysis#Correspondence_analysis Relationship of PCA and Correspondence analysis]
* [http://www.sthda.com/english/articles/31-principal-component-methods-in-r-practical-guide/113-ca-correspondence-analysis-in-r-essentials/ CA - Correspondence Analysis in R: Essentials]
* [https://www.displayr.com/math-correspondence-analysis/ Understanding the Math of Correspondence Analysis], [https://www.displayr.com/interpret-correspondence-analysis-plots-probably-isnt-way-think/ How to Interpret Correspondence Analysis Plots]
* https://francoishusson.wordpress.com/2017/07/18/multiple-correspondence-analysis-with-factominer/ and the book [https://www.crcpress.com/Exploratory-Multivariate-Analysis-by-Example-Using-R-Second-Edition/Husson-Le-Pages/p/book/9781138196346?tab=rev Exploratory Multivariate Analysis by Example Using R]

= Non-negative matrix factorization =

[https://bmcbioinformatics.biomedcentral.com/articles/10.1186/s12859-019-3312-5 Optimization and expansion of non-negative matrix factorization]

= Nonlinear dimension reduction =

[https://www.biorxiv.org/content/10.1101/2021.08.25.457696v1 The Specious Art of Single-Cell Genomics] by Chari 2021

== t-SNE ==

'''t-Distributed Stochastic Neighbor Embedding''' (t-SNE) is a technique for dimensionality reduction that is particularly well suited for the visualization of high-dimensional datasets.

* [https://en.wikipedia.org/wiki/Nonlinear_dimensionality_reduction#t-distributed_stochastic_neighbor_embedding Wikipedia]
* [https://youtu.be/NEaUSP4YerM StatQuest: t-SNE, Clearly Explained]
* https://lvdmaaten.github.io/tsne/
* [https://rpubs.com/Saskia/520216 Workshop: Dimension reduction with R] Saskia Freytag
* Application to [http://amp.pharm.mssm.edu/archs4/data.html ARCHS4]
* [https://www.codeproject.com/tips/788739/visualization-of-high-dimensional-data-using-t-sne Visualization of High Dimensional Data using t-SNE with R]
* http://blog.thegrandlocus.com/2018/08/a-tutorial-on-t-sne-1
* [https://intobioinformatics.wordpress.com/2019/05/30/quick-and-easy-t-sne-analysis-in-r/ Quick and easy t-SNE analysis in R]. [https://bioconductor.org/packages/devel/bioc/html/M3C.html M3C] package was used.
* [https://link.springer.com/protocol/10.1007%2F978-1-0716-0301-7_8 Visualization of Single Cell RNA-Seq Data Using t-SNE in R]. [https://cran.r-project.org/web/packages/Seurat/index.html Seurat] (both Seurat and M3C call [https://cran.r-project.org/web/packages/Rtsne/index.html Rtsne]) package was used.
* [https://github.com/berenslab/rna-seq-tsne The art of using t-SNE for single-cell transcriptomics]
* [https://www.frontiersin.org/articles/10.3389/fgene.2020.00041/full Normalization Methods on Single-Cell RNA-seq Data: An Empirical Survey]
* [https://github.com/jdonaldson/rtsne An R package for t-SNE (pure R implementation)]
* [https://pair-code.github.io/understanding-umap/ Understanding UMAP] by Andy Coenen, Adam Pearce. Note that the Fashion MNIST data was used to explain what global structure means: similar categories (such as sandal, sneaker, and ankle boot) are placed close to each other.
*# Hyperparameters really matter
*# Cluster sizes in a UMAP plot mean nothing
*# Distances between clusters might not mean anything
*# Random noise doesn’t always look random.
*# You may need more than one plot

=== Perplexity parameter ===

* Balance attention between local and global aspects of the dataset
* A guess about the number of close neighbors
* In a real setting it is important to try different values
* Must be lower than the number of input records
* [https://jef.works/tsne-online/ Interactive t-SNE ? Online]. We see in addition to '''perplexity''' there are '''learning rate''' and '''max iterations'''.
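The "number of close neighbors" reading can be made concrete with a hedged base R sketch. It uses the standard definition of perplexity as 2 raised to the Shannon entropy of the Gaussian neighbor distribution around one point. The helper perplexity_of(), the simulated points and the chosen bandwidths are assumptions for illustration; this is not how Rtsne is called.

<syntaxhighlight lang='rsplus'>
# Perplexity of the neighbor distribution of one point, given the squared
# distances d2 to all other points and a Gaussian bandwidth sigma
perplexity_of <- function(d2, sigma) {
  p <- exp(-(d2 - min(d2)) / (2 * sigma^2))  # subtract min(d2) for numerical stability
  p <- p / sum(p)
  p <- p[p > 0]                              # treat 0 * log(0) as 0
  2^(-sum(p * log2(p)))
}

set.seed(1)
pts <- matrix(rnorm(200), ncol = 2)          # 100 simulated 2-D points
d2  <- as.matrix(dist(pts))[1, -1]^2         # squared distances from point 1

sigmas <- c(0.05, 0.2, 1, 5)
perp <- sapply(sigmas, function(s) perplexity_of(d2, s))
round(perp, 2)
</syntaxhighlight>

A wider bandwidth spreads the weight over more neighbors, so the perplexity grows with sigma but can never exceed the number of other points (99 here). t-SNE works in the opposite direction: the user fixes the perplexity and a bandwidth is found for each point.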

=== Classifying digits with t-SNE: MNIST data ===

Below is an example from datacamp [https://learn.datacamp.com/courses/advanced-dimensionality-reduction-in-r Advanced Dimensionality Reduction in R].

The mnist_sample data is very small (200 x 785). [http://varianceexplained.org/r/digit-eda/ Exploring handwritten digit classification: a tidy analysis of the MNIST dataset] uses a much larger data set with 60k records (60000 x 785).

<ol>
<li>Generating t-SNE features
<pre>
library(readr)
library(dplyr)

# 104MB
mnist_raw <- read_csv("https://pjreddie.com/media/files/mnist_train.csv", col_names = FALSE)
mnist_10k <- mnist_raw[1:10000, ]
colnames(mnist_10k) <- c("label", paste0("pixel", 0:783))

library(ggplot2)
library(Rtsne)

tsne <- Rtsne(mnist_10k[, -1], perplexity = 5)
tsne_plot <- data.frame(tsne_x = tsne$Y[1:5000,1],
                        tsne_y = tsne$Y[1:5000,2],
                        digit = as.factor(mnist_10k[1:5000,]$label))

# visualize obtained embedding
ggplot(tsne_plot, aes(x= tsne_x, y = tsne_y, color = digit)) +
  ggtitle("MNIST embedding of the first 5K digits") +
  geom_text(aes(label = digit)) + theme(legend.position= "none")
</pre></li>

<li>Computing centroids
<pre>
library(data.table)

# Get t-SNE coordinates
centroids <- as.data.table(tsne$Y[1:5000,])
setnames(centroids, c("X", "Y"))
centroids[, label := as.factor(mnist_10k[1:5000,]$label)]

# Compute centroids
centroids[, mean_X := mean(X), by = label]
centroids[, mean_Y := mean(Y), by = label]
centroids <- unique(centroids, by = "label")

# visualize centroids
ggplot(centroids, aes(x= mean_X, y = mean_Y, color = label)) +
  ggtitle("Centroids coordinates") + geom_text(aes(label = label)) +
  theme(legend.position = "none")
</pre></li>

<li>Classifying new digits
<pre>
# Get new examples of digits 4 and 9
distances <- as.data.table(tsne$Y[5001:10000,])
setnames(distances, c("X" , "Y"))
distances[, label := mnist_10k[5001:10000,]$label]
distances <- distances[label == 4 | label == 9]

# Compute the Euclidean distance to the centroid of digit 4
# (each coordinate difference is squared before summing)
distances[, dist_4 := sqrt((X - centroids[label==4,]$mean_X)^2 +
                           (Y - centroids[label==4,]$mean_Y)^2)]
dim(distances)
# [1] 928   4
distances[1:3, ]
# Sample output from the original run. It was produced without a seed and with
# the coordinate differences summed before squaring, so the values will differ.
#            X        Y label   dist_4
# 1: -15.90171 27.62270     4 1.494578
# 2: -33.66668 35.69753     9 8.195562
# 3: -16.55037 18.64792     9 8.128860

# Plot distance to each centroid
ggplot(distances, aes(x=dist_4, fill = as.factor(label))) +
  geom_histogram(binwidth=5, alpha=.5, position="identity", show.legend = F)
</pre></li>
</ol>
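The centroid idea in the three steps above can be sketched without downloading MNIST. The two simulated 2-D clusters stand in for t-SNE coordinates of two digits, and the helper nearest_centroid() is a hypothetical name introduced here. It assigns each new point to the label of the closest centroid by Euclidean distance.

<syntaxhighlight lang='rsplus'>
set.seed(1)
# two simulated 2-D clusters standing in for the embedding of digits 4 and 9
train <- data.frame(X = c(rnorm(100, -3), rnorm(100, 3)),
                    Y = c(rnorm(100, -3), rnorm(100, 3)),
                    label = rep(c("4", "9"), each = 100))

# one centroid (mean X, mean Y) per label
centroids <- aggregate(cbind(X, Y) ~ label, data = train, FUN = mean)

# assign each new point to the label of the nearest centroid
nearest_centroid <- function(newX, newY, centroids) {
  d <- sapply(seq_len(nrow(centroids)), function(k)
    sqrt((newX - centroids$X[k])^2 + (newY - centroids$Y[k])^2))
  d <- matrix(d, ncol = nrow(centroids))   # keep a matrix even for one point
  as.character(centroids$label)[max.col(-d, ties.method = "first")]
}

test <- data.frame(X = c(rnorm(50, -3), rnorm(50, 3)),
                   Y = c(rnorm(50, -3), rnorm(50, 3)),
                   label = rep(c("4", "9"), each = 50))
pred <- nearest_centroid(test$X, test$Y, centroids)
accuracy <- mean(pred == test$label)
accuracy
</syntaxhighlight>

With real t-SNE output the clusters are not this clean, and the embedding has to be recomputed whenever new points are added, so this is only a sketch of the distance step.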

=== Fashion MNIST data ===

* fashion_mnist is only 500x785
* [https://tensorflow.rstudio.com/reference/keras/dataset_fashion_mnist/ keras] has 60k x 785. Miniconda is required when we want to use the package.

=== tSNE vs PCA ===

* [https://medium.com/analytics-vidhya/pca-vs-t-sne-17bcd882bf3d PCA vs t-SNE: which one should you use for visualization]. This uses MNIST dataset for a comparison.
* [https://www.subioplatform.com/info_casestudy/338/why-pca-on-bulk-rna-seq-and-t-sne-on-scrna-seq Why PCA on bulk RNA-Seq and t-SNE on scRNA-Seq?]
* [https://support.bioconductor.org/p/97594/ What to use: PCA or tSNE dimension reduction in DESeq2 analysis?] (with discussion)
* [https://stats.stackexchange.com/a/249520 Are there cases where PCA is more suitable than t-SNE?]
* [https://stats.stackexchange.com/a/502392 How to interpret data not separated by PCA but by T-sne/UMAP]
* [https://towardsdatascience.com/dimensionality-reduction-for-data-visualization-pca-vs-tsne-vs-umap-be4aa7b1cb29 Dimensionality Reduction for Data Visualization: PCA vs TSNE vs UMAP vs LDA]

=== Two groups example ===

* [http://www.bioconductor.org/packages/release/bioc/vignettes/splatter/inst/doc/splatter.html#61_Simulating_groups Simulating groups]

<pre>
suppressPackageStartupMessages({
  library(splatter)
  library(scater)
})

sim.groups <- splatSimulate(group.prob = c(0.5, 0.5), method = "groups",
                            verbose = FALSE)
sim.groups <- logNormCounts(sim.groups)
sim.groups <- runPCA(sim.groups)
plotPCA(sim.groups, colour_by = "Group")   # 2 groups separated in PC1

sim.groups <- runTSNE(sim.groups)
plotTSNE(sim.groups, colour_by = "Group")  # 2 groups separated in TSNE2
</pre>

== UMAP ==

* [https://en.wikipedia.org/wiki/Nonlinear_dimensionality_reduction#Uniform_manifold_approximation_and_projection Uniform manifold approximation and projection]
* https://cran.r-project.org/web/packages/umap/index.html
* [https://intobioinformatics.wordpress.com/2019/06/08/running-umap-for-data-visualisation-in-r/ Running UMAP for data visualisation in R]
* [https://juliasilge.com/blog/cocktail-recipes-umap/ PCA and UMAP with tidymodels]
* https://arxiv.org/abs/1802.03426
* https://www.biorxiv.org/content/early/2018/04/10/298430
* [https://poissonisfish.com/2020/11/14/umap-clustering-in-python/ UMAP clustering in Python]
* [https://juliasilge.com/blog/un-voting/ Dimensionality reduction of #TidyTuesday United Nations voting patterns], [https://juliasilge.com/blog/billboard-100/ Dimensionality reduction for #TidyTuesday Billboard Top 100 songs]. The [https://cran.r-project.org/web/packages/embed/index.html embed] package was used.
* [https://tonyelhabr.rbind.io/post/dimensionality-reduction-and-clustering/ Tired: PCA + kmeans, Wired: UMAP + GMM]
* [https://www.nature.com/articles/s41596-020-00409-w Tutorial: guidelines for the computational analysis of single-cell RNA sequencing data] Andrews 2020.
** One shortcoming of both t-SNE and UMAP is that they both require a user-defined hyperparameter, and the result can be sensitive to the value chosen. Moreover, the methods are stochastic, and providing a good initialization can significantly improve the results of both algorithms.
** '''Neither visualization algorithm preserves cell-cell distances, so the resulting embedding should not be used directly by downstream analysis methods such as clustering or pseudotime inference'''.
* [https://youtu.be/eN0wFzBA4Sc?t=53 UMAP Dimension Reduction, Main Ideas!!!], [https://youtu.be/jth4kEvJ3P8 UMAP: Mathematical Details (clearly explained!!!)]
* [https://towardsdatascience.com/how-exactly-umap-works-13e3040e1668 How Exactly UMAP Works] (open it in an incognito window)
* [https://statquest.gumroad.com/l/nixkdy t-SNE and UMAP Study Guide]
* [https://twitter.com/lpachter/status/1440696798218100753 UMAP monkey]

== GECO ==

[https://bmcbioinformatics.biomedcentral.com/articles/10.1186/s12859-020-03951-2 GECO: gene expression clustering optimization app for non-linear data visualization of patterns]

= Visualize the random effects =

http://www.quantumforest.com/2012/11/more-sense-of-random-effects/

= [https://en.wikipedia.org/wiki/Calibration_(statistics) Calibration] =

* Search by image: graphical explanation of calibration problem
* Does calibrating classification models improve prediction?
** Calibrating a classification model can improve the reliability and accuracy of the '''predicted probabilities''', but it may not necessarily improve the '''overall prediction performance of the model''' in terms of metrics such as accuracy, precision, or recall.
** Calibration is about ensuring that the predicted probabilities from a model match the observed proportions of outcomes in the data. This can be important when the predicted probabilities are used to make decisions or when they are presented to users as a measure of confidence or uncertainty.
** However, calibrating a model does not change its ability to discriminate between positive and negative outcomes. In other words, calibration does not affect how well the model separates the classes, but rather how accurately it estimates the probabilities of class membership.
** In some cases, calibrating a model may improve its overall prediction performance by making the predicted probabilities more accurate. However, this is not always the case, and the impact of calibration on prediction performance may vary depending on the specific needs and goals of the analysis.
* A real-world example of calibration in machine learning is in the field of fraud detection. In this case, it might be desirable to have the model '''predict probabilities''' of data belonging to each possible '''class''' instead of crude class labels. Gaining access to '''probabilities''' is useful for a richer interpretation of the responses, analyzing the model shortcomings, or presenting the uncertainty to the end-users. [https://wttech.blog/blog/2021/a-guide-to-model-calibration/ A guide to model calibration | Wunderman Thompson Technology].
* Another example where calibration is more important than prediction on new samples is in the field of medical diagnosis. In this case, it is important to have well-calibrated probabilities for the presence of a disease, so that doctors can make informed decisions about treatment. For example, if a diagnostic test predicts an 80% chance that a patient has a certain disease, doctors would expect that 80% of the time when such a prediction is made, the patient actually has the disease. This example does not mean that prediction on new samples is not feasible or not a concern, but rather that having well-calibrated probabilities is crucial for making accurate predictions and informed decisions.
* [https://bmcmedicine.biomedcentral.com/articles/10.1186/s12916-019-1466-7 Calibration: the Achilles heel of predictive analytics] Calster 2019
* https://www.itl.nist.gov/div898/handbook/pmd/section1/pmd133.htm Calibration and '''calibration curve'''.
** Y=voltage (''observed''), X=temperature (''true/ideal''). The calibration curve for a thermocouple is often constructed by comparing thermocouple ''(observed)output'' to relatively ''(true)precise'' thermometer data.
** When a new temperature is measured with the thermocouple, the voltage is converted to temperature terms by plugging the observed voltage into the regression equation and solving for temperature.
** It is important to note that the thermocouple measurements, made on the ''secondary measurement scale'', are treated as the response variable and the more precise thermometer results, on the ''primary scale'', are treated as the predictor variable because this best satisfies the '''underlying assumptions''' (Y=observed, X=true) of the analysis.
** '''Calibration interval'''
** In almost all calibration applications the ultimate quantity of interest is the true value of the primary-scale measurement method associated with a measurement made on the secondary scale.
** It seems the x-axis and y-axis have similar ranges in many applications.
* An Exercise in the Real World of Design and Analysis, Denby, Landwehr, and Mallows 2001. Inverse regression
* [https://stats.stackexchange.com/questions/43053/how-to-determine-calibration-accuracy-uncertainty-of-a-linear-regression How to determine calibration accuracy/uncertainty of a linear regression?]
* [https://chem.libretexts.org/Textbook_Maps/Analytical_Chemistry/Book%3A_Analytical_Chemistry_2.0_(Harvey)/05_Standardizing_Analytical_Methods/5.4%3A_Linear_Regression_and_Calibration_Curves Linear Regression and Calibration Curves]
* [https://www.webdepot.umontreal.ca/Usagers/sauves/MonDepotPublic/CHM%203103/LCGC%20Eur%20Burke%202001%20-%202%20de%204.pdf Regression and calibration] Shaun Burke
* [https://cran.r-project.org/web/packages/calibrate calibrate] package
* [https://cran.r-project.org/web/packages/investr/index.html investr]: An R Package for Inverse Estimation. [https://journal.r-project.org/archive/2014-1/greenwell-kabban.pdf Paper]

* [https://diagnprognres.biomedcentral.com/articles/10.1186/s41512-018-0029-2 The index of prediction accuracy: an intuitive measure useful for evaluating risk prediction models] by Kattan and Gerds 2018. The following code demonstrates Figure 2. <syntaxhighlight lang='rsplus'>
# Odds ratio =1 and calibrated model
set.seed(666)
x = rnorm(1000)
z1 = 1 + 0*x
pr1 = 1/(1+exp(-z1))
y1 = rbinom(1000,1,pr1)
mean(y1) # .724, marginal prevalence of the outcome
dat1 <- data.frame(x=x, y=y1)
newdat1 <- data.frame(x=rnorm(1000), y=rbinom(1000, 1, pr1))

# Odds ratio =1 and severely miscalibrated model
set.seed(666)
x = rnorm(1000)
z2 = -2 + 0*x
pr2 = 1/(1+exp(-z2))
y2 = rbinom(1000,1,pr2)
mean(y2) # .12
dat2 <- data.frame(x=x, y=y2)
newdat2 <- data.frame(x=rnorm(1000), y=rbinom(1000, 1, pr2))

library(riskRegression)
lrfit1 <- glm(y ~ x, data = dat1, family = 'binomial')
IPA(lrfit1, newdata = newdat1)
#     Variable     Brier           IPA     IPA.gain
# 1 Null model 0.1984710  0.000000e+00 -0.003160010
# 2 Full model 0.1990982 -3.160010e-03  0.000000000
# 3          x 0.1984800 -4.534668e-05 -0.003114664
1 - 0.1990982/0.1984710
# [1] -0.003160159

# Fit on dat2 (prevalence .12) but evaluate on newdat1 (prevalence .72),
# which is what makes the predictions severely miscalibrated
lrfit2 <- glm(y ~ x, data = dat2, family = 'binomial')
IPA(lrfit2, newdata = newdat1)
#     Variable     Brier       IPA     IPA.gain
# 1 Null model 0.1984710  0.000000 -1.859333763
# 2 Full model 0.5674948 -1.859334  0.000000000
# 3          x 0.5669200 -1.856437 -0.002896299
1 - 0.5674948/0.1984710
# [1] -1.859334
</syntaxhighlight> From the simulated data, we see IPA = -3.16e-3 for a calibrated model and IPA = -1.86 for a severely miscalibrated model.
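The points made above (calibration changes the probabilities but not the ranking, and IPA is one minus the ratio of the model Brier score to the null Brier score) can be checked with a base R sketch that needs no extra package. The simulated data and the helper functions brier(), ipa() and auc() are assumptions written for this illustration; they are not the riskRegression implementation.

<syntaxhighlight lang='rsplus'>
set.seed(1)
n  <- 2000
x  <- rnorm(n)
lp <- -0.5 + 1.2 * x                # true linear predictor (simulated)
y  <- rbinom(n, 1, plogis(lp))

p_cal <- plogis(lp)                 # well-calibrated probabilities
p_mis <- plogis(lp - 2)             # same ranking, intercept shifted by -2

brier <- function(p, y) mean((y - p)^2)
# IPA = 1 - Brier(model) / Brier(null), the null model predicting the prevalence
ipa <- function(p, y) 1 - brier(p, y) / brier(rep(mean(y), length(y)), y)
# rank-based (Mann-Whitney) estimate of the area under the ROC curve
auc <- function(p, y) {
  r <- rank(p); n1 <- sum(y == 1); n0 <- sum(y == 0)
  (sum(r[y == 1]) - n1 * (n1 + 1) / 2) / (n1 * n0)
}

rbind(calibrated    = c(AUC = auc(p_cal, y), Brier = brier(p_cal, y), IPA = ipa(p_cal, y)),
      miscalibrated = c(AUC = auc(p_mis, y), Brier = brier(p_mis, y), IPA = ipa(p_mis, y)))
</syntaxhighlight>

The AUC is identical in both rows because the shift is a monotone transformation, while the Brier score and IPA get worse for the shifted probabilities.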

= ROC curve =

See [[ROC|ROC]].

= [https://en.wikipedia.org/wiki/Net_reclassification_improvement NRI] (Net reclassification improvement) =

= Maximum likelihood =

[http://stats.stackexchange.com/questions/622/what-is-the-difference-between-a-partial-likelihood-profile-likelihood-and-marg Difference of partial likelihood, profile likelihood and marginal likelihood]

== EM Algorithm ==

* https://en.wikipedia.org/wiki/Expectation%E2%80%93maximization_algorithm
* [https://stephens999.github.io/fiveMinuteStats/intro_to_em.html Introduction to EM: Gaussian Mixture Models]

== Mixture model ==

[https://cran.r-project.org/web/packages/mixComp/ mixComp]: Estimation of the Order of Mixture Distributions

== MLE ==

[https://cimentadaj.github.io/blog/2020-11-26-maximum-likelihood-distilled/maximum-likelihood-distilled/ Maximum Likelihood Distilled]

== Efficiency of an estimator ==

[https://stats.stackexchange.com/a/350362 What does it mean by more “efficient” estimator]

== Inference ==

[https://www.tidyverse.org/blog/2021/08/infer-1-0-0/ infer] package

= Generalized Linear Model =

* Lectures from a course in [http://people.stat.sfu.ca/~raltman/stat851.html Simon Fraser University Statistics].
* [https://myweb.uiowa.edu/pbreheny/uk/teaching/760-s13/index.html Advanced Regression] from Patrick Breheny.
* [https://petolau.github.io/Analyzing-double-seasonal-time-series-with-GAM-in-R/ Doing magic and analyzing seasonal time series with GAM (Generalized Additive Model) in R]

== Link function ==

[http://www.win-vector.com/blog/2019/07/link-functions-versus-data-transforms/ Link Functions versus Data Transforms]

== Extract coefficients, z, p-values ==

Use '''coef(summary(glmObject))'''

<pre>
> coef(summary(glm.D93))
                 Estimate Std. Error       z value     Pr(>|z|)
(Intercept)  3.044522e+00  0.1708987  1.781478e+01 5.426767e-71
outcome2    -4.542553e-01  0.2021708 -2.246889e+00 2.464711e-02
outcome3    -2.929871e-01  0.1927423 -1.520097e+00 1.284865e-01
treatment2   1.337909e-15  0.2000000  6.689547e-15 1.000000e+00
treatment3   1.421085e-15  0.2000000  7.105427e-15 1.000000e+00
</pre>
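For a reproducible version, the sketch below assumes that glm.D93 is the Poisson example from the '''?glm''' help page (the estimates shown above match it) and pulls single columns out of the coefficient matrix by name.

<syntaxhighlight lang='rsplus'>
# Poisson regression example from the ?glm help page (assumed source of glm.D93)
counts    <- c(18, 17, 15, 20, 10, 20, 25, 13, 12)
outcome   <- gl(3, 1, 9)
treatment <- gl(3, 3)
glm.D93   <- glm(counts ~ outcome + treatment, family = poisson())

tab <- coef(summary(glm.D93))   # numeric matrix, one row per coefficient
tab[, "Estimate"]               # coefficients
tab[, "z value"]                # Wald z statistics
tab[, "Pr(>|z|)"]               # two-sided p-values
</syntaxhighlight>

Because the result is a plain matrix, rows can also be selected by coefficient name, e.g. tab["outcome2", "Pr(>|z|)"].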

== Quasi Likelihood ==

Quasi-likelihood plays the role of the log-likelihood when only the mean-variance relationship is specified. The quasi-score function (first derivative of the quasi-likelihood function) is the estimating equation.

* [http://www.stat.uchicago.edu/~pmcc/pubs/paper6.pdf Original paper] by Peter McCullagh.
* [http://people.stat.sfu.ca/~raltman/stat851/851L20.pdf Lecture 20] from SFU.
* [http://courses.washington.edu/b571/lectures/notes131-181.pdf U. Washington] and [http://faculty.washington.edu/heagerty/Courses/b571/handouts/OverdispQL.pdf another lecture] focuses on overdispersion.
* [http://www.maths.usyd.edu.au/u/jchan/GLM/QuasiLikelihood.pdf This lecture] contains a table of quasi likelihood from common distributions.
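A hedged base R sketch of the overdispersion case: the quasi-Poisson fit solves the same estimating equation as the Poisson fit, so the coefficients agree, and only the standard errors are rescaled by the estimated dispersion. The simulated negative binomial counts and the variable names are assumptions for illustration.

<syntaxhighlight lang='rsplus'>
set.seed(1)
n  <- 500
x  <- rnorm(n)
mu <- exp(0.5 + 0.3 * x)
y  <- rnbinom(n, mu = mu, size = 2)        # overdispersed counts (simulated)

fit_pois  <- glm(y ~ x, family = poisson)
fit_quasi <- glm(y ~ x, family = quasipoisson)

# dispersion estimate: Pearson chi-square divided by the residual df
phi_hat <- sum(residuals(fit_quasi, type = "pearson")^2) / df.residual(fit_quasi)

# ratio of standard errors, quasi-Poisson over Poisson
se_ratio <- coef(summary(fit_quasi))[, "Std. Error"] /
            coef(summary(fit_pois))[, "Std. Error"]

list(coef_pois = coef(fit_pois), coef_quasi = coef(fit_quasi),
     phi_hat = phi_hat, se_ratio = se_ratio, sqrt_phi = sqrt(phi_hat))
</syntaxhighlight>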

== IRLS ==

* [https://statisticaloddsandends.wordpress.com/2020/05/14/glmnet-v4-0-generalizing-the-family-parameter/ glmnet v4.0: generalizing the family parameter]
* [https://bwlewis.github.io/GLM/ Generalized linear models, abridged] (includes algorithm and code)
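A minimal sketch of iteratively reweighted least squares for logistic regression is given below, checked against glm(). The function irls_logistic() is a hypothetical helper written for this page under textbook assumptions (canonical logit link, no offsets or prior weights, no safeguards against separation); it is not the code from either link above.

<syntaxhighlight lang='rsplus'>
irls_logistic <- function(X, y, tol = 1e-10, max_iter = 50) {
  beta <- rep(0, ncol(X))
  for (i in seq_len(max_iter)) {
    eta <- drop(X %*% beta)             # linear predictor
    mu  <- plogis(eta)                  # fitted probabilities
    w   <- mu * (1 - mu)                # working weights
    z   <- eta + (y - mu) / w           # working response
    # weighted least squares step: solve (X' W X) beta = X' W z
    beta_new <- drop(solve(crossprod(X, w * X), crossprod(X, w * z)))
    converged <- max(abs(beta_new - beta)) < tol
    beta <- beta_new
    if (converged) break
  }
  unname(beta)
}

set.seed(1)
n <- 300
x <- rnorm(n)
y <- rbinom(n, 1, plogis(-0.3 + 0.8 * x))
X <- cbind(1, x)                        # design matrix with intercept

beta_irls <- irls_logistic(X, y)
beta_glm  <- unname(coef(glm(y ~ x, family = binomial)))
rbind(irls = beta_irls, glm = beta_glm)
</syntaxhighlight>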

== Plot ==

https://strengejacke.wordpress.com/2015/02/05/sjplot-package-and-related-online-manuals-updated-rstats-ggplot/

== [https://en.wikipedia.org/wiki/Deviance_(statistics) Deviance], stats::deviance() and glmnet::deviance.glmnet() from R ==

* '''It is a generalization of the idea of using the sum of squares of residuals (RSS) in ordinary least squares''' to cases where model-fitting is achieved by maximum likelihood. See [https://stats.stackexchange.com/questions/6581/what-is-deviance-specifically-in-cart-rpart What is Deviance? (specifically in CART/rpart)] to manually compute deviance and compare it with the returned value of the '''deviance()''' function from a linear regression. Summary: deviance() = RSS in linear models.
* [https://www.datascienceblog.net/post/machine-learning/interpreting_generalized_linear_models/ Interpreting Generalized Linear Models]
* [https://statisticaloddsandends.wordpress.com/2019/03/27/what-is-deviance/ What is deviance?] You can think of the deviance of a model as twice the negative log likelihood plus a constant.
* https://www.rdocumentation.org/packages/stats/versions/3.4.3/topics/deviance
* Likelihood ratio tests and the deviance http://data.princeton.edu/wws509/notes/a2.pdf#page=6
* Deviance(y,muhat) = 2*(loglik_saturated - loglik_proposed)
* [http://r.qcbs.ca/workshop06/book-en/binomial-glm.html Binomial GLM] and the [https://www.rdocumentation.org/packages/base/versions/3.6.2/topics/ls objects()] function that seems to be the same as str(, max=1).
* [https://stats.stackexchange.com/questions/108995/interpreting-residual-and-null-deviance-in-glm-r Interpreting Residual and Null Deviance in GLM R]
** Null Deviance = 2(LL(Saturated Model) - LL(Null Model)) on df = df_Sat - df_Null. The '''null deviance''' shows how well the response variable is predicted by a model that includes only the intercept (grand mean).


</syntaxhighlight>

== Saturated model ==

* The saturated model always has n parameters where n is the sample size.
* [https://stats.stackexchange.com/questions/114073/logistic-regression-how-to-obtain-a-saturated-model Logistic Regression : How to obtain a saturated model]
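The relation Deviance = 2*(loglik_saturated - loglik_proposed) and the summary "deviance() = RSS in linear models" can both be verified in base R. The simulated data and variable names below are assumptions for illustration; the saturated Poisson model is taken to have one parameter per observation, so its fitted mean equals the observed count.

<syntaxhighlight lang='rsplus'>
set.seed(1)
n <- 100
x <- rnorm(n)

# linear model: deviance() equals the residual sum of squares
y_lin  <- 1 + 2 * x + rnorm(n)
fit_lm <- lm(y_lin ~ x)
rss    <- sum(residuals(fit_lm)^2)
c(deviance = deviance(fit_lm), RSS = rss)

# Poisson GLM: deviance equals twice the log-likelihood gap to the saturated model
y_cnt   <- rpois(n, exp(0.5 + 0.4 * x))
fit_glm <- glm(y_cnt ~ x, family = poisson)
ll_sat  <- sum(dpois(y_cnt, lambda = y_cnt, log = TRUE))            # mu_i = y_i
ll_fit  <- sum(dpois(y_cnt, lambda = fitted(fit_glm), log = TRUE))
dev_by_hand <- 2 * (ll_sat - ll_fit)
c(deviance = deviance(fit_glm), by_hand = dev_by_hand)
</syntaxhighlight>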

== Testing ==

* [https://rss.onlinelibrary.wiley.com/doi/full/10.1111/rssb.12369?campaign=wolearlyview Robust testing in generalized linear models by sign flipping score contributions]
* [https://rss.onlinelibrary.wiley.com/doi/full/10.1111/rssb.12371?campaign=wolearlyview Goodness‐of‐fit testing in high dimensional generalized linear models]

== Generalized Additive Models ==

* [https://www.seascapemodels.org/rstats/2021/03/27/common-GAM-problems.html How to solve common problems with GAMs]
* [https://www.mzes.uni-mannheim.de/socialsciencedatalab/article/gam/ Generalized Additive Models: Allowing for some wiggle room in your models]
* [https://www.rdatagen.net/post/2022-08-09-simulating-data-from-a-non-linear-function-by-specifying-some-points-on-the-curve/ Simulating data from a non-linear function by specifying a handful of points]
* [https://www.rdatagen.net/post/2022-11-01-modeling-secular-trend-in-crt-using-gam/ Modeling the secular trend in a cluster randomized trial using very flexible models]

= Simulate data =

* [https://rviews.rstudio.com/2020/09/09/fake-data-with-r/ Fake Data with R]
* Understanding statistics through programming: [https://twitter.com/domliebl/status/1469347307267182601?s=20 You don’t really understand a stochastic process until you know how to simulate it] - D.G. Kendall.

== Density plot ==

{{Pre}}
# plot a Weibull distribution with shape and scale
func <- function(x) dweibull(x, shape = 1, scale = 3.38)

func <- function(x) dweibull(x, shape = 1.1, scale = 3.38)
curve(func, .1, 10)
</pre>

The shape parameter controls both the form of the density function and the behavior of the failure rate. | The shape parameter controls both the form of the density function and the behavior of the failure rate. | ||

| Line 642: | Line 880: | ||

* Shape >1: failure rate increases with time | * Shape >1: failure rate increases with time | ||
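The effect of the shape parameter on the failure rate can be checked numerically. The following is a minimal sketch, assuming the failure rate is the hazard h(t) = f(t) / S(t); the helper name <code>weibull_hazard</code> and the shape values 0.7, 1 and 1.5 are illustrative choices, not taken from the text above.

```r
# Hazard (failure rate) of a Weibull distribution: h(t) = f(t) / S(t)
weibull_hazard <- function(t, shape, scale) {
  dweibull(t, shape = shape, scale = scale) /
    pweibull(t, shape = shape, scale = scale, lower.tail = FALSE)
}

t <- seq(0.5, 10, by = 0.5)
shapes <- c(0.7, 1, 1.5)          # illustrative values (assumption)
haz <- sapply(shapes, function(k) weibull_hazard(t, shape = k, scale = 3.38))
colnames(haz) <- paste0("shape=", shapes)

# One curve per shape value: decreasing, constant, increasing
matplot(t, haz, type = "l", lty = 1, xlab = "time", ylab = "failure rate")
legend("topright", legend = colnames(haz), col = 1:3, lty = 1)
```

With shape = 1 the Weibull reduces to the exponential distribution, so the hazard is constant at 1/scale.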

== Simulate data from a specified density == | |||

* http://stackoverflow.com/questions/16134786/simulate-data-from-non-standard-density-function | * http://stackoverflow.com/questions/16134786/simulate-data-from-non-standard-density-function | ||
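One generic way to draw from a non-standard density is rejection sampling. The sketch below is not taken from the linked answer; it assumes a hypothetical target density f(x) = 6x(1 - x) on [0, 1] (a Beta(2, 2) density), a Uniform(0, 1) proposal, and the bound M = 1.5.

```r
set.seed(1)

# Hypothetical target density on [0, 1]: f(x) = 6 x (1 - x), i.e. Beta(2, 2)
f <- function(x) 6 * x * (1 - x)
M <- 1.5   # upper bound of f on [0, 1], attained at x = 0.5

rejection_sample <- function(n) {
  out <- numeric(0)
  while (length(out) < n) {
    x <- runif(n)                     # proposals from Uniform(0, 1)
    u <- runif(n)
    out <- c(out, x[u * M <= f(x)])   # accept with probability f(x) / M
  }
  out[seq_len(n)]
}

x <- rejection_sample(10000)
hist(x, breaks = 50, freq = FALSE)
curve(f, 0, 1, add = TRUE, col = "red")   # overlay the target density
```

When the CDF can be inverted in closed form, inverse transform sampling (applying the quantile function to <code>runif()</code> draws) is usually simpler and wastes no proposals.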

=== Signal to noise ratio | === Permuted block randomization === | ||

[https://www.rdatagen.net/post/permuted-block-randomization-using-simstudy/ Permuted block randomization using simstudy] | |||

== Correlated data == | |||

<ul> | |||

<li> [https://predictivehacks.com/how-to-generate-correlated-data-in-r/ How To Generate Correlated Data In R] | |||

<li> [https://www.r-bloggers.com/2023/02/flexible-correlation-generation-an-update-to-gencormat-in-simstudy/ Flexible correlation generation: an update to genCorMat in simstudy] | |||

<li> [https://en.wikipedia.org/wiki/Cholesky_decomposition#Monte_Carlo_simulation Cholesky decomposition] | |||

<pre> | |||

set.seed(1) | |||

n <- 1000 | |||

R <- matrix(c(1, 0.75, 0.75, 1), nrow=2) | |||

M <- matrix(rnorm(2 * n), ncol=2) | |||

M <- M %*% chol(R) # chol(R) is an upper triangular matrix | |||

x <- M[, 1] # First correlated vector | |||

y <- M[, 2] | |||

cor(x, y) | |||

# 0.7502607 | |||

</pre> | |||

</ul> | |||

== Clustered data with marginal correlations == | |||

[https://www.rdatagen.net/post/2022-11-22-generating-cluster-data-with-marginal-correlations/ Generating clustered data with marginal correlations] | |||

== Signal to noise ratio/SNR == | |||

* https://en.wikipedia.org/wiki/Signal-to-noise_ratio | * https://en.wikipedia.org/wiki/Signal-to-noise_ratio | ||

* https://stats.stackexchange.com/questions/31158/how-to-simulate-signal-noise-ratio | * https://stats.stackexchange.com/questions/31158/how-to-simulate-signal-noise-ratio | ||

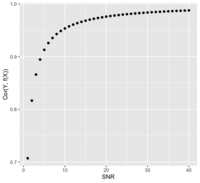

: <math>\frac{\sigma^2_{signal}}{\sigma^2_{noise}} = \frac{Var(f(X))}{Var(e)} </math> if Y = f(X) + e | : <math>SNR = \frac{\sigma^2_{signal}}{\sigma^2_{noise}} = \frac{Var(f(X))}{Var(e)} </math> if Y = f(X) + e | ||

* The SNR is related to the correlation of Y and f(X). Assume X and e are independent (<math>X \perp e </math>): | |||

: <math> | |||

\begin{align} | |||

Cor(Y, f(X)) &= Cor(f(X)+e, f(X)) \\ | |||

&= \frac{Cov(f(X)+e, f(X))}{\sqrt{Var(f(X)+e) Var(f(X))}} \\ | |||

&= \frac{Var(f(X))}{\sqrt{Var(f(X)+e) Var(f(X))}} \\ | |||

&= \frac{\sqrt{Var(f(X))}}{\sqrt{Var(f(X)) + Var(e)}} = \frac{\sqrt{SNR}}{\sqrt{SNR + 1}} \\ | |||

&= \frac{1}{\sqrt{1 + Var(e)/Var(f(X))}} = \frac{1}{\sqrt{1 + SNR^{-1}}} | |||

\end{align} | |||

</math> [[File:SnrVScor.png|200px]] | |||

: Or <math>SNR = \frac{Cor^2}{1-Cor^2} </math> | |||
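The relation between SNR and Cor(Y, f(X)) can be verified by simulation. This is a minimal sketch under assumed settings: a linear signal f(X) = 3X, normal noise independent of X, and a target SNR of 2; none of these values come from the text.

```r
set.seed(1)
n   <- 1e5
snr <- 2                        # target SNR (arbitrary illustrative value)

x  <- rnorm(n)
fx <- 3 * x                     # hypothetical signal f(X) = 3 X
e  <- rnorm(n, sd = sqrt(var(fx) / snr))   # noise scaled so Var(f(X)) / Var(e) is about snr
y  <- fx + e

r <- cor(y, fx)
c(empirical = r, theoretical = sqrt(snr / (snr + 1)))
r^2 / (1 - r^2)                 # recovers a value close to snr
```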

* Page 401 of ESLII (https://web.stanford.edu/~hastie/ElemStatLearn//) 12th print. | * Page 401 of ESLII (https://web.stanford.edu/~hastie/ElemStatLearn//) 12th print. | ||

| Line 655: | Line 928: | ||

* Yuan and Lin 2006: 1.8, 3 | * Yuan and Lin 2006: 1.8, 3 | ||

* [https://academic.oup.com/biostatistics/article/19/3/263/4093306#123138354 A framework for estimating and testing qualitative interactions with applications to predictive biomarkers] Roth, Biostatistics, 2018 | * [https://academic.oup.com/biostatistics/article/19/3/263/4093306#123138354 A framework for estimating and testing qualitative interactions with applications to predictive biomarkers] Roth, Biostatistics, 2018 | ||

* [https://stackoverflow.com/a/47232502 Matlab: computing signal to noise ratio (SNR) of two highly correlated time domain signals] | |||

== Effect size, Cohen's d and volcano plot == | |||

* https://en.wikipedia.org/wiki/Effect_size | * https://en.wikipedia.org/wiki/Effect_size (See also the estimation by the [[#Two_sample_test_assuming_equal_variance|pooled sd]]) | ||

: <math>\theta = \frac{\mu_1 - \mu_2} \sigma,</math> | : <math>\theta = \frac{\mu_1 - \mu_2} \sigma,</math> | ||

* [https://learningstatisticswithr.com/book/hypothesistesting.html#effectsize Effect size, sample size and power] from ebook '''[https://learningstatisticswithr.com/book/ Learning statistics with R]''': A tutorial for psychology students and other beginners. | |||

* [https://en.wikipedia.org/wiki/Effect_size#t-test_for_mean_difference_between_two_independent_groups t-statistic and Cohen's d] for the case of mean difference between two independent groups | |||

* [http://www.win-vector.com/blog/2019/06/cohens-d-for-experimental-planning/ Cohen’s D for Experimental Planning] | |||

* [https://en.wikipedia.org/wiki/Volcano_plot_(statistics) Volcano plot] | |||

** Y-axis: -log(p) | |||

** X-axis: log2 fold change OR effect size (Cohen's D). [https://twitter.com/biobenkj/status/1072141825568329728 An example] from RNA-Seq data. | |||
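As an illustration of the link between Cohen's d and the two-sample t-statistic, the sketch below estimates d with the pooled standard deviation on simulated data. The function name <code>cohens_d</code>, the group sizes, and the true effect size of 0.5 are assumptions made for the example.

```r
set.seed(1)
g1 <- rnorm(50, mean = 0.5, sd = 1)   # simulated groups; true effect size is 0.5
g2 <- rnorm(50, mean = 0,   sd = 1)

cohens_d <- function(x, y) {
  nx <- length(x); ny <- length(y)
  # pooled standard deviation, assuming equal variances in both groups
  sp <- sqrt(((nx - 1) * var(x) + (ny - 1) * var(y)) / (nx + ny - 2))
  (mean(x) - mean(y)) / sp
}

d  <- cohens_d(g1, g2)
tt <- t.test(g1, g2, var.equal = TRUE)

# For two independent groups: t = d / sqrt(1/n1 + 1/n2)
c(d = d, t_from_d = d / sqrt(1/50 + 1/50), t = unname(tt$statistic))
```

Because t grows with the sample size while d does not, a volcano plot with d on the x-axis separates the magnitude of an effect from the strength of evidence for it.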

== Multiple comparisons | == Treatment/control == | ||

* [https://github.com/cran/biospear/blob/master/R/simdata.R simdata()] from [https://cran.r-project.org/web/packages/biospear/index.html biospear] package | |||

* [https://github.com/cran/ROCSI/blob/master/R/ROCSI.R#L598 data.gen()] from [https://cran.r-project.org/web//packages/ROCSI/index.html ROCSI] package. The response contains continuous, binary and survival outcomes. The inputs include the prevalence of predictive biomarkers, the effect size (beta) for the prognostic biomarker, etc. | |||

== Cauchy distribution has no expectation == | |||

https://en.wikipedia.org/wiki/Cauchy_distribution | |||

<pre> | |||

replicate(10, mean(rcauchy(10000))) | |||

</pre> | |||

== Dirichlet distribution == | |||

* [https://en.wikipedia.org/wiki/Dirichlet_distribution Dirichlet distribution] | |||

** It is a multivariate generalization of the '''beta''' distribution | |||

** The Dirichlet distribution is the conjugate prior of the categorical distribution and '''multinomial distribution'''. | |||

* [https://cran.r-project.org/web/packages/dirmult/ dirmult]::rdirichlet() | |||
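Dirichlet draws can also be generated in base R by normalizing independent gamma variables. The sketch below uses a hypothetical helper, <code>rdirichlet_sketch</code>, and illustrative concentration parameters; it is not the implementation used by the dirmult package.

```r
set.seed(1)

# Draw n samples from Dirichlet(alpha) by normalizing independent Gamma draws
rdirichlet_sketch <- function(n, alpha) {
  k <- length(alpha)
  # column j holds n draws from Gamma(shape = alpha[j], rate = 1)
  g <- matrix(rgamma(n * k, shape = rep(alpha, each = n)), nrow = n, ncol = k)
  g / rowSums(g)
}

alpha <- c(2, 3, 5)                 # illustrative concentration parameters
x <- rdirichlet_sketch(10000, alpha)

range(rowSums(x))                   # every row sums to 1
colMeans(x)                         # close to alpha / sum(alpha)
```

With two components this reduces to a Beta(alpha[1], alpha[2]) draw in the first column, which matches the description of the Dirichlet as a multivariate generalization of the beta distribution.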

== Relationships among probability distributions == | |||

https://en.wikipedia.org/wiki/Relationships_among_probability_distributions | |||

== What is the probability that two persons have the same initials == | |||

[https://www.r-bloggers.com/2023/12/what-is-the-probability-that-two-persons-have-the-same-initials/ The post]. The probability that at least two persons have the same initials depends on the size of the group. For a team of 8 people, simulations suggest that the probability is close to 4.1%. This probability increases with the size of the group. If there are 1000 people in the room, [https://www.numerade.com/ask/question/whats-the-probability-that-someone-else-in-a-room-full-of-people-has-the-exact-same-3-initials-in-their-name-thats-in-another-persons-name-a-038-b-333-c-0057-d-0064/ the probability is almost 100%]. [https://math.stackexchange.com/a/606272 How many people do you need to guarantee that two of them have the same initals?] | |||
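The figure for a team of 8 can be approximated with the birthday-problem formula. The sketch below assumes two-letter initials with all 26 x 26 = 676 combinations equally likely, which may differ from the setup in the linked post; real initials are not uniform, so the true probability would be somewhat higher.

```r
set.seed(1)
n_initials <- 26^2    # assumption: two-letter initials, all 676 equally likely
group_size <- 8

# Exact probability that at least two people share initials (birthday-problem formula)
p_exact <- 1 - prod((n_initials - 0:(group_size - 1)) / n_initials)

# Monte Carlo check under the same uniform assumption
p_sim <- mean(replicate(1e5, {
  anyDuplicated(sample.int(n_initials, group_size, replace = TRUE)) > 0
}))

c(exact = p_exact, simulated = p_sim)
```

Under this assumption, any group of more than 676 people is guaranteed to contain a shared pair of initials by the pigeonhole principle.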

= Multiple comparisons = | |||

* If you perform experiments over and over, you're bound to find something. So the significance level must be adjusted down when performing multiple hypothesis tests. | * If you perform experiments over and over, you're bound to find something. So the significance level must be adjusted down when performing multiple hypothesis tests. | ||

* http://www.gs.washington.edu/academics/courses/akey/56008/lecture/lecture10.pdf | * http://www.gs.washington.edu/academics/courses/akey/56008/lecture/lecture10.pdf | ||

| Line 669: | Line 971: | ||

* [http://varianceexplained.org/statistics/interpreting-pvalue-histogram/ Plot a histogram of p-values], a post from varianceexplained.org. The anti-conservative histogram (tail on the RHS) is what we have typically seen in e.g. microarray gene expression data. | * [http://varianceexplained.org/statistics/interpreting-pvalue-histogram/ Plot a histogram of p-values], a post from varianceexplained.org. The anti-conservative histogram (tail on the RHS) is what we have typically seen in e.g. microarray gene expression data. | ||

* [http://statistic-on-air.blogspot.com/2015/01/adjustment-for-multiple-comparison.html Comparison of different ways of multiple-comparison] in R. | * [http://statistic-on-air.blogspot.com/2015/01/adjustment-for-multiple-comparison.html Comparison of different ways of multiple-comparison] in R. | ||

* [https://peerj.com/articles/10387/ Comparing multiple comparisons: practical guidance for choosing the best multiple comparisons test] Midway 2020 | |||

As an example, suppose 550 out of 10,000 genes are significant at the .05 level | As an example, suppose 550 out of 10,000 genes are significant at the .05 level | ||

| Line 677: | Line 980: | ||

According to [https://www.cancer.org/cancer/cancer-basics/lifetime-probability-of-developing-or-dying-from-cancer.html Lifetime Risk of Developing or Dying From Cancer], a man has a 39.7% risk of developing cancer during his lifetime (in other words, 1 out of every 2.52 men in the US will develop some kind of cancer) and a woman has a 37.6% risk. So, assuming independence, the probability of at least one cancer patient in a 3-generation family (three men and three women) is 1 - .6**3 * .63**3 = 0.95. | According to [https://www.cancer.org/cancer/cancer-basics/lifetime-probability-of-developing-or-dying-from-cancer.html Lifetime Risk of Developing or Dying From Cancer], a man has a 39.7% risk of developing cancer during his lifetime (in other words, 1 out of every 2.52 men in the US will develop some kind of cancer) and a woman has a 37.6% risk. So, assuming independence, the probability of at least one cancer patient in a 3-generation family (three men and three women) is 1 - .6**3 * .63**3 = 0.95. | ||

=== False Discovery Rate | == Flexible method == | ||

[https://rdrr.io/bioc/GSEABenchmarkeR/man/runDE.html ?GSEABenchmarkeR::runDE]. Unadjusted (too few DE genes), FDR, and Bonferroni (too many DE genes) are applied depending on the proportion of DE genes. | |||

== Family-Wise Error Rate (FWER) == | |||

* https://en.wikipedia.org/wiki/Family-wise_error_rate | |||

* [https://www.statology.org/family-wise-error-rate/ How to Estimate the Family-wise Error Rate] | |||

* [https://rviews.rstudio.com/2019/10/02/multiple-hypothesis-testing/ Multiple Hypothesis Testing in R] | |||
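For m independent tests, each at level alpha with all null hypotheses true, the family-wise error rate is 1 - (1 - alpha)^m. The sketch below tabulates this for a few illustrative values of m and checks one case by simulation; the independence assumption is what makes the closed form exact.

```r
alpha <- 0.05
m <- c(1, 5, 10, 20, 100)                 # number of independent tests (illustrative)

fwer <- 1 - (1 - alpha)^m                 # P(at least one false positive)
round(data.frame(m, fwer), 3)

# Simulation check for m = 20: p-values are Uniform(0, 1) under the null
set.seed(1)
fwer_sim <- mean(replicate(1e4, any(runif(20) < alpha)))
fwer_sim
```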

== Bonferroni == | |||

* https://en.wikipedia.org/wiki/Bonferroni_correction | |||

* This correction is among the most conservative. Because its threshold is so strict, it can increase the false negative rate: true effects are discarded along with the false positives it filters out. | |||
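The conservativeness of Bonferroni can be seen with <code>p.adjust()</code> from base R. The p-values below are simulated under an assumed mixture of 900 true nulls and 100 true effects, purely for illustration; the Benjamini-Hochberg adjustment is included only as a point of comparison.

```r
set.seed(1)
m <- 1000
# Simulated p-values: 900 true nulls (uniform) and 100 true effects (skewed toward 0)
p <- c(runif(900), rbeta(100, 0.1, 5))

p_bonf <- p.adjust(p, method = "bonferroni")   # equals pmin(1, m * p)
p_bh   <- p.adjust(p, method = "BH")           # Benjamini-Hochberg FDR, for comparison

c(unadjusted = sum(p < 0.05),
  bonferroni = sum(p_bonf < 0.05),
  BH         = sum(p_bh < 0.05))
```

Bonferroni-adjusted p-values are never smaller than the BH-adjusted ones, so Bonferroni can never reject more hypotheses than BH at the same cutoff.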

== False Discovery Rate/FDR == | |||

* https://en.wikipedia.org/wiki/False_discovery_rate | * https://en.wikipedia.org/wiki/False_discovery_rate | ||

* Paper [http://www.stat.purdue.edu/~doerge/BIOINFORM.D/FALL06/Benjamini%20and%20Y%20FDR.pdf Definition] by Benjamini and Hochberg in JRSS B 1995. | * Paper [http://www.stat.purdue.edu/~doerge/BIOINFORM.D/FALL06/Benjamini%20and%20Y%20FDR.pdf Definition] by Benjamini and Hochberg in JRSS B 1995. | ||

* [https://youtu.be/K8LQSvtjcEo False Discovery Rates, FDR, clearly explained] by StatQuest | |||

* A [http://xkcd.com/882/ comic] | * A [http://xkcd.com/882/ comic] | ||

* [http://www.nonlinear.com/support/progenesis/comet/faq/v2.0/pq-values.aspx A p-value of 0.05 implies that 5% of all tests will result in false positives. An FDR adjusted p-value (or q-value) of 0.05 implies that 5% of significant tests will result in false positives. The latter will result in fewer false positives]. | |||

* [https://stats.stackexchange.com/a/456087 How to interpret False Discovery Rate?] | |||

* P-value vs false discovery rate vs family wise error rate. See [http://jtleek.com/talks 10 statistics tip] or [http://www.biostat.jhsph.edu/~jleek/teaching/2011/genomics/mt140688.pdf#page=14 Statistics for Genomics (140.688)] from Jeff Leek. Suppose 550 out of 10,000 genes are significant at .05 level | |||